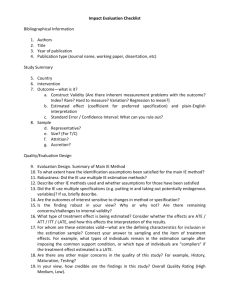

Background Estimation and Matching for Stripe 82 Coadds

Yusra AlSayyad

University of Washington

DM Review

September 6, 2012

Outline

1) Overview of processing steps – Image to image matching

2) Discussion of Design Choices

- Estimation
  - Median vs. Mean
  - NN2 vs. Not
  - Common Mask vs. per-Pair Mask
- Interpolation
  - Detection.getBackground() {bin sizes 128, 256, 378, 512}
  - vs. Chebyshev polynomial orders {2, 3, 4, 5}

3) Continuation of processing steps – strip to strip matching

4) Plan for next quarter

Very high-level processing steps

1) Define an area of sky of any size to coadd.
2) Select a reference run per strip.
3) For each field in the reference run, generate a background-matched coadd.
4) Stitch fields together to make coadded strips.
5) Match and stitch strips.
6) Fit a background to the large coadd; subtract.
7) Iterate.

NOTE: this process works for Stripe 82 only. Extending to LSST is a non-trivial problem.

Single field Coadd Processing Steps

1) Find images that overlap the reference run
2) PSF matching and warping
3) Photometric scaling
4) Background matching
   – Estimation
   – Fitting spatial variation
5) Coaddition

Photometric Scaling

Figure: the reference run.
Figure: input images and the warped image for Field 110 and Field 111.
Figure: Field 110 and Field 111. Overlap regions need to be identical.
Plots: scaling as a function of x in pixels (warped image frame).

Background Matching

Background Estimation: Difference Image

How much to mask?

– Sum of masks from the 2 images only. Call this the “pairwise mask”.
– Sum of all ~30 masks. Call this the “common mask”.
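
The two masking choices can be sketched with NumPy boolean arrays. This is only an illustration: it assumes that "sum of masks" means the union (logical OR) of per-image bad-pixel masks, and the function names `pairwise_mask` and `common_mask` and the random test masks are hypothetical, not part of the pipeline.

```python
import numpy as np

def pairwise_mask(mask_a, mask_b):
    """Pixels masked in either image of a single pair (assumed: logical OR)."""
    return np.logical_or(mask_a, mask_b)

def common_mask(masks):
    """Pixels masked in any of the input images (assumed: logical OR of all)."""
    return np.logical_or.reduce(list(masks))

# Hypothetical inputs: 30 masks, each flagging about 2% of the pixels.
rng = np.random.default_rng(0)
masks = [rng.random((64, 64)) < 0.02 for _ in range(30)]

pair = pairwise_mask(masks[0], masks[1])
common = common_mask(masks)
print(f"pairwise masked fraction: {pair.mean():.3f}")
print(f"common masked fraction:   {common.mean():.3f}")
```

The common mask always contains the pairwise mask, so it removes more contaminated pixels at the cost of leaving fewer pixels per bin for the estimate.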

Background Estimation: Bin difference image
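
A minimal sketch of this step, assuming the difference image is reference minus input and that each superpixel is summarized by a statistic over its unmasked pixels. The function name `bin_difference_image`, the array sizes, and the bin size of 32 are illustrative assumptions, not the pipeline's code.

```python
import numpy as np

def bin_difference_image(reference, image, mask, binsize, stat=np.median):
    """Bin (reference - image) into binsize x binsize superpixels.

    Masked pixels are ignored; a bin with no unmasked pixels is left as NaN.
    """
    diff = np.where(mask, np.nan, reference - image)
    ny, nx = diff.shape
    n_by = -(-ny // binsize)  # ceiling division
    n_bx = -(-nx // binsize)
    binned = np.full((n_by, n_bx), np.nan)
    for j in range(n_by):
        for i in range(n_bx):
            cell = diff[j * binsize:(j + 1) * binsize,
                        i * binsize:(i + 1) * binsize]
            good = cell[np.isfinite(cell)]
            if good.size:
                binned[j, i] = stat(good)
    return binned

# Hypothetical inputs: flat images that differ by a constant offset of 3.
reference = np.full((100, 130), 10.0)
image = np.full((100, 130), 7.0)
mask = np.zeros((100, 130), dtype=bool)
mask[20:40, 50:70] = True  # a masked object

binned = bin_difference_image(reference, image, mask, binsize=32)
print(binned.shape)
```

Passing `stat=np.mean` instead of the default median switches between the two simplest per-bin estimators.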

Background Estimation: counts histogram

For example: some are nice and Gaussian; some are not.

Figure: histograms of counts, with the mean, the median, and the difference of medians marked.
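
To illustrate why the choice of statistic matters for a non-Gaussian histogram, the sketch below uses simulated pixel values. Every number is an assumption chosen for illustration (a true offset of 10 counts, 5% of reference pixels contaminated by unmasked source flux), and reading "diff of medians" as the median of the reference minus the median of the input is also an assumption.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000

# Simulated pixels of one superpixel; the true background offset is 10.
ref = rng.normal(100.0, 5.0, n)
img = rng.normal(90.0, 5.0, n)

# Contaminate 5% of the reference pixels with unmasked source flux,
# which gives the difference histogram a long positive tail.
bright = rng.random(n) < 0.05
ref = ref + np.where(bright, rng.exponential(200.0, n), 0.0)

diff = ref - img
mean_of_diff = diff.mean()
median_of_diff = np.median(diff)
diff_of_medians = np.median(ref) - np.median(img)

print(f"mean of difference:    {mean_of_diff:.2f}")
print(f"median of difference:  {median_of_diff:.2f}")
print(f"difference of medians: {diff_of_medians:.2f}")
```

In this toy case the mean is pulled well away from the true offset by the tail, while both median-based estimates stay close to it.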

A few Background Estimation Options

For a superpixel (bin) in a difference image:

– Mean

– Median

– For N input images, find the least-squares solution of all N(N-1)/2 difference images (“NN2”, Barris et al. 2009).
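
The third option can be sketched as an ordinary least-squares problem. This is a simplified, unweighted illustration rather than the full NN2 method: it assumes each pairwise measurement for one superpixel is d_ij = b_i - b_j plus noise, and it removes the additive degeneracy by fixing image 0 as the reference (b_0 = 0). The function name `solve_offsets` and the test offsets are hypothetical.

```python
import itertools
import numpy as np

def solve_offsets(n_images, pair_diffs):
    """Least-squares background offsets from all N(N-1)/2 pairwise differences.

    pair_diffs maps (i, j) with i < j to the measured value of b_i - b_j.
    Image 0 is taken as the reference, so its offset is fixed to zero.
    """
    pairs = list(itertools.combinations(range(n_images), 2))
    design = np.zeros((len(pairs), n_images))
    d = np.empty(len(pairs))
    for row, (i, j) in enumerate(pairs):
        design[row, i] = 1.0
        design[row, j] = -1.0
        d[row] = pair_diffs[(i, j)]
    # Drop column 0 to impose b_0 = 0, then solve for the remaining offsets.
    solution, *_ = np.linalg.lstsq(design[:, 1:], d, rcond=None)
    return np.concatenate([[0.0], solution])

# Hypothetical example: 4 images with known offsets and noisy measurements.
rng = np.random.default_rng(1)
true_offsets = np.array([0.0, 3.0, -2.0, 5.0])
pair_diffs = {
    (i, j): true_offsets[i] - true_offsets[j] + rng.normal(0.0, 0.1)
    for i, j in itertools.combinations(range(4), 2)
}
estimated = solve_offsets(4, pair_diffs)
print(np.round(estimated, 2))
```

Because every image appears in N-1 differences, the noise on each recovered offset is averaged down relative to using the single difference against the reference.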

We will examine these 3 estimation choices later in the presentation:

Estimation
- Common Mask vs. per-Pair Mask
- Median vs. Mean
- NN2 vs. Not

Background Matching: Fitting Spatial Variation

– meas.algorithms.detection.getBackground(differenceImage)
– afw.math.Chebyshev1Function2D()

Figure axes: RA, Dec.
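
The polynomial option can be illustrated with NumPy's Chebyshev utilities. This is a stand-in, not the afw API: it fits a full tensor-product basis with `numpy.polynomial.chebyshev.chebvander2d`, which may not match the term truncation used by afw.math.Chebyshev1Function2D, and the function names, image size, and bin centers are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def fit_chebyshev_2d(x, y, z, order, shape):
    """Least-squares fit of a 2-D Chebyshev surface to binned values z(x, y).

    x, y are pixel coordinates of the bin centers; shape is (ny, nx) of the
    full image and is used to map pixels onto the interval [-1, 1].
    """
    ny, nx = shape
    u = 2.0 * np.ravel(x) / (nx - 1) - 1.0
    v = 2.0 * np.ravel(y) / (ny - 1) - 1.0
    z = np.ravel(z)
    good = np.isfinite(z)  # skip bins with no unmasked pixels
    vander = cheb.chebvander2d(u[good], v[good], [order, order])
    coeffs, *_ = np.linalg.lstsq(vander, z[good], rcond=None)
    return coeffs.reshape(order + 1, order + 1)

def evaluate_chebyshev_2d(coeffs, shape):
    """Evaluate the fitted surface at every pixel of an image of this shape."""
    ny, nx = shape
    u = np.linspace(-1.0, 1.0, nx)
    v = np.linspace(-1.0, 1.0, ny)
    # chebgrid2d returns an array indexed [x, y]; transpose to image order.
    return cheb.chebgrid2d(u, v, coeffs).T

# Hypothetical example: a tilted-plane background sampled at bin centers.
shape = (60, 80)
xs, ys = np.meshgrid(np.arange(8, 80, 16), np.arange(8, 60, 16))
zs = 2.0 + 0.01 * xs - 0.02 * ys

coeffs = fit_chebyshev_2d(xs, ys, zs, order=2, shape=shape)
model = evaluate_chebyshev_2d(coeffs, shape)
print(model.shape)
```

The resulting full-resolution model is what would be added to (or subtracted from) the input image to match it to the reference.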

Examine choices

Background estimation
- Mean vs. median
- Masking: common mask vs. per-pair mask
- Least-squares fit of the N(N-1)/2 difference images?

Spatial variation
– How to model the difference image (reference image minus input image)?
– Compare .detectionBinsize = {512, 378, 256, 128} vs. .backgroundOrder = {2, 3, 4, 5}
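
One way to put the two families on a common footing is to count free parameters. The sketch below rests on assumptions: an image of 2048 x 1489 pixels (the warped images may differ), one free value per bin for the binned model, and (n+1)(n+2)/2 coefficients for a 2-D polynomial of order n. The helper names are hypothetical.

```python
import math

def n_chebyshev_params(order):
    """Coefficients of a 2-D polynomial with total order <= `order` (assumed)."""
    return (order + 1) * (order + 2) // 2

def n_bins(width, height, binsize):
    """Number of superpixels needed to tile the image (partial bins count)."""
    return math.ceil(width / binsize) * math.ceil(height / binsize)

WIDTH, HEIGHT = 2048, 1489  # assumed image size in pixels

bins = {b: n_bins(WIDTH, HEIGHT, b) for b in (512, 378, 256, 128)}
params = {o: n_chebyshev_params(o) for o in (2, 3, 4, 5)}

for binsize, count in bins.items():
    print(f"bin size {binsize:3d}: {count:3d} bins")
for order, count in params.items():
    print(f"order {order}: {count:2d} coefficients")
```

Under these assumptions even the coarsest binning has about as many degrees of freedom as a mid-order polynomial, so the binned models are generally the more flexible of the two.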

1) First qualitative look
2) Quality metric
– Because input images are matched to a reference run, the resulting coadds should be seamless.
– Therefore, use the RMS of the overlap regions as the background-matching quality metric.

Quality metric: take the RMS of the overlap region’s unmasked pixels.

Figure: the difference of two overlap regions, for example (horizontal axis: R.A.).
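
A minimal sketch of this metric, assuming the two overlap regions are already resampled onto the same pixel grid and that the RMS is taken about zero, so a residual constant offset counts against the match. The function name `overlap_rms` and the test arrays are hypothetical.

```python
import numpy as np

def overlap_rms(overlap_a, overlap_b, mask_a, mask_b):
    """RMS of the difference of two overlap regions over unmasked pixels."""
    good = ~(mask_a | mask_b)
    if not good.any():
        return float("nan")
    diff = overlap_a[good] - overlap_b[good]
    return float(np.sqrt(np.mean(diff ** 2)))

# Hypothetical example: a residual offset of 0.5 counts and one masked pixel.
overlap_a = np.zeros((50, 20))
overlap_b = np.full((50, 20), 0.5)
overlap_b[0, 0] = 1000.0  # e.g. a cosmic ray
mask_a = np.zeros((50, 20), dtype=bool)
mask_b = np.zeros((50, 20), dtype=bool)
mask_b[0, 0] = True

rms = overlap_rms(overlap_a, overlap_b, mask_a, mask_b)
print(rms)
```

A lower value means adjacent fields agree better in their shared region, which is the sense in which the coadds are "seamless".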

Qualitative Look at Estimation: Pairwise Masks

Qualitative Look at Estimation: Common Mask

Metric: Estimation

Qualitative Look: Interpolation

– meas.algorithms.detection.getBackground(differenceImage)
– afw.math.Chebyshev1Function2D()

Figures (axes: RA, Dec), including a bin size comparison for meas.algorithms.detection.getBackground().

Quality Metric: Interpolation Methods

Strip to Strip matching

The Plan

1) Refactor the UW background matching code to use the butler and skyMap. Merge in features from ticket 2216. Make it usable by other pipeTasks.
2) Incorporate stitching code:
   a) Stitch patches (RA-wise) into strips (should just work).
   b) Finish code to match and stitch N-S strips (in the declination direction, using skyMap).
3) Validate by comparing mags and counts (to assess depth) with deeper catalogs.
4) Add enhancements:
   a) Allow coadds of any size to be generated per input specs.
   b) Post-stitching background subtraction, e.g.:
      i) Coadd components and stitch together.
      ii) Fit a background model to this large chunk.
      iii) Subtract this background model from the coadd.
      iv) Use this coadd as the reference for another iteration of background-matched coaddition, leaving the individual science images with a well-subtracted background optimal for detection and measurement.
