APARAPI

Java™ platform’s ‘Write Once Run Anywhere’® now includes the GPU

Gary Frost

AMD

PMTS Java Runtime Team

AGENDA

The age of heterogeneous computing is here

The supercomputer in your desktop/laptop

Why Java™?

Current GPU programming options for Java developers

Are developers likely to adopt emerging Java OpenCL™/CUDA™ bindings?

Aparapi

– What is it?

– How does it work?

Performance

Examples/Demos

Proposed Enhancements

Future work

2 | APARAPI : Java™ platform’s ‘Write Once Run Anywhere’® now includes the GPU | Austin JUG Nov 2011

THE AGE OF HETEROGENEOUS COMPUTE IS HERE

GPUs were originally developed to accelerate graphics operations

Early adopters repurposed their GPUs for ‘general compute’ by performing ‘unnatural acts’ with shader APIs

OpenGL allowed shaders/textures to be compiled and executed via extensions

OpenCL™/GLSL/CUDA™ standardized/formalized how to express GPU compute and simplified host programming

New programming models are emerging and lowering barriers to adoption


THE SUPERCOMPUTER IN YOUR DESKTOP

Some interesting tidbits from http://www.top500.org/

– November 2000

“ASCI White is new #1 with 4.9 TFlops on the Linpack”

http://www.top500.org/lists/2000/11

– November 2002

“3.2 TFlops are needed to enter the top 10”

http://www.top500.org/lists/2002/11

– May 2011

AMD Radeon™ HD 6990: 5.1 TFlops single-precision performance

http://www.amd.com/us/products/desktop/graphics/amd-radeon-hd-6000/hd-6990/Pages/amd-radeon-hd-6990-overview.aspx#3


WHY JAVA?

One of the most widely used programming languages

– http://www.tiobe.com/index.php/content/paperinfo/tpci/index.html

[Chart: TIOBE index share by language: Java, C, C++, C#, PHP, Objective C, Python, Other]

Established in domains likely to benefit from heterogeneous compute

– BigData, Search, Hadoop+Pig, Finance, GIS, Oil & Gas

Even if applications are not implemented in Java, they may still run on the Java Virtual Machine (JVM)

– JRuby, JPython, Scala, Clojure, Quercus (PHP)

Acts as a good proxy/indicator for enablement of other runtimes/interpreters

– JavaScript, Flash, .NET, PHP, Python, Ruby, Dalvik?


GPU PROGRAMMING OPTIONS FOR JAVA PROGRAMMERS

Emerging Java GPU APIs require coding a ‘Kernel’ in a domain-specific language

// JOCL/OpenCL kernel code
__kernel void squares(__global const float *in, __global float *out){
    int gid = get_global_id(0);
    out[gid] = in[gid] * in[gid];
}

As well as writing the Java ‘host’ CPU-based code to:

– Initialize the data

– Select/Initialize execution device

– Allocate or define memory buffers for args/parameters

– Compile 'Kernel' for a selected device

– Enqueue/Send arg buffers to device

– Execute the kernel

– Read results buffers back from the device

– Cleanup (remove buffers/queues/device handles)

– Use the results

import static org.jocl.CL.*;
import org.jocl.*;

public class Sample {
    public static void main(String args[]) {
        // Create input- and output data
        int size = 10;
        float inArr[] = new float[size];
        float outArray[] = new float[size];
        for (int i=0; i<size; i++) {
            inArr[i] = i;
        }
        Pointer in = Pointer.to(inArr);
        Pointer out = Pointer.to(outArray);

        // Obtain the platform IDs and initialize the context properties
        cl_platform_id platforms[] = new cl_platform_id[1];
        clGetPlatformIDs(1, platforms, null);
        cl_context_properties contextProperties = new cl_context_properties();
        contextProperties.addProperty(CL_CONTEXT_PLATFORM, platforms[0]);

        // Create an OpenCL context on a GPU device
        cl_context context = clCreateContextFromType(contextProperties,
            CL_DEVICE_TYPE_GPU, null, null, null);

        // Obtain the cl_device_id for the first device
        cl_device_id devices[] = new cl_device_id[1];
        clGetContextInfo(context, CL_CONTEXT_DEVICES,
            Sizeof.cl_device_id, Pointer.to(devices), null);

        // Create a command-queue
        cl_command_queue commandQueue =
            clCreateCommandQueue(context, devices[0], 0, null);

        // Allocate the memory objects for the input- and output data
        cl_mem inMem = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,
            Sizeof.cl_float * size, in, null);
        cl_mem outMem = clCreateBuffer(context, CL_MEM_READ_WRITE,
            Sizeof.cl_float * size, null, null);

        // Create the program from the source code
        cl_program program = clCreateProgramWithSource(context, 1, new String[]{
            "__kernel void sampleKernel("+
            "    __global const float *in,"+
            "    __global float *out){"+
            "    int gid = get_global_id(0);"+
            "    out[gid] = in[gid] * in[gid];"+
            "}"
        }, null, null);

        // Build the program
        clBuildProgram(program, 0, null, null, null, null);

        // Create and extract a reference to the kernel
        cl_kernel kernel = clCreateKernel(program, "sampleKernel", null);

        // Set the arguments for the kernel
        clSetKernelArg(kernel, 0, Sizeof.cl_mem, Pointer.to(inMem));
        clSetKernelArg(kernel, 1, Sizeof.cl_mem, Pointer.to(outMem));

        // Execute the kernel
        clEnqueueNDRangeKernel(commandQueue, kernel,
            1, null, new long[]{inArr.length}, null, 0, null, null);

        // Read the output data
        clEnqueueReadBuffer(commandQueue, outMem, CL_TRUE, 0,
            outArray.length * Sizeof.cl_float, out, 0, null, null);

        // Release kernel, program, and memory objects
        clReleaseMemObject(inMem);
        clReleaseMemObject(outMem);
        clReleaseKernel(kernel);
        clReleaseProgram(program);
        clReleaseCommandQueue(commandQueue);
        clReleaseContext(context);

        for (float f:outArray){
            System.out.printf("%5.2f, ", f);
        }
    }
}


ARE DEVELOPERS LIKELY TO ADOPT EMERGING JAVA OPENCL/CUDA BINDINGS?

Some will

– Early adopters

– Prepared to learn new languages

– Motivated to squeeze all the performance they can from available compute devices

– Prepared to implement algorithms both in Java and in CUDA/OpenCL

Many won’t

– The C99 heritage of OpenCL/CUDA is likely to disenfranchise Java developers

Many walked away from C/C++ or possibly never encountered it at all (due to shifts in CS education)

It is difficult to expose low-level concepts (such as the GPU memory model) to developers who have ‘moved on’ and just expect the JVM to ‘do the right thing’

Who pays for retraining Java developers?

– The notion of writing code twice (once for Java execution, and again for the GPU/APU) is alien

Where’s my ‘Write Once, Run Anywhere’?


WHAT IS APARAPI?

An API for expressing data parallel workloads in Java

– Developer extends a Kernel base class

– Compiles to Java bytecode using existing tool chain

– Uses the existing/familiar Java tool chain to debug the logic of Kernel implementations

A runtime component capable of either:

– Executing Kernel via a Java Thread Pool

– Converting Kernel bytecode to OpenCL and executing on GPU

MyKernel.class

Platform supports OpenCL?

– No → Execute Kernel using Java Thread Pool

– Yes → Can the bytecode be converted to OpenCL?

    No → Execute Kernel using Java Thread Pool

    Yes → Convert bytecode to OpenCL → Execute OpenCL Kernel on GPU

AN EMBARRASSINGLY PARALLEL USE CASE

First, let’s revisit our earlier code example

– Calculate square[0..size] for a given input in[0..size]

final int[] square = new int[size];
final int[] in = new int[size];
// populating in[0..size] omitted
for (int i=0; i<size; i++){
    square[i] = in[i] * in[i];
}

Note that the order in which we traverse the loop is unimportant

Ideally Java would provide a way to indicate that the body of the loop need not be executed sequentially

Something like a parallel-for?

// hypothetical syntax, not valid Java
parallel-for (int i=0; i<size; i++){
    square[i] = in[i] * in[i];
}

However, we don’t want to modify the language, compiler or tool chain.


REFACTORING OUR EXAMPLE TO USE APARAPI

final int[] square = new int[size];
final int[] in = new int[size]; // populating in[0..size] omitted
for (int i=0; i<size; i++){
    square[i] = in[i] * in[i];
}

new Kernel(){
    @Override public void run(){
        int i = getGlobalId();
        square[i] = in[i]*in[i];
    }
}.execute(size);


EXPRESSING DATA PARALLEL IN APARAPI

Kernel kernel = new Kernel(){
    @Override public void run(){
        int i = getGlobalId();
        square[i] = in[i]*in[i];
    }
};

kernel.execute(size);

What happens when we call execute(n)?

Is this the first execution?

– Yes → Platform supports OpenCL?

    No → Execute Kernel using Java Thread Pool

    Yes → Can the bytecode be converted to OpenCL?

        No → Execute Kernel using Java Thread Pool

        Yes → Convert bytecode to OpenCL → Execute OpenCL Kernel on GPU

– No → Do we have OpenCL?

    Yes → Execute OpenCL Kernel on GPU

    No → Execute Kernel using Java Thread Pool
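The decision flow above can be modeled in a few lines of plain Java. This is a minimal sketch, not Aparapi's implementation: `ExecutionDecider`, its `Mode` enum and the two `BooleanSupplier` probes (standing in for "platform supports OpenCL?" and "bytecode converted successfully?") are hypothetical names. It assumes the outcome of the first call is cached and reused by every later call.

```java
import java.util.function.BooleanSupplier;

// Hypothetical model of the execute(n) decision flow; not Aparapi's source.
final class ExecutionDecider {
    enum Mode { OPENCL_GPU, JAVA_THREAD_POOL }

    private final BooleanSupplier platformSupportsOpenCL; // hypothetical platform probe
    private final BooleanSupplier convertToOpenCL;        // hypothetical converter, true on success
    private Mode mode;                                     // null until the first execute(n)

    ExecutionDecider(BooleanSupplier platformSupportsOpenCL, BooleanSupplier convertToOpenCL) {
        this.platformSupportsOpenCL = platformSupportsOpenCL;
        this.convertToOpenCL = convertToOpenCL;
    }

    /** Returns how this call of execute(n) would run. */
    Mode execute() {
        if (mode == null) {
            // First execution: probe the platform, then attempt the expensive conversion once.
            if (platformSupportsOpenCL.getAsBoolean() && convertToOpenCL.getAsBoolean()) {
                mode = Mode.OPENCL_GPU;
            } else {
                mode = Mode.JAVA_THREAD_POOL;
            }
        }
        // Later executions: "Do we have OpenCL?" is answered by the cached result.
        return mode;
    }
}
```

Because the probes are `&&`-chained, the conversion is never attempted on a platform without OpenCL.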

FIRST CALL OF KERNEL.EXECUTE(SIZE) WHEN OPENCL/GPU IS AVAILABLE

Reload classfile via classloader and locate all methods and fields

For ‘run()’ method and all methods reachable from ‘run()’

– Convert method bytecode to an IR

Expression trees

Conditional sequences analyzed and converted to if{}, if{}else{} and for{} constructs

– Create a list of fields accessed by the bytecode

Note the access type (read/write/read+write)

Accessed fields will be turned into args and passed to generated OpenCL

Create an OpenCL buffer for each accessed primitive array (read, write or readwrite)

– Create and compile the OpenCL code

Bail out and revert to the Java Thread Pool if we encounter any issues in the previous steps
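To make "accessed fields will be turned into args" concrete, here is a hypothetical sketch of how a list of accessed fields might be rendered as an OpenCL kernel signature. `OpenCLArgSketch`, `FieldAccess` and the naming scheme are illustrative assumptions; Aparapi's real code generator is not shown here and will differ in detail.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: accessed fields -> OpenCL kernel parameters.
final class OpenCLArgSketch {
    enum Access { READ, WRITE, READ_WRITE }

    /** One field touched by the kernel's bytecode (illustrative representation). */
    static final class FieldAccess {
        final String name;
        final String type;      // element type for arrays, e.g. "int"
        final boolean isArray;
        final Access access;

        FieldAccess(String name, String type, boolean isArray, Access access) {
            this.name = name;
            this.type = type;
            this.isArray = isArray;
            this.access = access;
        }
    }

    static String kernelSignature(String kernelName, List<FieldAccess> fields) {
        List<String> params = new ArrayList<>();
        for (FieldAccess f : fields) {
            if (f.isArray) {
                // Arrays become global device buffers; read-only buffers can be marked const.
                String constness = (f.access == Access.READ) ? "const " : "";
                params.add("__global " + constness + f.type + " *" + f.name);
            } else {
                // Scalars are passed by value as plain kernel arguments.
                params.add(f.type + " " + f.name);
            }
        }
        return "__kernel void " + kernelName + "(" + String.join(", ", params) + ")";
    }
}
```

For the square example, `in` (read) and `square` (written) yield `__global const int *in` and `__global int *square`.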


ALL CALLS OF KERNEL.EXECUTE(SIZE) WHEN OPENCL/GPU IS AVAILABLE

Lock any accessed primitive arrays (so the garbage collector doesn’t move or collect them)

For each field readable by the kernel:

– If field is an array → enqueue a buffer write

– If field is scalar → set kernel arg value

Enqueue Kernel execution

For each array writeable by the kernel:

– Enqueue a buffer read

Wait for all enqueued requests to complete

Unlock accessed primitive arrays


KERNEL.EXECUTE(SIZE) WHEN OPENCL/GPU IS NOT AN OPTION

Create a thread pool

One thread per core

Clone Kernel once for each thread

Each Kernel accessed exclusively from a single thread

Each Kernel loops globalSize/threadCount times

Update globalId, localId, groupSize, globalSize on Kernel instance

Execute run() method on Kernel instance

Wait for all threads to complete
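A minimal sketch of the thread-pool fallback, assuming a hypothetical `MiniKernel` base class in place of Aparapi's `Kernel`. It follows the steps above (one thread per core, one clone per thread, ids assigned per thread) but only tracks `globalId` and `globalSize`, not `localId` or `groupSize`, and it splits the ids into contiguous chunks, with a shorter final chunk when `globalSize` does not divide evenly.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical stand-in for Aparapi's Kernel, showing only the thread-pool fallback. */
abstract class MiniKernel implements Cloneable {
    int globalId;    // set on each clone before every run() call
    int globalSize;

    abstract void run();

    private MiniKernel copy() {
        try {
            return (MiniKernel) clone();   // shallow copy: array fields stay shared
        } catch (CloneNotSupportedException e) {
            throw new AssertionError(e);
        }
    }

    void execute(int globalSize) {
        int threads = Runtime.getRuntime().availableProcessors();   // one thread per core
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> pending = new ArrayList<>();
            int chunk = (globalSize + threads - 1) / threads;        // ceil(globalSize / threads)
            for (int t = 0; t < threads; t++) {
                int start = t * chunk;
                int end = Math.min(globalSize, start + chunk);
                if (start >= end) break;
                MiniKernel k = copy();                                // each clone used by one thread only
                pending.add(pool.submit(() -> {
                    k.globalSize = globalSize;
                    for (int id = start; id < end; id++) {
                        k.globalId = id;                              // update id, then run the body
                        k.run();
                    }
                }));
            }
            for (Future<?> f : pending) f.get();                     // wait for all threads to complete
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```

A kernel body written as `square[globalId] = in[globalId] * in[globalId];` inside an anonymous `MiniKernel` subclass fills the whole output array, whatever the core count.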


BYTECODE PRIMER

Variable-length instructions

Access to immediate values

– Mostly constant pool and local variable table indexes

Stack-based execution

– IMUL: multiply two integers from the stack and push the result

– …, <op2>, <op1> => [ IMUL ] => …, <op1*op2>

– Sometimes the types and number of operands cannot be determined from the bytecode alone; we need to decode them from the ConstantPool

Some surprising omissions

– Store 0 in a local variable or field? (3+ bytes)

– Instead we push 0, then pop it into a local variable (4+ bytes)
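Stack-based execution is easy to see with a toy evaluator. The sketch below, `ToyStackMachine` (a hypothetical name), handles only a handful of `int` opcodes, spelled as strings rather than decoded bytes, and follows the notation above: `op1` is the top of the stack and `op2` the value beneath it.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy evaluator for a few int opcodes; illustrates stack-based execution, not a JVM. */
final class ToyStackMachine {
    static int run(String[] code, int[] locals) {
        Deque<Integer> stack = new ArrayDeque<>();
        for (String insn : code) {
            if (insn.startsWith("iconst_")) {
                stack.push(Integer.parseInt(insn.substring(7)));         // push a small non-negative constant
            } else if (insn.startsWith("iload_")) {
                stack.push(locals[Integer.parseInt(insn.substring(6))]); // push a local variable slot
            } else if (insn.startsWith("bipush ")) {
                stack.push(Integer.parseInt(insn.substring(7)));         // push a byte immediate
            } else {
                switch (insn) {
                    case "iadd": { int op1 = stack.pop(), op2 = stack.pop(); stack.push(op2 + op1); break; }
                    case "imul": { int op1 = stack.pop(), op2 = stack.pop(); stack.push(op2 * op1); break; }
                    case "idiv": { int op1 = stack.pop(), op2 = stack.pop(); stack.push(op2 / op1); break; }
                    case "ireturn": return stack.pop();
                    default: throw new IllegalArgumentException("unsupported: " + insn);
                }
            }
        }
        throw new IllegalStateException("no ireturn reached");
    }
}
```

For example, `iload_2, iload_1, iadd, iconst_2, idiv, ireturn` with slot 1 = 10 and slot 2 = 20 returns 15.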


BYTECODE PRIMER: FILE FORMAT

ClassFile {

u4 magic;

u2 minor_version;

u2 major_version;

u2 constant_pool_count;

cp_info constant_pool[constant_pool_count-1];

u2 access_flags;

u2 this_class;

u2 super_class;

u2 interfaces_count;

u2 interfaces[interfaces_count];

u2 fields_count;

field_info fields[fields_count];

u2 methods_count;

method_info methods[methods_count];

u2 attributes_count;

attribute_info attributes[attributes_count];

}
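The fixed-size start of the `ClassFile` structure can be read directly. This sketch uses `java.nio.ByteBuffer`, which is big-endian by default as the class file format requires; `ClassHeader` is a hypothetical name, and the sketch stops after `constant_pool_count` because constant pool entries are variable-length.

```java
import java.nio.ByteBuffer;

/** Reads the fixed-size start of a ClassFile (hypothetical helper). */
final class ClassHeader {
    final int minorVersion;
    final int majorVersion;
    final int constantPoolCount;

    private ClassHeader(int minorVersion, int majorVersion, int constantPoolCount) {
        this.minorVersion = minorVersion;
        this.majorVersion = majorVersion;
        this.constantPoolCount = constantPoolCount;
    }

    static ClassHeader parse(byte[] classBytes) {
        ByteBuffer buf = ByteBuffer.wrap(classBytes);   // big-endian by default
        if (buf.getInt() != 0xCAFEBABE) {               // u4 magic
            throw new IllegalArgumentException("not a class file");
        }
        int minor = buf.getShort() & 0xFFFF;            // u2 minor_version (read as unsigned)
        int major = buf.getShort() & 0xFFFF;            // u2 major_version
        int cpCount = buf.getShort() & 0xFFFF;          // u2 constant_pool_count (slots 1..count-1)
        return new ClassHeader(minor, major, cpCount);
    }
}
```

To inspect a real class, pass the bytes returned by `java.nio.file.Files.readAllBytes(...)`; a class compiled for Java 6 reports a `majorVersion` of 50.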


CONSTANTPOOL

Each class has one ConstantPool

A list of constant values used to describe the class

Not all entries correspond to source artifacts

The pool is made up of one or more entries containing:

– Primitive values (int, float, double, long)

Doubles and longs take two slots

– Strings (UTF8)

– Class/Method/Field/Interface descriptors

These descriptors contain grouped references to other slots.

So a method reference points to the slot containing the Class entry and to a NameAndType slot, which in turn references the slot containing the name of the method (UTF8/String) and the slot containing the signature (UTF8/String), say “(Ljava/lang/String;I)[F”
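Signatures such as `(Ljava/lang/String;I)[F` follow a small grammar: `[` adds an array dimension, `L...;` names a class, and single letters name primitives. A decoder sketch might look like this; `DescriptorSketch` is a hypothetical name, and it returns the parameter types followed by the return type as the last element.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical decoder for JVM method descriptors. */
final class DescriptorSketch {
    /** Decodes one type starting at pos[0] and advances pos[0] past it. */
    static String decodeType(String d, int[] pos) {
        int dims = 0;
        while (d.charAt(pos[0]) == '[') { dims++; pos[0]++; }   // each '[' is one array dimension
        char c = d.charAt(pos[0]++);
        String base;
        switch (c) {
            case 'B': base = "byte"; break;
            case 'C': base = "char"; break;
            case 'D': base = "double"; break;
            case 'F': base = "float"; break;
            case 'I': base = "int"; break;
            case 'J': base = "long"; break;
            case 'S': base = "short"; break;
            case 'Z': base = "boolean"; break;
            case 'V': base = "void"; break;                      // only valid as a return type
            case 'L': {                                          // Lfully/qualified/Name;
                int end = d.indexOf(';', pos[0]);
                base = d.substring(pos[0], end).replace('/', '.');
                pos[0] = end + 1;
                break;
            }
            default: throw new IllegalArgumentException("bad descriptor char: " + c);
        }
        StringBuilder sb = new StringBuilder(base);
        for (int i = 0; i < dims; i++) sb.append("[]");
        return sb.toString();
    }

    static List<String> decodeMethod(String descriptor) {
        if (descriptor.charAt(0) != '(') throw new IllegalArgumentException("not a method descriptor");
        List<String> types = new ArrayList<>();
        int[] pos = {1};
        while (descriptor.charAt(pos[0]) != ')') types.add(decodeType(descriptor, pos));
        pos[0]++;                                                // skip ')'
        types.add(decodeType(descriptor, pos));                  // return type last
        return types;
    }
}
```

Decoding `(Ljava/lang/String;I)[F` yields `[java.lang.String, int, float[]]`: a method taking a `String` and an `int` and returning a `float[]`.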


ATTRIBUTES

The various sections of the classfile contain sets of ‘attributes’

– Each attribute has a name, a length and a sequence of bytes (the value)

Think ‘HashMap<String, Pair<int, ?>>’

– Class/top-level attributes include the name of the source file, generic signature information, etc.

– Attributes can be nested

– Allows new features to be added to the classfile without violating the original spec

Field sections have lists of attributes

– Generic signature etc...

Method sections have lists of attributes

– Generic signature etc...

– One of the attributes of a Method is a ‘Code’ attribute

This contains the sequence of bytecodes representing the method body
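The generic attribute layout is `u2 attribute_name_index`, `u4 attribute_length`, then `attribute_length` bytes, which is what lets a reader skip attributes it does not understand. This sketch assumes the constant pool's UTF8 entries have already been decoded into a `String[]` indexed by slot; `AttributeSketch` is a hypothetical name, and keying a map by name keeps only the last of any duplicate names.

```java
import java.nio.ByteBuffer;
import java.util.LinkedHashMap;
import java.util.Map;

/** Generic attribute reader sketch (hypothetical helper, not a complete class-file parser). */
final class AttributeSketch {
    /**
     * Reads u2 attributes_count, then that many entries of
     *   u2 attribute_name_index; u4 attribute_length; u1 info[attribute_length];
     * utf8Pool is an assumed, already-decoded view of the constant pool's UTF8 entries.
     */
    static Map<String, byte[]> readAttributes(ByteBuffer buf, String[] utf8Pool) {
        int count = buf.getShort() & 0xFFFF;
        Map<String, byte[]> attributes = new LinkedHashMap<>();
        for (int i = 0; i < count; i++) {
            String name = utf8Pool[buf.getShort() & 0xFFFF];   // the name is a constant pool slot
            int length = buf.getInt();                          // u4; assumed to fit in an int here
            byte[] info = new byte[length];
            buf.get(info);                                      // value stays opaque until interpreted by name
            attributes.put(name, info);
        }
        return attributes;
    }
}
```

A `Code` attribute read this way still holds its nested structure (max_stack, max_locals, the bytecode and its own attributes) as raw bytes, which a second pass would decode.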


A BYTECODE TOUR

public void run() {
    int total = 0;
    for (int i = 0; i < 100; i++) {
        if (i%10==0 && i%4==0) {
            total++;
        }
    }
    System.out.println(total);
}

javap -c MyClass

 0: iconst_0
 1: istore_1
 2: iconst_0
 3: istore_2
 4: goto 26
 7: iload_2
 8: bipush 10
10: irem
11: ifne 23
14: iload_2
15: iconst_4
16: irem
17: ifne 23
20: iinc 1, 1
23: iinc 2, 1
26: iload_2
27: bipush 100
29: if_icmplt 7
32: getstatic #15; //Field java/lang/System.out:Ljava/io/PrintStream;
35: iload_1
36: invokevirtual #21; //Method java/io/PrintStream.println:(I)V
39: return

Instructions 0-1: store 0 in var slot 1 (int total = 0)

“Loop control”: instructions 2-4 and 23-29, Oracle javac style (the Eclipse compiler places the condition at the top and an unconditional branch at the bottom)

– 2-3: Executed once; store 0 in var slot 2 (int i = 0)

– 4: Branch to instruction #26

– 23: Increment var slot 2 by 1 (i++)

– 26-29: If var slot 2 < 100, branch to instruction at 7

“Loop body”: instructions 7-20

– “Condition control”: instructions 7-17; logical operators result in ‘short circuit’ branches (both ifne instructions branch to 23)

– “Conditional body”: instruction 20 (total++)

LET’S LOOK AT AN EXAMPLE

Let’s ‘fold’ the following instructions

0: iload_2
1: iload_1
2: iadd
3: iconst_2
4: idiv
5: ireturn

Start with an empty list, head and tail pointing to ‘NULL’

iload_2 consumes ‘0’ stack operands

– Create a new iload_2 and make it the tail of the list

– List: iload_2

iload_1 consumes ‘0’ stack operands

– Create a new iload_1 and add it to the tail of the existing linked list

– List: iload_2, iload_1

iadd consumes ‘2’ stack operands

– Create a new iadd

– Remove ‘tail’ from the list (adjust tail) and make it operand[1] of iadd

– Remove the new ‘tail’ (and adjust tail) and make it operand[0] of iadd

– List: iadd(operand 0: iload_2, operand 1: iload_1)

iconst_2 consumes ‘0’ stack operands

– Create a new iconst_2 and add it to the tail

– List: iadd(iload_2, iload_1), iconst_2

idiv consumes ‘2’ stack operands

– Create a new idiv

– Remove ‘tail’ from the list (adjust tail) and make it operand[1] of idiv

– Remove the new ‘tail’ (and adjust tail) and make it operand[0] of idiv

– List: idiv(iadd(iload_2, iload_1), iconst_2)

ireturn consumes ‘1’ stack operand

– Create a new ireturn and move the existing tail to become its operand[0]

– List: ireturn(idiv(iadd(iload_2, iload_1), iconst_2))
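The folding walk above can be expressed as a short loop: for each instruction, remove as many entries from the tail as it consumes, attach them as operands in order, and append the new node as the tail. This is a sketch under stated assumptions: `FoldSketch`, `Node` and the operand-count table are hypothetical and cover only the opcodes used in the example.

```java
import java.util.ArrayList;
import java.util.LinkedList;
import java.util.List;

/** Sketch of folding stack-based bytecode into expression trees (hypothetical names). */
final class FoldSketch {
    static final class Node {
        final String opcode;
        final List<Node> operands = new ArrayList<>();   // operand[0], operand[1], ...

        Node(String opcode) { this.opcode = opcode; }

        @Override public String toString() {
            if (operands.isEmpty()) return opcode;
            StringBuilder sb = new StringBuilder(opcode).append('(');
            for (int i = 0; i < operands.size(); i++) {
                if (i > 0) sb.append(", ");
                sb.append(operands.get(i));
            }
            return sb.append(')').toString();
        }
    }

    /** How many stack operands each supported opcode consumes (a tiny subset). */
    static int consumes(String opcode) {
        switch (opcode) {
            case "iload_1": case "iload_2": case "iconst_2": return 0;
            case "ireturn": return 1;
            case "iadd": case "idiv": return 2;
            default: throw new IllegalArgumentException("unsupported: " + opcode);
        }
    }

    static List<Node> fold(String[] code) {
        LinkedList<Node> list = new LinkedList<>();          // first = head, last = tail
        for (String opcode : code) {
            Node node = new Node(opcode);
            // The current tail becomes the last operand, the new tail the one before it, etc.
            for (int i = consumes(opcode); i > 0; i--) node.operands.add(0, list.removeLast());
            list.addLast(node);                               // the new node becomes the tail
        }
        return list;                                          // remaining entries are statement roots
    }
}
```

Folding the six instructions leaves a single root, `ireturn(idiv(iadd(iload_2, iload_1), iconst_2))`, i.e. `return((_max+_min)/2)` with `_min` in slot 1 and `_max` in slot 2.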

THE RESULT

After parsing we determine that this is a single return statement

For reference, here is the source:

public int mid(int _min, int _max){
    return((_max+_min)/2);
}

When we apply this approach to more complex methods, we end up with a linked list of instructions which represent the ‘roots’ of expressions or statements.

Essentially, we end up with a list of conditionals, gotos, assignments and return statements.

All branch targets are ‘roots’

From this we can fairly easily recognize higher-level structures (for/while/if/else)
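Two of the observations above translate directly into code: every branch target starts a new root, and (for Oracle javac output) a conditional branch backwards marks the end of a loop. This hypothetical sketch (`StructureSketch` and `Insn` are invented names) assumes instructions are already decoded with their branch targets; it does not handle the Eclipse compiler's unconditional backward `goto`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

/** Hypothetical helpers for spotting roots and javac-style loops in decoded bytecode. */
final class StructureSketch {
    /** One decoded instruction; target is -1 for non-branching instructions. */
    static final class Insn {
        final int pc;
        final String opcode;
        final int target;

        Insn(int pc, String opcode, int target) {
            this.pc = pc;
            this.opcode = opcode;
            this.target = target;
        }
    }

    /** Every branch target starts a new root. */
    static TreeSet<Integer> branchTargets(List<Insn> code) {
        TreeSet<Integer> targets = new TreeSet<>();
        for (Insn insn : code) if (insn.target >= 0) targets.add(insn.target);
        return targets;
    }

    /** A conditional branch backwards closes a loop spanning [target, pc]. */
    static List<int[]> loops(List<Insn> code) {
        List<int[]> result = new ArrayList<>();
        for (Insn insn : code) {
            boolean conditional = insn.target >= 0 && !insn.opcode.equals("goto");
            if (conditional && insn.target < insn.pc) result.add(new int[]{insn.target, insn.pc});
        }
        return result;
    }
}
```

For the `run()` method from the bytecode tour, the branch targets are 7, 23 and 26, and the single loop spans instructions 7 to 29.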


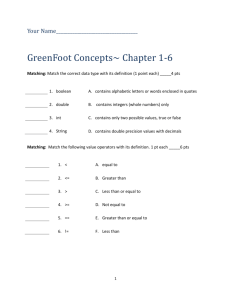

ADOPTION CHALLENGES (APARAPI VS EXISTING JAVA GPU BINDINGS)

Task                                                        Emerging GPU bindings   Aparapi
Learn OpenCL/CUDA                                           DIFFICULT               N/A
Locate potential data parallel opportunities                MEDIUM                  MEDIUM
Refactor existing code/data structures                      MEDIUM                  MEDIUM
Create Kernel code                                          DIFFICULT               EASY
Create code to coordinate execution and buffer transfers    MEDIUM                  N/A
Identify GPU performance bottlenecks                        DIFFICULT               DIFFICULT
Iterate code/debug algorithm logic                          DIFFICULT               MEDIUM
Solve build/deployment issues                               DIFFICULT               MEDIUM


MANDELBROT EXAMPLE

// assumes w, h (image width/height), maxIterations, rgb[] and pallette[] are fields visible to the kernel
new Kernel(){
    @Override public void run() {
        int gid = getGlobalId();
        float x = (((gid % w)-(w/2))/(float)w); // x {-0.5 .. +0.5}
        float y = (((gid / w)-(h/2))/(float)h); // y {-0.5 .. +0.5}
        float zx = x, zy = y, new_zx = 0f;
        int count = 0;
        while (count < maxIterations && zx * zx + zy * zy < 8) {
            new_zx = zx * zx - zy * zy + x;
            zy = 2 * zx * zy + y;
            zx = new_zx;
            count++;
        }
        rgb[gid] = pallette[count];
    }
}.execute(w * h);


CONWAY’S ‘GAME OF LIFE’ EXAMPLE

@Override public void run() {
    int gid = getGlobalId();
    int to = gid + toBase;
    int from = gid + fromBase;
    int x = gid%width;
    int y = gid/width;
    if ((x == 0 || x == width - 1 || y == 0 || y == height - 1)) {
        imageData[to] = imageData[from];
    }else{
        int neighbors = (imageData[from - 1] & 1) +         // WEST
                        (imageData[from + 1] & 1) +         // EAST
                        (imageData[from - width - 1] & 1) + // NORTHWEST
                        (imageData[from - width] & 1) +     // NORTH
                        (imageData[from - width + 1] & 1) + // NORTHEAST
                        (imageData[from + width - 1] & 1) + // SOUTHWEST
                        (imageData[from + width] & 1) +     // SOUTH
                        (imageData[from + width + 1] & 1);  // SOUTHEAST
        if (neighbors == 3 || (neighbors == 2 && imageData[from] == ALIVE)) {
            imageData[to] = ALIVE;
        }else{
            imageData[to] = DEAD;
        }
    }
}


EXPRESSING DATA PARALLEL IN JAVA WITH APARAPI BY EXTENDING KERNEL

class SquareKernel extends Kernel{
    final int[] in, square;

    public SquareKernel(final int[] in){
        this.in = in;
        this.square = new int[in.length];
    }

    @Override public void run(){
        int i = getGlobalId();
        square[i] = in[i]*in[i];
    }

    public int[] square(){
        execute(in.length);
        return(square);
    }
}

int []in = new int[size];
SquareKernel squareKernel = new SquareKernel(in);
// populating in[0..size] omitted
int[] result = squareKernel.square();

square() method ‘wraps’ the execution mechanics

Provides a more natural Java API

EXPRESSING DATA PARALLELISM IN APARAPI USING PROPOSED JAVA 8 LAMBDAS

JSR 335 ‘Project Lambda’ proposes the addition of ‘lambda’ expressions to Java 8.

http://cr.openjdk.java.net/~briangoetz/lambda/lambda-state-3.html

How we expect Aparapi will make use of the proposed Java 8 extensions:

final int [] square = new int[size];
final int [] in = new int[size]; // populating in[0..size] omitted
Kernel.execute(size, #{ i -> square[i]=in[i]*in[i]; });
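The lambda syntax that shipped in Java 8 is `i -> ...`, without the `#{ }` wrapper of the proposal above. As a CPU-only point of comparison (this uses `java.util.stream.IntStream` from the JDK, not Aparapi), the same square computation can be written as follows; `LambdaSquares` is a hypothetical name.

```java
import java.util.stream.IntStream;

/** CPU-only comparison using JDK streams (hypothetical helper, not Aparapi). */
final class LambdaSquares {
    static int[] squares(int[] in) {
        int[] square = new int[in.length];
        // Each index is independent, so the parallel stream may visit them in any order.
        IntStream.range(0, in.length).parallel().forEach(i -> square[i] = in[i] * in[i]);
        return square;
    }
}
```

The lambda body plays the role of the kernel's `run()`, and the range size plays the role of `execute(size)`.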


HOW APARAPI EXECUTES ON THE GPU

At runtime, Aparapi converts Java bytecode to OpenCL

The OpenCL compiler converts OpenCL to device-specific ISA (for GPU/APU)

A GPU is composed of multiple SIMD (Single Instruction, Multiple Data) cores

SIMD performance stems from executing the same instruction on different data at the same time

– Think single program counter shared across multiple threads

– All SIMDs executing at the same time (in lock-step)

new Kernel(){
    @Override public void run(){
        int i = getGlobalId();
        int temp = in[i]*2;
        temp = temp+1;
        out[i] = temp;
    }
}.execute(4);

i=0                 i=1                 i=2                 i=3
int temp=in[0]*2    int temp=in[1]*2    int temp=in[2]*2    int temp=in[3]*2
temp=temp+1         temp=temp+1         temp=temp+1         temp=temp+1
out[0]=temp         out[1]=temp         out[2]=temp         out[3]=temp


DEVELOPER IS RESPONSIBLE FOR ENSURING PROBLEM IS DATA PARALLEL

Data dependencies may violate the ‘in any order’ contract

for (int i=1; i< 100; i++){

out[i] = out[i-1]+in[i];

}

new Kernel(){ @Override public void run(){
    int i = getGlobalId() + 1; // + 1 mirrors the loop, which starts at i=1
    out[i] = out[i-1]+in[i];
}}.execute(99);

out[i-1] refers to a value resulting from a previous iteration which may not have been evaluated yet

Loops mutating shared data will need to be refactored or will necessitate atomic operations

for (int i=0; i< 100; i++){

sum += in[i];

}

new Kernel(){ @Override public void run(){
    int i = getGlobalId();
    sum += in[i];
}}.execute(100);

sum += in[i] causes a race condition

Almost certainly will not be atomic when translated to OpenCL

Not safe in multi-threaded Java either
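In plain multi-threaded Java, one way to make the shared `sum += in[i]` safe is an atomic read-modify-write. This sketch (`AtomicSum` is a hypothetical name) uses `java.util.concurrent.atomic.AtomicLong`. It says nothing about how Aparapi would translate such code, and every update still contends on a single variable.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Multi-threaded sum where each update is an atomic read-modify-write (hypothetical helper). */
final class AtomicSum {
    static long sum(int[] in, int threads) {
        AtomicLong sum = new AtomicLong();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int first = t;
            workers[t] = new Thread(() -> {
                // Strided split: thread t handles indices t, t + threads, t + 2*threads, ...
                for (int i = first; i < in.length; i += threads) {
                    sum.addAndGet(in[i]);   // unlike a plain 'sum += in[i]', no update can be lost
                }
            });
            workers[t].start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while summing", e);
        }
        return sum.get();
    }
}
```

The contention on one atomic variable is the cost that per-group partial sums avoid.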


SOMETIMES WE CAN REFACTOR TO RECOVER SOME PARALLELISM

for (int i=0; i< 100; i++){
    sum += in[i];
}

new Kernel(){ @Override public void run(){
    int i = getGlobalId();
    sum += in[i];
}}.execute(100);

for (int n=0; n<10; n++){
    for (int i=0; i<10; i++){
        partial[n] += in[n*10+i];
    }
}
for (int i=0; i< 10; i++){
    sum += partial[i];
}

new Kernel(){
    @Override public void run(){
        int n = getGlobalId();
        for (int i=0; i<10; i++){
            partial[n] += in[n*10+i];
        }
    }
}.execute(10);
for (int i=0; i< 10; i++){
    sum += partial[i];
}
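The partial-sum refactoring generalizes beyond the hard-coded 100 elements and 10 groups. This sequential sketch (`PartialSums` is a hypothetical name) shows the two phases: phase 1 is the independent per-group work that each kernel work-item `n` would do, and phase 2 is the short sequential reduction. It assumes `groups >= 1` and lets the last group absorb any remainder.

```java
/** Two-phase reduction sketch (hypothetical helper); assumes groups >= 1. */
final class PartialSums {
    static long sum(int[] data, int groups) {
        long[] partial = new long[groups];
        int perGroup = data.length / groups;
        // Phase 1: each group n is independent, like one kernel work-item per n.
        for (int n = 0; n < groups; n++) {
            int start = n * perGroup;
            int end = (n == groups - 1) ? data.length : start + perGroup; // last group takes the remainder
            for (int i = start; i < end; i++) partial[n] += data[i];
        }
        // Phase 2: a short sequential pass over the partial sums.
        long sum = 0;
        for (long p : partial) sum += p;
        return sum;
    }
}
```

Only phase 1 is a candidate for the GPU; phase 2 touches just `groups` values.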


TRY TO AVOID BRANCHING WHEREVER POSSIBLE

SIMD performance is impacted when code contains branches

– To stay in lockstep, SIMDs must process both the 'then' and 'else' blocks

– Use the result of the 'condition' to predicate instructions (conditionally mask to a no-op)

new Kernel(){
    @Override public void run(){
        int i = getGlobalId();
        int temp = in[i]*2;
        if (i%2==0)
            temp = temp+1;
        else
            temp = temp-1;
        out[i] = temp;
    }
}.execute(4);

i=0

i=1

i=2

i=3

int temp =in[0]*2

int temp =in[1]*2

int temp =in[2]*2

int temp =in[3]*2

<c> = (0%2==0)

<c> = (1%2==0)

<c> = (2%2==0)

<c> = (3%2==0)

if< c> temp=temp+1

if< c> temp=temp+1

if< c> temp=temp+1

if< c> temp=temp+1

if <!c> temp=temp-1

if <!c> temp=temp-1

if <!c> temp=temp-1

if <!c> temp=temp-1

out[0]=temp

out[1]=temp

out[2]=temp

out[3]=temp
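For a branch this simple, the two arms can often be folded into arithmetic so that every lane executes the same instructions. The sketch below (plain Java, illustrative names) checks that a branch-free rewrite of the kernel body computes the same values; it relies on i being non-negative, as global ids are. Whether the rewrite is actually faster on a given GPU is an assumption to measure, since the OpenCL compiler may already predicate such a short branch.

```java
class Branchless {
    // Kernel body from the slide, with the if/else.
    static int withBranch(int i, int[] in) {
        int temp = in[i] * 2;
        if (i % 2 == 0)
            temp = temp + 1;
        else
            temp = temp - 1;
        return temp;
    }

    // Same result without a branch: for i >= 0, (i & 1) is 0 for even i and 1 for odd i,
    // so 1 - 2 * (i & 1) evaluates to +1 or -1.
    static int branchFree(int i, int[] in) {
        return in[i] * 2 + 1 - 2 * (i & 1);
    }

    public static void main(String[] args) {
        int[] in = {5, -3, 0, 7};
        for (int i = 0; i < in.length; i++) {
            System.out.println(withBranch(i, in) + " " + branchFree(i, in));
        }
    }
}
```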


CHARACTERISTICS OF IDEAL DATA PARALLEL WORKLOADS

Code which iterates over large arrays of primitives

– Where the order of iteration is not critical

Avoid data dependencies between iterations

– Each iteration contains sequential code (few branches)

Good balance between data size (low) and compute (high)

– 32/64 bit data types preferred

– Transfer of data to/from the GPU can be costly

Although APUs are likely to mitigate this over time

– Trivial compute is often not worth the transfer cost

– May still benefit by freeing up the CPU for other work
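A rough way to judge the compute/data balance is to compare arithmetic operations with bytes moved between host and GPU. The sketch below uses assumed operation counts (one add per element for a vector add, and roughly 20 floating point operations per body-pair interaction, counted loosely from the NBody kernel that follows) and assumes positions and velocities are copied in and out on every step. The results are order-of-magnitude estimates, not measurements.

```java
class ComputeToTransfer {
    // Operations per byte copied between host and device (higher favors the GPU).
    static double opsPerByte(double ops, double bytes) {
        return ops / bytes;
    }

    // out[i] = a[i] + b[i] on ints: n adds; two int arrays in, one int array out (4 bytes each).
    static double vectorAdd(long n) {
        return opsPerByte(n, 3.0 * 4 * n);
    }

    // One NBody step: n * n interactions at ~20 flops each (assumed); xyz and vxyz
    // (6 floats per body) copied to the device and back.
    static double nbodyStep(long n) {
        return opsPerByte(20.0 * n * n, 2.0 * 6 * 4 * n);
    }

    public static void main(String[] args) {
        System.out.println(vectorAdd(1 << 20)); // 1/12, independent of n
        System.out.println(nbodyStep(1 << 10)); // grows linearly with n
    }
}
```

The vector add stays at one operation per 12 bytes however large the data, while the NBody ratio grows with the number of bodies, which is why the latter is a good GPU candidate.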


[Figure: compute vs. data size, with regions labeled 'Ideal' and 'GPU Memory']

NBODY EXAMPLE

@Override public void run() {
   int body = getGlobalId();
   int count = bodies * 3;
   int globalId = body * 3;
   float accx = 0.f;
   float accy = 0.f;
   float accz = 0.f;
   float myPosx = xyz[globalId + 0];
   float myPosy = xyz[globalId + 1];
   float myPosz = xyz[globalId + 2];
   for (int i = 0; i < count; i += 3) {
      float dx = xyz[i + 0] - myPosx;
      float dy = xyz[i + 1] - myPosy;
      float dz = xyz[i + 2] - myPosz;
      float invDist = rsqrt((dx * dx) + (dy * dy) + (dz * dz) + espSqr);
      float s = mass * invDist * invDist * invDist;
      accx = accx + s * dx;
      accy = accy + s * dy;
      accz = accz + s * dz;
   }
   accx = accx * delT;
   accy = accy * delT;
   accz = accz * delT;
   xyz[globalId + 0] = myPosx + vxyz[globalId + 0] * delT + accx * .5f * delT;
   xyz[globalId + 1] = myPosy + vxyz[globalId + 1] * delT + accy * .5f * delT;
   xyz[globalId + 2] = myPosz + vxyz[globalId + 2] * delT + accz * .5f * delT;
   vxyz[globalId + 0] = vxyz[globalId + 0] + accx;
   vxyz[globalId + 1] = vxyz[globalId + 1] + accy;
   vxyz[globalId + 2] = vxyz[globalId + 2] + accz;
}


APARAPI NBODY EXAMPLE

NBody is a common OpenCL/CUDA benchmark/demo

– For each particle/body

Calculate the new position based on the gravitational force exerted on each body by every other body

Essentially an O(N^2) problem

– If we double the number of bodies, we perform four times as many positional calculations

The following charts compare:

– Naïve Java version (single loop)

– Aparapi version using Java Thread Pool

– Aparapi version running on the GPU (ATI Radeon ™ 5870)
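For reference, a single-threaded version of the kernel might look like the sketch below (an assumption, not the benchmark's actual "naïve Java" source). The outer loop over body plays the role of getGlobalId(), Math.sqrt replaces the kernel's rsqrt(), and new positions go to a separate array so every body reads the same pre-step positions (the kernel as shown writes xyz in place while other work items may still be reading it). The returned interaction count grows as N^2, so doubling the bodies quadruples the work.

```java
class NBodyStep {
    // Advances all bodies one time step; returns the number of body-pair interactions computed.
    static long step(float[] xyz, float[] vxyz, float[] newXyz,
                     float mass, float delT, float espSqr) {
        int bodies = xyz.length / 3;
        int count = bodies * 3;
        long interactions = 0;
        for (int body = 0; body < bodies; body++) { // one iteration per kernel work item
            int globalId = body * 3;
            float accx = 0f, accy = 0f, accz = 0f;
            float myPosx = xyz[globalId], myPosy = xyz[globalId + 1], myPosz = xyz[globalId + 2];
            for (int i = 0; i < count; i += 3) {
                float dx = xyz[i] - myPosx;
                float dy = xyz[i + 1] - myPosy;
                float dz = xyz[i + 2] - myPosz;
                float invDist = (float) (1.0 / Math.sqrt(dx * dx + dy * dy + dz * dz + espSqr));
                float s = mass * invDist * invDist * invDist;
                accx += s * dx;
                accy += s * dy;
                accz += s * dz;
                interactions++;
            }
            accx *= delT;
            accy *= delT;
            accz *= delT;
            newXyz[globalId] = myPosx + vxyz[globalId] * delT + accx * .5f * delT;
            newXyz[globalId + 1] = myPosy + vxyz[globalId + 1] * delT + accy * .5f * delT;
            newXyz[globalId + 2] = myPosz + vxyz[globalId + 2] * delT + accz * .5f * delT;
            // Each body reads only its own velocity, so updating vxyz in place is safe.
            vxyz[globalId] += accx;
            vxyz[globalId + 1] += accy;
            vxyz[globalId + 2] += accz;
        }
        return interactions;
    }

    public static void main(String[] args) {
        float[] xyz = {-1f, 0f, 0f, 1f, 0f, 0f}; // two bodies on the x axis
        float[] vxyz = new float[6];
        float[] newXyz = new float[6];
        long n = step(xyz, vxyz, newXyz, 1f, 0.01f, 0.01f);
        System.out.println(n + " interactions; x = " + newXyz[0] + ", " + newXyz[3]);
    }
}
```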


APARAPI NBODY PERFORMANCE (FRAME RATE VS NUMBER OF BODIES)

Frames per second, by number of bodies/particles:

Bodies   Java Single Thread   Aparapi Thread Pool   Aparapi GPU
1k       80.42                260.8                 670.2
2k       19.96                72.67                 389.12
4k       5.19                 19.37                 186.05
8k       1.29                 5.47                  79.87
16k      0.32                 1.45                  34.24
32k      0.08                 0.38                  12.18
64k      0.02                 0.1                   3.57
128k     0.01                 0.02                  0.94

NBODY PERFORMANCE: CALCULATIONS PER µSEC VS. NUMBER OF BODIES

Position calculations per µs, by number of bodies/particles:

Bodies   Java Single Thread   Aparapi Thread Pool   Aparapi GPU
1k       84                   273                   702
2k       83                   304                   1632
4k       83                   313                   3146
8k       86                   367                   5360
16k      86                   388                   9190
32k      86                   407                   13078
64k      86                   412                   15663
128k     86                   412                   16101
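The two charts appear to be related by simple arithmetic. Assuming that "1k" means 1024 bodies and that one "position calculation" is one body-pair interaction (so each frame costs N^2 of them), calculations per µs equal N^2 × frames per second / 10^6. The sketch below applies that assumed conversion: 79.87 fps at 8k bodies gives about 5360 per µs, matching the GPU entry above, and the larger entries generally agree to within a few percent (the smallest frame rates are too heavily rounded to compare).

```java
class NBodyRates {
    // Assumed conversion between the two charts: each frame computes bodies * bodies interactions.
    static double calcsPerMicrosecond(int bodies, double framesPerSecond) {
        double perFrame = (double) bodies * bodies;
        return perFrame * framesPerSecond / 1_000_000.0;
    }

    public static void main(String[] args) {
        System.out.printf("%.0f%n", calcsPerMicrosecond(8 * 1024, 79.87));  // 5360
        System.out.printf("%.0f%n", calcsPerMicrosecond(16 * 1024, 34.24)); // 9191 (chart: 9190)
    }
}
```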


APARAPI EXPLICIT BUFFER MANAGEMENT

This code demonstrates a fairly common pattern, namely a Kernel executed inside a loop:

int [] buffer = new int[HUGE];
int [] unusedBuffer = new int[HUGE];

Kernel k = new Kernel(){
   @Override public void run(){
      // mutates buffer contents
      // no reference to unusedBuffer
   }
};

for (int i=0; i< 1000; i++){
   // (implicit) transfer buffer to GPU
   k.execute(HUGE);
   // (implicit) transfer buffer from GPU
}

Although Aparapi analyzes kernel methods to optimize host buffer transfer requests, it has no knowledge of buffer accesses from the enclosing loop. Aparapi must assume that the buffer is modified between invocations.

This forces (possibly unnecessary) buffer copies to and from the device for each invocation of Kernel.execute(int).


APARAPI EXPLICIT BUFFER MANAGEMENT

Using the new explicit buffer management APIs:

int [] buffer = new int[HUGE];

Kernel k = new Kernel(){
   @Override public void run(){
      // mutates buffer contents
   }
};

k.setExplicit(true);
k.put(buffer);
for (int i=0; i< 1000; i++){
   k.execute(HUGE);
}
k.get(buffer);

The developer takes control of all buffer transfers by marking the kernel as explicit, then coordinates when/if transfers take place: one put() before the loop and one get() after it.

Here we save 999 buffer writes and 999 buffer reads.
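The saving can be checked with a small model. The FakeKernel class below is hypothetical (it is not Aparapi's Kernel and moves no data); it only counts the host-to-device "puts" and device-to-host "gets" that AUTO mode implies around every execute, versus the single put and get issued by hand in explicit mode.

```java
class TransferModel {
    // Hypothetical stand-in for a kernel; counts buffer copies instead of performing them.
    static final class FakeKernel {
        private final boolean explicit;
        int puts, gets;

        FakeKernel(boolean explicit) {
            this.explicit = explicit;
        }

        void put() { puts++; } // explicit copy host -> device
        void get() { gets++; } // explicit copy device -> host

        void execute() {
            if (!explicit) {
                puts++; // AUTO: copy the buffer in before running...
                gets++; // ...and back out afterwards
            }
            // (kernel work would run here)
        }
    }

    static FakeKernel runLoop(boolean explicit, int iterations) {
        FakeKernel k = new FakeKernel(explicit);
        if (explicit) k.put();
        for (int i = 0; i < iterations; i++) {
            k.execute();
        }
        if (explicit) k.get();
        return k;
    }

    public static void main(String[] args) {
        FakeKernel auto = runLoop(false, 1000);
        FakeKernel manual = runLoop(true, 1000);
        System.out.println("saved writes: " + (auto.puts - manual.puts)); // 999
        System.out.println("saved reads: " + (auto.gets - manual.gets));  // 999
    }
}
```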


APARAPI EXPLICIT BUFFER MANAGEMENT

A possible alternative might be to expose the ‘host’ code to Aparapi:

int [] buffer = new int[HUGE];

Kernel k = new Kernel(){
   @Override public void run(){
      // mutates buffer contents
   }
   @Override public void host(){
      for (int i=0; i< 1000; i++){
         execute(HUGE);
      }
   }
};

k.host();

The developer exposes the host code to Aparapi by overriding the host() method. By analyzing the bytecode of host(), Aparapi can determine when/if buffers are mutated and can ‘inject’ appropriate put()/get() requests behind the scenes.


APARAPI BITONIC SORT WITH EXPLICIT BUFFER MANAGEMENT

Bitonic mergesort is a parallel-friendly ‘in place’ sorting algorithm

– http://en.wikipedia.org/wiki/Bitonic_sorter

On 10/18/2010 the following post appeared on the Aparapi forums:

“Aparapi 140x slower than single thread Java?! what am I doing wrong?”

– Source code (for Bitonic Sort) was included in the post

An Aparapi Kernel (for each sort pass) was executed inside a Java loop.

Aparapi was forcing unnecessary buffer copies.

The following chart compares:

– Single threaded Java version

– Aparapi/GPU version without explicit buffer management (default AUTO mode)

– Aparapi/GPU version with the recent explicit buffer management feature enabled

Both Aparapi versions were run on an ATI Radeon™ 5870.
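The pass structure that made this code so sensitive to buffer copies is visible in a plain-Java sketch of bitonic sort (the standard formulation for power-of-two sizes; names are illustrative and this is not the forum poster's source). Each iteration of the two outer loops is one pass, i.e. one Kernel.execute() in an Aparapi version; within a pass every index can be processed independently, which a parallel IntStream stands in for here. An array of 2^m integers needs m(m+1)/2 passes, so in AUTO mode the buffer crosses the bus twice per pass.

```java
import java.util.stream.IntStream;

class BitonicSort {
    // Sorts a ascending (length must be a power of two); returns the number of passes executed.
    static int sort(int[] a) {
        int n = a.length;
        if (Integer.bitCount(n) != 1) {
            throw new IllegalArgumentException("length must be a power of two");
        }
        int passes = 0;
        for (int k = 2; k <= n; k <<= 1) {         // size of the bitonic sequences being merged
            for (int j = k >> 1; j > 0; j >>= 1) { // compare-exchange distance for this pass
                final int size = k, dist = j;
                // One pass = one kernel execution: index i compares itself with i ^ dist.
                IntStream.range(0, n).parallel().forEach(i -> {
                    int partner = i ^ dist;
                    if (partner > i) {             // only the lower index of each pair does the work
                        boolean ascending = (i & size) == 0;
                        if (ascending ? a[i] > a[partner] : a[i] < a[partner]) {
                            int t = a[i];
                            a[i] = a[partner];
                            a[partner] = t;
                        }
                    }
                });
                passes++;
            }
        }
        return passes;
    }
}
```

For the chart's largest size, 4096k = 2^22 integers, that is 22 × 23 / 2 = 253 passes.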


EXPLICIT BUFFER MANAGEMENT EFFECT ON BITONIC SORT IMPLEMENTATION

[Chart: time (ms) vs. number of integers (16k to 4096k) for Java Single Thread, GPU (AUTO) and GPU (EXPLICIT)]

APARAPI EXPLICIT BUFFER MANAGEMENT

Alternative for simple tight loops:

– Use an extended form of Kernel.execute(range, count)

int [] buffer = new int[HUGE];

Kernel k = new Kernel(){
   @Override public void run(){
      // mutates buffer contents
   }
};

Instead of:

k.setExplicit(true);
k.put(buffer);
for (int i=0; i< 1000; i++){
   k.execute(HUGE);
}
k.get(buffer);

use:

k.execute(HUGE, 1000);


APARAPI ENHANCEMENT: ALLOW ACCESS TO ARRAYS OF OBJECTS

A Java developer implementing an 'nbody' solution would probably define a class for each particle

public class Particle{
   private int x, y, z;
   private String name;
   private Color color;
   // ...
}

… would make all fields private and limit access via setters/getters

public void setX(int x){ this.x = x; }
public int getX(){ return this.x; }
// same for y, z, name etc

… and expect to create a Kernel to update positions for an array of such particles

Particle[] particles = new Particle[1024];
ParticleKernel kernel = new ParticleKernel(particles);
while(displaying){
   kernel.execute(particles.length);
   updateDisplayPositions(particles);
}


APARAPI ENHANCEMENT: ALLOW ACCESS TO ARRAYS OF OBJECTS

Unfortunately the original ‘alpha’ version of Aparapi would fail to convert this kernel to OpenCL

– It would fall back to using a Thread Pool.

Aparapi initially required that the OO form be refactored so that data is held in primitive arrays

int[] x = new int[1024];
int[] y = new int[1024];
int[] z = new int[1024];
Color[] color = new Color[1024];
String[] name = new String[1024];
Positioner.position(x, y, z);

This is clearly a potential ‘barrier to adoption’


APARAPI ENHANCEMENT: ALLOW ACCESS TO ARRAYS OF OBJECTS

The enhancement allows Aparapi Kernels to access arrays (or array-based collections) of objects

At runtime Aparapi:

– Tracks all fields accessed via objects reachable from Kernel.run()

– Extracts the data from these fields into a parallel-array form

– Executes a Kernel using the parallel-array form

– Returns the data back into the original object array

These extra steps do impact performance (compared with the refactored data-parallel form)

– However, we can still demonstrate performance gains over non-Aparapi versions
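The runtime steps listed above can be imitated by hand. In the sketch below the Particle class is trimmed to the fields a position update touches, and moveAll is a hypothetical helper (not Aparapi's implementation) that extracts the fields into parallel primitive arrays, runs a data-parallel update over those arrays (a parallel IntStream standing in for the kernel), and writes the results back into the original objects. The two copy loops are the extra work that costs performance compared with keeping the data in primitive arrays.

```java
import java.util.stream.IntStream;

class ParticleFlattening {
    // Trimmed-down version of the slide's Particle: private fields, accessed via getters/setters.
    static final class Particle {
        private int x, y, z;

        Particle(int x, int y, int z) { this.x = x; this.y = y; this.z = z; }

        int getX() { return x; }
        int getY() { return y; }
        int getZ() { return z; }
        void setX(int x) { this.x = x; }
        void setY(int y) { this.y = y; }
        void setZ(int z) { this.z = z; }
    }

    // Hypothetical helper: shift every particle by (dx, dy, dz) using the parallel-array form.
    static void moveAll(Particle[] particles, int dx, int dy, int dz) {
        int n = particles.length;
        int[] x = new int[n], y = new int[n], z = new int[n];

        // 1. Extract the accessed fields into parallel arrays.
        for (int i = 0; i < n; i++) {
            x[i] = particles[i].getX();
            y[i] = particles[i].getY();
            z[i] = particles[i].getZ();
        }

        // 2. Run the data-parallel update on primitive arrays only.
        IntStream.range(0, n).parallel().forEach(i -> {
            x[i] += dx;
            y[i] += dy;
            z[i] += dz;
        });

        // 3. Return the results to the original objects.
        for (int i = 0; i < n; i++) {
            particles[i].setX(x[i]);
            particles[i].setY(y[i]);
            particles[i].setZ(z[i]);
        }
    }
}
```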


FUTURE WORK

Sync with ‘Project Lambda’ (Java 8) and allow kernels to be represented as lambda expressions

Continue to investigate automatic extraction of buffer transfers from object collections

Determine how to utilize Aparapi from Hadoop

Hand more explicit control to ‘power users’

– Explicit buffer (or even sub buffer) transfers

– Expose local memory and barriers

– Allow new library methods to be defined in OpenCL+Java and accessed from Kernels

Possibly using JOCL

The library developer must provide both a Java and a JOCL implementation; Aparapi chooses which one gets executed.


SIMILAR INTERESTING/RELATED WORK

Tidepowerd

– Offers a similar solution for .NET

– NVIDIA cards only at present

http://www.tidepowerd.com/

java-gpu

– An open source project for extracting kernels from nested loops

– Extracts code structure from bytecode

– Creates CUDA behind the scenes

http://code.google.com/p/java-gpu/

RiverTrail

– Intel’s recently released open source project. Converts JavaScript to OpenCL at runtime (via a Firefox plugin).

https://github.com/RiverTrail/RiverTrail/wiki


SUMMARY

APUs/GPUs offer unprecedented performance for the appropriate workload

Don’t assume everything can/should execute on the APU/GPU

Profile your Java code to uncover potential parallel opportunities

Aparapi provides an ideal framework for executing data-parallel code on the GPU

Find out more about Aparapi at http://aparapi.googlecode.com

Please participate in the Aparapi Open Source community


QUESTIONS

Disclaimer & Attribution

The information presented in this document is for informational purposes only and may contain technical inaccuracies, omissions and typographical errors.

The information contained herein is subject to change and may be rendered inaccurate for many reasons, including but not limited to product and roadmap changes, component and motherboard version changes, new model and/or product releases, product differences between differing manufacturers, software changes, BIOS flashes, firmware upgrades, or the like. There is no obligation to update or otherwise correct or revise this information. However, we reserve the right to revise this information and to make changes from time to time to the content hereof without obligation to notify any person of such revisions or changes.

NO REPRESENTATIONS OR WARRANTIES ARE MADE WITH RESPECT TO THE CONTENTS HEREOF AND NO RESPONSIBILITY IS ASSUMED FOR ANY INACCURACIES, ERRORS OR OMISSIONS THAT MAY APPEAR IN THIS INFORMATION.

ALL IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR ANY PARTICULAR PURPOSE ARE EXPRESSLY DISCLAIMED. IN NO EVENT WILL ANY LIABILITY TO ANY PERSON BE INCURRED FOR ANY DIRECT, INDIRECT, SPECIAL OR OTHER CONSEQUENTIAL DAMAGES ARISING FROM THE USE OF ANY INFORMATION CONTAINED HEREIN, EVEN IF EXPRESSLY ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

AMD, AMD Radeon, the AMD arrow logo, and combinations thereof are trademarks of Advanced Micro Devices, Inc. All other names used in this presentation are for informational purposes only and may be trademarks of their respective owners.

OpenCL is a trademark of Apple Inc used under license to the Khronos Group, Inc.

NVIDIA, the NVIDIA logo, and CUDA are trademarks or registered trademarks of NVIDIA Corporation.

Java, JVM, JDK and “Write Once, Run Anywhere" are trademarks of Oracle and/or its affiliates.

© 2011 Advanced Micro Devices, Inc. All rights reserved.


BACKUP

ADOPTION CHALLENGES (APARAPI VS EXISTING JAVA GPU BINDINGS)

Task                                                       Emerging GPU bindings   Aparapi
Learn OpenCL/CUDA                                          DIFFICULT               N/A
Locate potential data parallel opportunities               MEDIUM                  MEDIUM
Refactor existing code/data structures                     MEDIUM                  MEDIUM
Create Kernel Code                                         DIFFICULT               EASY
Create code to coordinate execution and buffer transfers   MEDIUM                  N/A
Identify GPU performance bottlenecks                       DIFFICULT               DIFFICULT
Iterate code/debug algorithm logic                         DIFFICULT               MEDIUM
Solve build/deployment issues                              DIFFICULT               MEDIUM
