Condor @ Brookhaven

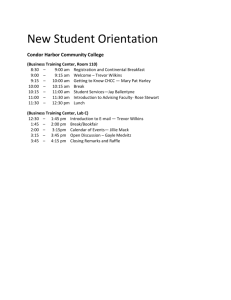

Condor at Brookhaven
Xin Zhao, Antonio Chan
Brookhaven National Lab
CondorWeek 2009
Tuesday, April 21

Outline
• RACF background
• RACF Condor batch system
• USATLAS grid job submission using Condor-G

RACF
• Brookhaven (BNL) is a multi-disciplinary DOE lab.
• The RHIC and ATLAS Computing Facility (RACF) provides computing support for BNL activities in HEP, NP, Astrophysics, etc.
  – RHIC Tier0
  – USATLAS Tier1
• Large installation
  – 7000+ CPUs, 5+ PB of storage, 6 robotic silos with a capacity of 49,000+ tapes
• Storage and computing to grow by a factor of ~5 by 2012.

New Data Center Rising
The new data center will increase floor space by a factor of ~2 in summer 2009.

BNL Condor Batch System
• Introduced in 2003 to replace LSF.
• Steep learning curve; much help from Condor staff.
• Extremely successful implementation.
• Complex use of job slots (formerly VMs) to determine job priority (queues), eviction, suspension and back-filling policies.

Condor Queues
• Originally designed with vertical scalability
  – Complex queue priority configuration per core
  – Maintainable on older hardware with fewer cores
• Changed to horizontal scalability in 2008
  – More and more multi-core hardware now
  – Simplified queue priority configuration per core
  – Reduced administrative overhead

Condor Policy for ATLAS (old)
ATLAS Condor configuration (old)

Condor Policy @ BNL
ATLAS Condor configuration (new)

Condor Queue Usage

Job Slot Occupancy (RACF)
• Left-hand plot is for 01/2007 to 06/2007.
• Right-hand plot is for 06/2007 to 05/2008.
• Occupancy remained at 94% between the two periods.

Job Statistics (2008)
• Condor usage by RHIC experiments increased by 50% (in number of jobs) and by 41% (in CPU time) since 2007.
• PHENIX executed ~50% of its jobs in the general queue.
• General queue jobs amounted to 37% of all RHIC Condor jobs during this period.
• General queue efficiency increased from 87% to 94% since 2007.

Near-Term Plans
• Continue integration of Condor with Xen virtual systems.
• OS upgrade to 64-bit SL5.x: any issues with Condor?
• Condor upgrade from 6.8.5 to stable series 7.2.x
• Short on manpower: open Condor admin position at BNL. If interested, please talk to Tony Chan.

Condor-G Grid Job Submission
• BNL, as USATLAS Tier1, provides support to the ATLAS PanDA production system.

PanDA Job Flow
• One critical service is to maintain PanDA autopilot submission using Condor-G
  – Very large number (~15000) of current pilot jobs as a single user
  – Need to maintain a very high submission rate
    • Autopilot attempts to always keep a set number of pending jobs in every queue of every remote USATLAS production site
  – Three Condor-G submit hosts in production
    • Quad-core Intel Xeon E5430 @ 2.66GHz, 16G memory and two 750GB SATA drives (mirrored disks)

Weekly OSG Gratia Job Count Report for USATLAS VO
• We work closely with the Condor team to tune Condor-G for better performance. Many improvements have been suggested and implemented by the Condor team.

New Features and Tuning of Condor-G Submission (not a complete list)
• Gridmanager publishes resource ClassAds to the collector, so users can easily query the grid job submission status for all remote resources.

    $> condor_status -grid
    Name                   Job Limit  Running  Submit Limit  In Progress
    gt2 atlas.bu.edu:211        2500      376           200            0
    gt2 gridgk04.racf.bn        2500        1           200            0
    gt2 heroatlas.fas.ha        2500      100           200            0
    gt2 osgserv01.slac.s        2500      611           200            0
    gt2 osgx0.hep.uiuc.e        2500        5           200            0
    gt2 tier2-01.ochep.o        2500      191           200            0
    gt2 uct2-grid6.mwt2.        2500     1153           200            0
    gt2 uct3-edge7.uchic        2500        0           200            0

• Nonessential jobs
  – Condor assumes every job is important; it carefully holds and retries
    • A pile-up of held jobs often clogs Condor-G and prevents it from submitting new jobs
  – A new job attribute, Nonessential, is introduced.
    • Nonessential jobs will be aborted instead of being put on hold.
  – Suited for “pilot” jobs
    • Pilots are job sandboxes, not real job payloads. Pilots themselves are not as essential as real jobs.
    • The job payload connects to the PanDA server through its own channel. The PanDA server knows the payloads' status and can abort them directly if needed.
• GRID_MONITOR_DISABLE_TIME
  – New configurable Condor-G parameter
    • Controls how long Condor-G waits, after a grid monitor failure, before submitting a new grid monitor job
  – Old default value of 60 minutes is too long
    • New job submission quite often pauses during the wait time, so job submission cannot be sustained at a high rate
  – New value is 5 minutes
    • Much better submission rate seen in production.
  – Condor-G developers plan to trace the underlying grid monitor failures in the Globus context
• Separate throttle for limiting jobmanagers based on their role
  – Job submission won’t compete with job stage_out/removal
• Globus bug fix
  – GRAM client (inside GAHP) stops receiving connections from the remote jobmanager for job status updates.
  – We ran a cron job to periodically kill the GAHP server to clear up the connection issue, at the cost of a slower job submission rate.
  – New Condor-G binary is compiled against newer Globus libraries; so far so good. Need more time to verify.
• Some best practices in Condor-G submission
  – Reduce frequency of voms-proxy renewal on the submit host
    • Condor-G aggressively pushes out new proxies to all jobs
    • Frequent renewal of voms-proxy on the submit hosts slows down job submission
  – Avoid hard-killing jobs (-forcex) from the client side
    • Reduces job debris on the remote gatekeepers
    • On the other hand, on the remote gatekeepers, we need to clean up debris more aggressively

Near-Term Plans
Continue the good collaboration with the Condor team for better performance of Condor/Condor-G in our production environment.
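
Illustrative sketch (not from the slides): the autopilot behaviour described earlier, keeping a set number of pending pilots in every remote queue, can be pictured as a small top-up calculation. The Python below is a minimal sketch under stated assumptions, not the PanDA autopilot code. The names `QueueState` and `pilots_to_submit` are hypothetical. It also assumes that the Job Limit and Submit Limit columns of the `condor_status -grid` listing act as a cap on total submitted jobs per resource and a cap on simultaneous in-progress submissions, respectively.

```python
from dataclasses import dataclass


@dataclass
class QueueState:
    """Snapshot of one remote queue (hypothetical structure for illustration)."""
    name: str
    pending: int       # pilots submitted but not yet running
    running: int       # pilots currently running
    in_progress: int   # submissions currently being processed


def pilots_to_submit(state: QueueState, target_pending: int,
                     job_limit: int, submit_limit: int) -> int:
    """Return how many new pilots to submit to bring `pending` up to the target.

    The result is capped by the remaining room under the per-resource job
    limit and by the remaining room under the per-resource submit throttle
    (both interpretations are assumptions, see the lead-in).
    """
    deficit = max(0, target_pending - state.pending)
    headroom = max(0, job_limit - (state.pending + state.running))
    throttle = max(0, submit_limit - state.in_progress)
    return min(deficit, headroom, throttle)


if __name__ == "__main__":
    # Example values: limits taken from the listing, target_pending is made up.
    q = QueueState(name="gt2 atlas.bu.edu", pending=50, running=376, in_progress=0)
    print(pilots_to_submit(q, target_pending=300, job_limit=2500, submit_limit=200))
```

With these example numbers the deficit is 250 pilots, but the submit throttle of 200 is the binding limit, which is one way to see why submit-side throttles and pauses matter for sustaining a high submission rate.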