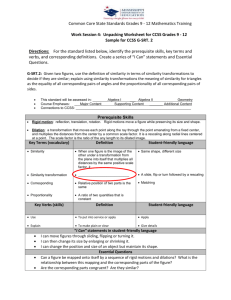


Cognitive data analysis

Nikolay Zagoruiko
Institute of Mathematics of the Siberian Division of the Russian Academy of Sciences, Pr. Koptyug 4, 630090 Novosibirsk, Russia, zag@math.nsc.ru

Area of interests
Data Analysis, Pattern Recognition, Empirical Prediction, Discovery of Regularities, Data Mining, Machine Learning, Knowledge Discovery, Intelligent Data Analysis, Cognitive Calculations

Human-centered approach
• The person as an object of study: investigating human cognitive mechanisms. New strategic tasks cannot be solved without a rapid rise in the intellectual level of the tools for observation, analysis and control.
• The person as a subject who uses the results of the analysis. The complexity of these tools and the nature of the results they produce make the results hard to understand. Under these conditions the person is effectively excluded from the man-machine control system.

Specificity of DM tasks:
• Great volumes of data
• Attributes of mixed types
• Number of attributes >> number of objects
• Presence of noise and missing values
• No information about distributions and dependences

Ontology of DM
The abundance of methods results from the absence of a uniform approach to solving tasks of different types.

What can we learn from the person? What decision rules does the person use?

[Figure: 1967 experiment, Recognition]

[Figure: 1967 experiment, Taxonomy]

1. A person understands the result if the classes are divided by perpendicular planes.

[Figure: classes in the (x, y) plane, including the oblique boundary X = 0.8Y - 3 and the transformed axes X', Y']

2. A person understands the result if the classes are described by standards.

[Figure: classes in the (x, y) plane with standards marked by asterisks, shown in axes X', Y']

The unique human ability to recognize hard-to-distinguish patterns is based on the ability to select informative features.

Does the person switch from one basis to another when solving different classification tasks?
Most likely, people use some universal psycho-physiological function.

Our hypothesis: the basic function used by the person in classification, recognition, feature selection, etc. is a measure of similarity.

Functions of Similarity

1) $FS_1(a,b) = 1 - \sqrt{\sum_{i=1}^{n} w_i (x_i^a - x_i^b)^2}$

2) $FS_2(a,b) = 1 - \sum_{i=1}^{n} w_i\,|x_i^a - x_i^b|$

3) $FS_3(a,b) = 1 - \max_i |x_i^a - x_i^b|$

4) $FS_4(a,b) = \sum_{i=1}^{n} w_i\,\dfrac{\min(x_i^a, x_i^b)}{\max(x_i^a, x_i^b)}$

5) $FS_5(a,b) = \dfrac{1}{e^{\sum_{i=1}^{n} (x_i^a - x_i^b)^2}}$, ...

Here $x_i^a$ and $x_i^b$ are the values of feature $i$ for objects $a$ and $b$, and $w_i$ stands for the per-feature coefficient (its original symbol is not legible in the source).

Similarity is not an absolute but a relative category
Is object b close to a, or is it distant? We need to know the answer to the question: in competition with what?

[Figure: objects a and b alone, then the same pair together with a competing object c]

Function of Competitive (Rival) Similarity (FRiS)

$$F(z, 1\,|\,2) = \frac{r_2 - r_1}{r_2 + r_1}$$

[Figure: object z at distance r1 from pattern A and r2 from pattern B; F varies between +1 and -1]

Compactness
All pattern recognition methods are based on the hypothesis of compactness (Braverman E.M., 1962). Patterns are compact if:
- the number of boundary points is small compared with the total number of points;
- the patterns are separated from each other by borders that are not too elaborate.
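The competitive similarity function F(z, 1 | 2) = (r2 - r1) / (r2 + r1) introduced above can be illustrated with a minimal Python sketch. The Euclidean distance, the function names `euclidean` and `fris`, and the convention of returning 0 when both distances are zero are assumptions made for this illustration; the slides do not fix any of them.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two points given as sequences of numbers."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def fris(z, own, rival, dist=euclidean):
    """Competitive similarity F(z, own | rival) = (r2 - r1) / (r2 + r1).

    r1 is the distance from z to `own`, r2 the distance from z to `rival`.
    The value lies in [-1, +1]: +1 when z coincides with `own`,
    -1 when z coincides with `rival`, 0 when z is equidistant from both.
    """
    r1 = dist(z, own)
    r2 = dist(z, rival)
    if r1 + r2 == 0:
        # Assumption: if z coincides with both objects, treat it as neutral.
        return 0.0
    return (r2 - r1) / (r2 + r1)


if __name__ == "__main__":
    a, b = (0.0, 0.0), (1.0, 0.0)
    for z in [(0.0, 0.0), (0.25, 0.0), (0.5, 0.0), (1.0, 0.0)]:
        print(z, fris(z, a, b))
```

Unlike the absolute measures FS1 to FS5, the value depends on the rival: the same pair (z, own) receives a different score when the competing object moves.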
[Figure: arrangements of patterns A and B]

Compactness
• Similarity between objects of one pattern should be maximal
• Similarity between objects of different patterns should be minimal

Compactness
Defensive capacity: compact patterns should satisfy the condition of maximal similarity between objects of the same pattern.

$$F(j, i\,|\,b) = \frac{r_2 - r_1}{r_2 + r_1}, \qquad D_i = \frac{1}{M_A}\sum_{j=1}^{M_A} F(j, i\,|\,b)$$

Here $r_1$ is the distance from object $j$ to object $i$ of its own pattern A, and $r_2$ is the distance from $j$ to object $b$ of the rival pattern B.

Compactness
Tolerance: compact patterns should satisfy the condition of maximal difference between these objects and the objects of other patterns.

$$F(q, s\,|\,i) = \frac{r_2 - r_1}{r_2 + r_1}, \qquad T_i = \frac{1}{M_B}\sum_{q=1}^{M_B} F(q, s\,|\,i)$$

Here $r_1$ is the distance from object $q$ of pattern B to object $s$ of its own pattern, and $r_2$ is the distance from $q$ to object $i$ of pattern A.

$$C_i = \frac{D_i + T_i}{2}, \qquad C_A = \frac{1}{M_A}\sum_{i=1}^{M_A} C_i, \qquad C_B = \frac{1}{M_B}\sum_{q=1}^{M_B} C_q, \qquad C = C_A \cdot C_B$$

Selection of the standards (stolps)
Algorithm FRiS-Stolp: the stolp is the object with $\max_i C_i$, where $C_i = (D_i + T_i)/2$.

[Figure: value of FRiS for points on a plane]

Criteria
Informativeness by Fisher for the normal distribution:

$$I_F = \frac{|\mu_1 - \mu_2|}{\sigma_1^2 + \sigma_2^2}$$

Compactness has the same meaning and can be used as a criterion of informativeness that is invariant to the distribution law and to the ratio of N to M.

Selection of features
[Diagram: initial set of features X0 = {1, 2, 3, ..., j, ..., N} -> engine GRAD -> variant of subset X = <1, 2, ..., n> -> criterion FRiS-compactness -> good / bad]

Algorithm GRAD
It is based on a combination of two greedy algorithms: forward and backward search.

At the forward stage the algorithm Addition is used (J.L. Barabash, 1963):

$$L_A = N + (N-1) + (N-2) + \dots + (N-n+1) = \sum_{j=0}^{n-1} (N - j)$$

At the backward stage the algorithm Deletion is used (Merill T. and Green O.M., 1963):

$$L_D = N + (N-1) + (N-2) + \dots + (n+1) = \sum_{j=0}^{N-n-1} (N - j)$$

Algorithm AdDel
To reduce the influence of accumulated errors, a relaxation method is applied:
• n1 is the number of most informative attributes added to the subsystem (Add);
• n2 < n1 is the number of least informative attributes eliminated from the subsystem (Del).

Relaxation method: n steps forward, n/2 steps back.

[Figure: Algorithm AdDel. Reliability (R) of recognition in feature spaces of different dimension.]
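The Add/Del relaxation scheme (n1 greedy additions followed by n2 < n1 greedy deletions) can be sketched as follows. This is only an illustrative sketch: the function name `add_del`, the `quality` callback (any subset criterion, for example a FRiS-compactness estimate), the stopping rule at `target_size`, and the requirement that feature identifiers be unique are all assumptions, not details taken from the slides.

```python
def add_del(features, quality, target_size, n1=2, n2=1):
    """Greedy AdDel sketch: n1 steps forward, n2 steps back, repeated.

    features    -- iterable of unique feature identifiers
    quality     -- callable taking a list of features, returning a score
                   (higher is better); hypothetical stand-in for the criterion
    target_size -- assumed stopping rule: desired size of the subset
    """
    if not 0 <= n2 < n1:
        raise ValueError("need 0 <= n2 < n1 so that every cycle makes progress")
    remaining = list(features)
    selected = []
    target_size = min(target_size, len(remaining))

    def add_one():
        # Add: take the feature whose inclusion gives the best subset.
        best = max(remaining, key=lambda f: quality(selected + [f]))
        remaining.remove(best)
        selected.append(best)

    def delete_one():
        # Del: drop the feature whose removal leaves the best subset.
        worst = max(selected,
                    key=lambda f: quality([g for g in selected if g != f]))
        selected.remove(worst)
        remaining.append(worst)

    while len(selected) < target_size:
        for _ in range(n1):
            if remaining:
                add_one()
        if len(selected) >= target_size:
            # Trim any overshoot with deletions, then stop.
            while len(selected) > target_size:
                delete_one()
            break
        for _ in range(n2):
            delete_one()
    return selected


if __name__ == "__main__":
    weights = {0: 5.0, 1: 1.0, 2: 4.0, 3: 0.5}
    print(add_del(weights, lambda s: sum(weights[f] for f in s), 2))
```

With an additive toy criterion such as the one in the usage lines, deletions never undo a good addition; the backward steps matter when features interact, which is the situation the relaxation method targets.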
R(AdDel) > R(DelAd) > R(Ad) > R(Del)

Algorithm GRAD
• AdDel can work with groups of attributes (granules) of different capacity m = 1, 2, 3, ... The granules can be formed by exhaustive search.
• But: the problem of combinatorial explosion! Solution: rely on the individual informativeness of attributes. This makes it possible to granulate only the most informative part of the attributes.

[Figure: dependence of the frequency f of hits in an informative subsystem on the serial number L by individual informativeness]

Algorithm GRAD (GRanulated AdDel)
1. Independent testing of N attributes. Selection of the $m_1 \ll N$ first best ($m_1$ granules of power 1).
2. Forming $C_{m_1}^2$ combinations. Selection of the $m_2 \ll C_{m_1}^2$ first best ($m_2$ granules of power 2).
3. Forming $C_{m_1}^3$ combinations. Selection of the $m_3 \ll C_{m_1}^3$ first best ($m_3$ granules of power 3).

M = <m1, m2, m3> is the set of secondary attributes (granules). AdDel selects the m* << |M| best granules, which include n* << N attributes:

X = <x2,3 x6,5 x9, x25, ...>

Criteria
Comparison of the criteria (CV, FRiS)

[Figure: curves Fs and U versus noise level (0.05 to 0.3), vertical axis from 0.6 to 1.1. Setup: N = 100, M = 2*100, mt = 2*35, mC = 2*65, plus noise.]

[Figure: order of attributes by informativeness; C = 0.661 and C = 0.883]

Some real tasks

| Task | K | M | N |
|---|---|---|---|
| Medicine: Diagnostics of Diabetes II type | 3 | 43 | 5520 |
| Medicine: Diagnostics of Prostate Cancer | 4 | 322 | 17153 |
| Medicine: Recognition of type of Leukemia | 2 | 38 | 7129 |
| Medicine: Microarray data | 2 | 1000 | 500000 |
| Medicine: 9 genetic tables | 2 | 50-150 | 2000-12000 |
| Physics: Complex analysis of spectra | 7 | 20-400 | 1024 |
| Commerce: Forecasting of book selling (Data Mining Cup 2009) | - | 4812 | 1862 |

Recognition of two types of Leukemia: ALL and AML

| | Total | ALL | AML |
|---|---|---|---|
| Training set | 38 | 27 | 11 |
| Control set | 34 | 20 | 14 |

N = 7129

I. Guyon, J. Weston, S. Barnhill, V. Vapnik. Gene Selection for Cancer Classification using Support Vector Machines. Machine Learning, 2002, 46(1-3), pp. 389-422.
Pentium, T = 15 sec
Pentium, T = 3 hours
In the first 27 subspaces P = 34/34

Training set (38 objects): Vsuc, Vext, Vmed. Test set (34 objects): Tsuc, Text, Tmed, P.

| N g | Vsuc | Vext | Vmed | Tsuc | Text | Tmed | P |
|---|---|---|---|---|---|---|---|
| 7129 | 0.95 | 0.01 | 0.42 | 0.85 | -0.05 | 0.42 | 29 |
| 4096 | 0.82 | -0.67 | 0.30 | 0.71 | -0.77 | 0.34 | 24 |
| 2048 | 0.97 | 0.00 | 0.51 | 0.85 | -0.21 | 0.41 | 29 |
| 1024 | 1.00 | 0.41 | 0.66 | 0.94 | -0.02 | 0.47 | 32 |
| 512 | 0.97 | 0.20 | 0.79 | 0.88 | 0.01 | 0.51 | 30 |
| 256 | 1.00 | 0.59 | 0.79 | 0.94 | 0.07 | 0.62 | 32 |
| 128 | 1.00 | 0.56 | 0.80 | 0.97 | -0.03 | 0.46 | 33 |
| 64 | 1.00 | 0.45 | 0.76 | 0.94 | 0.11 | 0.51 | 32 |
| 32 | 1.00 | 0.45 | 0.65 | 0.97 | 0.00 | 0.39 | 33 |
| 16 | 1.00 | 0.25 | 0.66 | 1.00 | 0.03 | 0.38 | 34 |
| 8 | 1.00 | 0.21 | 0.66 | 1.00 | 0.05 | 0.49 | 34 |
| 4 | 0.97 | 0.01 | 0.49 | 0.91 | -0.08 | 0.45 | 31 |
| 2 | 0.97 | -0.02 | 0.42 | 0.88 | -0.23 | 0.44 | 30 |
| 1 | 0.92 | -0.19 | 0.45 | 0.79 | -0.27 | 0.23 | 27 |

I. Guyon, J. Weston, S. Barnhill, V. Vapnik (FRE)

Decision rules are written as name of gene / weight.

| FRiS | Decision rules | P |
|---|---|---|
| 0.72656 | 537/1, 1833/1, 2641/2, 4049/2 | 34 |
| 0.71373 | 1454/1, 2641/1, 4049/1 | 34 |
| 0.71208 | 2641/1, 3264/1, 4049/1 | 34 |
| 0.71077 | 435/1, 2641/2, 4049/2, 6800/1 | 34 |
| 0.70993 | 2266/1, 2641/2, 4049/2 | 34 |
| 0.70973 | 2266/1, 2641/2, 2724/1, 4049/2 | 34 |
| 0.70711 | 2266/1, 2641/2, 3264/1, 4049/2 | 34 |
| 0.70574 | 2641/2, 3264/1, 4049/2, 4446/1 | 34 |
| 0.70532 | 435/1, 2641/2, 2895/1, 4049/2 | 34 |
| 0.70243 | 2641/2, 2724/1, 3862/1, 4049/2 | 34 |

| Decision rules | P |
|---|---|
| 2641/1, 4049/1 | 33 |
| 2641/1 | 32 |

Zagoruiko N., Borisova I., Dyubanov V., Kutnenko O.

| Best features | SVM | FRiS |
|---|---|---|
| 803, 4846 | 30 (88%) | 33 (97%) |
| 4846 | 27 (79%) | 30 (88%) |

[Figure: projection of the training set onto features 2641 and 4049; classes AML and ALL]

Comparison with 10 methods
• Jeffery I., Higgins D., Culhane A. Comparison and evaluation of methods for generating differentially expressed gene lists from microarray data.
• http://www.biomedcentral.com/1471-2105/7/359

9 tasks on microarray data. 10 methods of feature selection. Independent attributes. Selection of the n first (best). Criterion: minimum of errors on CV, 10 times by 50%. Decision rules: Support Vector Machine (SVM), Between Group Analysis (BGA), Naive Bayes Classification (NBC), K-Nearest Neighbors (KNN).
Methods of selection

| Methods | Results |
|---|---|
| Significance analysis of microarrays (SAM) | 42 |
| Analysis of variance (ANOVA) | 43 |
| Empirical Bayes t-statistic | 32 |
| Template matching | 38 |
| maxT | 37 |
| Between group analysis (BGA) | 43 |
| Area under the receiver operating characteristic curve (ROC) | 37 |
| Welch t-statistic | 39 |
| Fold change | 47 |
| Rank products | 42 |
| FRiS-GRAD | 12 |

• Empirical Bayes t-statistic: for a medium-sized set of objects
• Area under a ROC curve: for small noise and a large set
• Rank products: for large noise and a small set

Results of comparison

| Task | N0 | m1/m2 | max of 4 | GRAD |
|---|---|---|---|---|
| ALL1 | 12625 | 95/33 | 100.0 | 100.0 |
| ALL2 | 12625 | 24/101 | 78.2 | 80.8 |
| ALL3 | 12625 | 65/35 | 59.1 | 73.8 |
| ALL4 | 12625 | 26/67 | 82.1 | 83.9 |
| Prostate | 12625 | 50/53 | 90.2 | 93.1 |
| Myeloma | 12625 | 36/137 | 82.9 | 81.4 |
| ALL/AML | 7129 | 47/25 | 95.9 | 100.0 |
| DLBCL | 7129 | 58/19 | 94.3 | 93.5 |
| Colon | 2000 | 22/40 | 88.6 | 89.5 |
| average | | | 85.7 | 88.4 |

Unsettled problems
• Censoring of the training set
• Recognition with boundary
• Stolp + corridor (FRiS + LDR)
• Imputation
• Associations
• Unification of tasks of different types (UC+X)
• Optimization of algorithms
• Implementation of the software system (OTEX 2)
• Applications (medicine, genetics, ...)
• .....

Conclusion
FRiS-function:
1. Provides an effective measure of similarity, informativeness and compactness
2. Provides unification of methods
3. Provides high quality of decisions

Publications: http://math.nsc.ru/~wwwzag

Thank you!
Questions, please?
Stolp
Decision rules: choosing the standards (stolps)

The stolp is an object which protects its own objects and does not attack another's objects.
• Defensive capacity: the similarity of the pattern's objects to the stolp should be maximal (a minimum of missed targets).
• Tolerance: the similarity to another's objects should be minimal (a minimum of false alarms).

Stolp
Algorithm FRiS-Stolp

Compact patterns should satisfy two conditions.

Defensive capacity: maximal similarity of the objects to stolp i.

$$F(j,i)\,|\,b = \frac{R_2 - R_1}{R_2 + R_1}, \qquad DC_i = \frac{1}{M_A}\sum_{j=1}^{M_A} F(j,i)\,|\,b$$

Tolerance: maximal difference of other patterns' objects from stolp i.

$$T_i = \frac{1}{M_B}\sum_{q=1}^{M_B} F(q,s)\,|\,i$$

$$S_i = \frac{1}{2}\,(DC_i + T_i)$$

[Figure: objects j and i of pattern A and objects q, s, b of pattern B, with distances R1 and R2]

Decision rules
[Figure: Algorithm FRiS-Stolp]

[Figure: examples of taxonomy by the FRiS-Class algorithm]

Comparison of FRiS-Class with other taxonomy algorithms
[Figure: curves for FRiS-Cluster, Kmeans, Forel, Scat and FRiS-Tax; horizontal axis K from 2 to 15, vertical axis from 0.3 to 0.9]
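The stolp selection rule S_i = (DC_i + T_i) / 2 can be illustrated with a short Python sketch for two patterns A and B. Several details are assumptions made for this illustration and are not stated on the slides: Euclidean distance, taking b as the object of B nearest to j, taking s as the nearest other object of B to q, including j = i in the average, and the names `dist`, `fris` and `select_stolp`. Pattern B must contain at least two objects for s to exist.

```python
import math


def dist(a, b):
    """Euclidean distance (an assumed choice of metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def fris(r1, r2):
    """(R2 - R1) / (R2 + R1); returns 0 if both distances are zero (assumption)."""
    return 0.0 if r1 + r2 == 0 else (r2 - r1) / (r2 + r1)


def select_stolp(A, B):
    """Return (index, S_i) of the object of A with the largest S_i.

    DC_i: mean over j in A of F(j, i | b), with b the nearest object of B to j.
    T_i:  mean over q in B of F(q, s | i), with s the nearest other object
          of B to q.
    """
    best_idx, best_score = None, -math.inf
    for i, cand in enumerate(A):
        # Defensive capacity: how strongly objects of A prefer cand over B.
        dc = sum(
            fris(dist(j, cand), min(dist(j, b) for b in B)) for j in A
        ) / len(A)
        # Tolerance: how strongly objects of B prefer their own neighbours
        # over cand.
        t = 0.0
        for qi, q in enumerate(B):
            r1 = min(dist(q, s) for si, s in enumerate(B) if si != qi)
            t += fris(r1, dist(q, cand))
        t /= len(B)
        score = (dc + t) / 2
        if score > best_score:
            best_idx, best_score = i, score
    return best_idx, best_score


if __name__ == "__main__":
    A = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    B = [(10.0, 0.0), (11.0, 0.0)]
    print(select_stolp(A, B))
```

In this toy example the middle object of A is selected: it is the closest on average to the other objects of A while still being far from B.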