Chapter 12 Section 1

advertisement

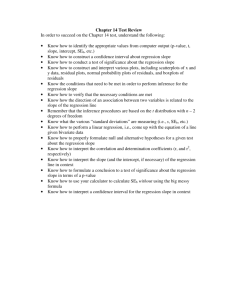

Inference for Linear Regression

Students will be able to check the conditions for performing inference about the slope $\beta$ of the population (true) regression line, interpret computer output from a least-squares regression analysis, construct and interpret a confidence interval for the slope $\beta$ of the population (true) regression line, and perform a significance test about the slope $\beta$ of the population (true) regression line.

In the scatterplot on p. 739, the line drawn is the population regression line, because it is computed from all the observations in the population. If we take a sample from the population, we still use the equation $\hat{y} = a + bx$ for the sample regression line. The slope $b$ will most likely vary from sample to sample, and the pattern of variation in $b$ is described by its sampling distribution.

Sampling Distribution of b

Confidence intervals and significance tests about the slope of the population regression line are based on the sampling distribution of $b$, the slope of the sample regression line. We describe this approximate sampling distribution by its shape, center, and spread:

Shape: a strong linear pattern in a Normal probability plot of the sample slopes shows that the sampling distribution of $b$ is approximately Normal.

Center: the mean of the sampling distribution of $b$ is $\beta$, so $b$ is an unbiased estimator of the true slope.

Spread: the standard deviation of the sampling distribution of $b$ is $\sigma_b = \sigma / (\sigma_x \sqrt{n})$, where $\sigma$ is the standard deviation of the responses about the population line and $\sigma_x$ is the standard deviation of the $x$ values.

Conditions for Regression Inference

Linear: the actual relationship between $x$ and $y$ is linear. For any fixed value of $x$, the mean response $\mu_y$ falls on the population (true) regression line $\mu_y = \alpha + \beta x$. The slope $\beta$ and intercept $\alpha$ are usually unknown parameters.
Independent: individual observations are independent of each other (one does not affect another).

Normal: for any fixed value of $x$, the response $y$ varies according to a Normal distribution.

Equal variance: the standard deviation of $y$ (call it $\sigma$) is the same for all values of $x$. The common standard deviation $\sigma$ is usually an unknown parameter.

Random: the data come from a well-designed random sample or randomized experiment.

The regression model says that for each fixed value of $x$, the response $y$ varies Normally about the mean $\mu_y = \alpha + \beta x$ with the same standard deviation $\sigma$. The fitted line then gives a predicted value $\hat{y}$ for any given $x$. Always check the conditions before doing inference about the regression model. See the example on pp. 743-744.

Estimating the Parameters

When the conditions are met, we can estimate the unknown parameters. The slope $b$ of the least-squares regression line is an unbiased estimator of the true slope $\beta$, and the $y$ intercept $a$ is an unbiased estimator of the true $y$ intercept $\alpha$. The remaining parameter is the standard deviation $\sigma$, which describes the variability of the response $y$ about the population (true) regression line. Because the residuals show how much $y$ varies about the line, we estimate $\sigma$ with the standard deviation of the residuals, $s = \sqrt{\sum \text{residuals}^2 / (n - 2)}$ (formula at the top of p. 745). See the example on p. 745.

It is possible to do inference about any of the three parameters. However, the slope $\beta$ is usually the most important parameter in a regression problem, so we focus on it.

Sampling Distribution of b (continued)

For the spread: since we do not know $\sigma$, we estimate it with $s$, the standard deviation of the residuals. We then estimate the spread of the sampling distribution of $b$ with the standard error of the slope, $SE_b = s / (s_x \sqrt{n - 1})$ (formula on p. 746), where $s_x$ is the sample standard deviation of the $x$ values. Standardizing $b$ gives $z = (b - \beta)/\sigma_b$ (the middle formula on p. 746). Replacing $\sigma_b$ with $SE_b$ gives the statistic we actually use, $t = (b - \beta)/SE_b$ (the last formula on p. 746).
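To see the shape, center, and spread of the sampling distribution of $b$ in action, here is a minimal simulation sketch. It assumes a hypothetical population line with $\alpha = 2$, $\beta = 0.5$, $\sigma = 1$ and fixed $x$ values 1 through 10 (all made-up values, not from the text), draws many samples that satisfy the Normal and Equal variance conditions, and records each least-squares slope. The mean of the simulated slopes should land near $\beta$, their standard deviation near $\sigma_b = \sigma/(\sigma_x\sqrt{n})$, and about 95% of them should fall within $1.96\,\sigma_b$ of $\beta$ if the distribution is Normal. The helper name `fit` is hypothetical.

```python
import math
import random
import statistics

# Hypothetical population line mu_y = ALPHA + BETA * x (made-up values)
ALPHA, BETA, SIGMA = 2.0, 0.5, 1.0
XS = list(range(1, 11))  # the same fixed x values are used in every sample


def fit(xs, ys):
    """Return (a, b, s, se_b) for the least-squares line y-hat = a + b*x."""
    n = len(xs)
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    a = y_bar - b * x_bar
    resid = [y - (a + b * x) for x, y in zip(xs, ys)]
    s = math.sqrt(sum(r * r for r in resid) / (n - 2))     # estimates sigma
    se_b = s / (statistics.stdev(xs) * math.sqrt(n - 1))   # estimates sigma_b
    return a, b, s, se_b


rng = random.Random(1)
slopes = []
for _ in range(10_000):
    # Each response varies Normally about the true line with the same sd SIGMA
    ys = [ALPHA + BETA * x + rng.gauss(0, SIGMA) for x in XS]
    slopes.append(fit(XS, ys)[1])

n = len(XS)
# sigma_b = sigma / (sigma_x * sqrt(n)), with sigma_x the population sd of the x values
sigma_b = SIGMA / (statistics.pstdev(XS) * math.sqrt(n))
# Shape check: fraction of slopes within 1.96 sigma_b of beta (about 0.95 if Normal)
inside = sum(abs(slope - BETA) <= 1.96 * sigma_b for slope in slopes) / len(slopes)

one_sample = [ALPHA + BETA * x + rng.gauss(0, SIGMA) for x in XS]
print(f"mean of b = {statistics.fmean(slopes):.3f} (beta = {BETA})")
print(f"sd of b   = {statistics.stdev(slopes):.4f} (sigma_b = {sigma_b:.4f})")
print(f"fraction within 1.96 sigma_b of beta = {inside:.3f}")
print(f"SE_b from one sample = {fit(XS, one_sample)[3]:.4f}")
```

For fixed $x$ values, $\sigma_x\sqrt{n}$ and $s_x\sqrt{n-1}$ are the same number (both equal $\sqrt{\sum (x - \bar{x})^2}$), which is why $SE_b$ from a single sample is a natural estimate of $\sigma_b$ once $s$ replaces the unknown $\sigma$.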
The degrees of freedom are $n - 2$ rather than $n - 1$: we subtract 2 because two parameters (the slope and the intercept) must be estimated from the data before the residuals can be computed.

Constructing a Confidence Interval for the Slope

The slope $\beta$ is the rate of change of the mean response as the explanatory variable increases, in the model $\mu_y = \alpha + \beta x$. A confidence interval is more useful than the point estimate $b$ alone because it shows how precise the estimate $b$ is likely to be. The general form (statistic) $\pm$ (critical value) $\times$ (standard deviation of statistic) becomes $b \pm t^* SE_b$, with $t^*$ taken from the $t$ distribution with $n - 2$ degrees of freedom. See the yellow box on p. 747 and the example on pp. 747-748.

Performing a Significance Test for the Slope

The null hypothesis has the general form $H_0: \beta = \beta_0$, where $\beta_0$ is the hypothesized value. The general test statistic (statistic $-$ parameter) / (standard deviation of statistic) becomes $t = (b - \beta_0)/SE_b$. To find the P-value, use the $t$ distribution with $n - 2$ degrees of freedom. See the yellow box on p. 751, and work through the remaining examples in this section for clarification.
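The confidence interval and significance test above can be sketched end to end in Python. This is a minimal illustration, assuming a small made-up dataset ($n = 5$) and a two-sided alternative $H_a: \beta \ne 0$; the helper names `fit`, `t_pdf`, `t_area`, and `t_critical` are hypothetical. Since the standard library has no $t$ distribution, the sketch integrates the $t$ density numerically with Simpson's rule and finds $t^*$ by bisection. In practice you would read $t^*$ from a table and the P-value from computer output instead.

```python
import math
import statistics


def fit(xs, ys):
    """Return (b, se_b): the least-squares slope and its standard error."""
    n = len(xs)
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    a = y_bar - b * x_bar
    # s = sqrt(sum of squared residuals / (n - 2))
    s = math.sqrt(sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / (n - 2))
    return b, s / (statistics.stdev(xs) * math.sqrt(n - 1))


def t_pdf(u, df):
    """Density of the t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + u * u / df) ** (-(df + 1) / 2)


def t_area(t, df, steps=2000):
    """P(0 <= T <= t) for t >= 0, by Simpson's rule (steps must be even)."""
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return total * h / 3


def t_critical(conf, df):
    """Critical value t* with P(-t* <= T <= t*) = conf, found by bisection."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_area(mid, df) < conf / 2:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Made-up data for illustration only
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
n = len(xs)
df = n - 2  # two parameters (slope and intercept) were estimated
b, se_b = fit(xs, ys)

# Confidence interval: b +/- t* SE_b
t_star = t_critical(0.95, df)
ci = (b - t_star * se_b, b + t_star * se_b)

# Significance test of H0: beta = beta0 against a two-sided alternative
beta0 = 0.0
t_stat = (b - beta0) / se_b
p_value = 1 - 2 * t_area(abs(t_stat), df)  # P(|T| >= |t|)

print(f"b = {b:.3f}, SE_b = {se_b:.4f}, df = {df}")
print(f"t* = {t_star:.3f}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
print(f"t = {t_stat:.3f}, two-sided P-value = {p_value:.3f}")
```

For a one-sided alternative such as $H_a: \beta > \beta_0$, the P-value is $P(T \ge t)$, which is half of the two-sided value when $t$ falls on the side named by $H_a$. With these made-up data the 95% interval contains 0, which agrees with a two-sided test at $\alpha = 0.05$ failing to reject $H_0: \beta = 0$.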