Automatic Answer Validation


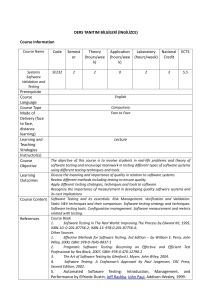

Automatic Answer Validation in Open-Domain Question Answering
Hristo Tanev
TCC, ITC-IRST

Open Domain Question Answering
Which is the capital of Italy? ROME
• Automatic extraction of the answer to a natural language question
• Related fields:
– Information Extraction
– Information Retrieval
• Deeper text analysis

How it works
• Question: Which is the capital of Italy?
• Question processing → Question type: Which-LOCATION; Keywords: capital, Italy
• IR engine over the document collection → selected documents/paragraphs
• Answer extraction → candidate answers: Paris, Milan, Rome, Texas
• Answer evaluation and validation (knowledge bases, abduction) → Paris - 1, Milan - 1, Rome - 2
• Final answer: ROME!

The complexity of the QA task
• The variety of question classes
• The infinite number of possible answer formulations
• Anaphora, ellipsis, synonymy
• Syntactic and semantic analysis, as well as world knowledge, are sometimes necessary

Answer inference
• The problem: how to infer whether a candidate answer is relevant with respect to the question?
• Filtering out the irrelevant answer candidates
• Scoring the candidate answers according to their relevance

Contemporary approaches for answer inference
• Deducing the question logical form (QLF) from the text logical form
Sanda M. Harabagiu, Marius Pasca and Steven Maiorano, “Experiments with Open-Domain Textual Question Answering”, COLING 2000, pp. 292-298
Example:
Q: Why did David Koresh ask FBI for a word processor?
A: Mr. Koresh sent a request for word processor to FBI to enable him to write his revelations
QLF: ask(Koresh, FBI, word processor, reason=?)
ALF: sent request(Koresh, FBI, word processor, reason: to write his revelations)
Heuristic: send request => ask, ALF => QLF

Contemporary approaches for answer inference (continued)
• Abduction, using pragmatic axioms and semantic representation
Sanda Harabagiu, Steven Maiorano, “Finding Answers in Large Collections of Texts: Paragraph Indexing + Abductive Inference”
– Axiom: action1(e1, Person1) & action1(e2, Person2) & related_events(e1, e2) => related(Person1, Person2)
– Q: Who was Lincoln’s Secretary of State?
– A: Booth schemed to kill Lincoln, while his compatriots would murder Vice President Andrew Johnson and Secretary of State William Seward.
– kill(e1, Lincoln) & murder(e2, Secretary of State William Seward) & related_events(e1, e2) => related(Lincoln, Secretary of State William Seward)

Contemporary approaches for answer inference (continued)
• Lexico-syntactic patterns
Q: What forms of international crime exist?
A: …international forms of crime, including terrorism, blackmail and drug-related problems.
• These kinds of patterns are appropriate for certain types of questions, namely those asking for taxonomic information

Contemporary approaches for answer inference - disadvantages
• A very large open-domain knowledge base is required
• Creating knowledge bases is very expensive in time and resources
• Present world knowledge bases (such as WordNet or ThoughtTreasure) are far from comprehensive
• The question and its answer can be lexically very different, so deep semantic analysis is necessary to infer the relations between the discourse entities

Data Driven Answer Inference

The simple approach – ask the oracle
• The database should be large enough to encode a great part of human knowledge
• It should provide the necessary redundancy to contain different reformulations of the facts
• It should be changed dynamically to reflect the recent state of human knowledge about the world
• It should be easily accessible
[Figure: the oracle states “Rome is the capital of Italy”]

World Wide Web as a source of knowledge
• Comprehensive
• Open-domain nature
• Constantly updated and expanded
• Search indices and engines
• Implicit knowledge, e.g. “My journey in Italy began in the capital Rome…”
Disadvantages:
• Knowledge is in unstructured text form
• Access to the search engines may be slow

Web as a gigantic corpus
• Parameters:
– 100,000,000 hosts
– AltaVista indexes over 1,000,000,000 Web pages
– Google indexes 2,000,000,000 Web pages
– 86% of pages are in English, 5.8% in German, 2.36% in French, 1.6% in Italian
• Accessibility: different publicly accessible search engines: AltaVista, Fast, Google, Excite, Lycos, Yahoo!, Northern Light

Validation Statements
Question / Candidate answer → Validation statement
• Who is Galileo? / astronomer → Galileo is an astronomer
• Which is the capital of Italy? / Rome → Rome is the capital of Italy
• Why does the moon turn orange? / because it enters the Earth shadow → The moon turns orange because it enters the Earth shadow

Validation Statements (continued)
The core of data-driven answer validation is searching on-line texts that are similar to the validation statement for a question-answer pair.

The Answer Validation Algorithm
• Question + Answer = Validation Pattern
– Q: How far is it from Denver to Aspen?
– A: 200 miles
– QAP: [Denver … Aspen … 200 miles]
• Submit the validation pattern to a search engine
• Infer the strength of the relation between Question and Answer on the basis of the search engine results

An Example
• QA pair: Who is Galileo? / astronomer
• Submit to AltaVista the query “Galileo”
– AltaVista returns 2000 hits
• Submit to AltaVista the query “astronomer”
– AltaVista returns 10000 hits
• Submit to AltaVista the query Galileo NEAR astronomer
– AltaVista returns 1000 hits
• PMI(Galileo, astronomer) = 14 > threshold

Validation Patterns
The validation pattern is the basis of the query submitted to the search engine to check whether the question and the answer tend to appear together.

Word Level Validation Patterns
• Qk1, Qk2, …: the question keywords
• A: the answer
• The query to the search engine is formed by linking the question keywords and the answer with operators like AND or NEAR
– Qk1 NEAR Qk2 NEAR … NEAR A
– Qk1 AND Qk2 AND … AND A
– (Qk1 AND Qk2 …) NEAR A
• This way, co-occurrence between the question and answer keywords is searched for on the Internet

Phrase Level Validation Patterns
• The validation pattern is composed of syntactic phrases instead of separate keywords
• Example:
– Q: What city had a world fair in 1900?
– A: Paris
– Query: (city NEAR “world fair” NEAR “in 1900”) NEAR Paris
• Pages found by this type of pattern are more likely to contain texts confirming the answer’s correctness
• Disadvantage: such patterns match less often and frequently obtain 0 hits, even for the right answer

Phrase Level Validation Patterns (continued)
• The phrases may be extracted from the question by a parser
• More probable, coherent phrases should be preferred over rare, non-coherent ones
• Phrase frequency may be measured using the Web as a corpus

Sentence Level Patterns
• If the question and the answer are short, the whole validation statement can be submitted to the search engine
• Q: “When did Hawaii become a state?” A: 1959
• Validation statement: “Hawaii became a state in 1959”
• Linguistic transformations are necessary to turn the QA pair into a validation statement

Morphological Variations and Synonymy in Patterns
• The question and answer keywords may occur in different morphological forms
• Synonyms can also appear instead of the original keywords
• Most search engines (Google, AltaVista, Yahoo) allow keyword variants to be combined with the OR operator
• Q: What date did John Lennon die?
• Question pattern: John NEAR Lennon NEAR (die OR died)

Types of data driven answer inference
• Purely quantitative approach: only the number of hits returned by the search engine is considered. Statistical techniques form the core of this class of approaches
• Qualitative approaches: the document content is processed

Statistical answer validation
• The frequencies of the question pattern, the answer and the question-answer validation pattern are obtained through search engine queries
• Example:
– Question: How far is it from Denver to Aspen?
– Question pattern: far NEAR Denver NEAR Aspen
– Answer: 200 miles
– QAP: far NEAR Denver NEAR Aspen NEAR 200 miles
• Search engine:
– Frequency(Question Pattern)
– Frequency(Answer)
– Frequency(QAP)

Statistical answer validation
• Using these frequencies and a number approximating the count of pages indexed by the search engine, the following probabilities of occurrence on the Web are calculated (each as a hit count divided by that page count): P(Question Pattern), P(Answer), P(Question-Answer co-occurrence)

Statistical answer validation
• The probabilities thus calculated are combined in formulae derived from classical co-occurrence formulae.
• Unlike the classical co-occurrence task, we look at how the appearance of the question pattern implies the appearance of the answer. Non-symmetrical formulae are therefore necessary.
• These formulae return a value that indicates the answer’s correctness with respect to the question.

Statistical answer validation
Answer validation formulae:
$$\frac{P(\text{Answer} \mid \text{Question})}{P(\text{Answer})^{2/3}}$$
$$\frac{P(\text{Answer} \mid \text{Question})}{P(\text{Answer})}$$
where
$$P(\text{Answer} \mid \text{Question}) = \frac{P(\text{Question-Answer co-occurrence})}{P(\text{Question Pattern})}$$

Qualitative Approach
• Qualitative answer validation considers the content of the documents obtained by submitting the validation pattern to the search engine
• The distance between the question and answer keywords is considered

Qualitative Approach
• The use of document snippets can speed up this approach
• Certain search engines, like Yahoo! and Google, return text snippets from the documents where the keywords appear

Qualitative Approach. Extraction of data from the snippets.
Q: Who is the first man to fly across the Pacific Ocean?
A: Pangborn
Query submitted to Google: first AND man AND (fly OR flew) AND Pacific AND Ocean AND Pangborn
Text snippets returned:
“Pangborn became the first pilot to cross Pacific”
“Pangborn with co-pilot Hew Herndon flew across Pacific”

Qualitative Approach. Extraction of data from the snippets (continued).
Obtained co-occurrence relations:
(Pangborn, first, Pacific)
(Pangborn, fly, Pacific)
Numerical values obtained from the relations:
• Proportion of question keywords related to the answer (0.6 in the example: 3 question keywords (first, Pacific, fly) are related to the answer, out of a total of 5 question keywords)
• Number of different relations and their length

Qualitative Approach. Calculating answer relevance
• Only distinct co-occurrence relations are considered; co-occurrences included in other ones are excluded
$$PQK \cdot \sum_{r} 2^{\mathrm{length}(r) - 1}$$
where
– PQK: the proportion of question keywords related to the answer
– r: the relations obtained for the answer from the snippets
– length(r): the number of words in the co-occurrence relation r

Qualitative Approach. Calculating answer relevance (continued)
• Keyword density in the co-occurrence relations may also be considered
• The score may be the sum of the keyword densities over all the relations:
$$\sum_{r} \mathrm{KeyDensity}(r)$$

Combining approaches
• The qualitative approach can be used to extract co-occurrences
• Statistical techniques can be used to evaluate these co-occurrences

Experiments and results

Experiment
• The statistical approach was tested
• The TREC10 question-answer list provided by NIST was used: for a total of 492 questions, at most three right and three wrong answers were taken
• Two experiments were carried out:
– Performance of the system on the full set of questions
– Named entity questions
• A baseline model was introduced

Experiment (continued)
• For every 50-byte answer, the algorithm extracts only the entities that correspond to the question type
• The question-answer pairs were evaluated using AltaVista
• Phrase-level patterns and two types of word-level patterns were used

Experiment. The Patterns.
• Three types of patterns:
– Phrase level, Word level with NEAR, Word level with AND
• Example:
– Q: “What city had a world fair in 1900?”
– A: Paris
– Phrase pattern: (city NEAR “world fair” NEAR 1900) NEAR Paris
– Word level with NEAR: (city NEAR world NEAR fair NEAR 1900) NEAR Paris
– Word level with AND: (city AND world AND fair AND 1900) NEAR Paris

Results
Test set: success rate
• 3000 question-answer pairs from TREC10: 81%
• 1500 question-answer pairs for named entity questions from TREC10: 86%
• Baseline model: 52%

Future Directions
Much more to do…
• Improvement of the statistical formulae
• Research on search engine use
• Combining the qualitative and statistical approaches
• Creation of reliable validation patterns
• Introducing new techniques for answer validation
• Integration into a QA system
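The data-driven validation steps in these slides (build a validation pattern, turn hit counts into probabilities, score the answer; or score relations extracted from snippets) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the author's system. All function names are hypothetical. No search engine is queried: the NEAR/AND patterns are only built as strings, and hit counts are passed in directly. `n_pages` is an assumed estimate of the engine's index size. The two statistical scores implement P(Answer | Question) / P(Answer) and P(Answer | Question) / P(Answer)^(2/3), with P(Answer | Question) estimated as P(QAP) / P(Question Pattern). The snippet score assumes the relevance formula reads PQK · Σ_r 2^(length(r) − 1).

```python
from __future__ import annotations

import math
from typing import Iterable, Sequence


def word_level_pattern(question_keywords: Sequence[str], answer: str, op: str = "NEAR") -> str:
    """Build a word-level validation pattern: (Qk1 op Qk2 op ...) NEAR A.

    `op` is "AND" or "NEAR"; multi-word terms are wrapped in double quotes.
    The string is only constructed here; it is not sent to any search engine.
    """
    if op not in ("AND", "NEAR"):
        raise ValueError("op must be 'AND' or 'NEAR'")

    def quote(term: str) -> str:
        return f'"{term}"' if " " in term else term

    joined = f" {op} ".join(quote(k) for k in question_keywords)
    return f"({joined}) NEAR {quote(answer)}"


def validation_scores(freq_q: int, freq_a: int, freq_qa: int, n_pages: float) -> dict[str, float]:
    """Statistical answer validation from hit counts.

    freq_q  -- hits for the question pattern
    freq_a  -- hits for the answer
    freq_qa -- hits for the question-answer validation pattern (QAP)
    n_pages -- ASSUMED estimate of the number of pages the engine indexes
    """
    # Probabilities of occurrence on the Web: hit count / index size.
    p_q, p_a, p_qa = freq_q / n_pages, freq_a / n_pages, freq_qa / n_pages
    if p_q == 0 or p_a == 0:
        # No evidence for the question pattern or the answer: score as zero.
        return {"pmi": 0.0, "exp_2_3": 0.0}
    p_a_given_q = p_qa / p_q  # P(Answer | Question) = P(QAP) / P(Question Pattern)
    return {
        "pmi": p_a_given_q / p_a,                        # P(A|Q) / P(A)
        "exp_2_3": p_a_given_q / math.pow(p_a, 2 / 3),   # P(A|Q) / P(A)^(2/3)
    }


def distinct_relations(relations: Iterable[Sequence[str]]) -> list[frozenset[str]]:
    """Keep distinct co-occurrence relations, dropping any contained in another one."""
    unique = {frozenset(r) for r in relations}
    return [r for r in unique if not any(r < other for other in unique)]


def snippet_relevance(question_keywords: Sequence[str], answer: str,
                      relations: Iterable[Sequence[str]]) -> float:
    """Qualitative score PQK * sum_r 2**(length(r) - 1) over distinct relations.

    Relations are word tuples already extracted from snippets (the extraction
    itself is not shown); length(r) counts the distinct words in r, answer included.
    """
    kept = distinct_relations(relations)
    keywords = set(question_keywords)
    # PQK: share of question keywords that occur in some relation with the answer.
    related = {w for r in kept for w in r if w != answer and w in keywords}
    pqk = len(related) / len(keywords) if keywords else 0.0
    return pqk * sum(2 ** (len(r) - 1) for r in kept)
```

For the Pangborn example, `snippet_relevance(["first", "man", "fly", "Pacific", "Ocean"], "Pangborn", [("Pangborn", "first", "Pacific"), ("Pangborn", "fly", "Pacific")])` evaluates to 0.6 · (2² + 2²) = 4.8, and `word_level_pattern(["city", "world", "fair", "1900"], "Paris", "AND")` reproduces the word-level AND pattern from the experiment slide. Using sets for relations keeps the subset test ("co-occurrences included in others are excluded") a one-liner, at the cost of ignoring word order inside a relation.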