Generalized linear panel data models


11. Categorical choice and survival models

• 11.1 Homogeneous models

• 11.2 Multinomial logit models with random effects

• 11.3 Transition (Markov) models

• 11.4 Survival models

11.1 Homogeneous models

• 11.1.1 Statistical inference

• 11.1.2 Generalized logit

• 11.1.3 Multinomial (conditional) logit

• 11.1.4 Random utility interpretation

• 11.1.5 Nested logit

• 11.1.6 Generalized extreme-value distributions

11.1.1 Statistical inference

• We are now trying to understand a response that is an

unordered categorical variable.

• Let’s assume that the response may take on values 1, 2, ..., c

corresponding to c categories.

• Examples include

– employment choice, such as Valletta (1999E),

– mode of transportation choice, such as the classic work

by McFadden (1978E),

– choice of political party affiliation, such as Brader and

Tucker (2001O)

– marketing brand choice, such as Jain et al. (1994O).

Likelihood

• Denote the probability of choosing the jth category as πit,j =

Prob(yit = j),

– so that πit,1 + … + πit,c = 1.

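• The likelihood for the ith subject at the tth time point is

$$\prod_{j=1}^{c} \pi_{it,j}^{\,y_{it,j}} = \begin{cases} \pi_{it,1} & \text{if } y_{it} = 1 \\ \pi_{it,2} & \text{if } y_{it} = 2 \\ \;\vdots & \\ \pi_{it,c} & \text{if } y_{it} = c \end{cases}$$

– where yit,j is an indicator of the event yit = j.

• Assuming independence, the total log-likelihood is

$$L = \sum_{it} \sum_{j=1}^{c} y_{it,j} \ln \pi_{it,j}$$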

11.1.2 Generalized logit

• Define the linear combinations of explanatory variables

$$V_{it,j} = \mathbf{x}_{it}' \boldsymbol{\beta}_j$$

• and the probabilities

$$\mathrm{Prob}(y_{it} = j) = \pi_{it,j} = \frac{\exp(V_{it,j})}{\sum_{k=1}^{c} \exp(V_{it,k})}$$

• Here βj may depend on the alternative j, whereas the explanatory variables xit do not.

• A convenient normalization for this model is βc = 0.

• With this normalization, we interpret βj as the proportional change in the odds ratio:

$$\ln \frac{\mathrm{Prob}(y_{it} = j)}{\mathrm{Prob}(y_{it} = c)} = V_{it,j} - V_{it,c} = \mathbf{x}_{it}' \boldsymbol{\beta}_j$$
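The generalized logit probabilities and log-likelihood can be sketched in a few lines of Python. This is a minimal illustration, not the estimation routine used for the examples in this chapter; the function names and the numeric values of `betas` and `x` are hypothetical.

```python
import math

def generalized_logit_probs(x, betas):
    """Return [pi_1, ..., pi_c] for one observation.

    x: list of K covariates; betas: list of c coefficient lists,
    with the last one set to zeros (the normalization beta_c = 0).
    """
    v = [sum(xk * bk for xk, bk in zip(x, b)) for b in betas]
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(vj - m) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]

def log_likelihood(observations, betas):
    """Sum of ln(pi) for the chosen category; observations = [(x, j), ...], j 0-based."""
    return sum(math.log(generalized_logit_probs(x, betas)[j])
               for x, j in observations)

# Hypothetical values: K = 2 covariates, c = 3 categories.
betas = [[0.5, -1.0], [0.2, 0.3], [0.0, 0.0]]
x = [1.0, 2.0]
probs = generalized_logit_probs(x, betas)

# Log odds of category 1 against the reference category c equals x'beta_1.
log_odds_1_vs_c = math.log(probs[0] / probs[2])
```

With the reference category's coefficients fixed at zero, the log odds against that category reduce to the linear combination for the category of interest.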

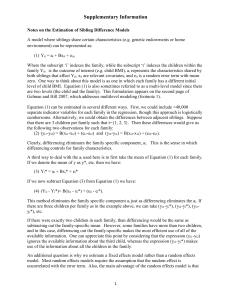

Example 11.2 Job security

• Valletta (1999E) studied the determinants of job turnover.

• There are c = 5 categories: dismissal, left job because of plant closures, “quit,” changed jobs for other reasons and no change in employment.

• Table 11.1 shows that turnover declines as tenure increases.

– To illustrate, consider a typical man in the 1992 sample where we have time = 16 and focus on dismissal probabilities.

– For this value of time, the coefficient associated with tenure for dismissal is -0.221 + 16 (0.008) = -0.093 (due to the interaction term).

– From this, we interpret an additional year of tenure to imply that the dismissal probability is exp(-0.093) = 91% of what it would be otherwise, representing a decline of 9%.
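The tenure calculation can be reproduced directly. The short sketch below uses only the two dismissal coefficients quoted above; the variable names are illustrative.

```python
import math

tenure_coef = -0.221       # tenure coefficient for dismissal
interaction_coef = 0.008   # tenure-by-time-trend coefficient for dismissal
time = 16                  # value of the time trend for the 1992 sample

# Effective tenure coefficient once the interaction term is included.
effective_coef = tenure_coef + time * interaction_coef

# Multiplicative effect of one additional year of tenure.
multiplier = math.exp(effective_coef)
decline = 1.0 - multiplier
```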

Table 11.1 Turnover Generalized Logit and Probit Regression Estimates

                                                    Probit           Generalized Logit Model
                                                    Regression
Variable                                            Model            Dismissed        Plant Closed     Other Reason     Quit
Tenure                                              -0.084 (0.010)   -0.221 (0.025)   -0.086 (0.019)   -0.068 (0.020)   -0.127 (0.012)
Time Trend                                          -0.002 (0.005)   -0.008 (0.011)   -0.024 (0.016)    0.011 (0.013)   -0.022 (0.007)
Tenure × (Time Trend)                                0.003 (0.001)    0.008 (0.002)    0.004 (0.001)   -0.005 (0.002)    0.006 (0.001)
Change in Logarithmic Sector Employment              0.094 (0.057)    0.286 (0.123)    0.459 (0.189)   -0.022 (0.158)    0.333 (0.082)
Tenure × (Change in Logarithmic Sector Employment)  -0.020 (0.009)   -0.061 (0.023)   -0.053 (0.025)   -0.005 (0.025)   -0.027 (0.012)

Notes: Standard errors in parentheses. Omitted category is no change in employment. Other variables controlled for consisted of education, marital status, number of children, race, years of full-time work experience and its square, union membership, government employment, logarithmic wage, the U.S. employment rate and location.

11.1.3 Multinomial (conditional) logit

• Use the linear combination of explanatory variables

$$V_{it,j} = \mathbf{x}_{it,j}' \boldsymbol{\beta}$$

• Here, xit,j is a vector of explanatory variables that depends on the jth alternative, whereas the parameters β do not.

• The total log-likelihood is

$$L(\boldsymbol{\beta}) = \sum_{it} \sum_{j=1}^{c} y_{it,j} \ln \pi_{it,j} = \sum_{it} \left\{ \sum_{j=1}^{c} y_{it,j}\, \mathbf{x}_{it,j}' \boldsymbol{\beta} - \ln \sum_{k=1}^{c} \exp(\mathbf{x}_{it,k}' \boldsymbol{\beta}) \right\}$$

• Drawback: independence of irrelevant alternatives

– The ratio, πit,1 / πit,2 = exp(Vit,1) / exp(Vit,2), does not depend on the underlying values of the other alternatives, Vit,j, for j = 3, ..., c.
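Independence of irrelevant alternatives is easy to see numerically. The sketch below uses hypothetical values of V for three alternatives and changes only the third; `mnl_probs` is an illustrative helper name.

```python
import math

def mnl_probs(v):
    """Multinomial logit probabilities exp(V_j) / sum_k exp(V_k)."""
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(vj - m) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]

# Hypothetical values of V for c = 3 alternatives.
p_before = mnl_probs([1.0, 0.5, -0.2])
# Make the third alternative much more attractive.
p_after = mnl_probs([1.0, 0.5, 3.0])

ratio_before = p_before[0] / p_before[1]
ratio_after = p_after[0] / p_after[1]
```

Both ratios equal exp(1.0 - 0.5), even though the individual probabilities of the first two alternatives fall sharply when the third improves.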

Multinomial vs generalized logit

• The generalized logit model is a special case of the

multinomial logit model.

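– To see this, consider explanatory variables xit and parameters βj, each of dimension K × 1. Define

$$\boldsymbol{\beta} = \begin{pmatrix} \boldsymbol{\beta}_1 \\ \boldsymbol{\beta}_2 \\ \vdots \\ \boldsymbol{\beta}_c \end{pmatrix}, \qquad \mathbf{x}_{it,j} = \begin{pmatrix} \mathbf{0} \\ \vdots \\ \mathbf{0} \\ \mathbf{x}_{it} \\ \mathbf{0} \\ \vdots \\ \mathbf{0} \end{pmatrix}$$

where xit occupies the jth block of xit,j and every other block is a K × 1 vector of zeros.

– With this specification, we have xit,j′ β = xit′ βj.

• Thus, a statistical package that performs multinomial logit estimation can also perform generalized logit estimation.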

• Further, some authors use the descriptor multinomial logit

when referring to the Section 11.1.2 generalized logit model.
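The stacking argument can be checked with a small sketch. The helper names and numeric values below are hypothetical; the point is only that the stacked covariate vector times the stacked parameter vector reproduces the generalized logit linear combination.

```python
def stack_covariates(x, j, c):
    """Place x in block j (0-based) of a length c*K vector of zeros."""
    k = len(x)
    stacked = [0.0] * (c * k)
    stacked[j * k:(j + 1) * k] = x
    return stacked

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

# Hypothetical values: K = 2 covariates, c = 3 alternatives.
betas = [[0.5, -1.0], [0.2, 0.3], [0.0, 0.0]]
beta_stacked = [b for beta_j in betas for b in beta_j]
x = [1.0, 2.0]

# Each pair holds (stacked form, generalized logit form) for one alternative.
pairs = [(dot(stack_covariates(x, j, 3), beta_stacked), dot(x, betas[j]))
         for j in range(3)]
```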

Ex. 11.3 Choice of yogurt brands

• Scanner data are obtained from optical scanning of grocery purchases at check-out.

• The subjects consist of n = 100 households in Springfield, Missouri.

• The response of interest is the type of yogurt purchased, consisting of four brands: Yoplait, Dannon, Weight Watchers and Hiland.

• The households were monitored over a two-year period with the number of purchases ranging from 4 to 185; the total number of purchases is N = 2,412.

• The two marketing variables of interest are PRICE and FEATURES.

– For the brand purchased, PRICE is recorded as price paid, that is, the shelf price net of the value of coupons redeemed. For other brands, PRICE is the shelf price.

– FEATURES is a binary variable, defined to be one if there was a newspaper feature advertising the brand at time of purchase, and zero otherwise.

– Note that the explanatory variables vary by alternative, suggesting the use of a multinomial (conditional) logit model.

Multinomial logit model fit

• A multinomial logit model was fit to the data, using

$$V_{it,j} = \alpha_j + \beta_1 \mathrm{PRICE}_{it,j} + \beta_2 \mathrm{FEATURES}_{it,j}$$

using Hiland as the omitted alternative.

Table 11.4 Yogurt Multinomial Logit Model Estimates

Variable              Parameter estimate   t-statistic
Yoplait                            4.450         23.78
Dannon                             3.716         25.55
Weight Watchers                    3.074         21.15
FEATURES                           0.491          4.09
PRICE                            -36.658        -15.04
-2 Log Likelihood                 10,138
AIC                               10,148

• Results:

– Each parameter is statistically significantly different from zero.

– For the FEATURES coefficient, a consumer is exp(0.4914) = 1.634 times more likely to purchase a product that is featured in a newspaper ad compared to one that is not.

– For the PRICE coefficient, a one cent decrease in price suggests that a consumer is exp(0.3666) = 1.443 times more likely to purchase a brand of yogurt.
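The two multipliers in the results can be recomputed from the Table 11.4 coefficients. The sketch assumes PRICE is measured in dollars, so that a one cent decrease is a change of -0.01; that unit is inferred from the arithmetic above rather than stated in the slides.

```python
import math

features_coef = 0.4914   # FEATURES coefficient, as quoted in the results
price_coef = -36.658     # PRICE coefficient from Table 11.4

# Effect of a newspaper feature versus no feature.
features_multiplier = math.exp(features_coef)

# Effect of a one cent price decrease (assumes PRICE is in dollars).
price_change = -0.01
price_multiplier = math.exp(price_coef * price_change)
```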

11.1.4 Random utility interpretation

• In economic applications, we think of an individual

choosing among c categories.

– Preferences among categories are indexed by an

unobserved utility function.

– For the ith individual at the tth time period, let’s use Uitj for

the utility of the jth choice.

• To illustrate, we assume that the individual chooses the first

category if

– Uit1 > Uitj for j=2, ..., c

– and denote this choice as yit = 1.

Random utility

• We model utility as an underlying value plus random noise, that is, Uitj = Vitj + εitj.

• The value function is an unknown linear combination of explanatory variables.

• The noise variable is assumed to have an extreme-value, or Gumbel, distribution of the form

$$\mathrm{Prob}(\varepsilon_{itj} \le a) = \exp(-e^{-a})$$

• This is mainly for computational convenience. With this, one can show

$$\mathrm{Prob}(y = 1) = \frac{1}{1 + \sum_{j=2}^{c} \exp(V_j - V_1)} = \frac{\exp(V_1)}{\sum_{j=1}^{c} \exp(V_j)}$$

• This is our multinomial logit form.
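The random utility result can be checked by simulation. The sketch below draws independent Gumbel noise for each alternative, records which utility is largest, and compares the choice shares with the multinomial logit probabilities. The values of V, the number of draws and the function names are hypothetical.

```python
import math
import random

def gumbel_draw(rng):
    """Inverse-CDF draw from F(a) = exp(-exp(-a))."""
    u = rng.random()
    while u == 0.0:          # avoid log(0); extremely unlikely
        u = rng.random()
    return -math.log(-math.log(u))

def simulate_choice_shares(v, n_draws, seed=0):
    """Share of draws in which each alternative has the largest utility."""
    rng = random.Random(seed)
    counts = [0] * len(v)
    for _ in range(n_draws):
        utilities = [vj + gumbel_draw(rng) for vj in v]
        counts[utilities.index(max(utilities))] += 1
    return [n / n_draws for n in counts]

def mnl_probs(v):
    e = [math.exp(vj) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]

v = [1.0, 0.5, -0.2]                      # hypothetical values of V
shares = simulate_choice_shares(v, 50000)
logit = mnl_probs(v)
```

With enough draws the simulated shares should sit close to the closed-form probabilities, which is the content of the result.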

11.1.5 Nested logit

• We now introduce a type of hierarchical model known as a nested logit model.

• In the first stage, one chooses an alternative (say the first type) with probability

$$\pi_{it,1} = \mathrm{Prob}(y_{it} = 1) = \frac{\exp(V_{it,1})}{\exp(V_{it,1}) + \left[ \sum_{k=2}^{c} \exp(V_{it,k}/\rho) \right]^{\rho}}$$

• Conditional on not choosing the first alternative, the probability of choosing any one of the other alternatives follows a multinomial logit model with probabilities

$$\frac{\pi_{it,j}}{1 - \pi_{it,1}} = \mathrm{Prob}(y_{it} = j \mid y_{it} \ne 1) = \frac{\exp(V_{it,j}/\rho)}{\sum_{k=2}^{c} \exp(V_{it,k}/\rho)}, \quad j = 2, \ldots, c$$

• The value of ρ = 1 reduces to the multinomial logit model.

• Interpret Prob(yit = 1) to be a weighted average of values from the first choice and the others.

• Conditional on not choosing the first category, Prob(yit = j | yit ≠ 1) has the same form as the multinomial logit.

Nested logit

• The nested logit generalizes the multinomial logit model; we no longer have the problem of independence of irrelevant alternatives.

• A disadvantage is that only one choice is observed;

– thus, we do not know which category belongs in the first

stage of the nesting without additional theory regarding

choice behavior.

• One may view the nested logit as a robust alternative to the

multinomial logit - examine each one of the categories in

the first stage of the nesting.
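A small sketch of the nested logit probabilities, with the first alternative in its own nest, shows both properties discussed above: ρ = 1 recovers the multinomial logit, and for ρ ≠ 1 the ratio of the first probability to another one depends on the remaining alternatives. The function name and the values of V and ρ are hypothetical.

```python
import math

def nested_logit_probs(v, rho):
    """Probabilities when alternative 1 is alone and alternatives 2..c share a nest."""
    nest_sum = sum(math.exp(vk / rho) for vk in v[1:])
    denom = math.exp(v[0]) + nest_sum ** rho
    p_first = math.exp(v[0]) / denom
    # Within the nest, choices follow a multinomial logit with V / rho.
    rest = [(1.0 - p_first) * math.exp(vk / rho) / nest_sum for vk in v[1:]]
    return [p_first] + rest

def mnl_probs(v):
    e = [math.exp(vj) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]

v = [1.0, 0.5, -0.2]                      # hypothetical values of V
same_as_mnl = nested_logit_probs(v, 1.0)

# With rho = 0.5, changing the third alternative changes the ratio pi_1 / pi_2.
p_a = nested_logit_probs([1.0, 0.5, -0.2], 0.5)
p_b = nested_logit_probs([1.0, 0.5, 3.0], 0.5)
ratio_a = p_a[0] / p_a[1]
ratio_b = p_b[0] / p_b[1]
```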

11.1.6 Generalized extreme-value distribution

• The nested logit model can also be given a random utility interpretation.

• McFadden (1978E) introduced the generalized extreme-value distribution of the form:

$$F(a_1, \ldots, a_c) = \exp\{ -G(e^{-a_1}, \ldots, e^{-a_c}) \}$$

• Under regularity conditions on G, McFadden (1978E) showed that this yields

$$\mathrm{Prob}(y = j) = \mathrm{Prob}(U_j > U_k \text{ for } k = 1, \ldots, c,\ k \ne j) = \frac{e^{V_j}\, G_j(e^{V_1}, \ldots, e^{V_c})}{G(e^{V_1}, \ldots, e^{V_c})}$$

• where

$$G_j(x_1, \ldots, x_c) = \frac{\partial}{\partial x_j} G(x_1, \ldots, x_c)$$
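Two choices of G illustrate the formula. These are standard special cases worked here as a check; they are not taken from the slides. The first recovers the multinomial logit and the second recovers the first-stage probability of the nested logit in Section 11.1.5.

```latex
% Case 1: G(x_1, \ldots, x_c) = \sum_{k=1}^{c} x_k, so that G_j = 1 for every j.
\mathrm{Prob}(y = j) = \frac{e^{V_j} \cdot 1}{\sum_{k=1}^{c} e^{V_k}}

% Case 2: G(x_1, \ldots, x_c) = x_1 + \Bigl( \sum_{k=2}^{c} x_k^{1/\rho} \Bigr)^{\rho}, so that G_1 = 1.
\mathrm{Prob}(y = 1) = \frac{e^{V_1}}{e^{V_1} + \Bigl[ \sum_{k=2}^{c} \exp(V_k/\rho) \Bigr]^{\rho}}
```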

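11.2 Multinomial logit models with random effects

• We now consider linear combinations of explanatory variables

$$V_{it,j} = \mathbf{z}_{it,j}' \boldsymbol{\alpha}_i + \mathbf{x}_{it,j}' \boldsymbol{\beta}$$

• A typical parameterization for the value function is of the form Vit,j = αij + xit′ βj.

– That is, the slopes may depend on the choice j and the intercepts may depend on the individual i and the choice j.

Parameterizing the value function

• Consider Vit,j = αij + xit′ βj.

• We need to impose additional restrictions on the model.

– To see this, replace αij by αij* = αij + 6 to get that

$$\pi_{itj}^{*} = \frac{\exp(\alpha_{ij}^{*} + \mathbf{x}_{it}' \boldsymbol{\beta}_j)}{\sum_{k} \exp(\alpha_{ik}^{*} + \mathbf{x}_{it}' \boldsymbol{\beta}_k)} = \frac{\exp(\alpha_{ij} + 6 + \mathbf{x}_{it}' \boldsymbol{\beta}_j)}{\sum_{k} \exp(\alpha_{ik} + 6 + \mathbf{x}_{it}' \boldsymbol{\beta}_k)} = \frac{\exp(\alpha_{ij} + \mathbf{x}_{it}' \boldsymbol{\beta}_j)}{\sum_{k} \exp(\alpha_{ik} + \mathbf{x}_{it}' \boldsymbol{\beta}_k)} = \pi_{itj}$$

• A convenient normalization is to take αi1 = 0 and β1 = 0 so that Vit,1 = 0 and

$$\pi_{it1} = \frac{1}{1 + \sum_{k=2}^{c} \exp(\alpha_{ik} + \mathbf{x}_{it}' \boldsymbol{\beta}_k)}, \qquad \pi_{itj} = \frac{\exp(\alpha_{ij} + \mathbf{x}_{it}' \boldsymbol{\beta}_j)}{1 + \sum_{k=2}^{c} \exp(\alpha_{ik} + \mathbf{x}_{it}' \boldsymbol{\beta}_k)}, \quad j = 2, \ldots, c$$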
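The need for a restriction can be seen numerically: adding the same constant to every intercept leaves all probabilities unchanged. The sketch below uses hypothetical intercepts and slopes and the constant 6 from the argument above.

```python
import math

def choice_probs(alphas, betas, x):
    """pi_j proportional to exp(alpha_j + x'beta_j) for one subject and time."""
    v = [a + sum(xk * bk for xk, bk in zip(x, b)) for a, b in zip(alphas, betas)]
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(vj - m) for vj in v]
    s = sum(e)
    return [ej / s for ej in e]

# Hypothetical values: c = 3 alternatives, K = 2 covariates.
alphas = [0.4, -0.3, 1.1]
betas = [[0.5, -1.0], [0.2, 0.3], [0.0, 0.1]]
x = [1.0, 2.0]

original = choice_probs(alphas, betas, x)
shifted = choice_probs([a + 6.0 for a in alphas], betas, x)
```

Because the two sets of probabilities coincide, the data cannot distinguish the two sets of intercepts, which is why one intercept (and one slope vector) is fixed at zero.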

Likelihood

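• The conditional (on the heterogeneity) probability that the ith subject at time t chooses the jth alternative is

$$\pi_{it,j}(\boldsymbol{\alpha}_i) = \frac{\exp(V_{it,j})}{\sum_{k=1}^{c} \exp(V_{it,k})} = \frac{\exp(\alpha_{ij} + \mathbf{x}_{it,j}' \boldsymbol{\beta})}{\sum_{k=1}^{c} \exp(\alpha_{ik} + \mathbf{x}_{it,k}' \boldsymbol{\beta})}$$

• To avoid parameter redundancies, we take αic = 0.

• The conditional likelihood for the ith subject is

$$L(\mathbf{y}_i \mid \boldsymbol{\alpha}_i) = \prod_{t=1}^{T_i} \prod_{j=1}^{c} \left( \pi_{it,j}(\boldsymbol{\alpha}_i) \right)^{y_{it,j}} = \prod_{t=1}^{T_i} \left\{ \frac{\exp\left( \sum_{j=1}^{c} y_{it,j} (\alpha_{ij} + \mathbf{x}_{it,j}' \boldsymbol{\beta}) \right)}{\sum_{k=1}^{c} \exp(\alpha_{ik} + \mathbf{x}_{it,k}' \boldsymbol{\beta})} \right\}$$

• We assume that {αi} is i.i.d. with distribution function Gα,

– typically taken to be multivariate normal.

• The (unconditional) likelihood for the ith subject is

$$L(\mathbf{y}_i) = \int L(\mathbf{y}_i \mid \mathbf{a}) \, dG_{\alpha}(\mathbf{a})$$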
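The integral over the heterogeneity distribution rarely has a closed form. One simple way to approximate it is Monte Carlo averaging of the conditional likelihood over draws of αi. The sketch below is an illustration under simplifying assumptions, not the method used for the fitted models in this chapter: the random intercepts are drawn as independent normals (the yogurt fit below uses an unstructured covariance matrix), and all names and numeric values are hypothetical.

```python
import math
import random

def conditional_likelihood(choices, x, beta, alpha):
    """L(y_i | alpha_i) for one subject.

    choices[t]: 0-based alternative chosen at time t.
    x[t][k]: covariate list for alternative k at time t.
    alpha: one intercept per alternative (the last fixed at 0).
    """
    like = 1.0
    for t, j in enumerate(choices):
        v = [alpha[k] + sum(b * xk for b, xk in zip(beta, x[t][k]))
             for k in range(len(alpha))]
        m = max(v)  # subtract the maximum for numerical stability
        denom = sum(math.exp(vk - m) for vk in v)
        like *= math.exp(v[j] - m) / denom
    return like

def marginal_likelihood(choices, x, beta, mean, sd, n_draws=2000, seed=0):
    """Monte Carlo average of the conditional likelihood over normal draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        # Assumption: independent normal intercepts; alpha_ic = 0 is appended.
        alpha = [rng.gauss(mu, s) for mu, s in zip(mean, sd)] + [0.0]
        total += conditional_likelihood(choices, x, beta, alpha)
    return total / n_draws

# Hypothetical subject: T_i = 3 periods, c = 3 alternatives, one covariate.
choices = [0, 0, 2]
x = [[[1.0], [1.2], [0.9]], [[1.1], [1.0], [0.8]], [[1.3], [1.1], [0.7]]]
beta = [-2.0]
approx = marginal_likelihood(choices, x, beta, mean=[0.5, 0.2], sd=[1.0, 1.0])
degenerate = marginal_likelihood(choices, x, beta, mean=[0.5, 0.2], sd=[0.0, 0.0])
```

Setting the standard deviations to zero collapses the average to the conditional likelihood at the mean intercepts, which gives a quick sanity check on the code.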

Relation with nonlinear random effects

Poisson model

• Few statistical packages will estimate multinomial logit

models with random effects.

– Statistical packages for nonlinear Poisson models are readily

available;

– they can be used to estimate parameters of the multinomial logit

model with random effects.

• Instruct a statistical package to “assume” that

– the binary random variables yit,j are Poisson distributed with

conditional means πit,j and,

– conditional on the heterogeneity terms, are independent.

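– These are not valid assumptions:

• Binary variables cannot be Poisson.

• They are certainly not independent.

• With this “assumption,” the conditional likelihood interpreted by the statistical package is

$$L(\mathbf{y}_i \mid \boldsymbol{\alpha}_i) = \prod_{t=1}^{T_i} \prod_{j=1}^{c} \frac{\left( \pi_{it,j}(\boldsymbol{\alpha}_i) \right)^{y_{it,j}} \exp\left( -\pi_{it,j}(\boldsymbol{\alpha}_i) \right)}{y_{it,j}!} = \prod_{t=1}^{T_i} \left\{ e^{-1} \prod_{j=1}^{c} \left( \pi_{it,j}(\boldsymbol{\alpha}_i) \right)^{y_{it,j}} \right\}$$

• Up to the constant factor e^(-Ti), this is the same conditional likelihood as above, because the probabilities sum to one over j and yit,j! = 1 for binary yit,j.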
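The Poisson device can be verified for a single time point. The sketch below multiplies Poisson probability functions with means equal to the choice probabilities and compares the result with e^(-1) times the multinomial term. The probabilities and the observed choice are hypothetical.

```python
import math

def poisson_pmf(y, mean):
    """Poisson probability function evaluated at y."""
    return mean ** y * math.exp(-mean) / math.factorial(y)

# Hypothetical choice probabilities and observed indicators for one time point.
pi = [0.5, 0.3, 0.2]
y = [0, 1, 0]          # the second alternative was chosen

poisson_product = 1.0
multinomial_term = 1.0
for yj, pj in zip(y, pi):
    poisson_product *= poisson_pmf(yj, pj)
    multinomial_term *= pj ** yj

constant = math.exp(-1.0)   # exp(-sum of pi) since the probabilities sum to one
```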

Example 11.3 Choice of yogurt brands

• Random intercepts for Yoplait, Dannon and Weight Watchers were assumed to follow a multivariate normal distribution with an unstructured covariance matrix.

• Here, we see that the coefficients for FEATURES and PRICE are qualitatively similar to the model without random effects.

• Overall, the AIC statistic suggests that the model with random effects is the preferred model.

Table 11.5 Yogurt Multinomial Logit Model Estimates

                      Without Random Effects             With Random Effects
Variable              Parameter estimate   t-statistic   Parameter estimate   t-statistic
Yoplait                            4.450         23.78                5.622          7.29
Dannon                             3.716         25.55                4.772          6.55
Weight Watchers                    3.074         21.15                1.896          2.09
FEATURES                           0.491          4.09                0.849          4.53
PRICE                            -36.658        -15.04              -44.406        -11.08
-2 Log Likelihood                 10,138                            7,301.4
AIC                               10,148                            7,323.4

11.3 Transition (Markov) models

• Another way of accounting for heterogeneity

– trace the development of a dependent variable over time

– represent the distribution of its current value as a

function of its history.

• Define Hit to be the history of the ith subject up to time t.

– If the explanatory variables are assumed to be nonstochastic, then we might use Hit = {yi1, …, yi,t-1}.

– Thus, the likelihood for the ith subject is

$$L(\mathbf{y}_i) = f(y_{i1}) \prod_{t=2}^{T_i} f(y_{it} \mid H_{it})$$

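Special Case: Generalized linear model (GLM)

• The conditional distribution is

$$f(y_{it} \mid H_{it}) = \exp\left\{ \frac{y_{it} \theta_{it} - b(\theta_{it})}{\phi} + S(y_{it}, \phi) \right\}$$

• where

$$\mathrm{E}(y_{it} \mid H_{it}) = b'(\theta_{it}), \qquad \mathrm{Var}(y_{it} \mid H_{it}) = \phi\, b''(\theta_{it})$$

• Assuming a canonical link, for the systematic component, one could use

$$\theta_{it} = g\left( \mathrm{E}(y_{it} \mid H_{it}) \right) = \mathbf{x}_{it}' \boldsymbol{\beta} + \sum_{j} \varphi_j \, y_{i,t-j}$$

• See Diggle, Heagerty, Liang and Zeger (2002S, Chapter 10) for further applications of and references to general transition GLMs.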

Unordered categorical response

• First consider Markov models of order 1 so that the history

Hit need only contain yi,t-1.

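• Thus,

$$\pi_{it,jk} = \mathrm{Prob}(y_{it} = k \mid y_{i,t-1} = j) = \mathrm{Prob}(y_{it} = k \mid y_{i,t-1} = j, y_{i,t-2}, \ldots, y_{i,1})$$

• Organize the set of transition probabilities πit,jk as a matrix

$$\boldsymbol{\Pi}_{it} = \begin{pmatrix} \pi_{it,11} & \pi_{it,12} & \cdots & \pi_{it,1c} \\ \pi_{it,21} & \pi_{it,22} & \cdots & \pi_{it,2c} \\ \vdots & \vdots & \ddots & \vdots \\ \pi_{it,c1} & \pi_{it,c2} & \cdots & \pi_{it,cc} \end{pmatrix}$$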

• Here, each row sums to one.

• We call the row identifier, j, the state of origin and the

column identifier, k, the destination state.

Pension plan example

• c = 4 categories

– 1 denotes active continuation in the pension plan,

– 2 denotes retirement from the pension plan,

– 3 denotes withdrawal from the pension plan and

– 4 denotes death.

• In a given problem, one assumes that a certain subset of

transition probabilities are zero.

[Figure: transition diagram among the four states: 1 = active membership, 2 = retirement, 3 = withdrawal, 4 = death.]

Multinomial logit

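• For each state of origin, use

$$\pi_{it,jk} = \frac{\exp(V_{it,jk})}{\sum_{h=1}^{c} \exp(V_{it,jh})}$$

• where

$$V_{it,jk} = \mathbf{x}_{it,jk}' \boldsymbol{\beta}_j$$

• The conditional likelihood is

$$f(y_{it} \mid y_{i,t-1}) = \prod_{j=1}^{c} \prod_{k=1}^{c} \left( \pi_{it,jk} \right)^{y_{it,jk}}$$

• where

$$y_{it,jk} = \begin{cases} 1 & \text{if } y_{it} = k \text{ and } y_{i,t-1} = j \\ 0 & \text{otherwise} \end{cases}$$

• When πit,jk = 0 (by assumption), we have that yit,jk = 0.

– Use the convention that 0^0 = 1.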

Partial log-likelihood

• To simplify matters, assume that the initial state distribution, Prob(yi1), is described by a different set of parameters than the transition distribution, f(yit | yi,t-1).

• Thus, to estimate this latter set of parameters, one only needs to maximize the partial log-likelihood

$$L_P = \sum_{i} \sum_{t=2}^{T_i} \ln f(y_{it} \mid y_{i,t-1})$$

• Special case: specify separate components for each alternative

$$L_{P,j}(\boldsymbol{\beta}_j) = \sum_{i} \sum_{t=2}^{T_i} \ln f(y_{it} \mid y_{i,t-1} = j) = \sum_{i} \sum_{t=2}^{T_i} \left\{ \sum_{k=1}^{c} y_{it,jk}\, \mathbf{x}_{it,jk}' \boldsymbol{\beta}_j - \ln \sum_{k=1}^{c} \exp(\mathbf{x}_{it,jk}' \boldsymbol{\beta}_j) \right\}$$

• Idea: we can split up the data according to each (lagged) choice (j)

– determine MLEs for each alternative (at time t-1), in isolation of the others.

Example 11.3 Choice of yogurt brands - Continued

• Ignore the length of time between purchases

– Sometimes referred to as “operational time.”

– One’s most recent choice of a brand of yogurt has the same effect on today’s choice, regardless as to whether the prior choice was one day or one month ago.

[Figure: transition diagram among the four states: 1 = Yoplait, 2 = Dannon, 3 = Weight Watchers, 4 = Hiland.]

Choice of yogurt brands

• Table 11.6a

– Only 2,312 observations under consideration

– Initial values from each of 100 subjects are not available

for the transition analysis.

– “Brand loyalty” is exhibited by most observation pairs

• the current choice of the brand of yogurt is the same as chosen

most recently.

– Other observation pairs can be described as “switchers.”

• Table 11.6b

– Rescale counts by row totals to give (rough) empirical

transition probabilities.

– Brand loyalty and switching behavior are more apparent.

– Customers of Yoplait, Dannon and Weight Watchers

exhibit more brand loyalty compared to those of Hiland

who are more prone to switching.

Choice of yogurt brands

Table 11.6a Yogurt Transition Counts

                               Destination State
Origin State        Yoplait   Dannon   Weight Watchers   Hiland   Total
Yoplait                 654       65                41       17     777
Dannon                   71      822                19       16     928
Weight Watchers          44       18               473        5     540
Hiland                   14       17                 6       30      67
Total                   783      922               539       68   2,312

Table 11.6b Yogurt Transition Empirical Probabilities, in Percent

                               Destination State
Origin State        Yoplait   Dannon   Weight Watchers   Hiland   Total
Yoplait                84.2      8.4               5.3      2.2   100.0
Dannon                  7.7     88.6               2.0      1.7   100.0
Weight Watchers         8.1      3.3              87.6      0.9   100.0
Hiland                 20.9     25.4               9.0     44.8   100.0
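The empirical transition probabilities in Table 11.6b follow from Table 11.6a by dividing each count by its row total. A minimal sketch, with illustrative variable names:

```python
brands = ["Yoplait", "Dannon", "Weight Watchers", "Hiland"]

# Rows are origin states, columns are destination states (Table 11.6a).
counts = [
    [654, 65, 41, 17],
    [71, 822, 19, 16],
    [44, 18, 473, 5],
    [14, 17, 6, 30],
]

# Rescale each row by its total and express the result in percent.
percent = [[round(100.0 * n / sum(row), 1) for n in row] for row in counts]

# The diagonal holds the "brand loyalty" percentages.
loyalty = {brand: percent[i][i] for i, brand in enumerate(brands)}
```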

Choice of yogurt brands

Table 11.7 Yogurt Transition Model Estimates

                                                    State of Origin
                      Yoplait             Dannon              Weight Watchers     Hiland
Variable              Estimate   t-stat   Estimate   t-stat   Estimate   t-stat   Estimate   t-stat
Yoplait                  5.952    12.75      4.125     9.43      4.266     6.83      0.215     0.32
Dannon                   2.529     7.56      5.458    16.45      2.401     4.35      0.210     0.42
Weight Watchers          1.986     5.81      1.522     3.91      5.699    11.19     -1.105    -1.93
FEATURES                 0.593     2.07      0.907     2.89      0.913     2.39      1.820     3.27
PRICE                  -41.257    -6.28    -48.989    -8.01    -37.412    -5.09    -13.840    -1.21
-2 Log Likelihood      2,397.8             2,608.8             1,562.2               281.5

• Purchase probabilities for customers of Dannon, Weight Watchers and Hiland are more responsive to a newspaper ad than Yoplait customers.

• Compared to the other three brands, Hiland customers are not price sensitive in that changes in PRICE have relatively little impact on the purchase probability (the coefficient is not even statistically significant).

• The total (minus two times the partial) log-likelihood is 2,397.8 + … + 281.5 = 6,850.3.

– Without origin state, the corresponding value is 9,741.2.

– The likelihood ratio test statistic is LRT = 2,890.9.

– This corroborates the intuition that the most recent type of purchase has a strong influence on the current brand choice.
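The likelihood ratio arithmetic can be reproduced from the last row of Table 11.7. The sketch below only recomputes the sum and the difference; variable names are illustrative.

```python
# Minus two times the partial log-likelihood for each state of origin (Table 11.7).
minus2ll_by_origin = {
    "Yoplait": 2397.8,
    "Dannon": 2608.8,
    "Weight Watchers": 1562.2,
    "Hiland": 281.5,
}

# Total for the transition model: the four origin-state fits are separate.
minus2ll_transition = sum(minus2ll_by_origin.values())

# Corresponding value for the model that ignores the origin state.
minus2ll_no_origin = 9741.2

lrt = minus2ll_no_origin - minus2ll_transition
```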

Example 11.4 Income tax payments and tax preparers

• In the yogurt example, the explanatory variables FEATURES and PRICE depend on the alternative.

• For the choice of a professional tax preparer, financial and demographic characteristics do not depend on the alternative.

• 97 tax filers never used a preparer in the five years under consideration and 89 always did, out of 258 subjects.

• Table 11.8 shows the relationship between the current and most recent choice of preparer.

– 258 initial observations are not available for the transition analysis, reducing our sample size to 1,290 – 258 = 1,032.

Table 11.8 Tax Preparers Transition Empirical Probabilities, in Percent

                          Destination State
Origin State    Count     PREP = 0    PREP = 1
PREP = 0          546         89.4        10.6
PREP = 1          486          8.4        91.6

Tax preparers

Table 11.9 Tax Preparers Transition Model Estimates

                               State of Origin
                      PREP = 0            PREP = 1
Variable              Estimate   t-stat   Estimate   t-stat
Intercept              -10.704    -3.06      0.208     0.18
LNTPI                    1.024     2.50      0.104     0.73
MR                      -0.072    -2.37      0.047     2.25
EMP                      0.352     0.85      0.750     1.56
-2 Log Likelihood        361.5               264.6

• A likelihood ratio test provides strong evidence that most recent choice is an important predictor of the current choice.

• To interpret the regression coefficients, consider a “typical” tax filer with LNTPI = 10, MR = 23 and EMP = 0.

• If this tax filer had not previously chosen to use a preparer, then

– V = -10.704 + 1.024(10) – 0.072(23) + 0.352(0) = -2.12.

– Estimated prob = exp(-2.12)/(1+exp(-2.12)) = 0.107.

• If this tax filer had chosen to use a preparer, then the estimated probability is 0.911.

– This example underscores the importance of the intercept.
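Both estimated probabilities for the “typical” tax filer can be recomputed from the Table 11.9 coefficients. A short sketch, with illustrative names:

```python
import math

def logistic(v):
    """Probability exp(v) / (1 + exp(v)) for a binary response."""
    return 1.0 / (1.0 + math.exp(-v))

# Coefficients from Table 11.9, by state of origin (lagged PREP).
coefficients = {
    0: {"Intercept": -10.704, "LNTPI": 1.024, "MR": -0.072, "EMP": 0.352},
    1: {"Intercept": 0.208, "LNTPI": 0.104, "MR": 0.047, "EMP": 0.750},
}

# The "typical" filer from the text.
filer = {"Intercept": 1.0, "LNTPI": 10.0, "MR": 23.0, "EMP": 0.0}

def estimated_probability(origin):
    coef = coefficients[origin]
    v = sum(coef[name] * filer[name] for name in coef)
    return logistic(v)

prob_after_no_preparer = estimated_probability(0)
prob_after_preparer = estimated_probability(1)
```

The same filer characteristics give very different probabilities under the two origin states, driven largely by the difference in intercepts.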

Higher order Markov models

• It is customary in Markov modeling to simply expand the

state space to handle higher order time relationships.

• Define a new categorical response, yit* = {yit, yi,t-1}.

– The order 1 transition probability, f(yit* | yi,t-1*), is

equivalent to an order 2 transition probability of the

original response,

f(yit | yi,t-1, yi,t-2).

– The conditioning events are the same,

yi,t-1* = {yi,t-1, yi,t-2}

– yi,t-1 is completely determined by the conditioning event

yi,t-1*.

• Expansions to higher orders can be readily accomplished in

a similar fashion.
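Expanding the state space is mostly bookkeeping. The sketch below builds the expanded response yit* = (yit, yi,t-1) for one subject and counts order 1 transitions of the expanded state; the choice sequence is hypothetical and the function name is illustrative.

```python
from collections import Counter

def order2_transition_counts(sequence):
    """Count order 1 transitions of the expanded state y*_t = (y_t, y_{t-1})."""
    expanded = [(sequence[t], sequence[t - 1]) for t in range(1, len(sequence))]
    return Counter(zip(expanded[:-1], expanded[1:]))

# Hypothetical sequence of PREP choices for one tax filer over six years.
sequence = [0, 0, 1, 1, 1, 0]
counts = order2_transition_counts(sequence)

# In every observed transition, the lagged part of the destination state
# repeats the current part of the origin state.
consistent = all(dest[1] == origin[0] for origin, dest in counts)
```

A sequence of length T yields T - 2 expanded transitions, which is why the order 2 analysis has fewer usable observations than the order 1 analysis.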

Tax preparers

Table 11.10 Tax Preparers Order 2 Transition Empirical Probabilities, in Percent

Origin State                          Destination State
Lag PREP    Lag 2 PREP    Count       PREP = 0    PREP = 1
0           0               376           89.1        10.9
0           1                28           67.9        32.1
1           0                43           25.6        74.4
1           1               327            6.1        93.9

• Table 11.10 suggests that there are important differences in the transition probabilities for each lag 1 origin state (yi,t-1 = Lag PREP) between levels of the lag two origin state (yi,t-2 = Lag 2 PREP).

Tax preparers

Table 11.11 Tax Preparers Order 2 Transition Model Estimates

                                                    State of Origin
                      Lag PREP = 0,       Lag PREP = 0,       Lag PREP = 1,       Lag PREP = 1,
                      Lag 2 PREP = 0      Lag 2 PREP = 1      Lag 2 PREP = 0      Lag 2 PREP = 1
Variable              Estimate   t-stat   Estimate   t-stat   Estimate   t-stat   Estimate   t-stat
Intercept               -9.866    -2.30     -7.331    -0.81      1.629     0.25     -0.251    -0.19
LNTPI                    0.923     1.84      0.675     0.63     -0.210    -0.27      0.197     1.21
MR                      -0.066    -1.79     -0.001    -0.01      0.065     0.89      0.040     1.42
EMP                      0.406     0.84      0.050     0.04         NA       NA      1.406     1.69
-2 Log Likelihood        254.2                33.4                42.7               139.1

• The total (minus two times the partial) log-likelihood is 469.4.

– With no origin state, the corresponding value is 1,067.7.

– Thus, the likelihood ratio test statistic is LRT = 567.3.

– With 12 degrees of freedom, this is statistically significant.

• With one lag, the corresponding value is 490.2.

– Thus, the likelihood ratio test statistic is LRT = 20.8. With 8 degrees of freedom, this is statistically significant.

– Thus, the lag two component makes a statistically significant contribution to the model.

11.4 Survival Models