Analysis of Algorithms


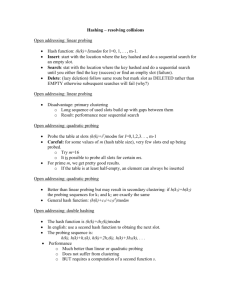

Hashing

The Magic Container Interface
• Main methods:
  – void Put(Object)
  – Object Get(Object) … returns null if not in
  – … Remove(Object)
• Goal: methods are O(1)! (usually)
• Implementation details
  – HashTable: the storage bin
  – hashfunction(object): tells where the object should go
  – collision resolution strategy: what to do when two objects “hash” to the same location
• In Java, all objects have a default int hashCode(), but it is better to define your own, except for strings.
• String hashing in Java is good.

Hash Functions
• Goal: map objects into the table so that the distribution is uniform
• Tricky to do.
• Examples for a string s
  – product of ascii codes, then mod tablesize
    • nearly always even, so bad
  – sum of ascii codes, then mod tablesize
    • may be too small
  – shift bits in ascii code
    • Java allows this with << and >>
  – Java does a good job with Strings

Example Problem
• Suppose we are storing numeric ids of customers, maybe 100,000
• We want to check whether a person is delinquent; usually fewer than 400 are.
• Use an array of size 1000 to hold the delinquents.
• Put each id in at id mod tableSize.
• Clearly fast for getting and removing
• But what happens if entries collide?

Separate Chaining
• Array of linked lists
• The hash function determines which list to search
• May or may not keep individual lists in sorted order
• Problems:
  – needs a very good hash function, which may not exist
  – worst case: O(n)
  – extra space for links
• Another approach: Open Addressing
  – everything goes into the array, somehow
  – several approaches: linear, quadratic, double, rehashing

Linear Probing
• Store information (or ptrs to objects) in the array
• Linear Probing
  – When inserting an object, if the location is filled, find the first unfilled position, i.e. look at h_i(x) = (hash(x) + f(i)) mod tableSize, where f(i) = i.
  – When getting an object, start at the hash address and do a linear search until you find the object or a hole.
  – Primary clustering: blocks of filled cells occur
  – Harder to insert than to find an existing element
  – Load factor lf = fraction of the array that is filled
  – Expected probes for
    • insertion: 1/2 (1 + 1/(1-lf)^2)
    • successful search: 1/2 (1 + 1/(1-lf))

Expected number of probes

| Load factor | Failure (insertion) | Success |
|-------------|---------------------|---------|
| .1          | 1.11                | 1.06    |
| .2          | 1.28                | 1.13    |
| .3          | 1.52                | 1.21    |
| .4          | 1.89                | 1.33    |
| .5          | 2.5                 | 1.50    |
| .6          | 3.6                 | 1.75    |
| .7          | 6.0                 | 2.17    |
| .8          | 13.0                | 3.0     |
| .9          | 50.5                | 5.5     |

Quadratic Probing
• Idea: f(i) = i^2 (or some other quadratic function)
• Problem: if the table is more than 1/2 full, there is no guarantee of finding any space!
• Theorem: if the table is less than 1/2 full and the table size is prime, then a new element can always be inserted.
• Good: quadratic probing eliminates primary clustering
• Quadratic probing has secondary clustering (minor)
  – if two keys hash to the same address, their probe sequences will be the same

Proof of theorem
• Theorem: The first P/2 probes are distinct (P is the prime table size).
  – Suppose not.
  – Then there are i and j, with i ≠ j and both less than P/2, whose probes land in the same place.
  – Both probes start from the same h(x), so h(x) + i^2 = h(x) + j^2 mod P.
  – So i^2 = j^2 mod P
  – (i+j)*(i-j) = 0 mod P
  – Since P is prime, P must divide i+j or i-j.
  – But i and j are distinct and less than P/2, so i+j and i-j are nonzero and smaller than P in absolute value; P divides neither.
  – Contradiction

Double Hashing
• Goal: spreading out the probe sequence
• f(i) = i*hash2(x), where hash2 is another hash function
• Dangerous: can be very bad.
• Also may not eliminate any problems
• In the best case, it’s great

Rehashing
• All methods degrade when the table becomes too full
• Simplest solution:
  – create a new table, twice as large
  – rehash everything
  – O(N), so costly if done often
  – With quadratic probing, rehash when the table is 1/2 full

Extendible Hashing: Uses secondary storage
• Suppose the data does not fit in main memory
• Goal: reduce the number of disk accesses.
• Suppose there are N records to store and M records fit in a disk block
• Result: 2 disk accesses for find (~4 for insert)
• Let D be the maximum number of bits such that 2^D < M.
• This is for the root or directory (a disk block)
• Algo:
  – hash on the first D bits, which yields a ptr to a disk block
• Expected number of leaves: (N/M) log_2 e
• Expected directory size: O(N^(1+1/M) / M)
• Theoretically difficult, more details for implementation

Applications
• Compilers: keep track of variables and scope
• Graph Theory: associate id with name (general)
• Game Playing: e.g. in chess, keep track of positions already considered and evaluated (which may be expensive)
• Spelling Checker: at least to check that a word is right.
  – But how to suggest the correct word?
• Lexicon/book indices

HashSets vs HashMaps
• HashSets store objects
  – support adding and removing in constant time
• HashMaps store a pair (key, object)
  – this is an implementation of a Map
• HashMaps are more useful and standard
• HashMap's main methods are:
  – put(Object key, Object value)
  – get(Object key)
  – remove(Object key)
• All done in expected O(1) time.

Lexicon Example
• Inputs: text file (N) + content word file (the keys) (M)
• Output: content words in order, with page numbers
• Algo:
  – Define entry = (content word, linked list of integers). Initially, the list is empty for each word.
  – Step 1: Read the content word file and make a HashMap of (content word, empty list).
  – Step 2: Read the text file and check whether each word is in the HashMap; if it is, add the current page number to its list, else continue.
  – Step 3: Use the iterator method to walk through the HashMap and put its entries into a sortable container.

Lexicon Example
• Complexity:
  – step 1: O(M), where M is the number of content words
  – step 2: O(N), where N is the number of words in the text file
  – step 3: O(M log M) max.
  – So O(max(N, M log M))
• Dumb Algorithm
  – Sort content words: O(M log M) (balanced tree)
  – Look up each word in the content word tree and update
    • O(N log M)
  – Total complexity: O(N log M)
  – N = 500*2000 = 1,000,000 and M = 1000
  – Smart algo: 1,000,000; dumb algo: 1,000,000*10.
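
The three lexicon steps can be sketched in Java roughly as follows. This is only an illustration under stated assumptions: the class name LexiconSketch, the method buildIndex, and the input shape (the text as a list of pages, each a list of words, instead of files) are hypothetical and not part of the slides. A TreeMap stands in for the "sortable container" of step 3.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class LexiconSketch {
    // Assumed input shape: pages.get(p) holds the words on page p + 1.
    static Map<String, List<Integer>> buildIndex(List<String> contentWords,
                                                 List<List<String>> pages) {
        // Step 1: one empty page list per content word, expected O(M).
        Map<String, List<Integer>> index = new HashMap<>();
        for (String word : contentWords) {
            index.put(word, new ArrayList<>());
        }

        // Step 2: scan the text once, expected O(1) per lookup, O(N) total.
        for (int p = 0; p < pages.size(); p++) {
            int pageNumber = p + 1;
            for (String word : pages.get(p)) {
                List<Integer> hits = index.get(word);
                if (hits == null) {
                    continue; // not a content word
                }
                // Record each page at most once per word.
                if (hits.isEmpty() || hits.get(hits.size() - 1) != pageNumber) {
                    hits.add(pageNumber);
                }
            }
        }

        // Step 3: copy the entries into a sorted container, O(M log M).
        return new TreeMap<>(index);
    }

    public static void main(String[] args) {
        List<String> contentWords = List.of("table", "hash");
        List<List<String>> pages = List.of(
                List.of("a", "hash", "table", "hash"),
                List.of("the", "hash"));
        // Entries come out in alphabetical order of the content words.
        System.out.println(buildIndex(contentWords, pages));
    }
}
```

Replacing the HashMap in steps 1 and 2 with a balanced tree would give the "dumb" O(N log M) variant, since every lookup in step 2 would then cost O(log M).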
Memoization
• Recursive Fibonacci:
    fib(n) = if (n < 2) return 1
             else return fib(n-1) + fib(n-2)
• Use hashing to store intermediate results:
    Hashtable ht;
    fib(n) =
      Integer e = (Integer) ht.get(n);
      if (e != null) return e;
      else if (n < 2) return 1;
      else
        ans = fib(n-1) + fib(n-2);
        ht.put(n, ans);
        return ans;
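
A runnable version of the memoized Fibonacci might look like the sketch below. It assumes a HashMap in place of the slide's Hashtable and long values to postpone overflow; the class name MemoFib is hypothetical. It keeps the slide's convention that fib(n) = 1 for n < 2.

```java
import java.util.HashMap;
import java.util.Map;

class MemoFib {
    // Cache of already computed results, keyed by n.
    private static final Map<Integer, Long> memo = new HashMap<>();

    static long fib(int n) {
        if (n < 2) {
            return 1; // base case as on the slide: fib(0) = fib(1) = 1
        }
        Long cached = memo.get(n);
        if (cached != null) {
            return cached; // expected O(1) lookup instead of recomputation
        }
        long ans = fib(n - 1) + fib(n - 2);
        memo.put(n, ans);
        return ans;
    }

    public static void main(String[] args) {
        System.out.println(fib(10));
        System.out.println(fib(50));
    }
}
```

With the cache, each value of n is computed once, so fib(n) takes O(n) expected time instead of the exponential time of the plain recursion. The explicit get/put is deliberate: calling HashMap.computeIfAbsent with a recursive mapping function modifies the map during the computation and can throw ConcurrentModificationException.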