Fermilab Control System

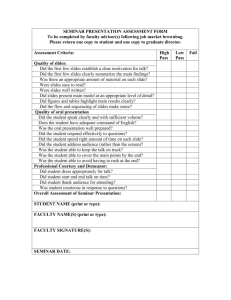

The Fermilab Control System
Jim Patrick - Fermilab
CERN AB Seminar, October 5, 2006

Outline
- Accelerator Complex Overview
- Control System Overview
- Selected Control System Features
- Migration Efforts
- Summary

Fermilab Accelerator Complex
[Figure: site map with labels Main Injector, miniBooNE, NUMI, pbar, Fixed Target, Linac, Booster, Recycler, e-cool, Tevatron. J. Patrick, September 19, 2006]

Fermilab Accelerator Complex
- 400 MeV Linac (up to 15 Hz)
- 8 GeV Booster Synchrotron (up to 15 Hz)
- 120 GeV Main Injector Synchrotron (~0.5 Hz)
- 980 GeV Tevatron Superconducting Synchrotron/Storage Ring
- 8 GeV Debuncher/Accumulator for antiproton production
- 8 GeV “Recycler” for antiproton accumulation (same tunnel as MI) with electron cooling

Operating Modes
- 1.96 TeV proton-antiproton collider (24-30 hour stores)
- 8 GeV fixed target from Booster (miniBooNE), ~5 Hz
- 120 GeV fixed target from Main Injector, ~1/minute
- 120 GeV fixed target for NUMI (~0.5 Hz)
- 120 GeV from MI for pbar production (0.25 – 0.5 Hz)
- Cycles interleaved
  - Main Injector load contains batches for both NUMI and pbar

The Run II Challenge
- Operate the Tevatron collider at > 10 x the luminosity of the previous (1992-6) run (began Mar 2001)
  - Collider stores + pbar production
  - Incorporate Recycler ring (May 2004)
  - With electron cooling (July 2005)
- Operate miniBooNE (Dec 2001)
- Operate 120 GeV fixed target (Feb 2003)
- Operate NUMI high intensity neutrino beam (Dec 2004)
- Get all this working, all at the same time
- Meanwhile modernize the control system without affecting commissioning and operation of the above
- Simultaneous collider and fixed target operation new to Fermilab

Control System Overview
- Known as “ACNET”
- Unified control system for the entire complex
  - All accelerators, all machine and technical equipment
  - Common console manager can launch any application
  - Common software frameworks
  - Common timing system
- Three-tier system
  - Applications
  - Services
  - Front-ends
- Distributed intelligence
  - Enabled by front-ends, special hardware
  - System is tolerant to high level software disruptions

Control System Overview
[Diagram: three-tier architecture. Text recovered from the figure:]
- Application: Console Applications, Java Applications, Web Applications
- Central: Database, Central Services, Servlets, Open Access Clients, Consolidators
- Front-End (ethernet): MOOC Front-Ends, IRM Front-Ends, Labview Front-Ends
- Field bus: VME, SLD, Arcnet, ethernet, …
- Field Hardware: CAMAC, VME, PMC, IP, Multibus, CIA, GPIB, …
- Counts printed on the diagram: 120, 70+, 70+, >500

ACNET History
- Originally developed for Tevatron in early 80’s
- Substantial evolution over the years
  - CAMAC field hardware -> very broad diversity
  - Major modernization in the 90’s
    - PDP-11/Mac-16 front-ends -> x86 Multibus -> PPC VME
    - DEC PCL network -> IEEE 802.5 token ring -> ethernet
    - VAX database -> Sybase
  - PDP-11 applications -> VAXstations
- VAX only through 2000
  - Java framework, port to Linux since then

Device Model
- Maximum 8 character device names, e.g.
T:BEAM for Tevatron beam intensity
  - Informal conventions provide some rationality
  - More verbose hierarchy layer created, but not utilized/maintained
- Each device has one or more of a fixed set of properties:
  - Reading, setting, analog & digital alarms, digital status & control
- Device descriptions stored in central Sybase database
- Monotype data, arrays are transparently supported
  - Mixed type structures done, but support not transparent
- Application (library) layer must properly interpret the data from the front-end and perform required data translations
- ~200,000 devices/350,000 properties in the system

ACNET Protocol
- Custom protocol used for intra-node communication
- Layered, task directed protocol developed in early 80’s
  - Old, but ahead of its time
- Currently uses UDP over ethernet
  - Timeout/retry mitigates UDP unreliability
  - Very little packet loss in our network
- Get/Set devices
  - Single or repetitive reply; immediate, periodic, or on clock event
  - Multiple requests in single network packet
- Fast Time Plot (FTP)
  - Blocks of readings taken at up to 1440 Hz returned every 0.5 sec.
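
The timeout/retry idea noted above for the UDP transport can be illustrated with a small sketch. It is not the ACNET implementation: the `LossyLoopback` class is a hypothetical stand-in for a socket pair that drops datagrams, and the default timeout and attempt count are assumptions chosen only for the example.

```python
from typing import Callable, Optional


def request_with_retry(
    send: Callable[[bytes], None],
    receive: Callable[[float], Optional[bytes]],
    payload: bytes,
    timeout_s: float = 0.5,      # assumed value, not taken from the slides
    max_attempts: int = 3,       # assumed value, not taken from the slides
) -> bytes:
    """Send a request and wait for a reply, resending after each timeout."""
    for _ in range(max_attempts):
        send(payload)
        reply = receive(timeout_s)   # None means the wait timed out
        if reply is not None:
            return reply
    raise TimeoutError(f"no reply after {max_attempts} attempts")


class LossyLoopback:
    """Hypothetical transport that loses the first `drop_first` datagrams."""

    def __init__(self, drop_first: int) -> None:
        self.drop_remaining = drop_first
        self.pending: Optional[bytes] = None
        self.sent = 0

    def send(self, payload: bytes) -> None:
        self.sent += 1
        if self.drop_remaining > 0:
            self.drop_remaining -= 1          # datagram lost in transit
            self.pending = None
        else:
            self.pending = b"reply:" + payload

    def receive(self, timeout_s: float) -> Optional[bytes]:
        # A real socket would block for up to timeout_s; here we answer at once.
        reply, self.pending = self.pending, None
        return reply
```

With two lost datagrams the third attempt succeeds; if losses exceed `max_attempts` the caller sees a `TimeoutError` and can report the failure upward.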
- Snapshot
  - Block of 2048 readings taken at an arbitrary rate

ACNET Protocol
- Has proven to be a very reliable, robust, scalable and extensible protocol for many years
  - For example, new GET/SET protocol adds precise time stamps, collection on event+delay, states, larger device address space
- Main weakness is lack of transparent mixed type support
  - But this is often done manually
- Difficult to program, but high level API hides details
- Difficult to port to new platforms but has been done

Timing and Control Networks
- TCLK (Clock Events)
  - Trigger various actions in the complex
  - Dedicated 10 MHz serial network
  - 256 possible events defined for the complex
  - Includes 1 Hz GPS time sync
- MDAT (Machine Data)
  - General mechanism for rapid data transfer
  - 36 slots updated at 720 Hz
- Beam Sync – revolution markers derived from RF
- States – discussed later
- Both TCLK and MDAT are multicast at 15 Hz for central layer processes that don’t require hard real-time response

Application Environment
- Console environment
  - VAX based; now mostly ported to Linux
  - Integrated application/graphics/data access architecture
  - C language (+ legacy FORTRAN)
- Java environment
  - Platform independent; Windows/Sun/Linux/Mac all utilized
  - Full access to entire control system
- Both environments have a standard application framework that provides a common look and feel
- All applications launched from “Index Pages”
  - Separate for Console, Java environments
- Tightly managed code in both environments
- Also some web browser applications of varying styles

Index Page
[Figure]

Parameter Page
[Figure]

Device Database GUI
[Figure]

Synoptic Display (Web)
- Drag&Drop Builder
- Uses AJAX technology

Alarms
- Front-ends post (push) alarms to central server for distribution and display

Central Layer
- Database
- Open Access Clients (“virtual front-ends”)
- Java Servlets
  - Bridge device requests from outside firewall and for web apps
- Request Consolidation
  - Requests from different clients consolidated to a single request to the front-end
- Alarms server
- Front-end download
  - Load last settings on reboot

Database
- Central Sybase database
- Three parts:
  - All device and node descriptions
  - Application information
    - Used by applications as they wish
  - Save/Restore and Shot Data database
- MySQL used for data loggers
  - Data volume too high for Sybase
  - Not for general application use
- Many older programs still use central “file sharing” system

Open Access Clients (OACs)
- “Virtual” front-ends
  - Obey same ACNET communication protocol as front-ends
- Several classes:
  - Utility – Data loggers, scheduled data acquisition, virtual devices
  - Consolidate devices across front-ends into single array
  - Calculations – Both database driven and custom
  - Process control – Pulse to pulse feedback of fixed target lines
  - Bridges – to ethernet connected scopes, instrumentation, etc.
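
Request consolidation, listed on the Central Layer slide above, can be sketched roughly as follows. This is only an illustration of the idea that identical requests from several clients share one front-end request; the `Consolidator` class, its method names, and the choice of (device, period) as the sharing key are assumptions, not the ACNET design.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Request = Tuple[str, float]            # (device name, update period in seconds)
Callback = Callable[[float], None]     # called with each new reading


class Consolidator:
    """Fan one front-end request out to every client that asked for it."""

    def __init__(self) -> None:
        self.listeners: Dict[Request, List[Callback]] = defaultdict(list)
        # Requests actually forwarded to the front-end, one per distinct key.
        self.front_end_requests: List[Request] = []

    def subscribe(self, device: str, period_s: float, callback: Callback) -> None:
        key = (device, period_s)
        if key not in self.listeners:
            # First client for this key: open a single front-end request.
            self.front_end_requests.append(key)
        self.listeners[key].append(callback)

    def on_front_end_reply(self, device: str, period_s: float, value: float) -> None:
        # Deliver the one reply to every client sharing the request.
        for callback in self.listeners.get((device, period_s), []):
            callback(value)
```

Two consoles plotting `T:BEAM` at the same rate would then cost the front-end one request rather than two, which is the load reduction the slide describes.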
- Easy access to devices on multiple front-ends
- Friendlier programming environment than VxWorks front-ends
  - Framework transparently handles ACNET communication
  - Access to database, other high level operating system features
- Do not provide hard real-time response; clock events by multicast
- More than 100 in the system; almost all run in the Java framework

Front-Ends
- MOOC – Minimally Object-Oriented Communication
  - Very wide diversity of functionality
  - Processors all VME (VXI) based
  - Very wide diversity of field hardware supported - CAMAC, Multibus, VME, VXI, CIA, ethernet attached instruments, etc.
  - VxWorks Operating System
  - ~325 in the system
- IRM – Internet Rack Monitor
  - Turnkey system with 64 channel ADC + DAC + Digital I/O
  - PSOS operating system
  - ~125 in the system
- Labview – Used for one or few of a kind instrumentation
  - A front-end to the control system; only experts use Labview GUI

Sequencer Overview
- The Sequencer is an accelerator application which automates the very complex sequence of operations required to operate the collider (and other things)
- Operation divided into “Aggregates” for each major step
  - “Inject Protons”, “Accelerate”, etc.
- Aggregates are described in a simple command language
  - Easily readable, not buried in large monolithic code
  - Easily modified by machine experts
  - No need to compile/build etc.
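
To make the idea of an interpreted aggregate concrete, here is a minimal sketch. The `SET`/`CHECK` command syntax, the `X:` device names, and the handler functions are all hypothetical; the real Sequencer command language is not shown in these slides. The sketch also includes the stop-on-error and restart-at-failed-command behaviour that the deck attributes to the Sequencer.

```python
from typing import Callable, Dict, List

Handler = Callable[[List[str]], bool]   # returns True on success


def make_handlers(devices: Dict[str, float]) -> Dict[str, Handler]:
    """Build hypothetical handlers that act on an in-memory device table."""

    def set_device(args: List[str]) -> bool:
        devices[args[0]] = float(args[1])
        return True

    def check_device(args: List[str]) -> bool:
        return devices.get(args[0]) == float(args[1])

    return {"SET": set_device, "CHECK": check_device}


def run_aggregate(text: str, handlers: Dict[str, Handler], start: int = 0) -> int:
    """Run commands from index `start`.

    Returns the index of the first failed command, or -1 if all succeeded.
    """
    commands = [line.split() for line in text.strip().splitlines() if line.strip()]
    for index in range(start, len(commands)):
        name, *args = commands[index]
        if not handlers[name](args):
            return index        # stop on error; the caller may resume here
    return -1


# A made-up aggregate: plain text, editable without any compile/build step.
INJECT_PROTONS = """
SET X:KICKER 1.0
CHECK X:READY 1.0
SET X:INJECT 1.0
"""
```

Because the aggregate is data rather than compiled code, a machine expert could edit the text and rerun it immediately, and an operator could fix the failing condition and resume from the returned index.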
- The sequencer program provides an editor to assist writing aggregates
  - Pick from list of commands
- Aggregate descriptions are stored in the database

Tevatron Collider Operation
[Figure: diagram of collider operation steps. Labels recovered, in extraction order: Squeeze, Flattop, Ramping, Acceleration, Recovery, Cogging, Inject Pbars, Eject Protons, Initiate Collisions, HEP, Remove Halo, Pause HEP, Before Ramp, Proton Removal, Unsqueeze, Flattop2, Pbar Injection porch, Deceleration, Proton Injection porch, Reset, Extraction porch, Inject Protons, Proton Injection tune up, Extract Pbars]

Sequencer Overview
- Each Aggregate contains a number of commands
  - Set, get, check devices
  - Wait for conditions
  - Execute more complex operations implemented as ACL scripts or specially coded commands
  - Start regular programs (for example TeV injection closure)
  - Start plots
  - Send data to shot log
  - …
- The Sequencer program steps through the commands
  - Stops on error
  - Allows operator to restart at failed command
- Fermilab sequencer is not a formal finite state machine
- It is not just for collider operations
  - Configure other parts of complex, studies

Sequencer GUI
[Figure: Sequencer GUI showing the “Inject Protons” aggregate]

Sequencer Overview
- The Fermilab complex employs “distributed intelligence”
  - Separate sequencer instances for TeV, MI, pbar, recycler
  - Lower level programs control complex operations such as collimator movement for scraping
  - Sequencer just issues high level control commands
  - Waveforms preloaded to ramp cards, triggered by clock events
- “States” concept used to provide synchronization

States
- ACNET devices owned by STATES OAC process
- When a state device is set, it is reflected to the rest of the control system by the STATES process
  - Multicast
  - Registered listeners
- Thus can be used to trigger actions, do synchronization
- Condition persists in time
unlike clock events
- Examples
  - Collider State
    - Inject Protons, Inject pbars, Accelerate, HEP, etc.
  - Collimators – Commands for collimator movement
    - Go to Init; Remove Halo, Retract for Store
  - Wire scanner profile calculation complete

Waveform Generators
- Magnets need to do different things at different times in the machine cycle
- Rather than redownload on each cycle, custom function generator cards that preload up to 32 waveforms are used
  - Correct one for a part of the cycle triggered by clock event
  - Waveforms are functions of time and MDAT frame values
  - On board processor does smooth interpolation
- Thus waveforms need not be downloaded at each phase of the machine cycle
  - Enhances reliability
  - Reduced dependence on high level software in stable operation

Clock Events and Waveform Generators
- Example of preloaded hardware triggered by clock events
[Figure: annotated “Clock events”]

Details of a Waveform Generator
[Figure: labels “Clock events”, “Time table”, “Energy table”, “Squeeze table”]
- All three tables play simultaneously

Finite State Machine (Java)
- Graphical Drag&Drop Builder
- State machine description stored in database
- FSM OAC runs machines
- Viewer application for control and monitoring
- Primary current usage is e-cool Pelletron control
  - Keeps track of HV sparks and beam related trips
  - Automates recovery sequence
- More formal state machine than sequencer, perhaps less scalable

SDA
- Sequenced Data Acquisition – Shot Data Analysis
- Record data at each step in collider operation in dedicated database
- Compute and track injection efficiencies, emittance growth, luminosity, …
- Variety of tools to extract, analyze data
  - Web reports created in real time
  - “Supertable” summary – web page + Excel spreadsheet
  - Many predefined plots available on
the web
  - SDA Viewer – spreadsheet like Java application
  - SDA Plotter – plot snapshots and fast time plots
  - Java Analysis Studio input module
  - Java API for custom programs

SDA Reports
[Figure]

Data Loggers – aka “Lumberjack”
- 70 instances running in parallel on individual central nodes
  - Allocated to departments to use as they wish, no central management.
  - Continual demand for more
- ~48,000 distinct devices logged
- Rate up to 15 Hz or on clock or state event (+ delay)
- 80 GB storage per node in MySQL database
  - Stored in compressed binary time blocks in the database
  - Keyed by device, block start time
  - Circular buffer; wraparound time depends on device count/rates
- Data at maximum 1 Hz rate extracted from each logger to “Backup” logger every 24 hours
  - Currently 850 million points extracted per day
  - Backup logger is available online => data accessible forever

Data Loggers
- Logger tasks are Open Access Clients
  - Reads via logger task rather than direct database access
- Variety of retrieval tools available
  - Standard console applications, Console and Java environments
  - Web applications
  - Java Analysis Studio
  - Programmatic APIs
- Retrieval rates typically ~10-30K points/second

Data Logger Plots
[Figure]

Security
- Limit access to the control system
  - Control system network inside a firewall with limited access in/out
- Limit setting ability
  - Privilege classes (roles) defined
  - Roles assigned to users, services, nodes
  - Devices tagged with map of which roles may set it
  - Take logical AND of user/service/node/device mask
  - Allows fine grained control, but in practice
    - Device masks allow all roles to set; flat device hierarchy tedious…
    - A limited number of roles are actually used
- Applications outside MCR start with settings disabled
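
The role-mask check on the Security slide above (logical AND of the user, service, node, and device masks) can be sketched with bit flags. The role names are hypothetical, and the rule that a setting is permitted when at least one role bit survives the AND is an assumption about how the mask result is interpreted.

```python
from enum import IntFlag


class Role(IntFlag):
    # Hypothetical privilege classes; the real role list is not in the slides.
    OPERATOR = 1
    TEV_EXPERT = 2
    CONTROLS = 4


ALL_ROLES = Role.OPERATOR | Role.TEV_EXPERT | Role.CONTROLS


def may_set(user: Role, service: Role, node: Role, device: Role) -> bool:
    """Allow a setting only if some role is present in all four masks."""
    return bool(user & service & node & device)
```

A device mask of `ALL_ROLES` corresponds to the practice the slide mentions, where device masks allow all roles to set and the effective restriction comes from the user, service, and node masks.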
  - Settings must be manually enabled. Times out after some period
  - Applications started outside MCR exit after some time limit

Remote Access
- Console environment via remote X display
  - Settings generally not allowed
- Java applet version of console
- Java apps can be launched from anywhere via Webstart
- Access to inner parts of control system only via VPN
- Servlets provide read access for some applications
- “Secure Controls Framework” – Kerberos authentication for low level communication – allows read access outside firewall
- Access from outside standard application environments:
  - xml-rpc protocol used; bindings to many languages
  - Readings of any device; setting of select “virtual” devices
  - No setting of hardware associated devices
  - Used by all current experiments; both fetch and input data

Accountability
- Considerable usage information is logged
  - Who/what/where/when
  - Most reports web accessible
- Settings
- Errors – reviewed each morning
- Database queries
- Device reads from Java clients
- Application usage
- Clock (TCLK) events
- State transitions

Minimize Restarts
- Minimize need to restart parts of the system:
  - Changes to the device or node database are multicast to the control system; processes do not need to be restarted to pick these up
  - For many front-ends devices can be added without a reboot
- When something is restarted, minimize what else must be restarted
  - Restarts set state transition
  - Monitor (periodic) requests automatically reestablished
  - Application automatically recovers from middle layer restart

Redirection
- The console manager may cause I/O requests to be redirected from the normal hardware.
- Possible alternatives are:
  - An OAC process
    - A default “MIRROR” process reflects settings into readings
  - A Save file
  - An SDA file
- This allows testing basic functionality of some applications without risk
- This provides a potentially good framework for integrating accelerator models, but hasn’t been done…

Some Issues…
- Short device names, no hierarchy
- Structured device properties not transparent
- Old fashioned GUI in console environment
- Lack of standard machine configuration description
- Lack of integration with models
- Lack of integration with Matlab and related tools
- Weak inter-front end communication
- Specific to Fermilab environment, no attempt at portability

Migration Strategy
- By the late 90’s VAXes had been eliminated from Fermilab except in the accelerator control system
- Some migration strategy was essential
  - CDF/D0 run until ~2006-8; BTeV until ~2012+ (since cancelled)
- Strategy would have to be incremental
  - Not possible to replace the entire control system in one shutdown
  - Should be possible to replace a single application at a time
  - New and old parts would have to interoperate
- Should require no or minimal changes to front-ends
  - Most of these had been migrated from Multibus/i386/RTOS to VME/PPC/VxWorks in the 90’s
  - Implied that ACNET protocol would remain primary communication mechanism

Migration Plan
- Architecture developed using the Java language
- Multi-tier
  - Applications
  - Data Acquisition Engines (middle layer)
  - Front-ends (unmodified)
- Applications divided into thin GUI client with business logic in DAE.
  - Predated J2EE and related ideas
  - Communication via Java RMI
  - Drifted back to heavy client, DAE only does device access
- Open Access Clients running in DAE (same Java virtual machine)
- Device request consolidation across all Java clients

Java Migration Progress
- Required infrastructure was created prior to 2001 run start
  - ACNET protocol
  - DAE framework
  - OAC framework
  - Device access library
  - Database access library
  - Application framework
  - Etc.
- SDA implemented; continually evolved to meet needs
  - Data acquisition
  - Analysis tools
- Most VAX OACs converted to Java and new ones written
- Java versions of many utility applications written

Java Migration
- SDA has been very successful in tracking performance of the accelerators
- Data loggers had greatly expanded capacity and rate capability; this has been valuable
- Ease of creating new OACs has been valuable
- Much more information is now available over the web
- Some machine applications have been written, but machine departments did not in general embrace the Java application framework
  - Some key Tevatron applications done
  - Extensive operational usage in electron cooling
  - Pbar source department also has many expert Java applications

Java Application Migration Issues
- Accelerator performance a higher priority
- Number of active applications badly underestimated
- Authors of many applications long gone
- Long transition period in division management
- Development environment much more difficult than VAX
  - Two-tier framework; device access API, development environment
  - These issues were mostly corrected but late
- Separate console managers, not easy to merge
- Primitive graphics => fast; fast application startup
- “If the Java application works differently, I don’t want to learn how to use it.”
- “If the Java application works the same, then why should I use it?”

Console Migration to Linux
- In the fall of 2003, a division wide group was formed to assess the strategy for controls migration
- The base of active applications was determined to be much larger than generally believed; more than 800
- Porting all of these to Java with the resources available, while operating and upgrading the accelerator complex, was deemed impractical
- A port of the VAX console infrastructure and all active VAX applications to Linux was initiated in ~March 2004
- The Java framework continues to be supported and new applications written using it. Nothing backported!

Linux Migration Phases
- Phase I
  - Console infrastructure
  - ACNET communication protocol
  - Core libraries
  - Emulate VAX development environment (MECCA)
  - ~1M lines of code
  - Done entirely by controls department
- Phase II
  - Applications
  - Done incrementally, not en masse
  - In practice, most porting done by controls department personnel
  - Some porting and extensive testing done by other departments
- Phase III
  - Remaining VAX services

Linux Port
- Much harder than anticipated
- Large volume of code - ~3.5M non-comment lines
- Extensive interdependencies – Primary/Secondary application communication
- K&R C -> ANSI C++
  - C++ compiler required for variable argument capability
  - Stricter compiler did catch some actual bugs
  - Insisted all compiler warnings be addressed
- Extensive use of VAX system services
- “BLOBs” in the database and in flat files and over network
  - Floating point issues
  - Structure alignment issues

Port Mechanics
- Scripts developed to make many changes automatically
  - Required manual changes flagged
- Nightly builds with detailed web accessible reports
- Liaison assigned from each department for Phase II
- Database developed with information on each application
  - Sensitivity to operations assigned to each application
  - Status – imported,
compiles, builds, all manual issues addressed, initializes, tested, certified, default Linux
- Testing of sensitive applications requires scheduling
- Certification of sensitive applications requires keeper + department liaison + operations sign-off
- Dual hosted console manager developed that runs applications on correct platform (maintained in database)

Application Tracking
[Figure]

Status
[Figure: plot annotated “Begin Beam Operation” and “Applications Certified”]
- Phase I completed ~late 2005
- Phase II expected to be complete by end of 2006
- Linux usage in operations began at end of shutdown in June 2006
- Very modest impact on operations. No lost collider stores!
- Phase III expected to be complete by mid 2007 (next shutdown)

Modernizing BPMs and BLMs
- BPMs in Recycler, Tevatron, Main Injector, Transfer Lines
  - Performance issues
  - Obsolete hardware technology
  - Need for more BPMs in transfer lines
  - Replaced with a digital system using Echotek VME boards
- Beam Loss Monitors in Tevatron and Main Injector
  - Obsolete hardware technology
  - Additional features needed with more beam in MI, Tevatron
  - Replaced with new custom system (still in progress)
- All were replaced in a staged manner without interruption to operations
- Library support routines provided common data structure for both old and new systems during the transition period
- Enormous improvement in performance and reliability

Collider Peak Luminosity
[Figure]

Collider Integrated Luminosity
[Figure]
- Recent observation of Bs mixing by CDF

NUMI Performance
[Figure: total NuMI protons (xE17) versus date, starting 3 Dec 2004; date axis ticks run from 5/1/2005 to 9/23/2006, vertical axis from 0 to 2000]
- Best current measurement of neutrino oscillation despite problems with target, horn, etc.

MiniBooNE Performance
[Figure]

Accelerator Future
- Tevatron collider is scheduled to run for ~3 more years
- Emphasis for complex will then be neutrino program
  - Recycler + pbar accumulator get converted to proton stackers for NUMI – up to ~1 MW average beam power
  - NOvA experiment approved – 2nd far detector in Minnesota
- ILC accelerator test facilities under construction
  - These will not use ACNET but instead probably some combination of EPICS and the DESY DOOCS system used at TTF
  - Strategy not yet determined – broad collaborative facility
- Some interoperation efforts with EPICS; bridge in middle layer
  - Evaluating EPICS 4 “Data Access Layer” (DAL)

Java Parameter Page/EPICS Devices
[Figure]

Summary
- The control system has met the Run II Challenge
  - Accelerator complex has gone well beyond previous runs in performance and diversity of operational modes
- Unified control system with solid frameworks and distributed intelligence was instrumental in this success
- During accelerator operation the control system has been substantially modernized
  - Incremental strategy essential
  - It is difficult to upgrade a large system while operating!
  - It is difficult to get people to upgrade a system that works!
- The control system will finally be VAX-free at the next major shutdown, currently scheduled for June 2007
- Control system will be viable for the projected life of the complex