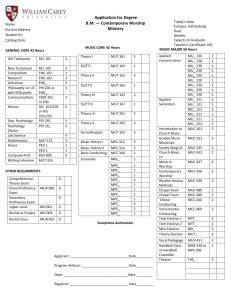

Variable and Value Ordering for MPE Search

Sajjad Siddiqi and Jinbo Huang

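Most Probable Explanation (MPE)

N : Bayesian network

X : {X,Y,Z}

e : {X=x}

[Figure: network N over the variables X, Y, Z]

[Table: joint distribution Pr(X,Y,Z) over the eight instantiations of X, Y, Z, with probabilities 0.05, 0.3, 0.05, 0.1, 0.1, 0.2, 0.1, 0.1; "max" marks the most probable row consistent with e]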
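A minimal sketch of what an MPE query computes, assuming the joint table is available explicitly. The four probabilities are the ones shown under X=x in the search tree of the slides; the pairing of each probability with particular values of Y and Z, the `~` notation for a negated value, and the helper name `mpe` are assumptions made for illustration.

```python
# Hypothetical rows of Pr(X,Y,Z) with X=x; "~" marks a negated value.
rows_with_x = {
    ("x", "y", "z"): 0.1,
    ("x", "y", "~z"): 0.2,
    ("x", "~y", "z"): 0.1,
    ("x", "~y", "~z"): 0.1,
}


def mpe(table, evidence):
    """Return the most probable row consistent with the evidence.

    `evidence` maps a position in the row tuple to the required value.
    """
    consistent = {
        inst: p
        for inst, p in table.items()
        if all(inst[pos] == val for pos, val in evidence.items())
    }
    best = max(consistent, key=consistent.get)
    return best, consistent[best]


best_instantiation, best_probability = mpe(rows_with_x, {0: "x"})
print(best_instantiation, best_probability)
```

Enumerating the table like this is exponential in the number of variables, which is why the inference and search methods on the following slides matter.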

Exact MPE by Inference

Variable Elimination

– Bucket Elimination

Exponential in the treewidth of the elimination order.

Compilation

– Decomposable Negation Normal Form (DNNF)

Exploits local structure so treewidth is not necessarily the limiting factor.

Both methods can run out of either time or memory.
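Variable elimination for MPE can be sketched as max-product elimination: multiply the factors in a variable's bucket, then maximize the variable out. The factor representation (a tuple of variable names plus a dictionary keyed by value tuples), the function names, and the two-node network A → B with its numbers are all hypothetical.

```python
from itertools import product


def eliminate_max(factors, var, domains):
    """Multiply all factors mentioning `var`, then maximize `var` out."""
    bucket = [f for f in factors if var in f[0]]
    rest = [f for f in factors if var not in f[0]]
    scope = sorted({v for vars_, _ in bucket for v in vars_} - {var})
    table = {}
    for values in product(*(domains[v] for v in scope)):
        assignment = dict(zip(scope, values))
        best = 0.0
        for x in domains[var]:
            assignment[var] = x
            value = 1.0
            for vars_, tbl in bucket:
                value *= tbl[tuple(assignment[v] for v in vars_)]
            best = max(best, value)
        table[values] = best
    return rest + [(tuple(scope), table)]


def mpe_value(factors, order, domains):
    """Eliminate every variable in `order`; the product of what is left is the MPE value."""
    for var in order:
        factors = eliminate_max(factors, var, domains)
    result = 1.0
    for _, tbl in factors:
        result *= tbl[()]
    return result


# Hypothetical network A -> B with binary variables.
domains = {"A": (0, 1), "B": (0, 1)}
factors = [
    (("A",), {(0,): 0.6, (1,): 0.4}),
    (("A", "B"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.8}),
]
value = mpe_value(factors, ["B", "A"], domains)
```

The intermediate table built in `eliminate_max` ranges over every other variable in the bucket, which is where the exponential dependence on the width of the elimination order comes from.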

Exact MPE by Searching

[Figure: search tree rooted at X=x, branching on Y and then on Z; the four leaves have probabilities 0.1, 0.2, 0.1, 0.1]

Exact MPE by Searching

Depth-First Search

– Exponential in the number of variables X.

Depth-First Branch-and-Bound Search

– Computes an upper bound on any extension to the current assignment.

– Backtracks when upper bound <= current solution.

– Reduces complexity of search.
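The branch-and-bound loop described above can be sketched as follows. The function names, the table over Y and Z, and the bound oracle (here simply the best consistent table entry, which is a valid but unrealistically tight upper bound) are assumptions for illustration; the search starts from a trivial solution of 0.

```python
def dfbnb(variables, domains, prob, upper_bound):
    """Depth-first branch-and-bound maximizing prob(full assignment)."""
    best = {"value": 0.0, "assignment": None, "leaves": 0}

    def search(i, assignment):
        if i == len(variables):
            best["leaves"] += 1
            value = prob(assignment)
            if value > best["value"]:
                best["value"], best["assignment"] = value, dict(assignment)
            return
        var = variables[i]
        for val in domains[var]:
            assignment[var] = val
            # Explore only if the bound can still beat the current solution.
            if upper_bound(assignment) > best["value"]:
                search(i + 1, assignment)
            del assignment[var]

    search(0, {})
    return best["assignment"], best["value"], best["leaves"]


# Hypothetical probabilities of the extensions of X=x; "~" marks a negated value.
table = {("y", "z"): 0.1, ("y", "~z"): 0.2, ("~y", "z"): 0.1, ("~y", "~z"): 0.1}


def prob(a):
    return table[(a["Y"], a["Z"])]


def upper_bound(a):
    return max(p for (y, z), p in table.items()
               if a.get("Y", y) == y and a.get("Z", z) == z)


assignment, value, leaves = dfbnb(
    ["Y", "Z"], {"Y": ("y", "~y"), "Z": ("z", "~z")}, prob, upper_bound)
```

In this toy run the whole subtree under the second value of Y is skipped, because its bound of 0.1 does not exceed the solution of 0.2 already found.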

Exact MPE by B-n-B Search

[Figure: the same search tree rooted at X=x, with current solution = 0.2 found at a leaf; a branch is pruned if its upper bound <= 0.2]

Computing Bounds: MiniBuckets

Ignores certain dependencies amongst variables:

– New network is easier to solve.

– Solution grows only in one direction: the relaxed MPE value can only increase, so it is an upper bound.

Splits a bucket into two or more mini-buckets.

– Focuses on generating tighter bounds.

Mini-buckets is a special case of node splitting.
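Why splitting a bucket yields an upper bound can be seen on a single bucket: maximizing each mini-bucket separately can only overestimate the maximum of the product, provided the functions are non-negative. The two functions f(x, y) and g(y, z) and their numbers below are hypothetical.

```python
from itertools import product

# Hypothetical non-negative functions in the bucket of Y.
f = {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.3, (1, 1): 0.7}  # f(x, y)
g = {(0, 0): 0.2, (0, 1): 0.8, (1, 0): 0.6, (1, 1): 0.4}  # g(y, z)


def exact_bucket(x, z):
    """max over y of f(x, y) * g(y, z): the exact elimination of Y."""
    return max(f[(x, y)] * g[(y, z)] for y in (0, 1))


def mini_bucket(x, z):
    """Each mini-bucket maximizes over its own copy of Y."""
    return max(f[(x, y)] for y in (0, 1)) * max(g[(y, z)] for y in (0, 1))


checks = [(exact_bucket(x, z), mini_bucket(x, z))
          for x, z in product((0, 1), repeat=2)]
```

Every pair in `checks` has the mini-bucket value at least as large as the exact one, at the price of never building a table over x, y and z together.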

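Node Splitting

[Figure: network N over X, Y, Z, Q, R (left) and the split network N′ (right), in which Y gains clones $\hat{Y}_1$, $\hat{Y}_2$ and Q gains clones $\hat{Q}_1$, $\hat{Q}_2$]

$\hat{Y}_1$ and $\hat{Y}_2$ are clones of Y: fully split

split variables = {Q,Y}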

Node Splitting

e: an instantiation of variables X in N.

$\hat{e}$: a compatible assignment to their clones in N′

e.g. if e = {Y=y}, then $\hat{e}$ = {$\hat{Y}_1$=y, $\hat{Y}_2$=y}

then

$\mathrm{MPE}_p(N, e) \le \beta \cdot \mathrm{MPE}_p(N', e, \hat{e})$

$\beta$ = total number of instantiations of clone variables
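The bound can be checked by brute force on a tiny example. This sketch assumes a hypothetical network Y → X, Y → Z in which the edge Y → Z is split, so that Z depends on a single clone of Y; giving the clone a uniform prior is also an assumption of the sketch, as are all the numbers.

```python
from itertools import product

pr_y = {0: 0.3, 1: 0.7}
pr_x_given_y = {(0, 0): 0.8, (1, 0): 0.2, (0, 1): 0.4, (1, 1): 0.6}  # key (x, y)
pr_z_given_y = {(0, 0): 0.1, (1, 0): 0.9, (0, 1): 0.5, (1, 1): 0.5}  # key (z, y)
clone_prior = {0: 0.5, 1: 0.5}  # assumed uniform prior on the clone of Y


def mpe_original():
    """MPE probability of the original network N, by enumeration."""
    return max(pr_y[y] * pr_x_given_y[(x, y)] * pr_z_given_y[(z, y)]
               for x, y, z in product((0, 1), repeat=3))


def mpe_split():
    """MPE probability of the split network, where Z depends on clone c."""
    return max(pr_y[y] * pr_x_given_y[(x, y)]
               * clone_prior[c] * pr_z_given_y[(z, c)]
               for x, y, z, c in product((0, 1), repeat=4))


beta = len(clone_prior)  # number of instantiations of the single clone
bound = beta * mpe_split()
```

Here the original MPE probability is 0.216 and the bound is 0.378: the split network is free to give Y and its clone different values, which is exactly what makes it a relaxation.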

Computing Bounds: Node Splitting (Choi et al., 2007)

Split network is easier to solve; its MPE computes the bound.

Search performed only over the ‘split variables’ instead of all variables.

Focuses on good network relaxations, trying to reduce the number of splits.

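B-n-B Search for MPE

[Figure: search tree over the split variables Y and Q, with a bound or an exact solution computed at each node]

Root: MPE(N′, {X=x}) gives a bound.

After branching on Y: MPE(N′, {X=x, Y=y, $\hat{Y}_1$=y, $\hat{Y}_2$=y}) gives a bound.

After branching on Q: MPE(N′, {X=x, Y=y, $\hat{Y}_1$=y, $\hat{Y}_2$=y, Q=q, $\hat{Q}_1$=q, $\hat{Q}_2$=q}) gives an exact solution.

$\beta$ = 4, for two split variables with binary domain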

B-n-B Search for MPE

Leaves of the search tree give candidate MPE solutions.

Elsewhere we get upper bounds to prune the search.

A branch gets pruned if bound <= current solution.

Choice of Variables to Split

Reduce the number of split variables.

– Heuristic based on the reduction in the size of jointree cliques and separators.

Split enough variables to reduce the treewidth to a certain threshold (at which point the network is easy to solve).

Variable and Value Ordering

Reduce search space using an efficient variable and value ordering.

Choi et al. (2007) do not address this and use a neutral heuristic.

Several heuristics are analyzed and their powers combined to produce an effective heuristic.

Scales up the technique.

Entropy-based Ordering

Computation

$\Pr(Y=y),\ \Pr(Y=\bar{y})$

$\Pr(Q=q),\ \Pr(Q=\bar{q})$

entropy(Y), entropy(Q)

Do the same for clones and get average probabilities:

$\Pr(Y=y) = [\Pr(Y=y) + \Pr(\hat{Y}_1=y) + \Pr(\hat{Y}_2=y)]/3$ (averaged over Y and its two clones)

Entropy-based Ordering

Computation

Prefer Y over Q if entropy(Y) < entropy(Q).

Prefer Y=y over Y=$\bar{y}$ if $\Pr(Y=y) > \Pr(Y=\bar{y})$.

Static and Dynamic versions.

Favor those instantiations that are more likely to be MPEs.
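The two preferences above can be sketched as follows, assuming the marginals of each split variable and its clones have already been computed. The marginal values, the `~` notation for a negated value, and the helper names are hypothetical.

```python
import math


def average(dists):
    """Average the distributions of a variable and its clones, value by value."""
    return {val: sum(d[val] for d in dists) / len(dists) for val in dists[0]}


def entropy(dist):
    """Shannon entropy in bits; zero-probability values contribute nothing."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Hypothetical marginals: the variable first, then its two clones.
marginals = {
    "Y": average([{"y": 0.9, "~y": 0.1},
                  {"y": 0.85, "~y": 0.15},
                  {"y": 0.95, "~y": 0.05}]),
    "Q": average([{"q": 0.6, "~q": 0.4},
                  {"q": 0.55, "~q": 0.45},
                  {"q": 0.65, "~q": 0.35}]),
}

# Lower entropy first for variables; higher probability first for values.
variable_order = sorted(marginals, key=lambda v: entropy(marginals[v]))
value_order = {v: sorted(d, key=d.get, reverse=True) for v, d in marginals.items()}
```

In the static version these orders would be computed once before the search; the dynamic version would recompute the marginals at search nodes, which is where the extra cost comes from.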

Entropy-based Ordering

Probabilities computed using DNNF:

– Evaluation and differentiation of arithmetic circuits (AC)

Experiments:

– Static heuristic: significantly faster than the neutral heuristic.

– Dynamic heuristic: generally too expensive to compute, and slower.

Nogood Learning

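g = {X=x, Y=y, Z=z} is a nogood if

$\mathrm{MPE}_p(N', g, \hat{g}) \le$ current solution

[Figure: search path X=x, Y=y, Z=z with current solution=1.0; bound=1.5 at the root, bound=1.3 after X=x, bound=1.2 after Y=y, bound=0.5 after Z=z]

let g′ = g \ {Y=y}; if

$\mathrm{MPE}_p(N', g', \hat{g}') \le$ current solution

then g = g′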
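The shrinking step can be sketched as a loop that tries to drop one assignment at a time and keeps the smaller set whenever the bound still fails to beat the current solution. The function name, the order in which assignments are tried, and the stand-in bound oracle are assumptions; in the actual setting each call to `bound` is an MPE computation on the split network.

```python
def minimize_nogood(nogood, bound, current_solution):
    """Drop assignments from a nogood while it remains a nogood."""
    g = dict(nogood)
    for var in list(g):
        candidate = {v: val for v, val in g.items() if v != var}
        if bound(candidate) <= current_solution:
            g = candidate  # still a nogood without `var`
    return g


def bound(assignment):
    """Hypothetical bound oracle: only X=x together with Z=z gives a low bound."""
    if assignment.get("X") == "x" and assignment.get("Z") == "z":
        return 0.5
    return 1.5


minimal = minimize_nogood({"X": "x", "Y": "y", "Z": "z"}, bound, 1.0)
```

With this oracle, Y=y is dropped and the nogood shrinks to {X=x, Z=z}. Each attempted removal costs one more bound computation.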

Nogood-based Ordering

Scores:

S(X=x) = number of occurrences in nogoods

$S(X) = [S(X=x) + S(X=\bar{x})]/2$ (binary variables)

Dynamic Ordering:

Prefer higher scores.

Impractical: overhead of repeated bound computation during learning.
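A minimal sketch of these scores, assuming the learned nogoods are stored as dictionaries from variable to value. The nogoods themselves and the `~` notation for a negated value are hypothetical.

```python
from collections import Counter

# Hypothetical learned nogoods.
nogoods = [
    {"X": "x", "Z": "z"},
    {"X": "x", "Y": "~y"},
    {"X": "~x", "Z": "z"},
]

# S(X=x): number of nogoods in which the assignment occurs.
value_score = Counter((var, val) for g in nogoods for var, val in g.items())


def variable_score(var, domain):
    """S(X): average of the value scores over the domain of X."""
    return sum(value_score[(var, val)] for val in domain) / len(domain)


domains = {"X": ("x", "~x"), "Y": ("y", "~y"), "Z": ("z", "~z")}
variable_order = sorted(
    domains, key=lambda v: variable_score(v, domains[v]), reverse=True)
```

Variables with higher scores come first, so here the order is X, Z, Y.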

Score-based Ordering

A more effective approach based on nogoods.

Scores of variables/values tell how quickly a nogood can be obtained (backtrack early).

[Figure: search path X=x, Y=y, Z=z with bound=1.5 at the root, bound=1.3 after X=x, bound=1.2 after Y=y, bound=0.5 after Z=z]

S(X=x) += 1.5-1.3=0.2

S(Y=y) += 1.3-1.2=0.1

S(Z=z) += 1.2-0.5=0.7
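The score updates shown above can be sketched as crediting each assignment with the drop in the bound that it caused. The bound values are the ones on the slide; the function name and the data layout are assumptions.

```python
from collections import defaultdict

scores = defaultdict(float)


def record_path(path, bounds):
    """Credit each assignment on a search path with the bound reduction it caused.

    `path` lists (variable, value) pairs in the order they were assigned;
    `bounds` holds the bound before the first assignment, then after each one.
    """
    for (var, val), before, after in zip(path, bounds, bounds[1:]):
        scores[(var, val)] += before - after


record_path([("X", "x"), ("Y", "y"), ("Z", "z")], [1.5, 1.3, 1.2, 0.5])
```

Unlike nogood learning, this reuses bounds that the search computes anyway, so no extra bound computations are needed.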

Improved Heuristic

Periodically reinitialize scores (focus on recent past).

Use static entropy-based order as the initial order of variables/values.
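One way to combine the two ideas is sketched below: scores are cleared after a fixed number of updates, and the static order decides whenever scores are tied, including at the start. The class name, measuring the period in number of updates, and using the static order as a tie-breaker are assumptions of this sketch rather than details given in the slides.

```python
class ScoreBoard:
    """Variable scores with periodic reset and a static order as fallback."""

    def __init__(self, static_order, reset_period):
        self.static_rank = {v: i for i, v in enumerate(static_order)}
        self.reset_period = reset_period
        self.scores = {v: 0.0 for v in static_order}
        self.updates = 0

    def add(self, var, amount):
        self.scores[var] += amount
        self.updates += 1
        # Forget old evidence so the order reflects the recent past.
        if self.updates % self.reset_period == 0:
            self.scores = {v: 0.0 for v in self.scores}

    def order(self):
        """Higher score first; ties fall back to the static order."""
        return sorted(self.scores,
                      key=lambda v: (-self.scores[v], self.static_rank[v]))


board = ScoreBoard(["Y", "Q", "R"], reset_period=3)
initial_order = board.order()
board.add("R", 0.7)
order_after_one_update = board.order()
board.add("Q", 0.1)
board.add("Y", 0.2)  # third update triggers a reset
order_after_reset = board.order()
```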

Experimental Setup

Intel Core Duo 2.4 GHz + AMD Athlon 64 X2 Dual Core Processor 4600+, both with 4 GB of RAM running Linux.

A memory limit of 1 GB on each MPE query.

C2D DNNF compiler [Darwiche, 2004; 2005].

Trivial seed of 0 as the initial MPE solution to start the search.

Keep splitting the network variables until treewidth <= 10.

Comparing search spaces on grid networks

Comparing search time on grid networks

Comparing nogood learning and score-based DVO on grid network

Results on grid networks, 25 queries per network

Random networks

20 queries per network

Networks for genetic linkage analysis, which are some of the hardest networks

Only SC-DVO succeeded

Comparison with SamIam on grid networks

Comparison with (Marinescu & Dechter, 2007) on grid networks

(SMBBF – Static mini-bucket best first)

Parameter ‘i=20’, where ‘i’ controls the size of the mini-bucket

We tried a few cases from random and genetic linkage analysis networks which SMBBF could not solve (4 random networks of sizes 100, 110, 120, and 130 and pedigree13 from the genetic linkage analysis network).

Conclusion

Novel and efficient heuristic for dynamic variable ordering for computing the MPE in Bayesian networks.

A significant improvement in time and space over less sophisticated heuristics and other MPE tools.

Many hard network instances solved for the first time.