Powerpoint - Department of Statistics


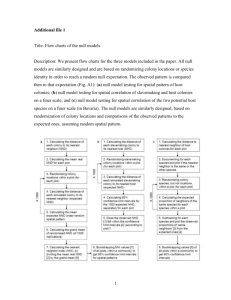

Score Tests in Semiparametric Models

Raymond J. Carroll
Department of Statistics
Faculties of Nutrition and Toxicology
Texas A&M University
http://stat.tamu.edu/~carroll
Papers available at my web site

Texas is surrounded on all sides by foreign countries: Mexico to the south and the United States to the east, west and north

[Map and photos: Palo Duro Canyon, the Grand Canyon of Texas; West Texas; East Texas; Wichita Falls ("Wichita Falls, that's my hometown"); Guadalupe Mountains National Park; College Station, home of Texas A&M University; I-45; Big Bend National Park; I-35; Palo Duro Canyon of the Red River]

Co-Authors
• Arnab Maity
• Nilanjan Chatterjee
• Kyusang Yu
• Enno Mammen

Outline
• Parametric Score Tests
• Straightforward extension to semiparametric models
• Profile Score Testing
• Gene-Environment Interactions
• Repeated Measures

Parametric Models
• Parametric Score Tests
• Parameter of interest = $\beta$
• Nuisance parameter = $\theta$
• Interested in testing whether $\beta = 0$
• Log-likelihood function = $L(Y, X, Z, \beta, \theta)$
• Score tests are convenient when it is easy to maximize the null loglikelihood $\sum_{i=1}^n L(Y_i, X_i, Z_i, 0, \theta)$
• But hard to maximize the entire loglikelihood $\sum_{i=1}^n L(Y_i, X_i, Z_i, \beta, \theta)$
• Let $\hat\theta(\beta)$ be the MLE for a given value of $\beta$
• Let subscripts denote derivatives
• Then the normalized score test statistic is just

$$S = n^{-1/2} \sum_{i=1}^n L_\beta\{Y_i, X_i, Z_i, 0, \hat\theta(0)\}$$

• Let $I$ be the Fisher Information evaluated at $\beta = 0$, with sub-matrices such as $I_{\beta\theta}$
• Then using likelihood properties, the score statistic under the null hypothesis is asymptotically equivalent to

$$n^{-1/2} \sum_{i=1}^n \left[ L_\beta\{Y_i, X_i, Z_i, 0, \theta\} - I_{\beta\theta} I_{\theta\theta}^{-1} L_\theta\{Y_i, X_i, Z_i, 0, \theta\} \right]$$

• The asymptotic variance of the score statistic is

$$T = I_{\beta\beta} - I_{\beta\theta} I_{\theta\theta}^{-1} I_{\theta\beta}$$

• Remember, all computed at the null $\beta = 0$
• Under the null, if $\beta$ has dimension $p$, then $S^\top T^{-1} S \Rightarrow \chi^2_p$
• The key point about the score test is that all computations are done at the null hypothesis
• Thus, if maximizing the loglikelihood at the null is easy, the score test is easy to implement.

Semiparametric Models
• Now the loglikelihood has the form $L\{Y_i, X_i, \beta, \theta(Z_i)\}$
• Here, $\theta(\cdot)$ is an unknown function.
• The obvious score statistic is

$$n^{-1/2} \sum_{i=1}^n L_\beta\{Y_i, X_i, 0, \hat\theta(Z_i, 0)\}$$

• where $\hat\theta(Z_i, 0)$ is an estimate under the null
• Estimating $\theta(\cdot)$ in a loglikelihood like $L\{Y_i, X_i, 0, \theta(Z_i)\}$ is standard
• Kernel methods use local likelihood
• Splines use penalized loglikelihood

Simple Local Likelihood
• Let $K$ be a density function, and $h$ a bandwidth
• Your target is the function at $z$
• The kernel weights for local likelihood are $K\left(\frac{Z_i - z}{h}\right)$
• If $K$ is the uniform density, only observations within $h$ of $z$ get any weight

[Figure: only observations within h = 0.25 of x = -1.0 get any weight]

• Near $z$, the function should be nearly linear
• The idea then is to do a likelihood estimate local to $z$ via weighting, i.e., maximize over $(\alpha_0, \alpha_1)$

$$\sum_{i=1}^n K\left(\frac{Z_i - z}{h}\right) L\{Y_i, X_i, 0, \alpha_0 + \alpha_1 (Z_i - z)\}$$

• Then announce $\hat\theta(z) = \hat\alpha_0$
• It is well-known that the optimal bandwidth is $h \propto n^{-1/5}$
• The bandwidth can be estimated from data using such things as cross-validation

Score Test Problem
• The score statistic is

$$S = n^{-1/2} \sum_{i=1}^n L_\beta\{Y_i, X_i, 0, \hat\theta(Z_i, 0)\}$$

• Unfortunately, when $h \propto n^{-1/5}$ this statistic is no longer asymptotically normally distributed with mean zero
• The asymptotic test level = 1!
• The problem can be fixed up in an ad hoc way by setting $h \propto n^{-1/3}$
• This defeats the point of the score test, which is to use standard methods, not ad hoc ones.
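The local likelihood recipe in the Simple Local Likelihood slides (kernel weights, a local linear fit near z, then report the intercept) can be sketched for one concrete case. This is a minimal sketch, assuming a null model that is nonparametric logistic regression with a logistic link, a uniform kernel, and Newton-Raphson for the weighted maximization. The function name `local_logistic`, the small ridge term, and the simulated data are illustrative assumptions, not part of the talk.

```python
import numpy as np

def local_logistic(z0, Z, Y, h, n_iter=25):
    """Local-linear logistic likelihood estimate of theta at the point z0."""
    u = (Z - z0) / h
    w = np.where(np.abs(u) <= 1.0, 0.5, 0.0)        # uniform density on [-1, 1]
    D = np.column_stack([np.ones_like(Z), Z - z0])  # columns: 1, Z_i - z0
    alpha = np.zeros(2)                             # (alpha_0, alpha_1)
    for _ in range(n_iter):                         # Newton-Raphson steps
        p = 1.0 / (1.0 + np.exp(-(D @ alpha)))
        grad = D.T @ (w * (Y - p))                  # weighted score
        hess = (D * (w * p * (1.0 - p))[:, None]).T @ D
        # Tiny ridge (an assumption) guards against a singular local Hessian.
        alpha = alpha + np.linalg.solve(hess + 1e-8 * np.eye(2), grad)
    return alpha[0]                                 # theta_hat(z0) = alpha_0_hat

# Illustrative data: theta(z) = sin(2z), Z uniform on [-2, 2].
rng = np.random.default_rng(0)
n = 20000
Z = rng.uniform(-2.0, 2.0, n)
Y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-np.sin(2.0 * Z)))).astype(float)
print(local_logistic(0.5, Z, Y, h=0.25), np.sin(1.0))
```

With the uniform kernel, only observations with |Z_i - z0| <= h enter the fit, which matches the weighting described in the slides.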
Profiling in Semiparametrics
• In profile methods, one does a series of steps
• For every $\beta$, estimate the function by using local likelihood to maximize

$$\sum_{i=1}^n K\left(\frac{Z_i - z}{h}\right) L\{Y_i, X_i, \beta, \alpha_0 + \alpha_1 (Z_i - z)\}$$

• Call it $\hat\theta(z, \beta)$
• Then maximize the semiparametric profile loglikelihood

$$n^{-1/2} \sum_{i=1}^n L\{Y_i, X_i, \beta, \hat\theta(Z_i, \beta)\}$$

• Often difficult to do the maximization, hence the need to do score tests
• The semiparametric profile loglikelihood has many of the same features as profiling does in parametric problems.
• The key feature is that it is a projection, so that it is orthogonal to the score for $\theta(Z)$, or to any function of $Z$ alone.
• The semiparametric profile score is

$$n^{-1/2} \sum_{i=1}^n \frac{\partial}{\partial\beta} L\{Y_i, X_i, \beta, \hat\theta(Z_i, \beta)\}\Big|_{\beta=0} \approx n^{-1/2} \sum_{i=1}^n \left[ L_\beta\{Y_i, X_i, 0, \hat\theta(Z_i, 0)\} + L_\theta\{Y_i, X_i, 0, \hat\theta(Z_i, 0)\} \frac{\partial}{\partial\beta} \hat\theta(Z_i, \beta)\Big|_{\beta=0} \right]$$

• The problem is to compute $\frac{\partial}{\partial\beta} \hat\theta(Z_i, \beta)\big|_{\beta=0}$
• Without doing profile likelihood!
• The definition of local likelihood is that for every $\beta$,

$$0 = E\left[ L_\theta\{Y, X, \beta, \theta(Z, \beta)\} \mid Z = z \right]$$

• Differentiate with respect to $\beta$.
• Then

$$\frac{\partial}{\partial\beta} \theta(z, 0) = - \frac{E\left[ L_{\beta\theta}\{Y, X, 0, \theta(Z, 0)\} \mid Z = z \right]}{E\left[ L_{\theta\theta}\{Y, X, 0, \theta(Z, 0)\} \mid Z = z \right]}$$

• Algorithm: Estimate numerator and denominator by nonparametric regression
• All done at the null model!
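The algorithm stated above (estimate the numerator and the denominator by nonparametric regression) can be illustrated in a simple special case. This is a sketch under stated assumptions: a scalar X, a logistic model with linear predictor Xβ + θ(Z), so that at the null L_θθ = -p(1-p) and L_βθ = -X p(1-p) with p = H{θ(Z)}, and a Gaussian-kernel Nadaraya-Watson smoother. The names `nw` and `theta_beta_null` are hypothetical, and `p0` stands for fitted null probabilities obtained by any smoother.

```python
import numpy as np

def nw(z0, Z, V, h):
    """Nadaraya-Watson estimate of E[V | Z = z0] at each point of z0."""
    w = np.exp(-0.5 * ((z0[:, None] - Z[None, :]) / h) ** 2)  # Gaussian kernel
    return (w @ V) / w.sum(axis=1)

def theta_beta_null(Z, X, p0, h):
    """Ratio estimate of d theta(Z_i, beta)/d beta at beta = 0 (logistic case)."""
    v = p0 * (1.0 - p0)
    num = nw(Z, Z, -X * v, h)   # estimates E[L_{beta theta} | Z = Z_i]
    den = nw(Z, Z, -v, h)       # estimates E[L_{theta theta} | Z = Z_i]
    return -num / den

# Illustrative data: X depends on Z, null probabilities taken as known here.
rng = np.random.default_rng(0)
n = 2000
Z = rng.uniform(-2.0, 2.0, n)
X = Z + rng.standard_normal(n)
p0 = 1.0 / (1.0 + np.exp(-np.sin(2.0 * Z)))
tb = theta_beta_null(Z, X, p0, h=0.2)
print(tb[:5], -Z[:5])
```

In this special case p depends on Z alone, so the ratio reduces to -E[X | Z = z]; the printed pairs should therefore be close to each other away from the boundary.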
Results
• There are two things to estimate at the null model: $\hat\theta(Z, 0)$ and

$$\frac{\partial}{\partial\beta} \hat\theta(Z, 0) = \hat\theta_\beta(Z, 0)$$

• Any method can be used without affecting the asymptotic properties
• Not true without profiling
• We have implemented the test in some cases using the following methods:
  • Kernels
  • Splines from gam in Splus
  • Splines from R
  • Penalized regression splines
• All results are similar. This is as it should be: because we have projected and profiled, the method of fitting does not matter
• The null distribution of the score test is asymptotically the same as if the following were known: $\theta(Z)$ and

$$\frac{\partial}{\partial\beta} \theta(Z, 0) = \theta_\beta(Z, 0)$$

• This means its variance is the same as the variance of

$$n^{-1/2} \sum_{i=1}^n \left[ L_\beta\{Y_i, X_i, 0, \theta(Z_i)\} + L_\theta\{Y_i, X_i, 0, \theta(Z_i)\}\, \theta_\beta(Z_i, 0) \right]$$

• This is trivial to estimate
• If you use different methods, the asymptotic variance may differ
• With this substitution, the semiparametric score test requires no undersmoothing
• Any method works
• How does one do undersmoothing for a spline or an orthogonal series?
• Finally, the method is a locally semiparametric efficient test for the null hypothesis
• The power is: the method of nonparametric regression that you use does not matter

Example
• Colorectal adenoma: a precursor of colorectal cancer
• N-acetyltransferase 2 (NAT2): plays an important role in detoxification of certain aromatic carcinogens present in cigarette smoke
• Case-control study of colorectal adenoma
• Association between colorectal adenoma and the candidate gene NAT2 in relation to smoking history.
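The variance statement in the Results slides (the statistic behaves like a normalized sum of terms L_β + L_θ θ_β, whose variance is easy to estimate) can be made concrete. This is a minimal sketch, assuming a scalar β and a logistic model, so that each summand is (X_i + θ_β(Z_i, 0))(Y_i - p_i). The function name `profiled_score_test` is hypothetical, and the uncentered second moment is used as the variance estimate because the summands have mean zero under the null.

```python
import math
import numpy as np

def profiled_score_test(X, Y, p0, theta_beta):
    """Scalar-beta profiled score test, computed entirely at the null fit.

    p0         : fitted null probabilities H{theta_hat(Z_i, 0)}
    theta_beta : estimates of d theta(Z_i, beta)/d beta at beta = 0
    """
    psi = (X + theta_beta) * (Y - p0)         # L_beta + L_theta * theta_beta
    n = len(psi)
    S = psi.sum() / math.sqrt(n)              # normalized score
    T = np.mean(psi ** 2)                     # variance estimate at the null
    stat = S ** 2 / T                         # compared with chi-square, 1 df
    pval = math.erfc(math.sqrt(stat / 2.0))   # P(chi2_1 > stat)
    return stat, pval

# Tiny illustrative call with made-up numbers.
X = np.array([1.0, -1.0, 1.0, -1.0])
Y = np.array([1.0, 0.0, 1.0, 0.0])
print(profiled_score_test(X, Y, p0=np.full(4, 0.5), theta_beta=np.zeros(4)))
```

For a p-dimensional β the same construction would use the outer products of the summands for T and the quadratic form S^T T^{-1} S, referred to a chi-square distribution with p degrees of freedom.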
Example
• Y = colorectal adenoma
• X = genetic information (below)
• Z = years since stopping smoking

More on the Genetics
• Subjects genotyped for six known functional SNPs related to NAT2 acetylation activity
• Genotype data were used to construct diplotype information, i.e., the pair of haplotypes the subjects carried along their pair of homologous chromosomes
• We identified the 14 most common diplotypes
• We ran analyses on the k most common ones, for k = 1,…,14

The Model
• The model is a version of what is done in genetics, namely for arbitrary $\gamma$,

$$\mathrm{pr}(Y = 1 \mid X, Z) = H\{X^\top\beta + \theta(Z) + \gamma X^\top\beta\, \theta(Z)\}$$

• The interest is in the genetic effects, so we want to know whether $\beta = 0$
• However, we want more power if there are interactions
• For the moment, pretend $\gamma$ is fixed
• This is an excellent example of why score testing: the model is very difficult to fit numerically
• With extensions to such things as longitudinal data and additive models, it is nearly impossible to fit
• Note however that under the null, the model is simple nonparametric logistic regression

$$\mathrm{pr}(Y = 1 \mid X, Z) = H\{\theta(Z)\}$$

• Our methods only require fits under this simple null model

The Method
• The parameter $\gamma$ is not identified at the null
• However, the derivative of the loglikelihood evaluated at the null depends on $\gamma$
• Thus, the score statistic $S_n(\gamma)$ depends on $\gamma$
• Our theory gives a linear expansion and an easily calculated covariance matrix for each $\gamma$

$$S_n(\gamma) = n^{-1/2} \sum_{i=1}^n \Psi_i(\gamma) + o_p(n^{-1/2}), \qquad \mathrm{cov}\{S_n(\gamma)\} \to T(\gamma)$$

• The statistic $S_n(\gamma)$ as a process in $\gamma$ converges weakly to a Gaussian process
• Following Chatterjee, et al. (AJHG, 2006), the overall test statistic is taken as

$$\Omega_n = \max_{a \le \gamma \le c} \left[ S_n^\top(\gamma)\, T^{-1}(\gamma)\, S_n(\gamma) \right]$$

• $(a, c)$ are arbitrary, but we take it as $(-3, 3)$

Critical Values
• Critical values are easy to obtain via simulation
• Let $b = 1, \ldots, B$, and let $N_{ib} = \mathrm{Normal}(0, 1)$
• Recall

$$S_n(\gamma) = n^{-1/2} \sum_{i=1}^n \Psi_i(\gamma) + o_p(n^{-1/2})$$

• By the weak convergence, this has the same limit distribution as (with estimates under the null)

$$S_{bn}(\gamma) = n^{-1/2} \sum_{i=1}^n \hat\Psi_i(\gamma)\, N_{ib}$$

in the simulated world
• This means that the following have the same limit distributions under the null

$$\Omega_n = \max_{a \le \gamma \le c} \left[ S_n^\top(\gamma)\, T^{-1}(\gamma)\, S_n(\gamma) \right], \qquad \Omega_{bn} = \max_{a \le \gamma \le c} \left[ S_{bn}^\top(\gamma)\, T^{-1}(\gamma)\, S_{bn}(\gamma) \right]$$

• This means you just simulate $\Omega_{bn}$ a lot of times to get the null critical value

Simulation
• We did a simulation under a more complex model (theory easily extended)

$$\mathrm{pr}(Y = 1 \mid X, Z) = H\{S^\top\eta + X^\top\beta + \theta(Z) + \gamma X^\top\beta\, \theta(Z)\}$$

• Here X = independent BVN, variances = 1, and with means given as $\beta = c(1, 1)^\top$
• $c = 0$ is the null; $c = 0, 0.01, \ldots, 0.15$
• In addition, $Z = \mathrm{Uniform}[-2, 2]$, $\theta(z) = \sin(2z)$, $S = \mathrm{Normal}(0, 1)$, $\eta = 1$, $-3 \le \gamma \le 3$
• We varied the true values as $\gamma_{\mathrm{true}} = 0, 1, 2$

[Figure: Power Simulation]

Simulation Summary
• The test maintains its Type I error
• Little loss of power compared to no interaction when there is no interaction
• Great gain in power when there is interaction
• Results here were for kernels: almost numerically identical for penalized regression splines

NAT2 Example
• Case-control study with 700 cases and 700 controls
• As stated before, there were 14 common diplotypes
• Our X was the design matrix for the k most common, k = 1,2,…,14
• Z was years since stopping smoking
• Co-factors S were age and gender
• The model is slightly more complex because of the non-smokers (Z=0), but those details are hidden here

[Figure: NAT2 Example Results]

NAT2 Example Results
• Stronger evidence of genetic association seen with the new model
• For example, with 12 diplotypes, our p-value was 0.036; the p-value from the usual method was 0.214

Extensions: Repeated Measures
• We have extended the results to repeated measures models
• If there are J repeated measures, the loglikelihood is

$$L\{Y_{i1}, \ldots, Y_{iJ}, X_{i1}, \ldots, X_{iJ}, \beta, \theta(Z_{i1}), \ldots, \theta(Z_{iJ})\}$$

• Note: one function, but evaluated multiple times
• There is no straightforward kernel method for this
• Wang (2003, Biometrika) gave a solution in the Gaussian case with no parameters
• Lin and Carroll (2006, JRSSB) gave the efficient profile solution in the general case including parameters
• It is straightforward to write out a profiled score at the null for this loglikelihood
• The form is the same as in the non-repeated measures case: a projection of the score for $\beta$ onto the score for $\theta(\cdot)$
• Here the estimation of $\frac{\partial}{\partial\beta} \theta(Z_i, \beta)\big|_{\beta=0}$ is not trivial because it is the solution of a complex integral equation
• Using the Wang (2003, Biometrika) method of nonparametric regression using kernels, we have figured out a way to estimate $\frac{\partial}{\partial\beta} \theta(Z_i, \beta)\big|_{\beta=0}$
• This solution is the heart of a new paper (Maity, Carroll, Mammen and Chatterjee, JRSSB, 2009)
• The result is a score based method: it is based entirely on the null model and does not need to fit the profile model
• It is a projection, so any estimation method can be used, not just kernels
• There is an equally impressive extension to testing genetic main effects in the possible presence of interactions

Extensions: Nuisance Parameters
• Nuisance parameters are easily handled with a small change of notation

Extensions: Additive Models
• We have developed a version of this for the case of repeated measures with additive models in the nonparametric part

$$Y_{ij} = X_{ij}^\top\beta + \sum_{d=1}^D \theta_d(Z_{ijd}) + \epsilon_{ij}, \qquad (\epsilon_{i1}, \ldots, \epsilon_{iJ})^\top = [0, \Sigma]$$

• The additive model method uses smooth backfitting (see multiple papers by Park, Yu and Mammen)

Summary
• Score testing is a powerful device in parametric problems.
• It is generally computationally easy
• It is equivalent to projecting the score for $\beta$ onto the score for the nuisance parameters
• We have generalized score testing from parametric problems to a variety of semiparametric problems
• This involved a reformulation using the semiparametric profile method
• It is equivalent to projecting the score for $\beta$ onto the score for $\theta(\cdot)$
• The key was to compute this projection while doing everything at the null model
• Our approach avoided artificialities such as ad hoc undersmoothing
• It is semiparametric efficient
• Any smoothing method can be used, not just kernels
• Multiple extensions were discussed
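The simulation recipe from the Critical Values slides (multiply the estimated summands by independent standard normals, recompute the maximized quadratic form, repeat many times) can be sketched as follows. This is a hedged illustration, not the authors' code: the array `Psi` of estimated summands on a grid of γ values is assumed to be supplied by the user, T(γ) is estimated by the average outer product of the summands, and the names `omega` and `multiplier_critical_value` are hypothetical.

```python
import numpy as np

def omega(S, Tinv):
    """max over the gamma grid of S(gamma)^T T(gamma)^{-1} S(gamma)."""
    return np.max(np.einsum('gp,gpq,gq->g', S, Tinv, S))

def multiplier_critical_value(Psi, B=1000, level=0.05, seed=0):
    """Psi has shape (n, G, p): summand i, gamma grid point g, component p."""
    rng = np.random.default_rng(seed)
    n = Psi.shape[0]
    T = np.einsum('igp,igq->gpq', Psi, Psi) / n    # estimate of T(gamma)
    Tinv = np.linalg.inv(T)                        # inverse at each grid point
    S = Psi.sum(axis=0) / np.sqrt(n)               # observed S_n(gamma)
    observed = omega(S, Tinv)
    sims = np.empty(B)
    for b in range(B):
        N = rng.standard_normal(n)                 # N_ib ~ Normal(0, 1)
        Sb = np.einsum('i,igp->gp', N, Psi) / np.sqrt(n)
        sims[b] = omega(Sb, Tinv)                  # simulated Omega_bn
    crit = np.quantile(sims, 1.0 - level)          # null critical value
    pval = np.mean(sims >= observed)               # simulated p-value
    return observed, crit, pval

# Illustrative call: 500 summands, 61 grid points for gamma in [-3, 3], p = 2.
rng = np.random.default_rng(1)
Psi = rng.standard_normal((500, 61, 2))
print(multiplier_critical_value(Psi, B=500))
```

Because only the multipliers change between replications, the null fit and the summands are computed once, which is what keeps the simulation cheap.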
