Isis Horus Ensemble


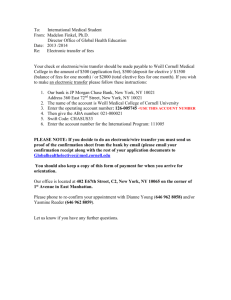

The Horus and Ensemble Projects:

Accomplishments and Limitations

Ken Birman, Robert Constable,

Mark Hayden, Jason Hickey, Christoph Kreitz,

Robbert van Renesse, Ohad Rodeh, Werner Vogels

Department of Computer Science

Cornell University

Reliable Distributed Computing:

Increasingly urgent, still unsolved

Distributed computing has swept the world

Impact has become revolutionary

Vast wave of applications migrating to networks

Already as critical a national infrastructure as water,

electricity, or telephones

Yet distributed systems remain

Unreliable, prone to inexplicable outages

Insecure, easily attacked

Difficult (and costly) to program, bug-prone

January, 2000

Cornell Presentation at DISCEX

A National Imperative

Potential for catastrophe cited by

DARPA ISAT study commissioned by Anita Jones

(1995, I briefed the findings, became basis for

refocusing of much of ITO under Howard Frank)

PCCIP report, PTAC

NAS study of trust in cyberspace

Need a quantum improvement in technologies,

packaged in easily used, practical forms


Quick Timeline

Cornell has developed 3 generations of

reliable group communication technology

Isis Toolkit: 1987-1990

Horus System: 1990-1994

Ensemble System: 1994-1999

Today engaged in a new effort reflecting

a major shift in emphasis

Spinglass Project: 1999-


Questions to consider

Have these projects been successful?

What did we do?

How can impact be quantified?

What limitations did we encounter?

How is industry responding?

What next?


Timeline

Isis

Horus

Ensemble

• Introduced reliability into group computing

• Virtual synchrony execution model

• Elaborate, monolithic, but adequate speed

• Many transition successes

    • New York, Swiss Stock Exchanges

    • French Air Traffic Control console system

    • Southwestern Bell Telephone network mgt.

    • Hiper-D (next generation AEGIS)

Virtual Synchrony Model

[Figure: timeline of processes p, q, r, s, t moving through group views G0={p,q}, G1={p,q,r,s}, G2={q,r,s}, G3={q,r,s,t}]

r, s request to join

r, s added; state xfer

p fails (crash)

t requests to join

t added; state xfer
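The view sequence G0 through G3 can be replayed as a minimal Python sketch. The `Group` class and its method names are hypothetical and are not the Isis API; state transfer to joiners is not modeled, only the agreed sequence of membership views.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    """Toy model of a process group that moves through numbered views."""
    members: list
    view_id: int = 0
    history: list = field(default_factory=list)

    def __post_init__(self):
        # Record the initial view G0.
        self.history.append((self.view_id, tuple(self.members)))

    def _install(self, members):
        # Every membership change installs a new view, in one agreed order.
        self.view_id += 1
        self.members = members
        self.history.append((self.view_id, tuple(members)))

    def join(self, *procs):
        # Processes that join together are added in a single new view.
        self._install(self.members + list(procs))

    def fail(self, proc):
        # A crashed process is dropped by installing a view without it.
        self._install([m for m in self.members if m != proc])


g = Group(["p", "q"])   # G0 = {p, q}
g.join("r", "s")        # G1 = {p, q, r, s}
g.fail("p")             # G2 = {q, r, s}
g.join("t")             # G3 = {q, r, s, t}
for view_id, members in g.history:
    print(f"G{view_id} = {{{', '.join(members)}}}")
```

The point of the sketch is only that every member observes the same views in the same order, which is what lets an application reason about who was in the group when a message was delivered.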

Why a “model”?

Models can be reduced to theory – we

can prove the properties of the model,

and can decide if a protocol achieves it

Enables rigorous application-level

reasoning

Otherwise, the application must guess at

possible misbehaviors and somehow

overcome them


Virtual Synchrony

Became widely accepted – basis of

literally dozens of research systems and

products worldwide

Seems to be the only way to solve

problems based on replication

Very fast in small systems but faces

scaling limitations in large ones


How Do We Use The

Model?

Makes it easy to reason about the state

of a distributed computation

Allows us to replicate data or

computation for fault-tolerance (or

because multiple users share same data)

Can also replicate security keys, do load balancing, synchronization…
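A minimal sketch of replicated data under the model, assuming the group layer delivers every update to all current members in the same order and hands a joiner a copy of the current state. `Replica`, `ReplicatedTable`, and the flight-plan keys are hypothetical names chosen for illustration.

```python
class Replica:
    """One copy of a replicated key-value table."""

    def __init__(self, state=None):
        self.state = dict(state or {})

    def deliver(self, update):
        key, value = update
        self.state[key] = value


class ReplicatedTable:
    def __init__(self, names):
        self.replicas = {name: Replica() for name in names}

    def multicast(self, key, value):
        # Assumption: updates reach every current member, in one agreed order.
        for replica in self.replicas.values():
            replica.deliver((key, value))

    def join(self, name):
        # State transfer: the joiner starts from a copy of a member's state.
        donor = next(iter(self.replicas.values()))
        self.replicas[name] = Replica(donor.state)

    def crash(self, name):
        del self.replicas[name]


table = ReplicatedTable(["p", "q"])
table.multicast("AF123", "FL350")
table.join("r")
table.crash("p")
table.multicast("AF123", "FL370")
states = [replica.state for replica in table.replicas.values()]
```

After the join, the crash, and the second update, every surviving replica holds the same table, which is the property that makes fault-tolerant replication straightforward to reason about.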


French ATC system (simplified)

[Figure: components labeled Onboard, Radar, X.500 Directory, Controllers, and Air Traffic Database (flight plans, etc)]

A center contains...

Perhaps 50 “teams” of 3-5 controllers each

Each team supported by workstation cluster

Cluster-style database server has flight plan

information

Radar server distributes real-time updates

Connections to other control centers (40 or so

in all of Europe, for example)


Process groups arise

here:

Cluster of servers running critical database

server programs

Cluster of controller workstations support ATC

by teams of controllers

Radar must send updates to the relevant

group of control consoles

Flight plan updates must be distributed to the

“downstream” control centers


Role For Virtual

Synchrony?

French government knows requirements for

safety in ATC application

With our model, we can reduce their need to a

formal set of statements

This lets us establish that our solution will

really be safe in their setting

Contrast with usual ad-hoc methodologies...


More Isis Users

New York Stock Exchange

Swiss Stock Exchange

Many VLSI Fabrication Facilities

Many telephony control applications

Hiper-D – an AEGIS rebuild prototype

Various NSA and military applications

Architecture contributed to SC-21/DD-21


Timeline

Isis

Horus

Ensemble

• Simpler, faster group communication system

• Uses a modular layered architecture. Layers are

“compiled,” headers compressed for speed

• Supports dynamic adaptation and real-time apps

• Partitionable version of virtual synchrony

• Transitioned primarily through Stratus Computer

Phoenix product, for telecommunications


Layered Microprotocols

in Horus

Interface to Horus is extremely flexible

Horus manages group abstraction

group semantics (membership, actions,

events) defined by stack of modules

Ensemble stacks plug-and-play modules to give design flexibility to developer

[Figure: microprotocol modules vsync, filter, encrypt, ftol, sign]
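The idea of defining group semantics by a stack of modules can be sketched in Python as follows. This is an illustration under stated assumptions, not the Horus or Ensemble interface: the `Layer`, `Sign`, `Encrypt`, and `Stack` classes are hypothetical, the "signature" is a one-byte checksum, and the "encryption" is a single-byte XOR, neither of which is secure.

```python
class Layer:
    """Base microprotocol: transform a message going down and coming up."""

    def down(self, msg: bytes) -> bytes:
        return msg

    def up(self, msg: bytes) -> bytes:
        return msg


class Sign(Layer):
    # Toy integrity check: append a one-byte checksum (not cryptographic).
    def down(self, msg):
        return msg + bytes([sum(msg) % 256])

    def up(self, msg):
        body, tag = msg[:-1], msg[-1]
        if sum(body) % 256 != tag:
            raise ValueError("bad signature")
        return body


class Encrypt(Layer):
    # Toy cipher: XOR every byte with a one-byte key (not secure).
    def __init__(self, key: int):
        self.key = key

    def down(self, msg):
        return bytes(b ^ self.key for b in msg)

    up = down  # XOR with the same key is its own inverse


class Stack:
    """Messages pass down through the layers on send, up in reverse on receive."""

    def __init__(self, *layers):
        self.layers = layers

    def send(self, msg):
        for layer in self.layers:
            msg = layer.down(msg)
        return msg

    def receive(self, wire):
        for layer in reversed(self.layers):
            wire = layer.up(wire)
        return wire


stack = Stack(Sign(), Encrypt(0x5A))
wire = stack.send(b"hello")
plain = stack.receive(wire)
```

Reordering or swapping the layers passed to `Stack` changes the properties of the channel without touching the application code, which is the design flexibility the slide describes.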


Group Members Use Identical Multicast Protocol Stacks

[Figure: three group members, each running the same stack of ftol, vsync, encrypt]

With Multiple Stacks, Multiple Properties

[Figure: several protocol stacks, each built from ftol, vsync, and encrypt modules]

Timeline

Isis

Horus

Ensemble

• Horus-like stacking architecture, equally fast

• Includes a group-key mechanism for secure

group multicast and key management

• Uses high-level language, can be formally

proved, an unexpected and major success

• Many early transition successes

DD-21, Quorum via collaboration with BBN

Nortel, STC: commercial users

Discussions with MS (COM+): could be basis

of standards.


Proving Ensemble Correct

Unlike Isis and Horus, Ensemble is coded

in a language with strong semantics (ML)

So we took a spec. of virtual synchrony

from MIT’s IOA group (Nancy Lynch)

And are actually able to prove that our

code implements the spec. and that the

spec captures the virtual synchrony

property!


Why is this important?

If we use Ensemble to secure keys, our

proof is a proof of security of the group

keying protocol…

And the proof extends not just to the

algorithm but also to the actual code

implementing it

These are the largest formal proofs ever

undertaken!


Why is this feasible?

Power of the NuPRL system: a fifth

generation theorem proving technology

Simplifications gained through

modularity: compositional code inspires a

style of compositional proof

Ensemble itself is unusually elegant,

protocols are spare and clear


Other

Accomplishments

An automated optimization technology

Often, a simple modular protocol becomes

complex when optimized for high

performance

Our approach automates optimization: the

basic protocol is only coded once and we

work with a single, simple, clear version

Optimizer works almost as well as hand-optimization and can be invoked at runtime


Optimization

[Figure: the ftol, vsync, encrypt stack before and after optimization]

Original code is simple but inefficient

Optimized is provably the same yet inefficiencies are eliminated


AEGIS Problem

Emulate this…

[Figure: a single Tracking Server with a 100ms deadline]

With this…

[Figure: a cluster of 16 Tracking Servers]

Other

Accomplishments

Real-Time Fault-Tolerant Clusters

Problem originated in AEGIS tracking server

Need a scalable, fault-tolerant parallel server with

rapid real-time guarantees

With Horus, we achieved 100ms response time,

even when nodes crash, scalability to 64 nodes or

more, load balancing and linear speedups

Our approach emerged as one of the major

themes in SC-21, which became DD-21
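One way to picture load balancing that survives crashes is for every member to compute track ownership from the current membership list. The sketch below is an assumption for illustration only: `TrackingCluster` is a hypothetical name, the modulo assignment is a stand-in rather than the Horus design, and the 100ms deadline is not modeled.

```python
class TrackingCluster:
    """Toy cluster that spreads tracks over whichever servers are alive."""

    def __init__(self, size):
        self.live = list(range(size))

    def owner(self, track_id):
        # Every member derives the same owner from the same membership list,
        # so the assignment needs no extra coordination.
        return self.live[track_id % len(self.live)]

    def crash(self, server):
        # Survivors recompute ownership over the new membership.
        self.live.remove(server)

    def load(self, track_ids):
        counts = {server: 0 for server in self.live}
        for track_id in track_ids:
            counts[self.owner(track_id)] += 1
        return counts


cluster = TrackingCluster(16)
tracks = range(1600)
before = cluster.load(tracks)
cluster.crash(5)
after = cluster.load(tracks)
```

Because ownership depends only on the agreed membership, a crash shifts work onto the survivors while keeping the load nearly even.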


Other

Accomplishments

A flexible, object-oriented toolkit

Standardizes the sorts of things

programmers do most often

Programmers are able to work with high

level abstractions rather than being forced

to reimplement common tools, like

replicated data, each time they are needed

Embedding into NT COM architecture


Security Architecture

Group key management

Fault-tolerant, partitionable

Currently exploring a very large scale

configuration that would permit rapid key

refresh and revocation even with millions

of users

All provably correct
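Key refresh and revocation on membership change can be sketched as follows. This is a simplified illustration, not the Ensemble keying protocol: `KeyedGroup` is a hypothetical class, a fresh random key is generated on every join or leave, and the distribution of that key to members is modeled as a dictionary assignment.

```python
import secrets


class KeyedGroup:
    """Toy group that refreshes its shared key whenever membership changes."""

    def __init__(self, members):
        self.members = set(members)
        self.epoch = 0
        self.key = secrets.token_bytes(16)
        # What each process currently holds, including processes that left.
        self.holders = {m: self.key for m in self.members}

    def _rekey(self):
        # Only current members receive the new key.
        self.epoch += 1
        self.key = secrets.token_bytes(16)
        for member in self.members:
            self.holders[member] = self.key

    def join(self, member):
        self.members.add(member)
        self._rekey()

    def leave(self, member):
        # Revocation: the departed process keeps only the stale key.
        self.members.discard(member)
        self._rekey()


group = KeyedGroup(["q", "r", "s"])
group.join("t")
group.leave("q")
```

After `leave("q")`, the key held by `q` no longer matches the group key, while `r`, `s`, and `t` all hold the current one.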


Transition Paths?

Through collaboration with BBN,

delivered to DARPA QUOIN effort

Part of DD-21 architecture

Strong interest in industry, good

prospects for “major vendor” offerings

within a year or two

A good success for Cornell and DARPA


What Next?

Continue some work with Ensemble

Research focuses on proof of replication stack

Otherwise, keep it alive, support and extend it

Play an active role in transition

Assist standards efforts

But shift in focus to a completely new effort

Emphasize adaptive behavior, extreme scalability,

robustness against local disruption

Fits “Intrinsically Survivable Systems” initiative


Throughput Stability: Achilles

Heel of Group Multicast

When scaled to even modest environments,

overheads of virtual synchrony become a

problem

One serious challenge involves management of

group membership information

But multicast throughput also becomes unstable

with high data rates and large system size.

A problem in every protocol we’ve studied

including other “scalable, reliable” protocols


Throughput Scenario

Most members are

healthy….

… but one is slow


[Figure: Group throughput (healthy members) for virtually synchronous Ensemble multicast protocols. Y axis: throughput, 0 to 250. X axis: degree of slowdown, 0 to 0.9. Curves: 32, 64, and 96 group members.]

Throughput as one member of a multicast group is "perturbed" by forcing it to sleep for varying amounts of time.

Bimodal Multicast in

Spinglass

A new family of protocols with stable

throughput, extremely scalable, fixed and low

overhead per process and per message

Gives tunable probabilistic guarantees

Includes a membership protocol and a

multicast protocol

Ideal match for small, nomadic devices
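The two-phase structure behind these probabilistic guarantees can be sketched as a small simulation: an unreliable multicast, followed by gossip rounds in which each process sends a digest to one random peer and the peer pulls the message if it is missing. The function name `pbcast` and the parameters `n`, `loss`, `rounds`, and `seed` are assumptions for illustration; this tracks a single message and is not the Spinglass implementation.

```python
import random


def pbcast(n, loss, rounds, seed=0):
    """Return how many of n processes hold the message after each phase."""
    rng = random.Random(seed)
    # Phase 1: unreliable multicast; each receiver misses it with probability `loss`.
    has = [rng.random() >= loss for _ in range(n)]
    has[0] = True  # process 0 is the sender
    history = [sum(has)]
    # Phase 2: gossip rounds with a fixed cost of one digest per process per round.
    for _ in range(rounds):
        snapshot = list(has)  # digests reflect state at the start of the round
        for p in range(n):
            peer = rng.randrange(n)
            # p sends its digest to peer; peer pulls the message if it lacks it.
            if snapshot[p] and not has[peer]:
                has[peer] = True
        history.append(sum(has))
    return history


history = pbcast(n=64, loss=0.3, rounds=8)
print(history)
```

Each process does the same small amount of work per round regardless of group size or of how slow any one member is, which is the source of the fixed, low overhead claimed above. Delivery to every process is likely after a few rounds but is not guaranteed.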


Throughput with 25% Slow processes

[Figure: two panels plotting average throughput (0 to 200) against slowdown (0 to 0.9)]

Spinglass:

Summary of objectives

Radically different approach yields stable,

scalable protocols with steady throughput

Small footprint, tunable to match conditions

Completely asynchronous, hence demands

new style of application development

But opens the door to a new lightweight

reliability technology supporting large

autonomous environments that adapt


Conclusions

Cornell: leader in reliable distributed computing

High impact on important DoD problems, such as

AEGIS (DD-21), QUOIN, NSA intelligence

gathering, many other applications

Demonstrated modular plug-and-play protocols

that perform well and can be proved correct

Transition into standard, off-the-shelf O/S

Spinglass – the next major step forward
