CSCE 212 Computer Architecture

CSCE 742 Software Architectures

Lecture 17

Architecture Tradeoff Analysis Method

ATAM

Topics

Evaluating Software Architectures

What an evaluation should tell

What can be examined, What Can’t

ATAM

Next Time: Case Study: the Nightingale System

Ref: The “Evaluating Architectures” book and Chap 11

October 22, 2003

Overview

Last Time

Analysis of Architectures

Why, When, Cost/Benefits

Techniques

Properties of Successful Evaluations

New: Analysis of Architectures

Why, When, Cost/Benefits

Techniques

Properties of Successful Evaluations

Next time:

References:

– 2 – CSCE 742 Fall 03

Last Time

Why evaluate architectures?

When do we Evaluate?

Cost / Benefits of Evaluation of SA

Non-Financial Benefits

Evaluation Techniques

Active Design Review, SAAM (SA Analysis Method), ATAM (Architecture Tradeoff Analysis Method), CBAM (2001/SEI) – Cost Benefit Analysis Method

Planned or Unplanned Evaluations

Properties of Successful Evaluations

Results of an Evaluation

Principles behind the Agile Manifesto

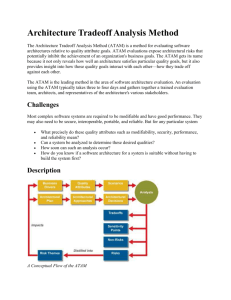

Architecture Tradeoff Analysis Method (ATAM)

Conceptual Flow ATAM


ATAM Overview

ATAM is based upon a set of attribute-specific measures of the system

Analytic measures, based upon formal models

Performance

Availability

Qualitative measures, based upon formal inspections

Modifiability

Safety

Security

ATAM Benefits

clarified quality attribute requirements

improved architecture documentation

documented basis for architectural decisions

identified risks early in the life-cycle

increased communication among stakeholders


Conceptual Flow ATAM


For What Qualities can we Evaluate?

From the architecture we can’t tell if the resulting system will meet all of its quality goals

Why?

Usability is largely determined by the user interface

The user interface is typically at a lower level than the SA

UMLi http://www.ksl.stanford.edu/people/pp/papers/PinheirodaSilva_ksl_02_04.pdf

ATAM concentrates on evaluating a SA based on certain quality attributes


ATAM Quality Attributes

Performance

Reliability

Availability

Security

Modifiability

Portability

Functionality

Variability – how well the architecture can be expanded to produce new SAs in preplanned ways (important for product lines)

Subsetability

Conceptual integrity


Non-suitable Quality Attributes (for ATAM)

Some quality attributes are just too vague to be used as the basis for an evaluation.

Examples

“The system must be robust.”

“The system shall be highly modifiable.”

Quality attributes are evaluated in some context

A system is modifiable wrt a specific kind of change.

A system is secure wrt a specific kind of threat.


Outputs of an Architecture Evaluation

1. Prioritized statement of quality attribute requirements

“You've got to be very careful if you don't know where you're going, because you might not get there.” Yogi Berra

Yogi also said "I didn't really say everything I said."

2. Mapping of approaches to quality attributes

How the architectural approaches will achieve or fail to achieve quality attributes

Provides some “rationale” for the architecture

3. Risks and non-risks

Risks are potentially problematic architectural decisions

Each specific analysis technique produces additional outputs. We will consider those of the ATAM soon.

Documenting Risks and Non-Risks

Documenting risks and non-risks consists of:

An architectural decision (or a decision that has not been made)

A specific quality attribute response being addressed by that decision

A rationale for the positive or negative effect that the decision has on satisfying the quality attribute
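The three parts listed above can be kept together in a small record. The following is a minimal sketch only: the class name `Finding` and its field names are assumptions made for illustration and are not part of the ATAM. The sample values paraphrase the business-logic risk example in these notes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One documented risk or non-risk (all names here are hypothetical)."""
    decision: str    # the architectural decision, or the decision not yet made
    response: str    # the quality attribute response the decision addresses
    rationale: str   # why the decision helps or hurts that response
    is_risk: bool    # True for a risk, False for a non-risk

    def summary(self) -> str:
        kind = "RISK" if self.is_risk else "NON-RISK"
        return f"[{kind}] {self.decision} -> {self.response} ({self.rationale})"


finding = Finding(
    decision="Rules for writing business logic modules are not articulated",
    response="Replicated functionality may compromise modifiability",
    rationale="Unarticulated rules can lead to unintended coupling",
    is_risk=True,
)
print(finding.summary())
```

Keeping the rationale as a required field means a finding cannot be recorded without stating why the decision affects the quality attribute.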


Example of a Risk*

The rules for writing business logic modules in the second tier of your three-tier client-server system are not clearly articulated. (decision to be made)

This could result in replication of functionality thereby compromising modifiability of the third tier (a quality attribute response and its consequences)

Unarticulated rules for writing business logic can result in unintended and undesired coupling of components (rationale for the negative effect)

*Example from: Evaluating Software Architectures: Methods and Case Studies by Clements, Kazman and Klein

Example of a Non-Risk*

Assuming message arrival rates of once per second, a processing time of less than 30 milliseconds and the existence of one higher priority process (the architectural decisions)

A one-second soft deadline seems appropriate (the quality attribute response and its consequence)

The arrival rate is bounded and the preemptive effects of higher priority processes are known and can be accommodated (the rationale)

*Example from: Evaluating Software Architectures: Methods and Case Studies by Clements, Kazman and Klein
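The reasoning behind the non-risk can be checked with a little arithmetic. The sketch below uses the standard fixed-priority response-time iteration, which is swapped in here for illustration and is not claimed to be the book's method. The 1000 ms deadline and 30 ms processing bound come from the example. The cost and period of the higher priority process are not given in the example, so the values `HP_COST_MS` and `HP_PERIOD_MS` are pure assumptions.

```python
import math


def worst_case_response_ms(c_ms, hp_c_ms, hp_period_ms, deadline_ms):
    """Iterate R = C + ceil(R / T_hp) * C_hp until it stabilizes.

    Returns None if the response time grows past the deadline.
    """
    r = c_ms
    while r <= deadline_ms:
        nxt = c_ms + math.ceil(r / hp_period_ms) * hp_c_ms
        if nxt == r:
            return r
        r = nxt
    return None


DEADLINE_MS = 1000    # one-second soft deadline (from the example)
PROCESSING_MS = 30    # upper bound on processing time (from the example)
HP_COST_MS = 50       # ASSUMED cost of the one higher priority process
HP_PERIOD_MS = 200    # ASSUMED period of the one higher priority process

r = worst_case_response_ms(PROCESSING_MS, HP_COST_MS, HP_PERIOD_MS, DEADLINE_MS)
print(r)
```

Under these assumed numbers the worst-case response time settles at 80 ms, far inside the one-second deadline, which is the kind of argument that lets the evaluators record the decision as a non-risk.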

Participants in ATAM

The evaluation team

Team leader

Evaluation leader

Scenario scribe

Proceedings scribe

Timekeeper

Process observer

Process enforcer

Questioner

Project Decision makers – people empowered to speak for the development project

Architecture stakeholders – including developers, testers, …, users, builders of systems interacting with this one

Evaluation Team Roles and Responsibilities

Team leader

Sets up evaluation (set evaluation contract)

forms team

interfaces with client

Oversees the writing of the evaluation report

Evaluation leader

Runs the evaluation

Facilitates elicitation of scenarios

Administers the scenario selection and prioritization process

Facilitates evaluation of scenarios against architecture

Scenario scribe

Records scenarios on a flip chart

Captures (and insists on) agreed wording


Evaluation Team Roles and Responsibilities

Proceedings scribe

Captures proceedings in electronic form

Raw scenarios with motivating issues

Resolution of each scenario when applied to the architecture

Generates a list of adopted scenarios

Timekeeper

Helps evaluation leader stay on schedule

Controls the time devoted to each scenario

Process observer

Keeps notes on how the evaluation process could be improved

Process enforcer – helps the evaluation leader stay “on process”

Questioner raises issues of architectural interest stakeholders may not have thought of


Outputs of the ATAM

A concise presentation of the architecture

Frequently there is “too much”

The ATAM forces a “one hour” presentation of the architecture, which compels it to be concise and clear

Articulation of Business Goals

Quality requirements in terms of collections of scenarios

Mapping of architectural decisions to quality attribute requirements

A set of sensitivity and tradeoff points

E.g., a backup database positively affects reliability

(sensitivity point with respect to reliability)

However it negatively affects performance (tradeoff)
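The backup database example can be sketched as a small table of effects. This is an illustrative sketch, not an ATAM artifact: the `+1`/`-1` encoding, the function names, and the second entry (`connection pooling`) are assumptions added so that the output contains one decision that is a tradeoff point and one that is not.

```python
# Effect of each decision on each quality attribute: +1 helps, -1 hurts.
# The first entry comes from the example above; the second is hypothetical.
effects = {
    "backup database": {"reliability": +1, "performance": -1},
    "connection pooling": {"performance": +1},
}


def sensitivity_points(effects):
    """Every (decision, attribute) pair where the decision moves the attribute."""
    return [
        (decision, attribute)
        for decision, attrs in effects.items()
        for attribute, effect in attrs.items()
        if effect != 0
    ]


def tradeoff_points(effects):
    """Decisions that help at least one attribute and hurt at least one other."""
    return [
        decision
        for decision, attrs in effects.items()
        if any(e > 0 for e in attrs.values()) and any(e < 0 for e in attrs.values())
    ]


print(sensitivity_points(effects))
print(tradeoff_points(effects))
```

Only the backup database is reported as a tradeoff point, because it is the only decision that pulls two attributes in opposite directions.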


More Outputs of the ATAM

A set of risks and non-risks

A risk is an architectural decision that may lead to undesirable consequences with respect to a stated quality attribute requirement

A non-risk is an architectural decision that, after analysis, is deemed to be safe

Identified risks can form the basis of an “architectural risk mitigation” plan

A set of risk themes

Examine the collection of risks produced looking for themes that are the result of systematic weaknesses in the architecture

Other outputs – more documentation, rationale, sense of community between stakeholders, architect, …


Phases of the ATAM

| Phase | Activity | Participants | Duration |
|---|---|---|---|
| 0 | Preparation | Team leadership/key project decision makers | Over a few weeks |
| 1 | Evaluation | Evaluation team and project decision makers | 1 day + hiatus of 2 to 3 weeks |
| 2 | Evaluation with stakeholders | Evaluation team, project decision makers, and stakeholders | 2 days |
| 3 | Follow-up | Evaluation team and client | 1 week |


Steps of the Evaluation Phase(s)

1. Present the ATAM

2. Present business drivers

3. Present architecture

4. Identify architectural approaches

5. Generate quality attribute utility tree

6. Analyze architectural approaches

Hiatus and start of phase 2

7. Brainstorm and prioritize scenarios

8. Analyze architectural approaches

9. Present results


Phase 0: Partnership

Client – someone who can exercise control of the project whose architecture is being evaluated

Perhaps a manager

Perhaps someone in an organization considering a purchase

Issues that must be resolved in Phase 0:

1. The client must understand the evaluation method and process (give them a book, make them a video)

2. The client should describe the system and architecture. A “go/no-go” decision is made here by the evaluation team leader.

3. Statement of work is negotiated

4. Issues of proprietary information resolved

Phase 0: Preparation

Forming the evaluation team

Holding an evaluation kickoff meeting

Assignment of roles

Good idea to not get into ruts; try varying assignments

Roles not necessarily one-to-one

The minimum size evaluation team is 4

One person can be process observer, timekeeper and questioner

The team leader’s responsibilities are mostly “outside” the evaluation, so the team leader can double up (often as the evaluation leader).

Questioners should be chosen to represent the spectrum of expertise in performance, modifiability, …
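The doubling-up rules above can be checked mechanically. The sketch below is an illustration only: the member names are placeholders, and giving the process enforcer role to the fourth member is an assumption, since these notes do not say who takes that role on a four-person team.

```python
# The eight evaluation team roles listed in these notes.
ROLES = {
    "team leader", "evaluation leader", "scenario scribe", "proceedings scribe",
    "timekeeper", "process observer", "process enforcer", "questioner",
}

# Hypothetical four-person team; member names are placeholders.
assignment = {
    "member_1": {"team leader", "evaluation leader"},
    "member_2": {"scenario scribe"},
    "member_3": {"proceedings scribe"},
    # Assigning the process enforcer role here is an assumption.
    "member_4": {"process observer", "timekeeper", "questioner", "process enforcer"},
}


def uncovered_roles(assignment):
    """Return the roles that no team member has been given."""
    covered = set().union(*assignment.values())
    return ROLES - covered


print(sorted(uncovered_roles(assignment)))
```

An empty result means every role is filled. Varying the assignment between evaluations, as suggested above, only requires editing the dictionary.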


Phase 1: Activities in Addition to the steps

Organizational meeting of evaluation team and key project personnel

Form schedule

The right people attend the meetings

They are prepared (know what is expected of them)

They have the right attitude

Besides carrying out the steps the team needs to gather as much information as possible to determine

Whether the remainder of the evaluation is feasible

Whether more architectural documentation is required

Which stakeholders should be present for phase 2


Step 1: Present the ATAM

Evaluation leader presents the ATAM to all participants

To explain the process

To answer questions

To set the context and expectations for the process

Using a standard presentation, briefly discuss the ATAM steps and the outputs of the evaluation

A Typical ATAM Agenda for Phases 1 and 2

Figure 3.9 from “Evaluating Software Architectures: Methods and Case Studies,” by Clements, Kazman and Klein

Step 2: Present Business Drivers

Everyone needs to understand the primary business drivers motivating/guiding the development of the system

A project decision maker presents a system overview from the business perspective

The system’s functionality

Relevant constraints: technical, managerial, economic, or political

Business goals

Major stakeholders

The architectural drivers – the quality attributes that shape the architecture


Step 3: Present the Architecture

The lead architect makes the presentation at the appropriate level of detail

Architecture Presentation (~20 slides; 60 minutes)

Architectural drivers and existing standards/models/approaches for meeting them (2-3 slides)

Important Architectural Information (4-8 slides)

Context diagram

Module or layer view

Component and connector view

Deployment view

Architectural approaches, patterns, or tactics employed


Step 3: Present the Architecture (cont)

Architectural approaches, patterns, or tactics employed

Use of COTS (commercial off the shelf) products

Trace of 1-3 most important use case scenarios

Trace of 1-3 most important change scenarios

Architectural issues/risks with respect to meeting the driving architectural requirements

Glossary

Should have a high signal-to-noise ratio; don’t get bogged down too deeply in details

Should cover technical constraints such as operating system, hardware and middleware

Step 4: Identify Architectural Approaches

The ATAM analyzes an architecture by focusing on its architectural approaches

Captured but not analyzed (here) by the evaluation team

The evaluation team should explicitly ask the architect to name these

Step 5: Generate Quality Attribute Utility Tree


Scenarios

Types of Scenarios:

Use case scenarios

The user wants to examine budgetary data

Growth scenarios

Change the maximum number of tracks from 150 to 200 and keep the latency of disk to screen at 200ms or less

Exploratory scenarios

Switch the OS from Unix to Windows

Improve availability from 98% to 99.99%
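To see why the availability scenario is exploratory rather than routine, it helps to convert the percentages into downtime. The sketch below is simple arithmetic under one assumption: a year is taken as 365 days of 24 hours, and the function name is made up for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years (an assumption)


def downtime_hours_per_year(availability):
    """Hours per year the system may be down at the given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


for availability in (0.98, 0.9999):
    hours = downtime_hours_per_year(availability)
    print(f"{availability:.2%} available -> {hours:.2f} hours of downtime per year")
```

Moving from 98% to 99.99% shrinks the allowed downtime from roughly 175 hours a year to under one hour (about 53 minutes), a 200-fold reduction that is likely to stress the architecture.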

Step 6: Analyze Architectural Approaches

The evaluation team examines the highest priority scenarios one at a time and the architect is asked how the architecture supports each one.


Step 7: Brainstorm and Prioritize Scenarios

The Hiatus

The evaluation team distills what has been learned and informally contacts the architect for more information where needed

After


References

“Evaluating Software Architectures: Methods and Case Studies” by Clements, Kazman and Klein, published by Addison-Wesley, 2002. Part of the SEI Series in Software Engineering.

SEI Software Architecture publications

http://www.sei.cmu.edu/ata/pub_by_topic.html
