Transport Protocols


Ch. 7 : Internet

Transport Protocols

1

Transport Layer

Our goals:

understand principles

behind transport

layer services:

Multiplexing /

demultiplexing data

streams of several

applications

reliable data transfer

flow control

congestion control

Transport Layer

Chapter 6:

rdt principles

Chapter 7:

multiplex/ demultiplex

Internet transport layer

protocols:

UDP: connectionless

transport

TCP: connection-oriented

transport

• connection setup

• data transfer

• flow control

• congestion control

2

Transport vs. network layer

Transport Layer

Network Layer

logical communication

between processes

logical communication

between hosts

exists only in hosts

exists in hosts and

in routers

ignores network

routes data through

network

Port #s used for routing

in destination computer

IP addresses used for

routing in network

Transport layer uses Network layer services

adds more value to these services

3

Multiplexing &

Demultiplexing

4

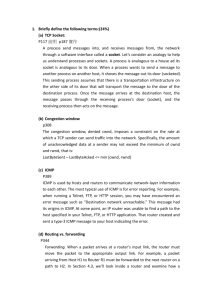

Multiplexing/demultiplexing

Multiplexing at send host:

gather data from multiple sockets, envelop data with headers (later used for demultiplexing), pass to L3

Demultiplexing at rcv host:

receive segment from L3

deliver each received segment to correct socket

[Figure: host 1, host 2 and host 3, each with application, transport, network, link and physical layers; processes P1 to P4 are attached to the transport layer through sockets]

5

How demultiplexing works

host receives IP datagrams

each datagram has source IP address, destination IP address in its header

each datagram carries one transport-layer segment

each segment has source, destination port number in its header

host uses port numbers, and sometimes also IP addresses, to direct segment to correct socket

from socket, data gets to the relevant application process

[Figure: TCP/UDP segment format inside an IP datagram, 32 bits wide. L3 hdr: source IP addr, dest IP addr, other IP header fields. L4 header: source port #, dest port #, other header fields. Then the application data (message)]

6

Connectionless demultiplexing (UDP)

Processes create sockets

with port numbers

a UDP socket is identified

by a pair of numbers:

(my IP address , my port number)

Client decides to contact:

a server ( peer IP-address) +

an application ( peer port #)

Client puts those into the

UDP packet he sends; they

are written as:

dest IP address - in the

IP header of the packet

dest port number - in its

UDP header

When server receives

a UDP segment:

checks destination port

number in segment

directs UDP segment to

the socket with that port

number

(packets from different

remote sockets directed

to same socket)

the UDP message waits in

socket queue and is

processed in its turn.

answer message sent to

the client UDP socket

(listed in Source fields of

query packet)
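
The UDP demultiplexing rule above can be sketched as a toy model in Python. This is only an illustration, not a real network stack: the class `UdpHost` and its methods are hypothetical names, and the addresses A, B, C and ports 9157, 5775, 53 are taken from the example on the next slide.

```python
from collections import deque


class UdpHost:
    """Toy model of UDP demultiplexing on one host (hypothetical, for illustration)."""

    def __init__(self, ip):
        self.ip = ip
        self.sockets = {}  # local port -> queue of (src_ip, src_port, payload)

    def bind(self, port):
        """Create a socket identified by (my IP address, my port number)."""
        self.sockets[port] = deque()

    def deliver(self, src_ip, src_port, dst_port, payload):
        """Demultiplex one arriving segment using only the destination port."""
        queue = self.sockets.get(dst_port)
        if queue is None:
            return False  # no socket bound to this port: segment is dropped
        queue.append((src_ip, src_port, payload))
        return True


server = UdpHost("C")
server.bind(53)
server.deliver("A", 9157, 53, b"query from A")
server.deliver("B", 5775, 53, b"query from B")

# Both queries wait in the same socket queue; the source fields of each
# query give the "return address" for the answer message.
return_addresses = [(src_ip, src_port) for src_ip, src_port, _ in server.sockets[53]]
```

Segments from different remote sockets land in the same queue because only the destination port is used as the lookup key.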

7

Connectionless demux (cont)

[Figure: two clients querying one UDP server; the server process waits for application requests, the clients are getting service]

client socket: port=9157, IP=A

client socket: port=5775, IP=B

server socket: port=53, IP=C

query from client A: S-IP: A, D-IP: C, SP: 9157, DP: 53

reply to client A: S-IP: C, D-IP: A, SP: 53, DP: 9157

query from client B: S-IP: B, D-IP: C, SP: 5775, DP: 53

reply to client B: S-IP: C, D-IP: B, SP: 53, DP: 5775

S-IP and D-IP are carried in the IP header; SP and DP are carried in the UDP header

SP = Source port number

DP = Destination port number

S-IP = Source IP Address

D-IP = Destination IP Address

SP and S-IP provide “return address”

8

Connection-oriented demux (TCP)

TCP socket identified

by 4-tuple:

local (my) IP address

local (my) port number

remote IP address

remote port number

receiving host uses all

four values to direct

segment to appropriate

socket

Server host may support

many simultaneous TCP

sockets:

each socket identified by

its own 4-tuple

Web servers have a

different socket for

each connecting client

If you open two browser

windows, you generate 2

sockets at each end

non-persistent HTTP will

open a different socket

for each request
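
By contrast with UDP, a TCP receiver looks up the socket by the full 4-tuple. The following minimal Python sketch assumes a hypothetical `TcpHost` class and made-up socket names; the addresses and ports follow the example on the next slide.

```python
class TcpHost:
    """Toy model of TCP demultiplexing by 4-tuple (hypothetical, for illustration)."""

    def __init__(self, ip):
        self.ip = ip
        # (local IP, local port, remote IP, remote port) -> socket name
        self.connections = {}

    def add_socket(self, local_port, remote_ip, remote_port, name):
        self.connections[(self.ip, local_port, remote_ip, remote_port)] = name

    def demux(self, src_ip, src_port, dst_ip, dst_port):
        # Seen from the receiver: local = destination fields, remote = source fields.
        return self.connections.get((dst_ip, dst_port, src_ip, src_port))


server = TcpHost("C")
server.add_socket(80, "A", 9157, "socket for A:9157")
server.add_socket(80, "B", 9157, "socket for B:9157")
server.add_socket(80, "B", 5775, "socket for B:5775")

# Same destination port 80 and same source port 9157, yet different sockets,
# because the source IP address differs.
from_a = server.demux("A", 9157, "C", 80)
from_b = server.demux("B", 9157, "C", 80)
```

All three server sockets share local port 80; only the remote half of the tuple tells them apart.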

9

Connection-oriented demux (cont)

[Figure: client A (one socket) and client B (two sockets) connected to a web server C on port 80; each connection has its own server socket]

Sockets at client A (IP: A):

client socket: LP= 9157, L-IP= A, RP= 80, R-IP= C

Sockets at client B (IP: B):

client socket: LP= 9157, L-IP= B, RP= 80, R-IP= C

client socket: LP= 5775, L-IP= B, RP= 80, R-IP= C

Sockets at server (IP: C):

server socket: LP= 80, L-IP= C, RP= 9157, R-IP= A

server socket: LP= 80, L-IP= C, RP= 9157, R-IP= B

server socket: LP= 80, L-IP= C, RP= 5775, R-IP= B

Packets sent to the server:

packet: S-IP: A, D-IP: C, SP: 9157, DP: 80, message

packet: S-IP: B, D-IP: C, SP: 9157, DP: 80, message

packet: S-IP: B, D-IP: C, SP: 5775, DP: 80, message

LP= Local Port, RP= Remote Port

L-IP= Local IP, R-IP= Remote IP

“L”= Local = My

“R”= Remote = Peer

10

UDP Protocol

11

UDP: User Datagram Protocol [RFC 768]

simple transport protocol

“best effort” service, UDP

segments may be:

lost

delivered out of order

to application

with no correction by UDP

UDP will discard bad

checksum segments if so

configured by application

connectionless:

no handshaking between

UDP sender, receiver

each UDP segment

handled independently

of others

Why is there a UDP?

no connection establishment

saves delay

no congestion control:

better delay & BW

simple:

small segment header

typical usage: realtime appl.

loss tolerant

rate sensitive

other uses (why?):

DNS

SNMP

12

UDP segment structure

[Figure: UDP segment format, 32 bits wide]

source port #, dest port #

length, checksum

application data (variable length)

length: total length of segment (bytes)

Checksum computed over:

• the whole segment

• part of IP header:

– both IP addresses

– protocol field

– total IP packet length

Checksum usage:

• computed at destination to detect

errors

• in case of error, UDP will discard

the segment, or

13

UDP checksum

Goal: detect “errors” (e.g., flipped bits) in transmitted

segment

Sender:

treat segment contents

as sequence of 16-bit

integers

checksum: addition (1’s

complement sum) of

segment contents

sender puts checksum

value into UDP checksum

field

Receiver:

compute checksum of

received segment

check if computed checksum

equals checksum field value:

NO - error detected

YES - no error detected.
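
The checksum procedure above can be sketched in Python as follows. This is a simplified illustration: it covers only the bytes passed in, whereas real UDP also includes the pseudo-header fields listed on the previous slide. It also assumes the usual Internet checksum convention that the stored value is the one's complement of the 16-bit one's complement sum. The function names are illustrative.

```python
def ones_complement_sum16(data: bytes) -> int:
    """Add the data as 16-bit big-endian integers with end-around carry."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
        total = (total & 0xFFFF) + (total >> 16)  # wrap the carry around
    return total


def internet_checksum(data: bytes) -> int:
    """Sender side: one's complement of the one's complement sum."""
    return ~ones_complement_sum16(data) & 0xFFFF


def checksum_matches(data: bytes, checksum_field: int) -> bool:
    """Receiver side: recompute and compare with the checksum field."""
    return internet_checksum(data) == checksum_field


segment = bytes.fromhex("0001f203f4f5f6f7")
field = internet_checksum(segment)

corrupted = bytes([segment[0] ^ 0x01]) + segment[1:]  # flip one bit
```

A match means only that no error was detected; some multi-bit errors cancel out in the sum and pass unnoticed.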

14

TCP Protocol

15

TCP: Overview

point-to-point:

one sender, one receiver

between sockets

reliable, in-order byte stream:

no “message boundaries”

pipelined:

TCP congestion and flow

control set window size

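send & receive buffers

[Figure: on the sending side the application writes data through the socket door into the TCP send buffer; segments carry it to the TCP receive buffer on the other side, where the application reads data through its socket door]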

RFCs: 793, 1122, 1323, 2018, 2581

full duplex data:

bi-directional data flow

in same connection

MSS: maximum segment

size

connection-oriented:

handshaking (exchange

of control msgs) init’s

sender, receiver state

before data exchange

flow controlled:

sender will not

overwhelm receiver


16

TCP segment structure

[Figure: TCP segment format, 32 bits wide]

source port #, dest port #

sequence number

acknowledgement number

head len, not used, flags U A P R S F, rcvr window size

checksum, ptr urgent data

Options (variable length)

application data (variable length)

Notes on the fields:

head len: hdr length in 32 bit words

URG: urgent data (generally not used)

ACK: ACK # valid

PSH: push data now (generally not used)

RST, SYN, FIN: connection estab (setup, teardown commands)

checksum: Internet checksum (as in UDP)

sequence number, acknowledgement number: counting by bytes of data (not segments!)

rcvr window size: # bytes rcvr willing to accept

17

TCP sequence # (SN) and ACK (AN)

SN:

byte stream

“number” of first

byte in segment’s

data

AN:

SN of next byte

expected from other

side

cumulative ACK

Qn: how receiver handles

out-of-order segments?

puts them in receive

buffer but does not

acknowledge them

[Figure: simple data transfer scenario between Host A and Host B over time (some time after conn. setup)]

host A sends 100 data bytes

host B ACKs 100 bytes and sends 50 data bytes

host A ACKs receipt of data, sends no data. WHY?

18

Connection Setup: Objective

Agree on initial sequence numbers

a sender should not reuse a seq# before it is

sure that all packets with the seq# are purged

from the network

• the network guarantees that a packet too old will be

purged from the network: network bounds the life

time of each packet

To avoid waiting for seq #s to disappear, start

new session with a seq# far away from previous

• needs connection setup so that the sender tells the

receiver initial seq#

Agree on other initial parameters

e.g. Maximum Segment Size

19

TCP Connection Management

Setup: establish connection

between the hosts before

exchanging data segments

called: 3 way handshake

initialize TCP variables:

seq. #s

buffers, flow control

info (e.g. RcvWindow)

client : connection initiator

opens socket and cmds OS

to connect it to server

server : contacted by client

has waiting socket

accepts connection

generates working socket

Three way handshake:

Step 1: client host sends TCP SYN segment to server

specifies initial seq #

no data

Step 2: server host receives SYN, replies with SYNACK segment (also no data)

allocates buffers

specifies server initial SN & window size

Step 3: client receives SYNACK, replies with ACK segment, which may contain data

Teardown: end of connection

(we skip the details)
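
The three handshake steps can be traced with a small Python sketch. The `Segment` class and the function name are hypothetical; the sketch assumes, as in standard TCP, that a SYN consumes one sequence number, so each side acknowledges the peer's initial sequence number plus one.

```python
from dataclasses import dataclass
from typing import Optional

SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32 bits and wrap around


@dataclass
class Segment:
    syn: bool
    ack: bool
    seq: int
    ack_num: Optional[int] = None


def three_way_handshake(client_isn: int, server_isn: int):
    """Return the three segments exchanged during connection setup."""
    # Step 1: client sends SYN with its initial seq #, no data.
    syn = Segment(syn=True, ack=False, seq=client_isn)
    # Step 2: server replies with SYNACK: its own initial SN, ACK of client ISN + 1.
    synack = Segment(syn=True, ack=True, seq=server_isn,
                     ack_num=(syn.seq + 1) % SEQ_SPACE)
    # Step 3: client replies with ACK (may already carry data).
    ack = Segment(syn=False, ack=True, seq=synack.ack_num,
                  ack_num=(synack.seq + 1) % SEQ_SPACE)
    return [syn, synack, ack]


segments = three_way_handshake(client_isn=1000, server_isn=5000)
```

After the exchange both sides know where the other's byte stream starts: X+1 for the client and Y+1 for the server, where X and Y are the two initial sequence numbers.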

20

TCP Three-Way Handshake (TWH)

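[Figure: hosts A and B after the handshake, with initial sequence numbers X (A) and Y (B). A: Send Buffer starts at X+1, Receive Buffer starts at Y+1. B: Send Buffer starts at Y+1, Receive Buffer starts at X+1]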

21

Connection Close

Objective of closure

handshake:

each side can release

resource and remove

state about the

connection

• Close the socket

[Figure: client and server timelines. The client performs the initial close (release resource?); then each side closes and releases its resources]

22

Ch. 7 : Internet

Transport Protocols

Part B

23

TCP reliable data transfer

TCP creates reliable

service on top of IP’s

unreliable service

pipelined segments

cumulative acks

single retransmission

timer

receiver accepts out

of order segments but

does not acknowledge

them

Retransmissions are

triggered by

timeout events

Initially consider

simplified TCP sender:

ignore flow control,

congestion control

3-24

TCP sender events:

data rcvd from app:

create segment with

seq #

seq # is byte-stream

number of first data

byte in segment

start timer if not

already running (think

of timer as for oldest

unACKed segment)

expiration interval:

TimeOutInterval

timeout:

retransmit segment

that caused timeout

restart timer

ACK rcvd:

if acknowledges

previously unACKed

segments

update what is known to

be ACKed

start timer if there are

outstanding segments

3-25

NextSeqNum = InitialSeqNum

SendBase = InitialSeqNum

loop (forever) {

switch(event)

event: data received from application above

create TCP segment with sequence number NextSeqNum

if (timer currently not running)

start timer

pass segment to IP

NextSeqNum = NextSeqNum + length(data)

event: timer timeout

retransmit not-yet-acknowledged segment with

smallest sequence number

start timer

event: ACK received, with ACK field value of y

if (y > SendBase) {

SendBase = y

if (there are currently not-yet-acknowledged segments)

start timer

}

} /* end of loop forever */
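
The pseudocode above translates fairly directly into Python. The sketch below keeps the same simplifications (no flow control, no congestion control) and replaces the real timer with a boolean flag; the class name, the `sent` log, and the `unacked` dictionary are illustrative additions, not part of the original pseudocode.

```python
class SimpleTcpSender:
    """Simplified TCP sender: one timer, cumulative ACKs (illustrative sketch)."""

    def __init__(self, initial_seq_num=0):
        self.next_seq_num = initial_seq_num
        self.send_base = initial_seq_num
        self.unacked = {}           # seq # -> data of not-yet-acknowledged segments
        self.timer_running = False  # stands in for a real retransmission timer
        self.sent = []              # log of (seq #, data) passed to IP

    def on_app_data(self, data: bytes):
        seq = self.next_seq_num
        self.unacked[seq] = data
        if not self.timer_running:
            self.timer_running = True   # start timer
        self.sent.append((seq, data))   # pass segment to IP
        self.next_seq_num += len(data)

    def on_timeout(self):
        if self.unacked:
            seq = min(self.unacked)     # smallest not-yet-acknowledged seq #
            self.sent.append((seq, self.unacked[seq]))
            self.timer_running = True   # restart timer

    def on_ack(self, y: int):
        if y > self.send_base:
            self.send_base = y
            # Cumulative ACK: everything that ends at or before y is acknowledged.
            for seq in [s for s, d in self.unacked.items() if s + len(d) <= y]:
                del self.unacked[seq]
            self.timer_running = bool(self.unacked)


sender = SimpleTcpSender(initial_seq_num=92)
sender.on_app_data(b"8 bytes!")          # seq 92, 8 bytes
sender.on_app_data(b"x" * 20)            # seq 100, 20 bytes
sender.on_ack(100)                       # acknowledges the first segment only
sender.on_timeout()                      # retransmits seq 100
sender.on_ack(120)                       # everything acknowledged
```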

3-26

TCP

sender

(simplified)

Comment:

• SendBase-1: last

cumulatively

ACKed byte

Example:

• SendBase-1 = 71;

y= 73, so the rcvr

wants 73+ ;

y > SendBase, so

that new data is

ACKed


TCP actions on receiver events:

application takes data:

free the room in buffer

give the freed cells new numbers (circular numbering)

WIN increases by the number of bytes taken

data rcvd from IP:

if Checksum fails, ignore segment

If checksum OK, then:

if data came in order:

update AN+WIN

AN grows by the number of new in-order bytes

WIN decreases by same #

if data out of order:

Put in buffer, but don’t count it for AN/WIN

3-27

TCP: retransmission scenarios

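[Figure: two Host A / Host B timelines; the legend distinguishes "timer setting" from "actual timer run"]

A. normal scenario: Host A starts the timer for SN 92 and stops it when the ACK arrives; the same happens for SN 100; NO timer runs while nothing is outstanding

B. lost ACK + retransmission: Host A starts the timer for SN 92; the ACK is lost (X); on TIMEOUT, A retransmits and starts the timer for the new SN 92; the timer is stopped when the ACK arrives; afterwards NO timer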

3-28

TCP retransmission scenarios (more)

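[Figure: two more Host A / Host B timelines]

C. lost ACK, NO retransmission: Host A starts the timer for SN 92; one ACK is lost (X), but a later cumulative ACK arrives before the timeout, so A stops the timer and retransmits nothing; afterwards NO timer

D. premature timeout: Host A starts the timer for SN 92; TIMEOUT occurs before the ACK arrives, so A retransmits and restarts the timer for 92; when the ACK arrives, A stops the timer and starts it for 100; the retransmission triggers a redundant ACK; afterwards NO timer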

Afeka, 5771 (2010-2011), Semester B

Transport Layer 3-29

TCP ACK generation

[RFC 1122, RFC 2581]

Each entry lists an event at the receiver, followed by the TCP receiver action.

Event: Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed

Action: Delayed ACK. Wait up to 500ms for next segment. If no data segment to send, then send ACK

Event: Arrival of in-order segment with expected seq #. One other segment has ACK pending

Action: Immediately send single cumulative ACK, ACKing both in-order segments

Event: Arrival of out-of-order segment with higher-than-expected seq. #. Gap detected

Action: Immediately send duplicate ACK, indicating seq. # of next expected byte. This ACK carries no data & no new WIN

Event: Arrival of segment that partially or completely fills gap

Action: Immediately send ACK, provided that segment starts at lower end of gap


3-30

Fast Retransmit

time-out period often

relatively long:

Causes long delay before

resending lost packet

detect lost segments

via duplicate ACKs.

sender often sends many

segments back-to-back

if segment is lost, there

will likely be many

duplicate ACKs for that

segment

If sender receives 3 duplicate ACKs for the same data, it assumes that the segment after the ACKed data was lost:

fast retransmit: resend

segment before timer

expires

3-31

[Figure: Host A sends segments seq # x1 to seq # x5 back-to-back; the segment with seq # x2 is lost (X); Host B answers with ACK # x2 four times; the triple duplicate ACKs reach Host A before the timeout]

3-32

Fast retransmit algorithm:

event: ACK received, with ACK field value of y

if (y > SendBase) {

SendBase = y

if (there are currently not-yet-acknowledged segments)

start timer

}

else if (segment carries no data & doesn’t change WIN) {

/* a duplicate ACK for already ACKed segment */

increment count of dup ACKs received for y

if (count of dup ACKs received for y == 3) {

/* fast retransmit */

resend segment with sequence number y

}

}
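
The duplicate-ACK counting can be sketched in Python as below. The class `DupAckDetector` is a hypothetical helper that only decides when to fast-retransmit; it assumes every ACK passed in carries no data and does not change WIN, and it leaves out the timer handling shown in the pseudocode.

```python
class DupAckDetector:
    """Counts duplicate ACKs and signals a fast retransmit on the third one."""

    def __init__(self, send_base: int):
        self.send_base = send_base
        self.dup_acks = 0

    def on_ack(self, y: int):
        """Return the sequence number to resend, or None if nothing to resend."""
        if y > self.send_base:
            # New data is ACKed: advance SendBase and reset the counter.
            self.send_base = y
            self.dup_acks = 0
            return None
        if y == self.send_base:
            self.dup_acks += 1
            if self.dup_acks == 3:
                return y  # fast retransmit: resend segment with sequence number y
        return None


detector = DupAckDetector(send_base=100)
# One new ACK for 200, followed by four duplicates of it.
decisions = [detector.on_ack(y) for y in (200, 200, 200, 200, 200)]
```

Only the third duplicate triggers a resend; later duplicates of the same ACK are ignored here, which is one possible design choice.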

3-33


TCP:

Flow Control

34

TCP Flow Control for A’s data

flow control

receive side of TCP

connection at B has a

receive buffer:

[Figure: node B, receive process. The Receive Buffer holds TCP data in buffer and spare room; data from IP (sent by TCP at A) enters at AN; data is taken by the application at the other end; WIN covers the spare room]

application process at B may

be slow at reading from

buffer

sender won’t overflow

receiver’s buffer by

transmitting too much,

too fast

flow control matches the

send rate of A to the

receiving application’s

drain rate at B

Receive buffer size set

by OS at connection init

WIN = window size =

number bytes A may send

starting at AN

3-35

TCP Flow control: how it works

non-ACKed data in buffer (arrived out of order) ignored

[Figure: node B, receive process. The Rcv Buffer holds ACKed data in buffer and spare room; data from IP (sent by TCP at A) enters at AN; data is taken by the application; WIN covers the spare room]

Formulas:

AN = first byte not received yet

AckedRange = AN – FirstByteNotReadByAppl = # bytes rcvd in sequence & not taken

WIN = RcvBuffer – AckedRange = SpareRoom

AN and WIN sent to A in TCP header

Data rcvd out of sequence is considered part of ‘spare room’ range

Procedure:

Rcvr advertises “spare

room” by including value of

WIN in his segments

Sender A is allowed to send

at most WIN bytes in the

range starting with AN

guarantees that receive

buffer doesn’t overflow
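
The window formulas can be checked with a short Python sketch. The function names are illustrative; `next_seq_num` (the next byte the sender would transmit) is an assumed sender-side variable, and the buffer size of 4096 bytes is made up for the example.

```python
def advertised_window(rcv_buffer: int, an: int, first_byte_not_read: int) -> int:
    """WIN = RcvBuffer - AckedRange, where AckedRange = AN - FirstByteNotReadByAppl."""
    acked_range = an - first_byte_not_read  # bytes received in sequence, not yet taken
    return rcv_buffer - acked_range


def bytes_sender_may_send(an: int, win: int, next_seq_num: int) -> int:
    """Sender may use the range [AN, AN + WIN); part of it may already be in flight."""
    return max(0, an + win - next_seq_num)


# Receiver B: 4096-byte buffer, application has read up to byte 2000,
# in-order data has arrived up to byte 2999 (so AN = 3000).
win = advertised_window(rcv_buffer=4096, an=3000, first_byte_not_read=2000)

# Sender A has already transmitted up to byte 3999.
allowed = bytes_sender_may_send(an=3000, win=win, next_seq_num=4000)
```

When the application at B reads data, `first_byte_not_read` grows and WIN grows with it; when in-order data arrives, AN grows and WIN shrinks by the same amount.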

3-36

TCP flow control: Example 1

3-37

TCP flow control: Example 2

3-38

TCP: setting

timeouts

39

TCP Round Trip Time and Timeout

Q: how to set TCP

timeout value?

longer than RTT

note: RTT will vary

too short: premature

timeout

unnecessary

retransmissions

too long: slow reaction

to segment loss

Q: how to estimate RTT?

SampleRTT: measured time from

segment transmission until ACK

receipt

ignore retransmissions,

cumulatively ACKed segments

SampleRTT will vary, want

estimated RTT “smoother”

use several recent

measurements, not just

current SampleRTT

40

High-level Idea

Set timeout = average + safe margin

41

Estimating Round Trip Time

SampleRTT: measured time from segment transmission until ACK receipt

SampleRTT will vary, want a “smoother” estimated RTT

use several recent measurements, not just current SampleRTT

[Figure: RTT: gaia.cs.umass.edu to fantasia.eurecom.fr. SampleRTT and Estimated RTT plotted as RTT (milliseconds) against time (seconds)]

EstimatedRTT = (1 - α)*EstimatedRTT + α*SampleRTT

Exponential weighted moving average

influence of past sample decreases exponentially fast

typical value: α = 0.125

42

Setting Timeout

Problem:

using the average of SampleRTT will generate

many timeouts due to network variations

Solution:

EstimatedRTT plus “safety margin”

large variation in EstimatedRTT -> larger safety margin

[Figure: frequency distribution of RTT values]

DevRTT = (1 - β)*DevRTT + β*|SampleRTT - EstimatedRTT|

(typically, β = 0.25)

Then set timeout interval:

TimeoutInterval = EstimatedRTT + 4*DevRTT
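
The timeout computation can be put together in a few lines of Python. This is a sketch under stated assumptions: the class name and the starting values are made up, α = 0.125 and β = 0.25 are the typical values quoted above, and DevRTT is updated with the EstimatedRTT from before the current sample, which is one common ordering.

```python
class RttEstimator:
    """EWMA-based RTT estimate and timeout interval (illustrative sketch)."""

    def __init__(self, first_estimate, first_deviation, alpha=0.125, beta=0.25):
        self.estimated_rtt = first_estimate
        self.dev_rtt = first_deviation
        self.alpha = alpha
        self.beta = beta

    def update(self, sample_rtt):
        """Fold one SampleRTT into the estimates and return the new timeout."""
        # Assumption: deviation uses the estimate from before this sample.
        self.dev_rtt = ((1 - self.beta) * self.dev_rtt
                        + self.beta * abs(sample_rtt - self.estimated_rtt))
        self.estimated_rtt = ((1 - self.alpha) * self.estimated_rtt
                              + self.alpha * sample_rtt)
        return self.timeout_interval()

    def timeout_interval(self):
        return self.estimated_rtt + 4 * self.dev_rtt


estimator = RttEstimator(first_estimate=100.0, first_deviation=10.0)  # milliseconds
timeout = estimator.update(120.0)
```

With these numbers, one sample of 120 ms moves EstimatedRTT from 100 to 102.5 ms and DevRTT from 10 to 12.5 ms, giving a timeout of 152.5 ms.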

43

An Example TCP Session

44

TCP:

Congestion

Control

45

TCP Congestion Control

Closed-loop, end-to-end, window-based congestion

control

Designed by Van Jacobson in late 1980s, based on

the AIMD alg. of Dah-Ming Chiu and Raj Jain

Works well so far: the bandwidth of the Internet

has increased by more than 200,000 times

Many versions

TCP/Tahoe: this is a less optimized version

TCP/Reno: many OSs today implement Reno type

congestion control

TCP/Vegas: not currently used

For more details: see TCP/IP illustrated; or read

http://lxr.linux.no/source/net/ipv4/tcp_input.c for linux implementation

46

TCP & AIMD: congestion

Dynamic window size [Van Jacobson]

Initialization: MI

• Slow start

Steady state: AIMD

• Congestion Avoidance

Congestion = timeout

TCP Tahoe

Congestion = timeout || 3 duplicate ACK

TCP Reno & TCP new Reno

Congestion = higher latency

TCP Vegas

47

Visualization of the Two Phases

MSS

threshold

Congestion avoidance

Congwin

Slow start

48

TCP Slowstart: MI

Slowstart algorithm

initialize: Congwin = 1 MSS

for (each segment ACKed)

Congwin = Congwin + MSS

until (congestion event OR CongWin > threshold)

exponential increase (per RTT) in window size (not so slow!)

In case of timeout:

Threshold = CongWin/2

[Figure: Host A / Host B timeline; the number of segments sent per RTT doubles]

49

TCP Tahoe Congestion Avoidance

Congestion avoidance

/* slowstart is over

*/

/* Congwin > threshold */

Until (timeout) { /* loss event */

on every ACK:

Congwin/MSS += 1/(Congwin/MSS) /* i.e. Congwin += MSS*MSS/Congwin */

}

threshold = Congwin/2

Congwin = 1 MSS

perform slowstart

TCP Tahoe

50

TCP Reno

Fast retransmit:

Try to avoid waiting for timeout

Fast recovery:

Try to avoid slowstart.

used only on triple duplicate event

Single packet drop: not too bad

51

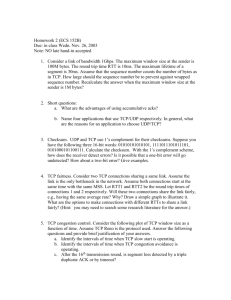

TCP Reno cwnd Trace

[Figure: Congestion Window (0 to 70) against Time (0 to 60). The trace alternates slow start periods and congestion avoidance (CA, additive increase) periods; it marks the threshold, triple duplicate ACKs followed by fast retransmission, and timeouts followed by slow start]

52

TCP congestion control: bandwidth probing

“probing for bandwidth”: increase transmission rate on receipt

of ACK, until eventually loss occurs, then decrease

transmission rate

continue to increase on ACK, decrease on loss (since available

bandwidth is changing, depending on other connections in network)

ACKs being received,

so increase rate

X loss, so decrease rate

sending rate

X

X

X

TCP’s

“sawtooth”

behavior

X

time

Q: how fast to increase/decrease?

details to follow

3-53

TCP Congestion Control: details

sender limits rate by limiting number of unACKed bytes “in pipeline”:

LastByteSent - LastByteAcked ≤ cwnd

cwnd: differs from rwnd (how, why?)

sender limited by min(cwnd, rwnd)

roughly, rate = cwnd/RTT bytes/sec

cwnd is dynamic, function of perceived network congestion

[Figure: sender transmits cwnd bytes, then waits one RTT for the ACK(s)]

3-54

TCP Congestion Control: more details

segment loss event: reducing cwnd

timeout: no response from receiver

cut cwnd to 1

3 duplicate ACKs: at least some segments getting through (recall fast retransmit)

cut cwnd in half, less aggressively than on timeout

ACK received: increase cwnd

slowstart phase:

start low (cwnd=MSS)

increase cwnd exponentially fast (despite name)

used: at connection start, or following timeout

congestion avoidance:

increase cwnd linearly

3-55

TCP Slow Start

when connection begins, cwnd = 1 MSS

example: MSS = 500 bytes & RTT = 200 msec

initial rate = 20 kbps

available bandwidth may be >> MSS/RTT

desirable to quickly ramp up to respectable rate

increase rate exponentially until first loss event or when threshold reached

double cwnd every RTT

done by incrementing cwnd by 1 MSS for every ACK received

[Figure: Host A / Host B timeline; the number of segments sent doubles every RTT]

3-56

TCP: congestion avoidance

when cwnd > ssthresh

grow cwnd linearly

increase cwnd

by 1 MSS per RTT

approach possible

congestion slower than

in slowstart

implementation: cwnd =

cwnd + MSS^2/cwnd

for each ACK received

AIMD

ACKs: increase cwnd by

1 MSS per RTT: additive

increase

loss: cut cwnd in half

(non-timeout-detected loss

): multiplicative decrease

true in macro picture

may require Slow Start

first to grow up to this

AIMD: Additive Increase

Multiplicative Decrease

3-57

TCP congestion control FSM: overview

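[Figure: state machine with three states: slow start, congestion avoidance, fast recovery]

slow start -> congestion avoidance: cwnd > ssthresh

slow start -> slow start: loss: timeout

congestion avoidance -> slow start: loss: timeout

fast recovery -> slow start: loss: timeout

slow start -> fast recovery: loss: 3dupACK

congestion avoidance -> fast recovery: loss: 3dupACK

fast recovery -> congestion avoidance: new ACK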

3-58

TCP congestion control FSM: details

Initial transition (no event, Λ), into slow start:

cwnd = 1 MSS

ssthresh = 64 KB

dupACKcount = 0

State: slow start

duplicate ACK: dupACKcount++

new ACK: cwnd = cwnd+MSS; dupACKcount = 0; transmit new segment(s), as allowed

timeout: ssthresh = cwnd/2; cwnd = 1 MSS; dupACKcount = 0; retransmit missing segment (stay in slow start)

cwnd > ssthresh: no action (Λ), go to congestion avoidance

dupACKcount == 3: ssthresh = cwnd/2; cwnd = ssthresh + 3 MSS; retransmit missing segment; go to fast recovery

State: congestion avoidance

new ACK: cwnd = cwnd + MSS * (MSS/cwnd); dupACKcount = 0; transmit new segment(s), as allowed

duplicate ACK: dupACKcount++

timeout: ssthresh = cwnd/2; cwnd = 1 MSS; dupACKcount = 0; retransmit missing segment; go to slow start

dupACKcount == 3: ssthresh = cwnd/2; cwnd = ssthresh + 3 MSS; retransmit missing segment; go to fast recovery

State: fast recovery

duplicate ACK: cwnd = cwnd + MSS; transmit new segment(s), as allowed

New ACK: cwnd = ssthresh; dupACKcount = 0; go to congestion avoidance

timeout: ssthresh = cwnd/2; cwnd = 1 MSS; dupACKcount = 0; retransmit missing segment; go to slow start
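
The state machine can be followed step by step with a compact Python sketch. It tracks only `cwnd`, `ssthresh`, the duplicate ACK counter and the state; sending and retransmitting segments is left out. The class name `RenoSender` and the value MSS = 1000 bytes are illustrative assumptions.

```python
MSS = 1000  # bytes; illustrative value


class RenoSender:
    """Window bookkeeping of the TCP Reno congestion control FSM (sketch only)."""

    def __init__(self):
        self.state = "slow_start"
        self.cwnd = 1 * MSS
        self.ssthresh = 64 * 1024
        self.dup_ack_count = 0

    def on_new_ack(self):
        if self.state == "fast_recovery":
            self.cwnd = self.ssthresh            # deflate the window
            self.state = "congestion_avoidance"
        elif self.state == "slow_start":
            self.cwnd += MSS                     # doubles cwnd every RTT
            if self.cwnd > self.ssthresh:
                self.state = "congestion_avoidance"
        else:
            self.cwnd += MSS * MSS / self.cwnd   # about 1 MSS per RTT
        self.dup_ack_count = 0

    def on_dup_ack(self):
        if self.state == "fast_recovery":
            self.cwnd += MSS
            return
        self.dup_ack_count += 1
        if self.dup_ack_count == 3:
            self.ssthresh = self.cwnd / 2
            self.cwnd = self.ssthresh + 3 * MSS
            self.state = "fast_recovery"         # missing segment is retransmitted here

    def on_timeout(self):
        self.ssthresh = self.cwnd / 2
        self.cwnd = 1 * MSS
        self.dup_ack_count = 0
        self.state = "slow_start"                # missing segment is retransmitted here


s = RenoSender()
for _ in range(3):
    s.on_new_ack()                # slow start: cwnd 1000 -> 4000
for _ in range(3):
    s.on_dup_ack()                # triple duplicate ACK -> fast recovery
after_3dup = (s.state, s.ssthresh, s.cwnd)
s.on_new_ack()                    # leave fast recovery
s.on_new_ack()                    # one congestion avoidance step
after_ca = (s.state, s.cwnd)
s.on_timeout()
after_timeout = (s.state, s.ssthresh, s.cwnd)
```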

3-59

Popular “flavors” of TCP

[Figure: cwnd window size (in segments) against Transmission round for TCP Tahoe and TCP Reno, with the ssthresh levels marked]

3-60

Summary: TCP Congestion Control

when cwnd < ssthresh, sender in slow-start

phase, window grows exponentially.

when cwnd >= ssthresh, sender is in congestion-

avoidance phase, window grows linearly.

when triple duplicate ACK occurs, ssthresh set

to cwnd/2, cwnd set to ~ ssthresh

when timeout occurs, ssthresh set to cwnd/2,

cwnd set to 1 MSS.

3-61

TCP throughput

Q: what’s average throughput of TCP as function of window size, RTT?

ignoring slow start

let W be window size when loss occurs.

when window is W, throughput is W/RTT

just after loss, window drops to W/2, throughput to W/(2*RTT).

average throughput: 0.75 W/RTT
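
The 0.75 factor is the midpoint of a window that climbs linearly from W/2 back to W between losses. A short Python sketch can confirm this numerically; the function name and the sample values of W and RTT are made up for the illustration.

```python
def average_sawtooth_throughput(w: float, rtt: float, steps: int = 1000) -> float:
    """Average of throughput samples while the window grows linearly from W/2 to W."""
    windows = [w / 2 + (w / 2) * i / steps for i in range(steps + 1)]
    return sum(windows) / len(windows) / rtt


W = 80_000   # bytes, hypothetical window size at the moment of loss
RTT = 0.1    # seconds, hypothetical

average = average_sawtooth_throughput(W, RTT)
expected = 0.75 * W / RTT
```

The samples are evenly spaced between W/2 and W, so their mean is (W/2 + W)/2 = 0.75 W, matching the slide up to floating-point rounding.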

3-62

TCP Fairness

fairness goal: if K TCP sessions share same

bottleneck link of bandwidth R, each should have

average rate of R/K

TCP connection 1

TCP

connection 2

bottleneck

router

capacity R

3-63

Why is TCP fair?

Two competing sessions: (Tahoe, Slow Start ignored)

Additive increase gives slope of 1, as throughput increases

multiplicative decrease decreases throughput proportionally

[Figure: throughput plane with Connection 1 throughput on the horizontal axis; both axes run from 0 to R; the diagonal is the “equal bandwidth share” line]

start at (a,b)

congestion avoidance: additive increase to (a+t, b+t) => y = x+(b-a)

loss: decrease window by factor of 2, to (a/2+t/2, b/2+t/2) => y = x+(b-a)/2

congestion avoidance: additive increase to (a/2+t/2+t1, b/2+t/2+t1) => y = x+(b-a)/2

loss: decrease window by factor of 2 => y = x+(b-a)/4
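
The convergence argument can be replayed with a small simulation. The sketch below is a deliberately idealized model, not real TCP: both sessions see every loss at the same time, increase by one unit per round, and halve together whenever their combined rate exceeds the capacity R. The function name and the starting rates are assumptions.

```python
def aimd_two_flows(x: float, y: float, capacity: float, rounds: int):
    """Two synchronized AIMD flows sharing one bottleneck (idealized model)."""
    for _ in range(rounds):
        if x + y > capacity:
            x, y = x / 2, y / 2      # loss: multiplicative decrease for both
        else:
            x, y = x + 1, y + 1      # congestion avoidance: additive increase
    return x, y


start_gap = abs(10 - 70)
x, y = aimd_two_flows(x=10, y=70, capacity=100, rounds=1000)
end_gap = abs(x - y)
```

Additive increase leaves the gap between the two rates unchanged and every loss halves it, so the operating point drifts toward the equal-share line, as the y = x+(b-a), y = x+(b-a)/2, y = x+(b-a)/4 sequence above suggests.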

3-64

Fairness (more)

Fairness and UDP

multimedia apps often

do not use TCP

do not want rate

throttled by congestion

control

instead use UDP:

pump audio/video at

constant rate, tolerate

packet loss

Fairness and parallel TCP

connections

nothing prevents app from

opening parallel

connections between 2

hosts.

web browsers do this

example: link of rate R

supporting already

9 connections;

new app asks for 1 TCP, gets

rate R/10

new app asks for 11 TCPs, gets 11R/20, i.e. more than R/2 !!

3-65

Exercise

MSS = 1000

Only one event per row

3-66