PowerPoint - LINGUIST List

advertisement

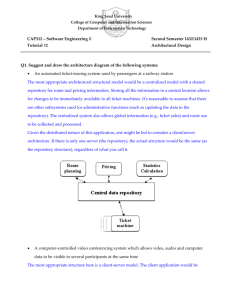

Overview: Requirements for implementing the AARDVARC vision
Gary Simons, SIL International
AARDVARC Workshop, 9–11 May 2013, Ypsilanti, MI

The context
- A cross-cutting, NSF-wide initiative called Cyberinfrastructure Framework for 21st Century Science and Engineering (CIF21)
- Vision statement: “CIF21 will provide a comprehensive, integrated, sustainable, and secure cyberinfrastructure to accelerate research and education and new functional capabilities in computational and data-intensive science and engineering, thereby transforming our ability to effectively address and solve the many complex problems facing science and society.”

The funding program
- The AARDVARC grant was awarded by NSF’s program on Building Community and Capacity for Data-Intensive Research in the Social, Behavioral, and Economic Sciences and in Education and Human Resources (BCC-SBE/EHR)
- We “seek to enable research communities to develop visions, teams, and prototype capabilities dedicated to creating and utilizing innovative and large-scale data resources and relevant analytic techniques to advance fundamental research for the SBE and EHR areas of research.”

A three-stage program
1. Funded projects focus on bringing together cross-disciplinary communities to work on the design of cyberinfrastructure for data-intensive research. [2012 and 2013]
2. A selection (perhaps one-fourth) of these communities will be funded to develop prototypes of the facilities designed in Stage 1. [Beginning 2014, funding permitting]
3. An even smaller number of projects will be funded to develop the actual facility.

Roadmap for current project
- The competition will be fierce across a wide range of disciplines.
- In order to succeed in the second stage of the program, we must write a top-25% proposal.
- Can we put ourselves in the shoes of potential reviewers and anticipate what the likely critiques of an AARDVARC implementation proposal might be?
If so, that could help us set an agenda for the problems we should be working on during the course of the current project.

Fast forward to implementation
- The current AARDVARC proposal is not an implementation proposal
- However, reading it through that lens sheds light on what would need to be addressed if it were
- Reading the proposal in this way, I have imagined four show-stopping reviewer critiques that we want to be sure to avoid
- This presentation discusses the requirements for an implementation proposal that would avoid these critiques

Critiques we want to avoid
1. The focus seems too narrow to be truly transformative.
2. The issues of sustainability are not adequately addressed.
3. It is not clear that automatic transcription of under-resourced languages is even possible.
4. There is not an adequate story about how the community will work on a large scale to fill the repository.

1. Find the right framing
- Vision of CIF21: “transform our ability to effectively address and solve the many complex problems facing science and society”
- Potential critique: The AARDVARC focus seems too narrow to be truly transformative.
- Requirement: A successful proposal will need to frame the proposed cyberinfrastructure in terms that non-linguists will embrace as truly transformative.

Problem
- The name AARDVARC frames the problem in terms of a repository for automatically annotated video and audio resources
- Among non-linguists, is a framing in terms of automatic annotation likely to rise to the top 25% of cross-cutting problems?
Probably not, since solving the transcription bottleneck puts the focus on a means to the end, rather than the end itself.
- The true end is having a repository of data from every language

A more compelling framing
- The AARDVARC name fails to name the main thing: language
- The most fundamental problem for data-intensive research in the 21st century is that we lack a repository of interoperable data from every human language
- Among non-linguists, would a framing like that rise to the top 25% of cross-cutting problems?
- This seems much more likely
- And others have already laid some groundwork

Human Language Project
- Building by analogy to the Human Genome Project, Abney and Bird have proposed a Human Language Project to the computational linguistics community:
  “We present a grand challenge to build a corpus that will include all of the world’s languages, in a consistent structure that permits large-scale cross-linguistic processing, enabling the study of universal linguistics.” (Abney and Bird 2010)
- In two conference papers, they have argued the motivation for the project and specified basic formats for data

Language Commons
- Building on “the commons” tradition, Bice, Bird, and Welcher have spearheaded the Language Commons
- “The Language Commons is an international consortium that is creating a large collection of written and spoken language material, made available under open licenses.
The content includes text and speech corpora, along with translations, lexicons and other linguistic resources that support large-scale investigation of the world’s languages.”
- Currently an open collection in the Internet Archive
  - Browse: http://archive.org/details/LanguageCommons
  - Submit: http://upload.languagecommons.org/

We need to join forces
- AARDVARC, the Human Language Project, and the Language Commons are variations on the same fundamental vision: a repository of interoperable data from every human language
- Facing fierce competition with other disciplines, we are too small to have competing visions; we need a single vision that others will find compelling
- For an implementation proposal, we should all join forces to create a grand vision of cyberinfrastructure for language-related research in the 21st century that will embrace every language

References
- The Human Language Project: Building a universal corpus of the world’s languages. Steven Abney and Steven Bird. 2010. Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics, 88–97, Uppsala, Sweden.
- Towards a data model for the Universal Corpus. Steven Abney and Steven Bird. 2011. Proceedings of the 4th Workshop on Building and Using Comparable Corpora, 120–127, Portland, USA.
- The Language Commons Wiki. Ed Bice and others. 2010. Presentation at Wikimania 2010, Gdańsk, Poland.
- The Rosetta Project and The Language Commons. Laura Welcher. 2011. Presentation posted on The Long Now Foundation blog.

2. Ensure sustainability
- Vision of CIF21: “provide a … sustainable … cyberinfrastructure”
- Potential critique: The issues of sustainability are not adequately addressed.
- Requirement: A successful proposal will need to give a convincing plan for the sustainability of the infrastructure and the resources it houses.
A repository is not enough
- Simply building a repository does not ensure sustainability
- It must also function as an archive that guarantees access far into the future
- A huge NSF investment in the repository we envision would go to waste if it could not
  - Continue operating after the grant money ran out
  - Survive the inevitable upgrades to hardware and system software at the host institution
  - Recover from a disaster (natural or institutional)

Non-use is also waste
- Even deeper than the sustained functioning of a repository is the sustained use of the resources it houses
- The huge investment would also go to waste if
  - Resources deteriorate or slip into obsolete formats
  - Potential users never discover relevant resources
  - Users are unable to access discovered resources
  - Users cannot make sense of resources they access
  - Accessed resources are not compatible with the computational working environments of users

Conditions of sustainable use
- A complete proposal would address the conditions of sustainable use (Simons & Bird 2008, sec. 3)
  - Extant: preserved through off-site backup, refreshing copies, format migration, fixity metadata
  - Discoverable: adequate descriptive metadata accessed through open and easy-to-use search
  - Available: user has rights to access as well as a means of access
  - Interpretable: markup, encoding, abbreviations, terminology, methodologies are well documented
  - Portable: file formats that are open (not proprietary) and work on all platforms

Checklist for responsible archiving
- A good proposal would measure up against the criteria of the TAPS Checklist (Chang 2010, pp. 136–7)
- Based on a review of mainstream tools for assessing archival practices, TAPS is a checklist of 16 points to help linguists evaluate whether a prospective home for their data will be a responsible archive
  - Target: Are the mission and audience a good fit?
  - Access: Will your audiences have adequate access?
  - Preservation: Is the archive following best practices for ensuring long-term preservation?
  - Sustainability: Is the institution well situated for the long term?

A repository or an aggregator?
- Or should the infrastructure have an aggregator at the center rather than a single repository?
- In today’s web economy, being the aggregator (rather than a supplier) is the sweet spot (Simons 2007 paints a vision of such a cyberinfrastructure)
- This would require community agreement on:
  - Metadata standards (content, format, protocol): OLAC provides a starting point
  - Data standards (contents, formats, protocols): Universal Corpus provides a starting point
- Still needs a self-service default repository, e.g. Language Commons in Internet Archive

References
- Toward a global infrastructure for the sustainability of language resources. Gary Simons and Steven Bird. 2008. Proceedings of the 22nd Pacific Asia Conference on Language, Information and Computation, 20–22 November 2008, Cebu City, Philippines. Pages 87–100.
- TAPS: Checklist for responsible archiving of digital language resources. Debbie Chang. 2010. MA thesis, Graduate Institute of Applied Linguistics. Dallas, TX.
- Doing linguistics in the 21st century: Interoperation and the quest for the global riches of knowledge. Gary Simons. 2007. Proceedings of the E-MELD/DTS-L Workshop: Toward the Interoperability of Language Resources, 13–15 July 2007, Palo Alto, CA.

3. Focus on achievable automation
- Purpose of BCC-SBE/EHR: “enable research communities to develop … prototype capabilities”
- Potential critique: It is not clear that automatic transcription of under-resourced languages is even possible.
- Requirement: A successful proposal will need a compelling description of automated helps for annotation that can be implemented today.
The BCC-SBE/EHR vision
- The Building Community and Capacity for Data-Intensive Research program is about activity in the present to support research in the future:
  - Present activities: We “seek to enable research communities to develop visions, teams, and prototype capabilities
  - Present focus: dedicated to creating and utilizing innovative and large-scale data resources and relevant analytic techniques
  - Future result: to advance fundamental research for the SBE and EHR areas of research.”

Setting the right target
- Automated transcription of under-resourced languages is still in the future
  - It is an advance in fundamental research that can be furthered by a data-intensive cyberinfrastructure
- The follow-up proposal in the BCC program is an implementation proposal, not a research proposal
  - It must focus on the automated helps for annotation that we can implement immediately
  - It is not meant to be a request to support research on annotation tasks we cannot currently automate
  - It should implement a framework into which we can plug the latter as that research comes to fruition

Sorting the tasks
- During the AARDVARC project we should
  - Identify annotation tasks that we can automate now
    - Plan work modules for these in the proposed implementation grant
  - Identify annotation tasks that are clearly in the future
    - Pursue research grants on these through the normal research programs
    - The implementation proposal would mention supplying data to future research as within its broader impacts
  - Identify annotation tasks that are borderline
    - Conduct proof-of-concept testing now to determine whether each belongs in the first set or the second set

Breaking the bottleneck
- The repository should embrace all strategies for breaking the transcription bottleneck
  - Focus on the end of data in every language, as opposed to a particular means for getting it
- A promising new strategy is oral annotation
- Woodbury (2003) proposed this to turn a huge collection of tapes from 15 years of Cup’ik radio broadcasts into usable data
  - Make running oral translations
  - Do careful respeaking of “hard-to-hear tapes”
- This inspired the development of BOLD: Basic Oral Language Documentation

References
- Defining documentary linguistics. Anthony Woodbury. 2003. In Peter Austin (ed.), Language Documentation and Description 1:35–51. London: SOAS.
- The rise of documentary linguistics and a new kind of corpus. Gary Simons. 2008. Presented at 5th National Natural Language Research Symposium, De La Salle University, Manila, 25 Nov 2008.
- Basic Oral Language Documentation. D. Will Reiman. 2010. Language Documentation and Conservation, Vol. 4, pp. 254–268.
- A scalable method for preserving oral literature from small languages. Steven Bird. 2010. Proceedings of the 12th International Conference on Asia-Pacific Digital Libraries, 5–14, Gold Coast, Australia.
- To BOLDly go where no one has gone before. Brenda Boerger. 2011. Language Documentation and Conservation, Vol. 5, pp. 208–233.

Example of respeaking
- From fieldwork of Will Reiman on Kasanga [cji] language, Guinea-Bissau
- Original recording on first recorder
- Careful respeaking on second recorder
  - Original played back (with pauses) into left channel
  - Respoken on mike into right channel

A known best practice in field methods
- Instructions for the Recording of Linguistic Data. In Bouquiaux and Thomas (1976), trans. Roberts (1992). Studying and Describing an Unwritten Language. Dallas: Summer Institute of Linguistics.
- “Go over this spontaneous recording, either with the narrator himself or with a qualified speaker, in order to have it repeated sentence by sentence, in a careful, relatively slow, yet normal manner, and to have it whistled (tone languages).” (p. 180)
- Goes on to describe method using 2 tape recorders
- This method may be even more essential today as we prepare recordings for automatic transcription

BOLD:PNG
- A project led by Steven Bird; see www.boldpng.info
- Trained university students to use low-cost digital recorders to go back to their home villages to make recordings and to annotate them orally
- Problems:
  - Managing all the files on all the recorders did not scale
  - Two-recorder annotation was too complicated

Working on solutions
- Language Preservation 2.0: Crowdsourcing Oral Language Documentation using Mobile Devices, http://lp20.org/
- They have developed an Android app, Aikuma
  - Files shared within community via Internet or local Wi-Fi hub; supports voting for what to release
  - Annotate on a single device with a simple two-button tool
- Blog post containing two demo videos from Bird’s current field trip in the Amazon

4. Foster global collaboration
- Purpose of BCC-SBE/EHR: “enable research communities … to creat[e] new, large-scale, next-generation data resources”
- Potential critique: There is not an adequate story about how the community will work on a large scale.
- Requirement: A successful proposal will need a compelling account of how a global community of researchers, speakers, and citizen scientists will collaborate to fill the repository with annotated resources.
The real challenge
- Building the repository is one thing, but filling it with resources from most languages will be quite another
- Funded staff will be able to implement the repository, but it will take thousands of volunteers to really fill it
- Realizing the vision will depend on
  - Mobilizing the research community to participate
  - Mobilizing speaker communities to participate
  - Mobilizing citizen scientists to participate
  - Building an infrastructure that supports collaboration among all these players on a global scale

Resources as open-ended
- Repository must support open-ended annotation
- After initial deposit, other players should be able to
  - Add careful respeaking
  - Add a translation (either oral or written)
  - Add a transcription (of text or of translation)
  - Add a translation of the translation
  - Invoke an automatic transcription or translation
  - Check and revise the automatic output
- Each addition should be a separate deposit (with its own metadata) that links back to what it annotates (i.e., stand-off markup)

Resource workflow
- The types and languages of the complete set of annotations associated with a resource comprise the state of that resource
- The annotation tasks are operators on that state
  - Each annotation task has a prerequisite state
  - Performing the task changes the state of the resource
- This defines an implicit workflow
  - For any resource, there is a set of possible next tasks
  - The infrastructure needs to manage that workflow

Supply and demand
- We need to match up two things:
  - The huge demand for annotation tasks to be done: all of the possible next tasks for all resources
  - The supply of people worldwide who could do them
- Our infrastructure needs to be a marketplace that matches supply with demand
  - E.g., eBay, eHarmony, mTurk.com
  - Match a user’s language profile to find next tasks to do
- E.g., TED’s Open Translation Project using Amara
  - Web tool to segment videos and add subtitles
  - 140 languages, ~10,000 translators, >50,000 translations

If we build it … They won’t necessarily come!
- In addition to describing the infrastructure we would implement to match supply and demand, a compelling proposal would also:
  - Describe the plans for organizing the people who participate (including governance)
  - Describe plans for mobilizing the various target communities: researchers, speakers, citizens
  - Describe incentives for participation, especially ones that are built into the design of the infrastructure

Conclusion
- The AARDVARC project gives us the opportunity to build the vision and plans for a sustainable cyberinfrastructure to
  - Collect and provide access to interoperable data resources from every human language
  - Harness automation wherever possible to add the needed transcriptions and translations
  - Create a marketplace that will permit thousands worldwide to collaborate in performing the annotation tasks that cannot be automated
- Thus transforming our ability to address and solve language-related problems facing science and society
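
Illustrative sketch: resource workflow and task matching

The "Resource workflow" and "Supply and demand" slides describe annotation tasks as operators on a resource's state, and a marketplace that matches a user's language profile to possible next tasks. The following is a minimal sketch of that idea, not a proposed design. It assumes a state can be modeled as a set of (annotation type, language) pairs. The task names, their prerequisites, the functions next_tasks and perform, and the use of "por" as a second language are all hypothetical choices made for illustration; only the Kasanga code [cji] comes from the slides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str         # hypothetical task label
    requires: str     # annotation type that must already exist (prerequisite state)
    produces: str     # annotation type the task adds to the resource
    translates: bool  # True if the output is in a different language than the input


# Illustrative task catalogue; the prerequisites are assumptions, not a specification.
TASKS = (
    Task("respeak", "recording", "respeaking", False),
    Task("transcribe", "respeaking", "transcription", False),
    Task("translate", "recording", "translation", True),
)


def next_tasks(state, profile):
    """List (task, source language, output language) triples that are possible
    for this resource state and that a user with this language profile could do."""
    found = set()
    for task in TASKS:
        for kind, lang in state:
            # The prerequisite annotation must exist in a language the user knows.
            if kind != task.requires or lang not in profile:
                continue
            targets = profile - {lang} if task.translates else {lang}
            for target in targets:
                # Skip tasks whose output is already part of the state.
                if (task.produces, target) not in state:
                    found.add((task.name, lang, target))
    return sorted(found)


def perform(state, task_name, source, target):
    """Apply a task as an operator on the state, returning the new state."""
    task = next(t for t in TASKS if t.name == task_name)
    if (task.requires, source) not in state:
        raise ValueError(f"{task_name} needs a {task.requires} in {source}")
    return state | {(task.produces, target)}


if __name__ == "__main__":
    # Initial deposit: a recording in Kasanga [cji].
    state = frozenset({("recording", "cji")})
    print(next_tasks(state, {"cji", "por"}))
    state = perform(state, "respeak", "cji", "cji")
    print(next_tasks(state, {"cji"}))
```

In this sketch each call to perform would correspond to one separate deposit that links back to what it annotates, and a marketplace could run something like next_tasks over all resources to compute the demand side for a given volunteer.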