Evaluation and Assessment of National Science Foundation Projects

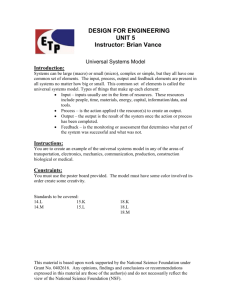

advertisement

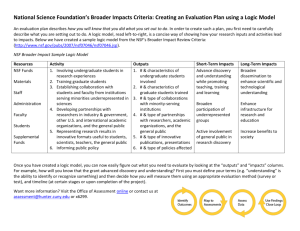

National Science Foundation Project Evaluation and Assessment
Dennis W. Sunal, Susan E. Thomas
Alabama Science Teaching and Learning Center, http://astlc.ua.edu/
College of Education, University of Alabama

Outline
• Consider your present approach and difficulties toward evaluation
• Consider NSF’s perception of evaluation difficulties
• Explore the process and skills associated with proficiently planning a project evaluation
• Investigate difficulties with a case study example
• Create your own evaluation plan

Group Activity:
• Form small groups of two or three
• Group members will list “project evaluation” difficulties they have recognized in the past
• Create a question from one of the listed items
“What is the difficulty?”

Difficulties with NSF Project Evaluation
Whole Audience Activity: Consider the question: What are the critical areas that define “evaluation difficulties” in your projects?

NSF Recognized Common Difficulties with Project Evaluations
NSF-diagnosed difficulties:
• Lack of funding planned for evaluation
• Too short a time frame (e.g., outcomes that take longer than the life of the project to appear, such as following a graduate student for years)
• Use of only quantitative methods
• Lack of knowledge of evaluation techniques
• Failure to use available information about what has worked in other projects
• Data collection not extended over time
• Ambiguous requirements and/or outcomes

Evaluation
• Evaluation has varied definitions…
• Accepted definition for NSF projects: “Systematic investigation of the worth or merit of an object…” (Joint Committee on Standards for Educational Evaluation)

NSF Rationale and Purpose of Conducting Project Evaluation
• Develop a diverse, internationally competitive, and globally engaged workforce of scientists, engineers, and well-prepared citizens
• Enable discoveries across the frontiers of science and engineering connected to learning, innovation, and service to society
• Provide broadly accessible, state-of-the-art information bases and shared research and education tools

How NSF Thinks About Evaluation
• A component that is an integral part of the research and development process
• It is not something that comes at the end of the project
• It is a continuous process that begins during planning
• Evaluation is regularly and iteratively performed during the project and is completed at the end of the project
• Different questions are appropriate at different phases of the project

Evaluation can be accomplished in different ways
• There is no single model that can be universally applied
• Data gathered may be quantitative, qualitative, or both
[Figure: The Project Development/Evaluation Cycle, with stages labeled project planning/modification, needs assessment and collection of baseline data, project development, and project evaluation]

Important Criteria for Evaluation
• Focus on an important question about what is being accomplished and learned in the project
• Emphasize gathering data that can be used to identify necessary midproject changes
• Plan a strong evaluation design (e.g., with comparison groups and well-chosen samples) that clearly addresses the main questions and rules out threats to validity
• Use sound data collection instruments, appropriate to the questions addressed
• Establish procedures to ensure the evaluation is carried out objectively and sources of bias are eliminated
• Use data analysis appropriate to the questions asked and the data collection methodologies being used
• Set a reasonable budget given the size of the project, about 5 to 10 percent of the project budget

NSF Expects
The grantee will:
• Clearly lay out an evaluation plan in the proposal
• Refine the plan after the award
• Include in the final report a separate section on the evaluation, its purpose, and what was found
In some cases a separate report or interim reports may be expected.

Example RFP - Faculty Early Career Development
Proposal Content
A. Project Summary: Summarize the integrated education and research activities of the plan.
B. Project Description: Provide results from prior NSF support. Provide a specific proposal for activities over 5 years that will build a firm foundation for a lifetime of integrated contributions to research and education.
Plan for development should include:
• The objectives and significance of the research and education activities
• The relationship of the research to current knowledge in the field
• An outline of the plan, including evaluation of the educational activities on a yearly basis
• The relationship of the plan to career goals and objectives
• A summary of prior research and educational accomplishments

NSF Merit Review Broader Impacts Criterion: Representative Activities
NSF criteria relate to 1) intellectual merit and 2) broader impacts.
Criteria: Broader Impacts of the Proposed Activity
1. Does the activity promote discovery, understanding, teaching, training, and learning?
2. Does the proposed activity include participants from underrepresented groups?
3. Does it enhance the infrastructure for research and education?
4. Will the results be disseminated broadly to enhance scientific and technological understanding?
5. What are the benefits of the proposed activity to society?

1. Advance discovery and understanding while promoting teaching, training, and learning
• Integrate research activities into the teaching of science at all levels
• Involve students
• Participate in recruiting and professional development of teachers
• Develop research-based educational materials and databases
• Partner researchers and educators
• Integrate graduate and undergraduate students
• Develop, adapt, or disseminate effective models and pedagogic approaches to teaching

2. Broaden participation of underrepresented groups
• Establish research and education collaborations with students and teachers
• Include students from underrepresented groups
• Make visits and presentations on school campuses
• Mentor early year scientists and engineers
• Participate in developing new approaches to engage underserved individuals
• Participate in conferences, workshops, and field activities

3. Enhance infrastructure for research and education
• Identify and establish collaborations between disciplines and institutions
• Stimulate and support next-generation instrumentation and research and education platforms
• Maintain and modernize shared research and education infrastructure
• Upgrade the computation and computing infrastructure
• Develop activities that ensure multi-user facilities are sites of research and mentoring

4. Broaden dissemination to enhance scientific and technological understanding
• Partner with museums, science centers, and others to develop exhibits
• Involve the public and industry in research and education activities
• Give presentations to the broader community
• Make data available in a timely manner
• Publish in diverse media
• Present research and education results in formats useful to policy makers
• Participate in multi- and interdisciplinary conferences, workshops, and research activities
• Integrate research with education activities

5. Benefits to society
• Demonstrate the linkage between discovery and societal benefit through application of research and education results
• Partner with others on projects to integrate research into broader programs
• Analyze, interpret, and synthesize research and education results in formats useful to nonscientists

Planning Evaluation
• Necessary to assess understanding of a project’s goals, objectives, strategies, and timelines
• The criteria can also indicate a baseline for measuring success

Planning Evaluation Addresses the Following…
• Why was the project developed?
• Who are the stakeholders?
• What do the stakeholders want to know?
• What are the activities and strategies that will address the problem that was identified?
• Where will the program be located?
• How long will the program operate?
• How much does it cost in relation to outcomes?
• What are the measurable outcomes to be achieved?
• How will data be collected?

Two Kinds of Evaluation
• Program Evaluation determines the value of a collection of projects (e.g., the Alabama DOE-EPSCoR Program)
• Project Evaluation focuses on an individual project and its many components funded under the umbrella of the program (research plan components, educational plan components). It answers a limited number of questions.

Types of Evaluation
• Formative Evaluation
  – Implementation
  – Progress
• Summative Evaluation
  – Near the end of a major milestone, or
  – At the end of a project

Formative Evaluation
Assesses ongoing project activities…
• Purpose: assess initial and ongoing project activities
• Done regularly at several points throughout the developmental life of the project
• Main goal is to check, monitor, and improve: to see if activities are being conducted and components are progressing toward project goals

Implementation Evaluation
• Early check to see if all elements are in place
• Assess whether the project is being conducted as planned
• Done early, several times during a life cycle
• Cannot evaluate outcomes or impact unless you are sure components are operating according to plan
Sample question guides:
• Were appropriate students selected? Was the make-up of the group consistent with NSF’s goal for a more diverse workforce?
• Were appropriate recruitment strategies used?
• Do activities and strategies match those described in the plan?

Progress Evaluation
• Assess progress in meeting goals
• Collect information to learn if benchmarks of progress were met, what impact activities have had, and to determine unexpected developments
• Useful throughout the life of the project
• Can contribute to summative evaluation
Sample question guides:
• Are participants moving toward goals and improving their understanding of the research process?
• Are numbers of students reached increasing?
• Is progress sufficient to reach goals?

Summative Evaluation
Assesses the project’s success and answers…
• Purpose: Assess the quality and impact of a fully mature project
• Was the project successful?
• To what extent did the project meet the overall goals?
• What components were the most effective?
• Were the results worth the project’s cost?
• Is the project replicable?
Characteristics
• Collects information about outcomes and impacts and the processes, strategies, and activities that led to them
• Needed for decision making: disseminate, continue probationary status, modify, or discontinue
• Important to have an external evaluator who is seen as objective and unbiased, or an internal evaluation with an outside agent reviewing the design and findings
• Consider unexpected outcomes

Evaluation Compared to Other Data Gathering Activities
• Evaluation differs from other types of activities that provide information on accountability
• Different information serves different purposes
[Figure: data gathering activities shown: project description (proposal), performance indicators and milestones, formative evaluation, summative evaluation, basic research studies]

Formative vs. Summative
“When the cook tastes the soup, that is formative; when the guests taste the soup, that is summative.”

The Evaluation Process
Steps in conducting an evaluation (six phases):
1. Develop a conceptual model of the program and identify key evaluation points
2. Develop evaluation questions and define measurable outcomes
3. Develop an evaluation design
4. Collect data
5. Analyze data
6. Report
Conditions to be met:
• Information gathered is perceived as valuable or useful (the right questions were asked)
• Information gathered is seen as credible or convincing (the right techniques were used)
• Report is timely and understandable (it contributes to the decision-making process)

1. Develop a Conceptual Model
• Start with a conceptual model to which an evaluation design is applied. In the case below a “logic model” is applied.
• Identify program components and show expected connections among them.
Inputs → Activities → Short-Term Outcomes → Long-Term Outcomes

Inputs (Examples)
Resource streams:
• NSF funds
• Local and state funds
• Other partnerships
• In-kind contributions

Activities (Examples)
Services, materials, and actions that characterize project goals:
• Recruit traditionally underrepresented students
• Infrastructure development
• Provision of extended standards-based professional development
• Public outreach
• Mentoring by a senior scientist

Short-Term Outcomes (Examples)
• Effective use of new materials
• Numbers of people, products, or institutions reached (e.g., 17 students mentored)
• Changes resulting from experience (e.g., impact on choice of major of research RAs)

Long-Term Outcomes (Examples)
Broader, more enduring impact:
• Changes in instructional practice leading to enhanced student learning and performance
• Selecting a career in NSF-related research activity
Next steps:
• Determine, review, and/or clarify the timeline
• Identify critical achievements and the times by which they need to be met

2. Question Development
• Identify key stakeholders and audiences early to help shape questions. Multiple audiences exist (scientists, NSF, students, administration, community, …).
• Formulate potential evaluation questions of interest, considering stakeholders and audiences.
• Define outcomes in measurable terms, including criteria for success.
• Determine feasibility, then prioritize and eliminate questions.

Questions to consider when developing an evaluation approach…
1. Who is the information for and who will use the findings?
2. What kinds of information are needed?
3. How is the information to be used?
4. When is the information needed?
5. What resources are available to conduct the evaluation?
6. Given the answers to the preceding questions, what methods are appropriate?

2. …Defining Measurable Outcomes
1. Briefly describe the purpose of the project.
2. State in terms of a general goal.
3. State an objective to be evaluated as clearly as you can.
4. Can this objective be broken down further?
5. Is the objective measurable? If not, restate.
6. Once you have completed the above steps, go back to #3 and write the next objective. Continue with steps 4, 5, and 6.

3. Develop an Evaluation Plan
• Select a methodological approach and data collection instruments
  – Quantitative or qualitative
  – Lead to different questions asked, timeframe, skills needed, and type of data seen as credible
• Determine who will be studied and when
  – Sampling, use of comparison groups, timing, sequencing, frequency of data collection, and cost

4. Data Collection
• Obtain necessary clearance and permission.
• Consider the needs and sensitivities of the respondents.
• Make sure your data collectors are adequately trained and will operate in an objective, unbiased manner.
• Obtain data from as many members of your sample as possible.
• Cause as little disruption as possible to the ongoing effort.

4. Data Collection Sources and Techniques
• Checklists or inventories
• Rating scales
• Semantic differentials
• Questionnaires
• Interviews
• Written responses
• Samples of work
• Tests
• Observations
• Audiotapes
• Videotapes
• Time-lapse photographs

5. Analysis of Data
• Check raw data and prepare data for analysis.
• Conduct initial analysis based on the evaluation plan.
• Conduct additional analysis based on the initial results.
• Integrate and synthesize findings.
• Develop conclusions regarding what the data show.

6. Reporting
Final reports typically include six major sections:
a) Background
b) Evaluation study questions
c) Evaluation procedures
d) Data analysis
e) Findings
f) Conclusions (and recommendations)

a. Background
The background section describes:
• The problem or needs addressed
• A literature review (if relevant)
• The stakeholders and their information needs
• The participants
• The project’s objectives
• The activities and components
• Location and planned longevity of the project
• The project’s expected measurable outcomes

b. Evaluation Study Questions
Describes and lists the questions the evaluation addressed.
Based on:
• Need for specific information
• Stakeholders

c. Evaluation Procedures
This section describes the groups and types of data collected and the instruments used for the data collection activities. For example:
• Data for identified critical indicators
• Ratings obtained in questionnaires and interviews
• Descriptions of activities from observations of key instrumental components of the project
• Examination of extant data records

d. Data Analysis
• Describes the techniques used to analyze the data collected
• Describes the various stages of analysis that were implemented
• Describes checks that were carried out to make sure that the data were free of as many confounding factors as possible
• Contains a discussion of the techniques used

e. Findings
• Presents the results of the analysis described previously
• Organized in terms of the questions presented in the section on evaluation study questions
• Provides a summary that presents the major conclusions

f. Conclusions
• Reports the findings with more broad-based and summative statements
• Statements must relate to the findings of the project’s evaluation questions and to the goals of the overall program.
• Sometimes includes recommendations for NSF or others undertaking projects similar in goals, focus, and scope.
• Recommendations must be based solely on robust findings that are data-based and not on anecdotal evidence.

Other Sections
• An abstract: a summary of the study and its findings presented in approximately one half page of text.
• An executive summary: a summary, which may be as long as 4 to 10 pages, that provides an overview of the evaluation, its findings, and implications.
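As a rough illustration of how outcomes defined in measurable terms with criteria for success (step 2) can feed findings organized by study question (step 6), here is a minimal Python sketch. The class name, function name, targets, and counts are hypothetical and are not part of any NSF guidance. Only the figure of 17 students mentored echoes an example from the slides, and the questions are borrowed from the sample question guides.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    question: str    # evaluation study question this outcome helps answer
    measure: str     # what is counted or rated
    target: float    # criterion for success (hypothetical value)
    observed: float  # value from the collected data (hypothetical value)

    def met(self) -> bool:
        # An outcome counts as met when the observed value reaches the target.
        return self.observed >= self.target


def findings_by_question(outcomes):
    """Group one-line findings under the study question they address."""
    grouped = {}
    for o in outcomes:
        status = "met" if o.met() else "not met"
        line = f"{o.measure}: observed {o.observed:g}, target {o.target:g} ({status})"
        grouped.setdefault(o.question, []).append(line)
    return grouped


# Hypothetical data for illustration only.
outcomes = [
    Outcome("Are numbers of students reached increasing?", "students mentored", 15, 17),
    Outcome("Are numbers of students reached increasing?", "school visits made", 6, 4),
    Outcome("Is the project replicable?", "partner sites adopting materials", 2, 2),
]

for question, lines in findings_by_question(outcomes).items():
    print(question)
    for line in lines:
        print("  -", line)
```

A threshold check like this suits only count-style outcomes. Qualitative findings from interviews or observations would need a different representation.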
Formal Report Outline
• Summary Sections
  – Abstract
  – Executive summary
• Background
  – Problems or needs addressed
  – Literature review
  – Stakeholders and their information needs
  – Participants
  – Project’s objectives
  – Activities and components
  – Location and planned longevity of the project
  – Resources used to implement the project
  – Constraints
• Evaluation study questions
  – Questions addressed by the study
  – Questions that could not be addressed by the study
• Evaluation Procedures
  – Sample: selection procedures, representativeness of the sample
  – Data collection: methods, instruments
  – Summary matrix: evaluation questions, variables, data gathering approaches, respondents, data collection schedule
• Findings
  – Results of the analysis organized by study questions
• Conclusions
  – Broad-based, summative statements
  – Recommendations, when applicable

Disseminating the Information
• Consider what various groups need to know
• Choose the best manner for communicating information to them
• Audiences
  – Funding sources and potential funding sources
  – Others involved with similar projects or areas of research
  – Community members, especially those who are directly involved with the project or might be involved
  – Members of the business or political community, etc.

Finding an Evaluator
• University setting: contact department chairs about the availability of staff skilled in project evaluation
• Independent contractors: department chairs, the phone book, state departments, private foundations (e.g., the Kellogg Foundation in Michigan), and other local colleges and universities will be cognizant of available services
• Contact other researchers or peruse research and evaluation reports

Evaluation Application Activity
Group Activity:
• Form small groups and assign roles.
• All group members should read the “Sample Proposal Outline” on the next set of slides.
• Consider the question: Develop an Evaluation Plan for the NSF Project.
“What should be considered?”

Proposal Outline
Sample Research Proposal
CAREER: Fundamental Micromechanics and Materials Dynamics of Thermal Barrier Coating Systems Containing Multiple Layers

A. Research Plan
1. Introduction
• General background
• General definitions
• Explanation of procedures
• Benefits
• Objectives
  – Characterize the dynamics of the process
  – Monitoring techniques
  – Development of models
2. Proposed Research
• Introduction
• Experimental Approach
• Materials
• Micro-structural Characterization
• Mechanical Properties
• Micro-mechanical Characterization – Nanoindentation
• Bulk Mechanical Properties
• Residual Stresses and Techniques
• Modeling
3. Prior Research Accomplishments
4. Significance and Impact of Research
5. Industrial Interest

B. Education Plan
1. Objectives
• Enhance the undergraduate curriculum
• Encourage the best undergraduate students to pursue graduate studies
• Increase diversity by attracting underrepresented minority students
2. Education Activities
  – Educate undergraduate and graduate students in materials and mechanical characterization and laboratory report preparation
  – Encourage undergraduates to pursue graduate work
  – Actively recruit undergraduate minority students
3. Teaching Activities
4. Teaching & Education Accomplishments

References
• NOVA Web Site (NASA Opportunities for Visionary Academics). http://education.nasa.gov/nova
• National Science Foundation, Division of Research, Evaluation and Communication (2002). The 2002 User-Friendly Handbook for Project Evaluation.
• Henson, K. (2004). Grant writing in higher education. Boston: Pearson Publishers.
• Knowles, C. (2002). The first time grant writer's guide to success. Thousand Oaks, CA: Corwin Press.
• Burke, M. (2002). Simplified grant writing. Thousand Oaks, CA: Corwin Press.
• NSF (2004). A Guide for Proposal Writing (nsf04016), Criteria for Evaluation: Intellectual merit and broader impacts. www.nsf.gov/pubs/2004/nsf04016/nsf04016_4.htm
• NSF 02-057: The 2002 User-Friendly Handbook for Project Evaluation, a basic guide to quantitative and qualitative evaluation methods for educational projects
• NSF 97-153: User-Friendly Handbook for Mixed Method Evaluations, a monograph "initiated to provide more information on qualitative [evaluation] techniques and ... how they can be combined effectively with quantitative measures"
• Online Evaluation Resource Library (OERL) for NSF's Directorate for Education and Human Resources, a collection of evaluation plans, instruments, reports, glossaries of evaluation terminology, and best practices, with guidance for adapting and implementing evaluation resources
• Field-Tested Learning Assessment Guide (FLAG) for Science, Math, Engineering, and Technology Instructors, a collection of "broadly applicable, self-contained modular classroom assessment techniques and discipline-specific tools for ... instructors interested in new approaches to evaluating student learning, attitudes, and performance."