Database management final project

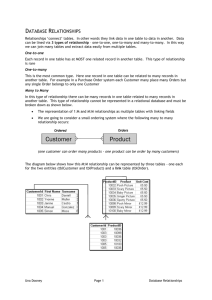

IE 330: Spring 2015 Team Project
Gaston Moliva, Hunter Molnar, Mikal Nelson, Nurbolat Ordabek
May 1st, 2015

Introduction
A database is a collection of data organized to model aspects of reality in a way that supports processes requiring information. Databases are built and used through database management systems (DBMS): computer software applications that interact with the user, other applications, and the database itself to capture and analyze data. A general-purpose DBMS is designed to allow the definition, creation, querying, update, and administration of databases. It provides functions for entering, storing, and retrieving large quantities of information, as well as ways to manage how that information is organized. Because of the close relationship between them, the term "database" is often used casually to refer to both a database and the DBMS used to manipulate it.

Problem Description
For this project, two prepared Microsoft Excel tables of data were provided, named ‘Transaction Data’ and ‘Demographic Data’. The transaction data contains the following fields: Customer ID, Item Type, Item Number, Vendor ID, Week, Day, Units Bought, Coupon Origin, Coupon Value (cents), and Coupon ID. The demographic data contains the following fields: Customer ID, Family Size, Income, Ethnicity, Dogs, Cats, TVs, Age, Children, Work Hours, Occupation, Education, and Subscription (magazines/newspapers). The original data must be split into tables with relationships among them, so that the database can be used to create queries and analyze relational trends among the data in these tables.
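The split described above can be sketched outside Access. The following is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for Access. The table and column names come from the report's queries. The `TransactionID` key, the reduced column set, and the sample rows are hypothetical. The sketch shows two things the project relies on:

- A foreign key rejects a transaction for a customer who does not exist, which is referential integrity.
- A join across the split tables works, using query 4 from the Results section with an `ORDER BY` added.

```python
import sqlite3

# Hypothetical schema: names follow the report's queries (Customer, Coupons,
# Transactions); the real Access tables hold more columns than shown here.
SCHEMA = """
CREATE TABLE Customer (
    CustomerID INTEGER PRIMARY KEY,
    FamilySize INTEGER,
    Income     INTEGER
);
CREATE TABLE Coupons (
    CouponID     TEXT PRIMARY KEY,
    CouponOrigin INTEGER,
    CouponValue  INTEGER  -- in cents
);
CREATE TABLE Transactions (
    TransactionID INTEGER PRIMARY KEY,  -- assumed surrogate key
    CustomerID    INTEGER NOT NULL REFERENCES Customer(CustomerID),
    CouponID      TEXT    NOT NULL REFERENCES Coupons(CouponID),
    ItemType      INTEGER,
    Week          INTEGER,
    UnitsBought   INTEGER
);
"""


def build_database():
    """Create the tables in memory with foreign-key enforcement switched on."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
    conn.executescript(SCHEMA)
    # Hypothetical rows; 'C1' is the code the report's queries use for "no coupon".
    conn.executemany("INSERT INTO Customer VALUES (?, ?, ?)", [(1, 4, 3), (2, 2, 8)])
    conn.execute("INSERT INTO Coupons VALUES ('C1', 0, 0)")
    return conn


def add_transaction(conn, customer_id, coupon_id, item_type, week, units):
    """Insert one transaction; return False if referential integrity rejects it."""
    try:
        conn.execute(
            "INSERT INTO Transactions (CustomerID, CouponID, ItemType, Week, UnitsBought) "
            "VALUES (?, ?, ?, ?, ?)",
            (customer_id, coupon_id, item_type, week, units),
        )
    except sqlite3.IntegrityError:
        return False
    return True


def units_by_income(conn):
    """Query 4 from the report (ORDER BY added): total units bought per income level."""
    return conn.execute(
        "SELECT Customer.Income, SUM(UnitsBought) FROM Transactions "
        "INNER JOIN Customer ON Transactions.CustomerID = Customer.CustomerID "
        "WHERE Customer.Income <> 0 GROUP BY Customer.Income ORDER BY Customer.Income"
    ).fetchall()


if __name__ == "__main__":
    conn = build_database()
    print(add_transaction(conn, 1, "C1", 17, 1, 2))    # True
    print(add_transaction(conn, 2, "C1", 12, 1, 5))    # True
    print(add_transaction(conn, 999, "C1", 17, 1, 1))  # False: no customer 999
    print(units_by_income(conn))                       # [(3, 2), (8, 5)]
```

SQLite checks foreign keys only after `PRAGMA foreign_keys = ON`. In Access, the equivalent is the Enforce Referential Integrity option on a relationship.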
Objectives
1) Create Microsoft Access tables from the columns of the data.
2) Define relationships between the tables.
3) Enforce referential integrity where appropriate.
4) Generate several queries using the relationships created.
5) Construct graphs that visually show the relational trends in the data.

Methodology of Analysis
In the second part of the project, the team analyzed the data using techniques learned in class, such as regression, forecasting, trend-based modeling, and measurement of forecast error. Each question required its own approach.

Question 1
For the first question, the two highest selling items for the first 25 weeks were identified from the Transactions data. Sales forecasts for these items were then computed using several methods. Finally, the prediction errors for weeks 15 through 25 were found, and the Mean Squared Error (MSE), Mean Absolute Deviation (MAD), and Tracking Signal (TS) were calculated.

The two highest selling items during the first 25 weeks, Items 17 and 3, were found using a pivot table in Excel.

Figure 1.1: Two highest selling items for the first 25 weeks.

Next, the Excel Analysis ToolPak was used to calculate the 4-period moving average and exponential smoothing sales forecasts for Item 17 and Item 3.

Figure 1.2: 4-period moving average and exponential smoothing for items 17 and 3.

For the 2-period weighted moving average for items 3 and 17, each week's forecast combines the sales of the two preceding weeks using the weights $w_{t-1} = 0.6$ and $w_{t-2} = 0.4$, that is, $F_t = 0.6A_{t-1} + 0.4A_{t-2}$, where $A_t$ is the actual sales and $F_t$ the forecast for week $t$.

Figure 1.3: 2-period weighted moving average with $w_{t-1} = 0.6$ and $w_{t-2} = 0.4$ for items 17 and 3, weeks 15 through 25.

Next, prediction errors were calculated for weeks 15 through 25. The prediction error for a week is the difference between the actual value and the forecast value. These errors were then summarized using the Mean Squared Error, Mean Absolute Deviation, and Tracking Signal. Figure 1.4 shows all the errors.
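The three forecasting methods and the error summaries used in Question 1 can be sketched in plain Python. This is a minimal sketch, not the team's Excel workbook. Several choices are assumptions:

- The 0.6/0.4 weights come from Figure 1.3.
- The smoothing constant `alpha = 0.3` and the seed $F_1 = A_1$ are assumed, since the report does not state them.
- The Analysis ToolPak may align its output columns differently.

The error measures follow the MSE, MAD, CFE, and TS definitions given with Figures 1.4 and 1.5.

```python
def moving_average(actual, n=4):
    """n-period moving average: F_t is the mean of the n actuals before period t."""
    return [None if t < n else sum(actual[t - n:t]) / n for t in range(len(actual))]


def weighted_moving_average(actual, weights=(0.6, 0.4)):
    """Weighted moving average; weights[0] applies to A_{t-1}, weights[1] to A_{t-2}."""
    k = len(weights)
    return [
        None if t < k else sum(w * actual[t - 1 - i] for i, w in enumerate(weights))
        for t in range(len(actual))
    ]


def exponential_smoothing(actual, alpha=0.3):
    """F_{t+1} = alpha*A_t + (1 - alpha)*F_t, seeded with F_1 = A_1 (assumed)."""
    forecasts = [float(actual[0])]
    for t in range(1, len(actual)):
        forecasts.append(alpha * actual[t - 1] + (1 - alpha) * forecasts[t - 1])
    return forecasts


def error_measures(actual, forecast):
    """MSE, MAD, CFE and tracking signal, with error e_t = A_t - F_t."""
    errors = [a - f for a, f in zip(actual, forecast)]
    n = len(errors)
    mad = sum(abs(e) for e in errors) / n
    cfe = sum(errors)
    return {
        "MSE": sum(e * e for e in errors) / n,
        "MAD": mad,
        "CFE": cfe,
        "TS": cfe / mad if mad else float("nan"),
    }


if __name__ == "__main__":
    sales = [10, 12, 14, 12, 16, 14, 15, 17]  # hypothetical weekly units, not project data
    start = 4  # first index at which every method has a forecast
    for name, fc in [
        ("4-period MA", moving_average(sales)),
        ("2-period WMA", weighted_moving_average(sales)),
        ("Exponential smoothing", exponential_smoothing(sales)),
    ]:
        print(name, error_measures(sales[start:], fc[start:]))
```

To match the report's setup, `sales` would hold weeks 1 through 25, and `start = 14` would restrict the comparison to weeks 15 through 25.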
Figure 1.4: Prediction errors for the 4-period and 2-period moving averages and exponential smoothing for items 17 and 3, weeks 15 through 25.

The Mean Squared Error is the sum of the squared errors (the differences between actual and forecast values) divided by the number of forecasts:

$$\mathrm{MSE} = \frac{1}{n}\sum_{t=1}^{n} e_t^{2}, \qquad e_t = A_t - F_t$$

where $A_t$ is the actual value, $F_t$ is the forecast for period $t$, and $n$ is the number of forecasts.

The Mean Absolute Deviation is the average of the absolute values of the errors:

$$\mathrm{MAD} = \frac{1}{n}\sum_{t=1}^{n} \lvert e_t \rvert$$

The Tracking Signal is the Cumulative Forecast Error (CFE), which is the sum of the errors, divided by the MAD:

$$\mathrm{CFE} = \sum_{t=1}^{n} e_t, \qquad \mathrm{TS} = \frac{\mathrm{CFE}}{\mathrm{MAD}}$$

These values were calculated in Excel using the formulas above (Figure 1.5).

Figure 1.5: MSE, MAD, CFE, and TS for items 17 and 3, weeks 15 through 25.

Question 2: Regression

1. For the second question, the team found the 10 top items by total volume, constructed their time series, and fit a regression to each.

Table 2.1: Regression table for top 10 items.

The R^2 values are small; the largest, for item 8, is 0.23. Because of this, the linear trends explain little of the variation in weekly sales, and we conclude that there is no particular trend in demand. Figure 2.1 shows the time series for all 10 items:
Item type 17: Snacks
Item type 12: Eggs. Egg sales show no overall trend, but they rise around week 88. This may be caused by holidays, when people bake cookies or cook other foods using eggs.
Item type 13: Ice cream. The time series shows that ice cream sales volume rises at times, possibly because of summer seasons or hot days.
Item type 8: Cook
Item type 9: Crackers
Item type 5: Cereal
Item type 18: Soap
Item type 11: Dogs
Item type 10: Detergents
Item type 19: Soft

2. In this part, the three highest selling items for the first 60 weeks were identified, and a regression analysis was performed. A pivot table was used to find these three items, which are items 17, 12, and 3 (Tables 3.1 and 3.2).

Table 3.1: 3 highest selling items.
Table 3.2: Regression for the 3 highest selling items.

3. Using the regression equations from part 2 (Table 3.2), total weekly sales were predicted. The predicted values were obtained by substituting each week number as the x value in the regression equation.

Table 3.3: Predicted values for each item, weeks 61 through 80.

Question 4: K-means clustering

Figure 1: Clustering of items 17 and 14 (k=3)
Figure 2: Clustering of items 17 and 14 (k=4)
Figure 3: Clustering of items 17 and 14 (k=5)

Based on the analysis, k = 3 appears to give the best K-means clustering. With k = 3, the data points lie closer together, which indicates more commonality between items than with k = 4 or k = 5. This is also evident from how close the centroid values are to each other and to each item in the pair independently.

Figure 4: Clustering of items 5 and 7 (k=4)
Figure 5: Clustering of items 12 and 1 (k=4)

Results

Database Schema Diagram

Queries
**Note: Most query results do not include all rows, in order to save space.

1) SELECT CouponID, CouponValue FROM Coupons WHERE CouponValue > 100;
Lists the coupon ID and value for all coupons worth more than $1.00 (coupon values are stored in cents).

2) SELECT CustomerID, UnitsBought FROM Transactions WHERE UnitsBought > 1;
Returns every transaction in which a customer bought more than one unit, along with the number of units bought in that transaction.

3) SELECT Transactions.CustomerID, SUM(CouponValue) FROM Transactions RIGHT JOIN Coupons ON Transactions.CouponID = Coupons.CouponID WHERE CouponOrigin > 0 GROUP BY Transactions.CustomerID;
Returns a list of customers who used coupons and the total value (in cents) that each saved over the sample period.

4) SELECT Customer.Income, SUM(UnitsBought) FROM Transactions INNER JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE Customer.Income <> 0 GROUP BY Customer.Income;
Lists each income level once, with the total number of items purchased by customers at that income level.
5) SELECT Week, SUM(UnitsBought) FROM Transactions GROUP BY Week;
Lists the total units bought in each week.

6) SELECT DISTINCT VendorID, ItemType FROM Transactions WHERE ItemType = 17;
Lists all vendors that sell snacks (item type 17), the highest selling item type, as seen in another query.

7) SELECT ItemType, COUNT(CouponID) FROM Transactions WHERE CouponID <> 'C1' GROUP BY ItemType;
Finds the number of transactions in which coupons were used, for each item type.

8) SELECT COUNT(CouponID), ItemType FROM Transactions WHERE CouponID = 'C1' GROUP BY ItemType;
Finds the number of transactions in which coupons were not used, for each item type. ***This query and the similar previous query were used to create Graph 4.

9) SELECT CustomerID FROM Customer;
Selects a list of customer IDs.

10) SELECT SUM(UnitsBought) FROM Transactions;
Returns the total number of items sold over the period, across all items.

11) SELECT ItemType, SUM(UnitsBought) FROM Transactions GROUP BY ItemType;
Lists the total units bought by item type over the entire period.

12) SELECT Customer.Cats, AVG(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE Customer.Cats > 0 AND Transactions.ItemType = 4 GROUP BY Customer.Cats;
Lists the average amount of cat food (item type 4) purchased, by the number of cats a customer has.

13) SELECT Customer.FamilySize, AVG(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE Transactions.Day = 7 GROUP BY Customer.FamilySize;
Lists the average number of items purchased on Saturdays (day 7), by customer family size.

14) SELECT ItemType, SUM(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE Income = 11 GROUP BY ItemType;
Lists the total quantity purchased by item type for customers making more than $75,000 per year (income level 11).
15) SELECT TVs, AVG(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE TVs <> 9 GROUP BY TVs;
Lists the average number of items bought, by the number of TVs a customer has.

16) SELECT ItemType, SUM(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE MaleAge = 1 GROUP BY ItemType;
Lists the number of each item type bought by males aged 18-29.

17) SELECT VendorID FROM Transactions WHERE ItemType IN (13, 16, 17, 20) GROUP BY VendorID;
Lists vendors that sell any of item types 13, 16, 17, or 20.

18) SELECT Day, SUM(UnitsBought) FROM Transactions GROUP BY Day;
Shows the total units bought on each day of the week over the sample period.

19) SELECT ItemType, COUNT(CouponOrigin) FROM Transactions RIGHT JOIN Coupons ON Transactions.CouponID = Coupons.CouponID WHERE CouponOrigin = 22 GROUP BY ItemType;
Lists the number of times a magazine coupon was used to purchase each item type.

20) SELECT Ethnicity, SUM(UnitsBought) FROM Transactions RIGHT JOIN Customer ON Transactions.CustomerID = Customer.CustomerID WHERE Ethnicity <> 0 GROUP BY Ethnicity;
Lists the units bought by ethnicity.

Graphs
1) The chart below shows the highest selling items over the entire sales period. Snacks, eggs, butter, cooking items, and cereal were the five highest selling items. Demand for these items is likely driven by the fact that they are common household items found in most homes.

2) Items bought appear to be correlated with income level from level 2 to around level 8, but this is not the case at the highest income levels. This is likely because fewer customers have very high or very low incomes. Since query 4 sums units across all customers at each level, levels with fewer customers show lower totals.

3) There does not seem to be any strong correlation between the week and the number of items sold.
While the best-fitting trend line is a polynomial (indicating peaks and valleys in the data over time), its R^2 value is still very low.

4) This graph shows which items had the highest proportion of transactions in which the customer used a coupon. Cereal (5), coffee (7), pills (15), detergents (10), and soap (18) showed the highest coupon usage. These are the items on which customers were most likely to seek out discounts.

5) When comparing the average units bought per transaction by family size, there appears to be a linear relationship between average units bought and family size. Regression analysis in Excel shows a significant linear relationship between family size and average units bought on Saturday at alpha = 0.05.

6) The graph shows that certain days, such as day 4 and especially days 5 and 6, have higher sales quantities than the other four days. This suggests that most people tend to do their shopping toward the end of the week.

Takeaways
Access is a very useful resource for storing databases and maintaining the relationships between their tables. It allows primary and foreign keys to be created so that records can be easily updated and manipulated. For interpreting and finding meaningful data within the Access database, SQL was extremely helpful. It can reveal trends between customer demographics and purchase quantities, the days on which more units are likely to be bought, and other relationships, such as that between the number of TVs owned and the average units bought per transaction. Once data has been queried, graphs and charts can be used to analyze trends further, using moving averages or regression in Excel. This report covers only a few of the ways SQL can aid in data analysis; there are countless other ways to query the database to reveal relationships and trends in the sales data.
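The takeaways point to regression in Excel as the follow-up step after querying. The report uses it for the top-item trends in Question 2 and for the family-size test behind Graph 5. A minimal ordinary-least-squares sketch follows. The data are hypothetical, and the function names `linear_regression` and `predict` are illustrative. Excel's Regression tool reports the same quantities (coefficients, R^2, and each coefficient's t statistic) among others.

```python
import math


def linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns slope, intercept, R^2, and the slope's t statistic
    (slope / standard error, with n - 2 degrees of freedom).
    Assumes both x and y vary, so the sums of squares are nonzero.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot
    std_err = math.sqrt(ss_res / (n - 2) / sxx)
    # A perfect fit has zero standard error; report an infinite t with the slope's sign.
    t_stat = slope / std_err if std_err > 0 else math.copysign(math.inf, slope)
    return slope, intercept, r_squared, t_stat


def predict(slope, intercept, xs):
    """Plug each x value (for example, a week number) into the fitted line."""
    return [intercept + slope * xi for xi in xs]


if __name__ == "__main__":
    # Hypothetical data: family size vs. average units bought on Saturday.
    family_size = [1, 2, 3, 4, 5]
    avg_units = [2, 4, 5, 4, 5]
    slope, intercept, r2, t = linear_regression(family_size, avg_units)
    print(f"y = {slope:.2f}x + {intercept:.2f}, R^2 = {r2:.2f}, t = {t:.2f}")
    # prints: y = 0.60x + 2.20, R^2 = 0.60, t = 2.12
    print(predict(slope, intercept, [6, 7]))
```

Significance at alpha = 0.05 is decided by comparing |t| with the two-sided critical value t_{0.025, n-2} from a t table. With n = 5, that value is 3.182, so this toy slope (t ≈ 2.12) would not be significant. The `predict` step corresponds to Question 2, part 3, where the week numbers 61 through 80 are substituted into each item's equation.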
Recommendations
Regarding the demographic data, it is important to note that the subjects of the database are mainly moderately sized families. Several attributes describe each family, and each attribute uses coded values to indicate which characteristic applies to a given customer. Some attributes use binary variables (Subscription), while others use scales that follow the NOIR (Nominal, Ordinal, Interval, Ratio) levels of measurement. Anyone looking to isolate a particular person or group in the data should keep these codings in mind, since they can help narrow the scope when targeting a specific demographic.

Appendix
Figure 1: Time Series table of top 10 items (by total volume)

| Item | Regression Equation | R^2 |
|---|---|---|
| 17 | -0.0381x + 82.848 | 0.0019 |
| 12 | 0.0093x + 71.203 | 0.00005 |
| 13 | -0.1284x + 71.372 | 0.0101 |
| 8 | -0.3352x + 70.059 | 0.23 |
| 9 | -0.192x + 60.99 | 0.1023 |
| 5 | -0.1098x + 47.056 | 0.0553 |
| 18 | -0.0386x + 42.807 | 0.0036 |
| 11 | -0.1284x + 43.08 | 0.0525 |
| 10 | -0.1948x + 44.675 | 0.1835 |
| 19 | -0.0473x + 26.93 | 0.0088 |

Figure 2: Regression equations for each top item (x = week)
Figure 3: Moving Average graph of most sold item
Figure 4: Smoothed data graph of most sold item
Figure 5: Moving Average graph of second most sold item
Figure 6: Smoothed data graph of second most sold item
Figure 7: Time series table of top 3 items sold (by total volume)

Project Roles
Hunter: Focused on creating the Access tables with correct primary keys and relationships between the tables. Wrote the queries and their descriptions, along with the query charts and their discussion.
Nurbolat: Wrote the methodology section and Questions 1, 2, and 3 for Part 2.
Gaston: Worked on the Part 2 moving average and exponential smoothing, along with the regression problems and their discussion in the report.
Mikal: Wrote the introduction, objectives, and problem statement, and Questions 4 and 5 for Part 2.