S6-04Michael-Oltmans - MIT Computer Science and Artificial

Understanding Naturally Conveyed Explanations of Device Behavior

Michael Oltmans and Randall Davis

MIT Artificial Intelligence Lab

Roadmap

• The problem

• Our approach

• Implementation

– System architecture

– How ASSISTANCE interprets descriptions

– Demonstrating understanding

• Evaluation and contributions

• Related and future work

Michael Oltmans

Sketches Models

• We have a sketch of a device

• A simulation model can be generated from the sketch

• Life is good… or is it?


The Problem

• No representation of intended behavior

• People talk and sketch but the computer doesn’t understand


Task

• Understand descriptions of device behavior:

– Given:

• A model of the device’s structure

• A natural explanation of the behavior

– Generate a causal model of behavior


Roadmap

• The problem

• Our approach

• Implementation

– System architecture

– How ASSISTANCE interprets descriptions

– Demonstrating understanding

• Evaluation and contributions

• Related and future work


Naturally Conveyed Explanations

• Natural input modalities

– Sketched devices

– Sketched gestures

– Speech

• Natural content of descriptions

– Causal

– Behavioral


Example: Describing the Behavior of a Spring

• Tool: Mechanical CAD

– Description: Spring length = 2.3cm, Rest length = 3.0cm

• Tool: Qualitative Reasoner

– Description: (< (length spring) (rest-length spring))

• Tool: ASSISTANCE

– Description: “The spring pushes the block”
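The step from the CAD-level numbers to the qualitative relation can be illustrated with a minimal Python sketch. The function name `qualitative_relation` and its `tolerance` parameter are hypothetical and are not part of ASSISTANCE or of any qualitative reasoner named in the slides; the sketch only shows how two numeric values reduce to an ordering relation.

```python
def qualitative_relation(name_a, value_a, name_b, value_b, tolerance=1e-9):
    """Return a Lisp-style ordering relation between two named quantities."""
    if abs(value_a - value_b) <= tolerance:
        op = "="
    elif value_a < value_b:
        op = "<"
    else:
        op = ">"
    return f"({op} ({name_a}) ({name_b}))"


# Values taken from the Mechanical CAD description above.
relation = qualitative_relation("length spring", 2.3, "rest-length spring", 3.0)
print(relation)
```

The behavioral statement “The spring pushes the block” sits one level above this relation: it says what the compressed spring does rather than what its parameters are.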

Sources of power

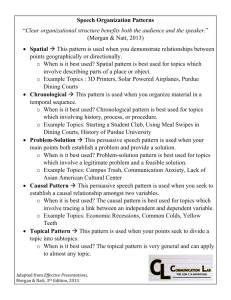

• Conventions in explanations aid interpretation

– Description order suggests causal order

• Constrained vocabulary

• Overlapping descriptions provide constraints on interpretations


Roadmap

• The problem

• Our approach

• Implementation

– System architecture

– How ASSISTANCE interprets descriptions

– Demonstrating understanding

• Evaluation and contributions

• Related and future work


• Sketch input: ASSIST

– Recognize sketch

• Speech input: ViaVoice™

– Recognize speech

– Parse

• ASSISTANCE

– Interpret explanation

• LTRE

– Truth maintenance

– Rule system


Causal Model and Simulation

Outputs

• Consistent causal model

– Tree

– Nodes are events

– Links indicate causal relationships

• Demonstration of understanding

– Natural language descriptions of causality

– Parameter constraints
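One way to picture the causal model described above is a small tree structure in Python. This is a sketch under assumptions: the `Event` class, its `causes` method, and the example event names are hypothetical and do not come from the ASSISTANCE implementation, which is built on LTRE.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """A node in the causal tree; each entry in `effects` is a causal link."""
    name: str
    effects: list = field(default_factory=list)

    def causes(self, effect):
        """Record that this event causes `effect` and return `effect`."""
        self.effects.append(effect)
        return effect


def causal_links(root):
    """Collect (cause, effect) name pairs by walking the tree from the root."""
    links = []
    stack = [root]
    while stack:
        event = stack.pop()
        for effect in event.effects:
            links.append((event.name, effect.name))
            stack.append(effect)
    return links


# Hypothetical example events, chained in causal order.
release = Event("spring releases")
block = release.causes(Event("block moves"))
ball = block.causes(Event("ball moves"))
links = causal_links(release)
print(links)
```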


The Representation of Utterances

• Input comes from ViaVoice™:

– Grammar constructed based on observed explanations

– Tagged with parts of speech and semantic categories


Representing the parse tree

“body 1 pushes body 2”

SENTENCE SIMPLE_SENTENCE

(… “body 1 pushes body 2” (S0) t1)

SUBJECT NOUN NOUN-PHRASE

(… “body 1” (S0 t1) t2)

VERB_PHRASE

(… “pushes body 2” (S0 t1) t3)

PROPELS VERB

(… “pushes” (S0 t1 t3) t4)

DIRECT_OBJECT NOUN NOUN-PHRASE

(… “body 2” (S0 t1 t3) t5)
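The statements above pair each parse-tree node with its tags, its text, the identifiers of its ancestors, and its own identifier. A minimal Python sketch of that flattening follows. The nested-dictionary input format and the `flatten` function are assumptions made for illustration, and the elided leading part of each statement is left out rather than guessed.

```python
from itertools import count


def flatten(node, path=("S0",), ids=None, facts=None):
    """Turn a nested parse tree into (tags, text, ancestor_path, node_id) tuples."""
    ids = ids if ids is not None else count(1)
    facts = facts if facts is not None else []
    node_id = f"t{next(ids)}"          # identifiers are assigned in pre-order
    facts.append((node["tags"], node["text"], path, node_id))
    for child in node.get("children", []):
        flatten(child, path + (node_id,), ids, facts)
    return facts


# Hypothetical nested form of the parse for "body 1 pushes body 2".
tree = {
    "tags": ("SENTENCE", "SIMPLE_SENTENCE"),
    "text": "body 1 pushes body 2",
    "children": [
        {"tags": ("SUBJECT", "NOUN", "NOUN-PHRASE"), "text": "body 1"},
        {
            "tags": ("VERB_PHRASE",),
            "text": "pushes body 2",
            "children": [
                {"tags": ("PROPELS", "VERB"), "text": "pushes"},
                {"tags": ("DIRECT_OBJECT", "NOUN", "NOUN-PHRASE"), "text": "body 2"},
            ],
        },
    ],
}

facts = flatten(tree)
for fact in facts:
    print(fact)
```

Keeping the ancestor path in every statement lets a rule match a node together with its position in the sentence, for example a verb tagged PROPELS inside a particular verb phrase.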


Steps in Interpreting Explanations:

1. Infer motions from annotations and build event representations

2. Find causal connections

3. Search for consistent causal structures

4. Pick best causal structure


Step 1: Inferring Motions from Annotations

• Inputs:

– Arrows

– Utterances

• “moves,” “pushes,” “the spring releases”

• Outputs:

– (moves body-1 moves-body-1-394)

– (describes arrow-2 moves-body-1-394)


Inferring Motion From Arrows

• Rule triggers:

– Arrow

– Arrow referent (i.e., a body)

– The body is mobile

• Rule body records that:

– The body moves

– The arrow describes the path


Inferring Motion From Arrows

    (rule
      ((:TRUE (arrow ?arrow) :VAR ?f1)
       (:TRUE (arrow-referent ?arrow ?body) :VAR ?f2)
       (:TRUE (can-move ?body) :VAR ?f3)
       (:TRUE (name ?name ?body)))
      (rlet ((?id (new-id "Moves" ?name)))
        (rassert!
          (:implies
            (:AND ?f1 ?f2 ?f3)
            (:AND (moves ?body ?id)
                  (describes ?arrow ?id)))
          :ARROW-IS-MOTION)))
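For readers unfamiliar with LTRE rule syntax, the same inference can be approximated in plain Python. This is only a sketch: facts are modeled as tuples, `infer_motions` is a hypothetical name, the generated identifiers use a simple counter, and the truth-maintenance bookkeeping performed by the `:implies` justification in the real rule is omitted.

```python
from itertools import count


def infer_motions(facts, ids=None):
    """For each arrow whose referent is a named, mobile body, record a motion event."""
    ids = ids if ids is not None else count(1)
    arrows = {f[1] for f in facts if f[0] == "arrow"}
    mobile = {f[1] for f in facts if f[0] == "can-move"}
    # (name ?name ?body): the name comes first, then the body it names.
    names = {f[2]: f[1] for f in facts if f[0] == "name"}

    inferred = []
    for fact in facts:
        if fact[0] != "arrow-referent":
            continue
        _, arrow, body = fact
        if arrow in arrows and body in mobile and body in names:
            event_id = f"moves-{names[body]}-{next(ids)}"
            inferred.append(("moves", body, event_id))       # the body moves
            inferred.append(("describes", arrow, event_id))  # the arrow describes the path
    return inferred


facts = [
    ("arrow", "arrow-2"),
    ("arrow-referent", "arrow-2", "body-1"),
    ("can-move", "body-1"),
    ("name", "body-1", "body-1"),
]
inferred = infer_motions(facts)
print(inferred)
```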


Multi-Modal References

• Match a sentence whose subject is “this” and a pointing gesture

• Assert the referent as the subject of the sentence

• Limitations:

– User must point at referent before the utterance

– Only one “this” is allowed per utterance
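The matching described above can be sketched in Python as follows. The slides state only that the pointing gesture must come before the utterance; choosing the most recent earlier gesture is an assumption of this sketch, as are the `resolve_this` name and the timestamped data layout.

```python
def resolve_this(utterance, gestures):
    """Return the referent for an utterance's subject.

    utterance: dict with "subject" and "time" keys (hypothetical layout).
    gestures: list of (time, referent) pointing gestures.
    """
    if utterance["subject"] != "this":
        return utterance["subject"]
    # Only gestures made before the utterance are eligible.
    earlier = [g for g in gestures if g[0] <= utterance["time"]]
    if not earlier:
        return None
    # Assumption: the most recent earlier gesture is the intended one.
    return max(earlier, key=lambda g: g[0])[1]


gestures = [(1.0, "body-1"), (4.0, "spring-1"), (9.0, "body-2")]
utterance = {"subject": "this", "verb": "pushes", "time": 5.0}
referent = resolve_this(utterance, gestures)
print(referent)
```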


Redundant Events

• Redundant explanations lead to multiple move statements for some events

• Merge them into a unique event statement

Event 1

(moves body-1 id-1)

(moves body-1 id-2)

“Body 1” falls
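A minimal Python sketch of the merge is shown below. It assumes that each body takes part in at most one motion event, so that two `moves` statements about the same body always describe the same event; that assumption, the `merge_moves` name, and the alias table are illustrative and are not taken from the ASSISTANCE implementation.

```python
def merge_moves(move_facts):
    """Merge multiple (moves body id) statements about one body into one event.

    Returns the merged statements and a table mapping every original
    identifier to the identifier that now represents its event.
    """
    canonical = {}  # body -> first event identifier seen for that body
    aliases = {}    # every event identifier -> canonical identifier
    for _, body, event_id in move_facts:
        canonical.setdefault(body, event_id)
        aliases[event_id] = canonical[body]
    merged = [("moves", body, event_id) for body, event_id in canonical.items()]
    return merged, aliases


move_facts = [
    ("moves", "body-1", "id-1"),  # e.g. from an arrow
    ("moves", "body-1", "id-2"),  # e.g. from the utterance "Body 1 falls"
    ("moves", "body-2", "id-3"),
]
merged, aliases = merge_moves(move_facts)
print(merged)
print(aliases)
```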


Step 2: Find Causal Connections

• Plausible causes

– Arrow indicating motion near another object

– Exogenous forces

• Definite causes

– “When … then …” utterances

– “Body 1 pushes body 2”


Step 3: Search for Consistent Causal Structures

• Some events have several possible causes

• Find consistent causal chains

• Search

– Forward-looking depth-first search

– Avoids repeating bad choices by recording bad combinations of assumptions
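The idea of recording bad combinations of assumptions can be sketched in Python. This is a plain depth-first search with a simple nogood list, written in the spirit of the search described above rather than as a reproduction of it. The sketch treats a structure as consistent when it contains no causal loop, which is an assumption; `candidates`, `find_cycle`, and `consistent_structures` are hypothetical names.

```python
def find_cycle(assignment, event, cause):
    """Return the choices forming a causal loop if `cause` is assigned to `event`."""
    chain = {(event, cause)}
    node = cause
    while node != event:
        if node not in assignment:   # reached an unassigned event or "exogenous"
            return None
        chain.add((node, assignment[node]))
        node = assignment[node]
    return frozenset(chain)


def consistent_structures(candidates):
    """Enumerate loop-free assignments of one cause to each event."""
    events = list(candidates)
    nogoods = []   # combinations of choices already known to be inconsistent
    results = []

    def search(index, assignment):
        if index == len(events):
            results.append(dict(assignment))
            return
        event = events[index]
        for cause in candidates[event]:
            chosen = set(assignment.items()) | {(event, cause)}
            if any(bad <= chosen for bad in nogoods):
                continue             # skip a combination that failed before
            cycle = find_cycle(assignment, event, cause)
            if cycle is not None:
                nogoods.append(cycle)
                continue
            assignment[event] = cause
            search(index + 1, assignment)
            del assignment[event]

    search(0, {})
    return results


# Hypothetical candidates: each event maps to its possible causes.
candidates = {"A": ["exogenous", "B"], "B": ["A", "exogenous"]}
structures = consistent_structures(candidates)
print(structures)
```

In this example the combination in which A causes B and B causes A is rejected, leaving three consistent structures.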


Step 4: Find the Best Interpretation

• Filter out interpretations that have unnecessary exogenous causes

• Pick the interpretation that most closely matches the explanation order

• While there are multiple valid interpretations

– Choose one event with multiple possible causes

– Assume the causal relation whose cause has the earliest description time
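The selection procedure above can be sketched in Python as follows. Two points are assumptions of the sketch rather than statements from the slides: "unnecessary exogenous causes" is approximated by keeping only the interpretations with the fewest exogenous causes, and description times are supplied as a hypothetical `described_at` table.

```python
def pick_best(interpretations, described_at):
    """Choose one interpretation (a dict mapping each event to its cause)."""
    def exogenous_count(interp):
        return sum(1 for cause in interp.values() if cause == "exogenous")

    # Filter: keep interpretations with the fewest exogenous causes (assumption).
    fewest = min(exogenous_count(i) for i in interpretations)
    remaining = [i for i in interpretations if exogenous_count(i) == fewest]

    while len(remaining) > 1:
        # Choose one event that still has several possible causes.
        event = next(
            (e for e in remaining[0] if len({i[e] for i in remaining}) > 1), None
        )
        if event is None:            # remaining interpretations are identical
            break
        # Assume the relation whose cause was described earliest.
        earliest = min(
            (i[event] for i in remaining),
            key=lambda cause: described_at.get(cause, float("inf")),
        )
        remaining = [i for i in remaining if i[event] == earliest]
    return remaining[0]


# Hypothetical interpretations and description times.
interpretations = [
    {"spring": "exogenous", "block": "spring", "ball": "block"},
    {"spring": "exogenous", "block": "spring", "ball": "spring"},
    {"spring": "exogenous", "block": "exogenous", "ball": "block"},
]
described_at = {"spring": 0.0, "block": 1.0, "ball": 2.0}
best = pick_best(interpretations, described_at)
print(best)
```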


Answer Queries and Adjust Parameters

• Queries:

– Designer: What is body 2 involved in?

– ASSISTANCE: The motion of body 3 causes the motion of body 2 which causes the motion of body 5

• Parameter Adjustment

– Set spring length
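The query answer above can be generated from the causal links with a short Python sketch. The `cause_of` table and the `describe_involvement` function are hypothetical; the sketch reports only the immediate cause and the immediate effects of the queried body, which is enough to reproduce the example answer.

```python
def describe_involvement(body, cause_of):
    """Describe the immediate cause and effects of a body's motion.

    cause_of maps each body to the body whose motion causes its motion.
    """
    cause = cause_of.get(body)
    effects = [effect for effect, c in cause_of.items() if c == body]
    effect_text = " and ".join(f"the motion of {e}" for e in effects)
    if cause and effects:
        return (f"The motion of {cause} causes the motion of {body} "
                f"which causes {effect_text}")
    if cause:
        return f"The motion of {cause} causes the motion of {body}"
    if effects:
        return f"The motion of {body} causes {effect_text}"
    return f"No causal relations involve {body}"


# Causal links matching the example query above.
cause_of = {"body 2": "body 3", "body 5": "body 2"}
answer = describe_involvement("body 2", cause_of)
print(answer)
```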


Roadmap

• The problem

• Our approach

• Implementation

– System architecture

– How ASSISTANCE interprets descriptions

– Demonstrating understanding

• Evaluation and contributions

• Related and future work


Limitations of the Implementation

• Scope of applicability restricted

– State transitions are one step deep

– Cannot handle conjunctions of causes

• Limited knowledge about common device patterns

– Latches, linkages, etc…

– Supports and prevents

• Natural language limitations

– Use a full-featured NL system like START

– Formally determine the grammar


Evaluation of the Approach

• Advantages

– Focus on behavior in accordance with survey results

– Move away from rigidity of WIMP interfaces

– Similar to person-to-person interaction

• Alternatives

– More dialog and feedback

– Natural vs. efficient

– Open claim that the domain is adequately constrained


Contributions

• Understanding naturally conveyed descriptions of behavior

• Generating representations of device behavior

– Match the designer’s explanation

– Generate simple explanations of causality

– Allow the calculation of simulation parameters


Related Work

• Understanding device sketches

– Alvarado 2000

• Multimodal interfaces

– Oviatt and Cohen

• Causality

– C. Rieger and M. Grinberg 1977


Future Work

• Direct manipulation

• Dialog

• Expand natural language capabilities

• Smart design tools
