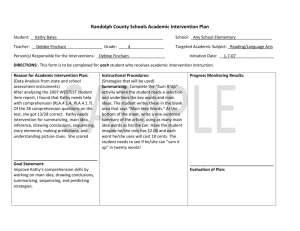

PGAS-Stanford

advertisement

Compilation Technology for

Computational Science

Kathy Yelick

Lawrence Berkeley National Laboratory

and UC Berkeley

Joint work with

The Titanium Group: S. Graham, P. Hilfinger, P. Colella, D. Bonachea,

K. Datta, E. Givelberg, A. Kamil, N. Mai, A. Solar, J. Su, T. Wen

The Berkeley UPC Group: C. Bell, D. Bonachea, W. Chen, J. Duell,

P. Hargrove, P. Husbands, C. Iancu, R. Nishtala, M. Welcome

PGAS Languages

1

Kathy Yelick

Parallelism Everywhere

• Single processor Moore’s Law effect is ending

• Power density limitations; device physics below 90nm

• Multicore is becoming the norm

• AMD, IBM, Intel, Sun all offering multicore

• Number of cores per chip likely to increase with density

• Fundamental software change

• Parallelism is exposed to software

• Performance is no longer solely a hardware problem

• What has the HPC community learned?

• Caveat: Scale and applications differ

Kathy Yelick, 3

Phillip Colella’s “Seven dwarfs”

High-end simulation in the physical sciences = 7 methods:

1.

2.

3.

4.

5.

6.

7.

• Add 4 for embedded;

Structured Grids (including

covers all 41 EEMBC

Adaptive Mesh Refinement)

benchmarks

Unstructured Grids

8. Search/Sort

Spectral Methods (FFTs, etc.)

9. Filter

Dense Linear Algebra

10. Comb. logic

Sparse Linear Algebra

11. Finite State

Machine

Particles

Monte Carlo Simulation

Note: Data sizes (8 bit to 32 bit) and types (integer, character)

differ, but algorithms the same

Games/Entertainment close to scientific computing

Slide source: Phillip Colella, 2004 and Dave Patterson, 2006

Kathy Yelick, 4

Parallel Programming Models

• Parallel software is still an unsolved problem !

• Most parallel programs are written using either:

• Message passing with a SPMD model

• for scientific applications; scales easily

• Shared memory with threads in OpenMP, Threads, or Java

• non-scientific applications; easier to program

• Partitioned Global Address Space (PGAS)

Languages off 3 features

• Productivity: easy to understand and use

• Performance: primary requirement in HPC

• Portability: must run everywhere

Kathy Yelick, 5

Partitioned Global Address Space

Global address space

• Global address space: any thread/process may

directly read/write data allocated by another

• Partitioned: data is designated as local (near) or

global (possibly far); programmer controls layout

x: 1

y:

x: 5

y:

l:

l:

l:

g:

g:

g:

p0

p1

x: 7

y: 0

By default:

• Object heaps

are shared

• Program

stacks are

private

pn

• 3 Current languages: UPC, CAF, and Titanium

• Emphasis in this talk on UPC & Titanium (based on Java)

Kathy Yelick, 6

PGAS Language Overview

• Many common concepts, although specifics differ

• Consistent with base language

• Both private and shared data

• int x[10];

and

shared int y[10];

• Support for distributed data structures

• Distributed arrays; local and global pointers/references

• One-sided shared-memory communication

• Simple assignment statements: x[i] = y[i];

or

t = *p;

• Bulk operations: memcpy in UPC, array ops in Titanium and CAF

• Synchronization

• Global barriers, locks, memory fences

• Collective Communication, IO libraries, etc.

Kathy Yelick, 7

Example: Titanium Arrays

• Ti Arrays created using Domains; indexed using Points:

double [3d] gridA = new double [[0,0,0]:[10,10,10]];

• Eliminates some loop bound errors using foreach

foreach (p in gridA.domain())

gridA[p] = gridA[p]*c + gridB[p];

• Rich domain calculus allow for slicing, subarray, transpose and

other operations without data copies

• Array copy operations automatically work on intersection

data[neighborPos].copy(mydata);

intersection (copied area)

“restrict”-ed (non-ghost)

cells

ghost cells

mydata

data[neighorPos]

Kathy Yelick, 8

Productivity: Line Count Comparison

Fortran

Lines of code

2000

C

1500

1000

MPI+F

500

CAF

0

UPC

NPB-CG

NPB-EP

NPB-FT

NPB-IS

NPB-MG

Titanium

• Comparison of NAS Parallel Benchmarks

• UPC version has modest programming effort relative to C

• Titanium even more compact, especially for MG, which uses multi-d

arrays

• Caveat: Titanium FT has user-defined Complex type and cross-language

support used to call FFTW for serial 1D FFTs

UPC results from Tarek El-Gazhawi et al; CAF from Chamberlain et al;

Titanium joint with Kaushik Datta & Dan Bonachea

Kathy Yelick, 9

Case Study 1: Block-Structured AMR

• Adaptive Mesh Refinement

(AMR) is challenging

• Irregular data accesses and

control from boundaries

• Mixed global/local view is useful

Titanium AMR benchmarks available

AMR Titanium work by Tong Wen and Philip Colella

Kathy Yelick, 10

AMR in Titanium

C++/Fortran/MPI AMR

Titanium AMR

• Chombo package from LBNL

• Bulk-synchronous comm:

• Entirely in Titanium

• Finer-grained communication

• Pack boundary data between procs

• No explicit pack/unpack code

• Automated in runtime system

Code Size in Lines

C++/Fortran/MPI

Titanium

AMR data Structures

35000

2000

AMR operations

6500

1200

Elliptic PDE solver

4200*

1500

10X reduction

in lines of

code!

* Somewhat more functionality in PDE part of Chombo code

Work by Tong Wen and Philip Colella; Communication optimizations joint with Jimmy Su Kathy Yelick, 11

Performance of Titanium AMR

Speedup

80

speedup

70

60

50

Comparable

performance

40

30

20

10

0

16

28

36

56

112

#procs

Ti

Chombo

• Serial: Titanium is within a few % of C++/F; sometimes faster!

• Parallel: Titanium scaling is comparable with generic optimizations

- additional optimizations (namely overlap) not yet implemented

Kathy Yelick, 12

Immersed Boundary Simulation in Titanium

• Modeling elastic structures in an

incompressible fluid.

• Blood flow in the heart, blood clotting,

inner ear, embryo growth, and many more

• Complicated parallelization

• Particle/Mesh method

• “Particles” connected into materials

Pow3/SP 256^3

Pow3/SP 512^3

P4/Myr 512^2x256

Time per timestep

time (secs)

100

80

60

40

Code Size in Lines

20

0

1

2

4

8

# procs

16

32

64

12

8

Joint work with Ed Givelberg, Armando Solar-Lezama

Fortran

Titanium

8000

4000

Kathy Yelick, 13

High Performance

Strategy for acceptance of a new language

• Within HPC: Make it run faster than anything else

Approaches to high performance

• Language support for performance:

• Allow programmers sufficient control over resources for tuning

• Non-blocking data transfers, cross-language calls, etc.

• Control over layout, load balancing, and synchronization

• Compiler optimizations reduce need for hand tuning

• Automate non-blocking memory operations, relaxed memory,…

• Productivity gains though parallel analysis and optimizations

• Runtime support exposes best possible performance

• Berkeley UPC and Titanium use GASNet communication layer

• Dynamic optimizations based on runtime information

Kathy Yelick, 14

One-Sided vs Two-Sided

one-sided put message

address

data payload

two-sided message

message id

host

CPU

network

interface

data payload

memory

• A one-sided put/get message can be handled directly by a network

interface with RDMA support

• Avoid interrupting the CPU or storing data from CPU (preposts)

• A two-sided messages needs to be matched with a receive to

identify memory address to put data

• Offloaded to Network Interface in networks like Quadrics

• Need to download match tables to interface (from host)

Kathy Yelick, 15

Performance Advantage of One-Sided

Communication: GASNet vs MPI

900

GASNet put (nonblock)"

MPI Flood

700

Bandwidth (MB/s)

(up is good)

800

600

500

Relative BW (GASNet/MPI)

400

2. 4

2. 2

300

2. 0

1. 8

200

1. 6

1. 4

1. 2

100

1. 0

10

1000 S i z e ( b y t e s )100000

10000000

0

10

100

1,000

10,000

100,000

1,000,000

10,000,000

Size (bytes)

• Opteron/InfiniBand (Jacquard at NERSC):

• GASNet’s vapi-conduit and OSU MPI 0.9.5 MVAPICH

• Half power point (N ½ ) differs by one order of magnitude

Joint work with Paul Hargrove and Dan Bonachea

Kathy Yelick, 16

GASNet: Portability and High-Performance

8-byte Roundtrip Latency

24.2

25

22.1

MPI ping-pong

Roundtrip Latency (usec)

(down is good)

20

GASNet put+sync

18.5

17.8

15

14.6

13.5

9.6

10

9.5

8.3

6.6

6.6

4.5

5

0

Elan3/Alpha

Elan4/IA64

Myrinet/x86

IB/G5

IB/Opteron

SP/Fed

GASNet better for latency across machines

Joint work with UPC Group; GASNet design by Dan Bonachea

Kathy Yelick, 17

GASNet: Portability and High-Performance

Flood Bandwidth for 2MB messages

Percent HW peak (BW in MB)

(up is good)

100%

90%

857

244

858

225

228

799

795

255

1504

1490

80%

610

70%

630

60%

50%

40%

30%

20%

10%

MPI

GASNet

0%

Elan3/Alpha

Elan4/IA64

Myrinet/x86

IB/G5

IB/Opteron

SP/Fed

GASNet at least as high (comparable) for large messages

Joint work with UPC Group; GASNet design by Dan Bonachea

Kathy Yelick, 18

GASNet: Portability and High-Performance

Flood Bandwidth for 4KB messages

100%

223

90%

231

Percent HW peak

(up is good)

80%

70%

MPI

763

714

702

GASNet

679

190

152

60%

420

50%

40%

750

547

252

30%

20%

10%

0%

Elan3/Alpha

Elan4/IA64

Myrinet/x86

IB/G5

IB/Opteron

SP/Fed

GASNet excels at mid-range sizes: important for overlap

Joint work with UPC Group; GASNet design by Dan Bonachea

Kathy Yelick, 19

Case Study 2: NAS FT

• Performance of Exchange (Alltoall) is critical

• 1D FFTs in each dimension, 3 phases

• Transpose after first 2 for locality

• Bisection bandwidth-limited

• Problem as #procs grows

• Three approaches:

• Exchange:

• wait for 2nd dim FFTs to finish, send 1

message per processor pair

• Slab:

• wait for chunk of rows destined for 1

proc, send when ready

• Pencil:

• send each row as it completes

Joint work with Chris Bell, Rajesh Nishtala, Dan Bonachea

Kathy Yelick, 20

Overlapping Communication

• Goal: make use of “all the wires all the time”

• Schedule communication to avoid network backup

• Trade-off: overhead vs. overlap

• Exchange has fewest messages, less message overhead

• Slabs and pencils have more overlap; pencils the most

• Example: Class D problem on 256 Processors

Exchange (all data at once)

512 Kbytes

Slabs (contiguous rows that go to 1

processor)

64 Kbytes

Pencils (single row)

16 Kbytes

Joint work with Chris Bell, Rajesh Nishtala, Dan Bonachea

Kathy Yelick, 21

NAS FT Variants Performance Summary

Best MFlop rates for all NAS FT Benchmark versions

1000

.5 Tflops

Best NAS Fortran/MPI

Best MPI

Best UPC

MFlops per Thread

800

600

400

200

0

56

et 6 4

nd 2

a

B

i

Myr in

Infin

3 256

Elan

3 512

Elan

4 256

Elan

4 512

Elan

• Slab is always best for MPI; small message cost too high

• Pencil is always best for UPC; more overlap

Joint work with Chris Bell, Rajesh Nishtala, Dan Bonachea

Kathy Yelick, 22

Case Study 3: LU Factorization

• Direct methods have complicated dependencies

• Especially with pivoting (unpredictable communication)

• Especially for sparse matrices (dependence graph with

holes)

• LU Factorization in UPC

• Use overlap ideas and multithreading to mask latency

• Multithreaded: UPC threads + user threads + threaded BLAS

• Panel factorization: Including pivoting

• Update to a block of U

• Trailing submatrix updates

• Status:

• Dense LU done: HPL-compliant

Joint work with

Parry Husbands

• Sparse

version

underway

Kathy Yelick, 23

UPC HPL Performance

X1 Linpack Performance

Opteron Cluster

Linpack

Performance

1400

Altix Linpack

Performance

160

MPI/HPL

1200

UPC

140

200

120

800

100

600

100

400

GFlop/s

150

GFlop/s

GFlop/s

1000

MPI/HPL

80

60

UPC

40

MPI/HPL

UPC

•MPI HPL numbers

from HPCC

database

•Large scaling:

• 2.2 TFlops on 512p,

• 4.4 TFlops on 1024p

(Thunder)

50

200

20

0

0

0

60

X1/64

X1/128

Opt/64

Alt/32

• Comparison to ScaLAPACK on an Altix, a 2 x 4 process grid

• ScaLAPACK (block size 64) 25.25 GFlop/s (tried several block sizes)

• UPC LU (block size 256) - 33.60 GFlop/s, (block size 64) - 26.47 GFlop/s

• n = 32000 on a 4x4 process grid

• ScaLAPACK - 43.34 GFlop/s (block size = 64)

• UPC - 70.26 Gflop/s (block size = 200)

Kathy Yelick, 24

Joint work with Parry Husbands

Automating Support for Optimizations

• The previous examples are hand-optimized

• Non-blocking put/get on distributed memory

• Relaxed memory consistency on shared memory

• What analyses are needed to optimize parallel

codes?

• Concurrency analysis: determine which blocks of code

could run in parallel

• Alias analysis: determine which variables could access the

same location

• Synchronization analysis: align matching barriers, locks…

• Locality analysis: when is a general (global pointer) used

only locally (can convert to cheaper local pointer)

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 25

Reordering in Parallel Programs

In parallel programs, a reordering can change the

semantics even if no local dependencies exist.

Initially, flag = data = 0

T1

data = 1

T1

T2

flag = 1

T2

f = flag

f = flag

d = data

d = data

flag = 1

data = 1

{f == 1, d == 0} is possible after reordering; not in original

Compiler, runtime, and hardware can produce such reorderings

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 26

Memory Models

• Sequential consistency: a reordering is

illegal if it can be observed by another

thread

• Relaxed consistency: reordering may be

observed, but local dependencies and

synchronization preserved (roughly)

• Titanium, Java, & UPC are not sequentially

consistent

• Perceived cost of enforcing it is too high

• For Titanium and UPC, network latency is the cost

• For Java shared memory fences and code

transformations are the cost

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 27

Conflicts

• Reordering of an access is observable

only if it conflicts with some other access:

• The accesses can be to the same memory location

• At least one access is a write

• The accesses can run concurrently

T1

T2

data = 1

f = flag

flag = 1

d = data

Conflicts

• Fences (compiler and hardware) need to

be inserted around accesses that conflict

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 29

Sequential Consistency in Titanium

• Minimize number of fences – allow same

optimizations as relaxed model

• Concurrency analysis identifies

concurrent accesses

• Relies on Titanium’s textual barriers and singlevalued expressions

• Alias analysis identifies accesses to the

same location

• Relies on SPMD nature of Titanium

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 30

Barrier Alignment

• Many parallel languages make no attempt

to ensure that barriers line up

• Example code that is legal but will deadlock:

if (Ti.thisProc() % 2 == 0)

Ti.barrier(); // even ID threads

else

; // odd ID threads

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 31

Structural Correctness

• Aiken and Gay introduced structural

correctness (POPL’98)

• Ensures that every thread executes the same

number of barriers

• Example of structurally correct code:

if (Ti.thisProc() % 2 == 0)

Ti.barrier(); // even ID threads

else

Ti.barrier(); // odd ID threads

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 32

Textual Barrier Alignment

• Titanium has textual barriers: all threads

must execute the same textual sequence

of barriers

• Stronger guarantee than structural correctness –

this example is illegal:

if (Ti.thisProc() % 2 == 0)

Ti.barrier(); // even ID threads

else

Ti.barrier(); // odd ID threads

• Single-valued expressions used to enforce

textual barriers

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 33

Single-Valued Expressions

• A single-valued expression has the same

value on all threads when evaluated

• Example: Ti.numProcs() > 1

• All threads guaranteed to take the same

branch of a conditional guarded by a

single-valued expression

• Only single-valued conditionals may have barriers

• Example of legal barrier use:

if (Ti.numProcs() > 1)

Ti.barrier(); // multiple threads

else

; // only one thread total

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 34

Concurrency Analysis

• Graph generated from program as follows:

• Node added for each code segment between

barriers and single-valued conditionals

• Edges added to represent control flow between

segments

1

// code segment 1

if ([single])

// code segment 2

2

3

else

// code segment 3

// code segment 4

4

barrier

Ti.barrier()

// code segment 5

Joint work with Amir Kamil and Jimmy Su

5

Kathy Yelick, 35

Concurrency Analysis (II)

• Two accesses can run concurrently if:

• They are in the same node, or

• One access’s node is reachable from the other

access’s node without hitting a barrier

• Algorithm: remove barrier edges, do DFS

1

Concurrent Segments

2

3

4

barrier

5

Joint work with Amir Kamil and Jimmy Su

1

2

3

4

5

1

X

X

X

X

2

X

X

X

3

X

X

X

4

X

X

X

X

5

X

Kathy Yelick, 36

Alias Analysis

• Allocation sites correspond to abstract

locations (a-locs)

• All explicit and implict program variables

have points-to sets

• A-locs are typed and have points-to sets

for each field of the corresponding type

• Arrays have a single points-to set for all indices

• Analysis is flow,context-insensitive

• Experimental call-site sensitive version – doesn’t

seem to help much

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 37

Thread-Aware Alias Analysis

• Two types of abstract locations: local and

remote

• Local locations reside in local thread’s memory

• Remote locations reside on another thread

• Exploits SPMD property

• Results are a summary over all threads

• Independent of the number of threads at runtime

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 38

Alias Analysis: Allocation

• Creates new local abstract location

• Result of allocation must reside in local memory

class Foo {

Object z;

}

static void bar() {

L1: Foo a = new Foo();

Foo b = broadcast a from 0;

Foo c = a;

L2: a.z = new Object();

}

Joint work with Amir Kamil and Jimmy Su

A-locs

1, 2

Points-to Sets

a

b

c

Kathy Yelick, 39

Alias Analysis: Assignment

• Copies source abstract locations into

points-to set of target

class Foo {

Object z;

}

static void bar() {

L1: Foo a = new Foo();

Foo b = broadcast a from 0;

Foo c = a;

L2: a.z = new Object();

}

Joint work with Amir Kamil and Jimmy Su

A-locs

1, 2

Points-to Sets

a

1

b

c

1

1.z

2

Kathy Yelick, 40

Alias Analysis: Broadcast

• Produces both local and remote versions

of source abstract location

• Remote a-loc points to remote analog of what

local a-loc points to

class Foo {

Object z;

}

static void bar() {

L1: Foo a = new Foo();

Foo b = broadcast a from 0;

Foo c = a;

L2: a.z = new Object();

}

Joint work with Amir Kamil and Jimmy Su

A-locs

1, 2, 1r

Points-to Sets

a

1

b

1, 1r

c

1

1.z

2

1r.z

2r

Kathy Yelick, 41

Aliasing Results

• Two variables A and B may

alias if:

$ xpointsTo(A).

xpointsTo(B)

• Two variables A and B may

alias across threads if:

$ xpointsTo(A).

R(x)pointsTo(B),

(where R(x) is the remote

counterpart of x)

Joint work with Amir Kamil and Jimmy Su

Points-to Sets

a

1

b

1, 1r

c

1

Alias [Across

Threads]:

a

b, c [b]

b

a, c [a, c]

c

a, b [b]

Kathy Yelick, 42

Benchmarks

Benchmark

Lines1 Description

pi

56

Monte Carlo integration

demv

122

Dense matrix-vector multiply

sample-sort

321

Parallel sort

lu-fact

420

Dense linear algebra

3d-fft

614

Fourier transform

gsrb

1090

Computational fluid dynamics kernel

gsrb*

1099

Slightly modified version of gsrb

spmv

1493

Sparse matrix-vector multiply

gas

8841

Hyperbolic solver for gas dynamics

1

Line counts do not include the reachable portion of the

1 37,000 line Titanium/Java 1.0 libraries

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 43

Analysis Levels

• We tested analyses of varying levels of

precision

Analysis

Description

naïve

All heap accesses

sharing

All shared accesses

concur

Concurrency analysis + type-based AA

concur/saa

Concurrency analysis + sequential AA

concur/taa

Concurrency analysis + thread-aware AA

concur/taa/cycle

Concurrency analysis + thread-aware AA

+ cycle detection

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 44

Static (Logical) Fences

Static Fence Removal

Percentage

120

100

80

60

40

20

co

nc

ur

/s

aa

co

nc

ur

co

/ta

nc

a

ur

/ta

a/

cy

cl

e

co

nc

ur

sh

ar

in

g

na

ïv

e

0

pi

demv

sample sort

lu fact

3d fft

gsrb

gsrb*

spmv

gas

GOOD

Percentages are for number of static fences reduced over naive

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 45

Dynamic (Executed) Fences

Dynamic Fence Removal

120

Percentage

100

80

60

40

20

co

nc

ur

/s

aa

co

nc

ur

co

/ta

nc

a

ur

/ta

a/

cy

cl

e

co

nc

ur

sh

ar

in

g

na

ïv

e

0

pi

demv

sample sort

lu fact

3d fft

gsrb

gsrb*

spmv

gas

GOOD

Percentages are for number of dynamic fences reduced over naive

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 46

Dynamic Fences: gsrb

• gsrb relies on dynamic locality checks

• slight modification to remove checks (gsrb*)

greatly increases precision of analysis

gsrb Dynamic Fence Removal

120

Percentage

100

80

60

40

GOOD

20

Joint work with Amir Kamil and Jimmy Su

cl

e

/c

y

ur

/t

aa

nc

ur

/t

nc

co

gsrb*

co

ur

/s

nc

co

gsrb

aa

aa

ur

nc

co

ar

in

g

sh

na

ïv

e

0

Kathy Yelick, 47

Two Example Optimizations

• Consider two optimizations for GAS

languages

1.Overlap bulk memory copies

2.Communication aggregation for irregular array

accesses (i.e. a[b[i]])

• Both optimizations reorder accesses, so

sequential consistency can inhibit them

• Both are addressing network

performance, so potential payoff is high

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 48

Array Copies in Titanium

• Array copy operations are commonly used

dst.copy(src);

• Content in the domain intersection of the two

arrays is copied from dst to src

src

dst

• Communication (possibly with packing)

required if arrays reside on different threads

• Processor blocks until the operation is

complete.

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 49

Non-Blocking Array Copy Optimization

• Automatically convert blocking array copies

into non-blocking array copies

• Push sync as far down the instruction stream as

possible to allow overlap with computation

• Interprocedural: syncs can be moved

across method boundaries

• Optimization reorders memory accesses –

may be illegal under sequential consistency

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 50

Communication Aggregation on Irregular

Array Accesses (Inspector/Executor)

• A loop containing indirect array accesses is

split into phases

• Inspector examines loop and computes reference

targets

• Required remote data gathered in a bulk operation

• Executor uses data to perform actual computation

for (...) {

a[i] = remote[b[i]];

// other accesses

}

schd = inspect(remote, b);

tmp = get(remote, schd);

for (...) {

a[i] = tmp[i];

// other accesses

}

• Can be illegal under sequential consistency

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 51

Relaxed + SC with 3 Analyses

• We tested performance using analyses of

varying levels of precision

Name

Description

relaxed

Uses Titanium’s relaxed memory model

naïve

Uses sequential consistency, puts

fences around every heap access

sharing

Uses sequential consistency, puts

fences around every shared heap

access

Uses sequential consistency, uses our

most aggressive analysis

concur/taa/cycle

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 52

Dense Matrix Vector Multiply

Dense Matrix Vector Multiply

speedup

2

1.5

1

0.5

0

1

relaxed

2

naive

4

8

# of processors

sharing

16

concur/taa/cycle

• Non-blocking array copy optimization applied

• Strongest analysis is necessary: other SC

implementations suffer relative to relaxed

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 53

Sparse Matrix Vector Multiply

Sparse Matrix Vector Multiply

100

speedup

80

60

40

20

0

1

relaxed

2

4

8

# of processors

naive

sharing

16

concur/taa/cycle

• Inspector/executor optimization applied

• Strongest analysis is again necessary and sufficient

Joint work with Amir Kamil and Jimmy Su

Kathy Yelick, 54

Portability of Titanium and UPC

• Titanium and the Berkeley UPC translator use a similar model

• Source-to-source translator (generate ISO C)

• Runtime layer implements global pointers, etc

• Common communication layer (GASNet)

Also used by gcc/upc

• Both run on most PCs, SMPs, clusters & supercomputers

• Support Operating Systems:

• Linux, FreeBSD, Tru64, AIX, IRIX, HPUX, Solaris, Cygwin, MacOSX, Unicos, SuperUX

• UPC translator somewhat less portable: we provide a http-based compile server

• Supported CPUs:

• x86, Itanium, Alpha, Sparc, PowerPC, PA-RISC, Opteron

• GASNet communication:

• Myrinet GM, Quadrics Elan, Mellanox Infiniband VAPI, IBM LAPI, Cray X1, SGI Altix,

Cray/SGI SHMEM, and (for portability) MPI and UDP

• Specific supercomputer platforms:

• HP AlphaServer, Cray X1, IBM SP, NEC SX-6, Cluster X (Big Mac), SGI Altix 3000

• Underway: Cray XT3, BG/L (both run over MPI)

• Can be mixed with MPI, C/C++, Fortran

Joint work with Titanium and UPC groups

Kathy Yelick, 55

Portability of PGAS Languages

Other compilers also exist for PGAS Languages

• UPC

•

•

•

•

Gcc/UPC by Intrepid: runs on GASNet

HP UPC for AlphaServers, clusters, …

MTU UPC uses HP compiler on MPI (source to source)

Cray UPC

• Co-Array Fortran:

• Cray CAF Compiler: X1, X1E

• Rice CAF Compiler (on ARMCI or GASNet), John Mellor-Crummey

•

•

•

•

Source to source

Processors: Pentium, Itanium2, Alpha, MIPS

Networks: Myrinet, Quadrics, Altix, Origin, Ethernet

OS: Linux32 RedHat, IRIS, Tru64

NB: source-to-source requires cooperation by backend compilers

Kathy Yelick, 56

Summary

• PGAS languages offer productivity advantage

• Order of magnitude in line counts for grid-based code in Titanium

• Push decisions about packing/not into runtime for portability

(advantage of language with translator vs. library approach)

• Significant work in compiler can make programming easier

• PGAS languages offer performance advantages

• Good match to RDMA support in networks

• Smaller messages may be faster:

• make better use of network: postpone bisection bandwidth pain

• can also prevent cache thrashing for packing

• Have locality advantages that may help even SMPs

• Source-to-source translation

• The way to ubiquity

• Complement highly tuned machine-specific compilers

Kathy Yelick, 57

End of Slides

PGAS Languages

58

Kathy Yelick

Productizing BUPC

• Recent Berkeley UPC release

• Support full 1.2 language spec

• Supports collectives (tuning ongoing); memory model compliance

• Supports UPC I/O (naïve reference implementation)

• Large effort in quality assurance and robustness

• Test suite: 600+ tests run nightly on 20+ platform configs

• Tests correct compilation & execution of UPC and GASNet

• >30,000 UPC compilations and >20,000 UPC test runs per night

• Online reporting of results & hookup with bug database

• Test suite infrastructure extended to support any UPC compiler

• now running nightly with GCC/UPC + UPCR

• also support HP-UPC, Cray UPC, …

• Online bug reporting database

• Over >1100 reports since Jan 03

• > 90% fixed (excl. enhancement requests)

Kathy Yelick, 59

Benchmarking

• Next few UPC and MPI application benchmarks

use the following systems

•

•

•

•

Myrinet: Myrinet 2000 PCI64B, P4-Xeon 2.2GHz

InfiniBand: IB Mellanox Cougar 4X HCA, Opteron 2.2GHz

Elan3: Quadrics QsNet1, Alpha 1GHz

Elan4: Quadrics QsNet2, Itanium2 1.4GHz

Kathy Yelick, 61

PGAS Languages: Key to High Performance

One way to gain acceptance of a new language

• Make it run faster than anything else

Keys to high performance

• Parallelism:

• Scaling the number of processors

• Maximize single node performance

• Generate friendly code or use tuned libraries (BLAS, FFTW, etc.)

• Avoid (unnecessary) communication cost

• Latency, bandwidth, overhead

• Avoid unnecessary delays due to dependencies

• Load balance

• Pipeline algorithmic dependencies

Kathy Yelick, 62

Hardware Latency

• Network latency is not expected to improve significantly

• Overlapping communication automatically (Chen)

• Overlapping manually in the UPC applications (Husbands, Welcome,

Bell, Nishtala)

• Language support for overlap (Bonachea)

Kathy Yelick, 63

Effective Latency

Communication wait time from other factors

• Algorithmic dependencies

• Use finer-grained parallelism, pipeline tasks (Husbands)

• Communication bandwidth bottleneck

• Message time is: Latency + 1/Bandwidth * Size

• Too much aggregation hurts: wait for bandwidth term

• De-aggregation optimization: automatic (Iancu);

• Bisection bandwidth bottlenecks

• Spread communication throughout the computation (Bell)

Kathy Yelick, 64

Fine-grained UPC vs. Bulk-Synch MPI

• How to waste money on supercomputers

• Pack all communication into single message (spend

memory bandwidth)

• Save all communication until the last one is ready (add

effective latency)

• Send all at once (spend bisection bandwidth)

• Or, to use what you have efficiently:

• Avoid long wait times: send early and often

• Use “all the wires, all the time”

• This requires having low overhead!

Kathy Yelick, 65

What You Won’t Hear Much About

• Compiler/runtime/gasnet bug fixes, performance

tuning, testing, …

• >13,000 e-mail messages regarding cvs checkins

• Nightly regression testing

• 25 platforms, 3 compilers (head, opt-branch, gcc-upc),

• Bug reporting

• 1177 bug reports, 1027 fixed

• Release scheduled for later this summer

• Beta is available

• Process significantly streamlined

Kathy Yelick, 66

Take-Home Messages

• Titanium offers tremendous gains in productivity

• High level domain-specific array abstractions

• Titanium is being used for real applications

• Not just toy problems

• Titanium and UPC are both highly portable

• Run on essentially any machine

• Rigorously tested and supported

• PGAS Languages are Faster than two-sided MPI

• Better match to most HPC networks

• Berkeley UPC and Titanium benchmarks

• Designed from scratch with one-side PGAS model

• Focus on 2 scalability challenges: AMR and Sparse LU

Kathy Yelick, 67

Titanium Background

• Based on Java, a cleaner C++

• Classes, automatic memory management, etc.

• Compiled to C and then machine code, no JVM

• Same parallelism model at UPC and CAF

• SPMD parallelism

• Dynamic Java threads are not supported

• Optimizing compiler

• Analyzes global synchronization

• Optimizes pointers, communication, memory

Kathy Yelick, 68

Do these Features Yield Productivity?

MG Line Count Comparison

CG Line Count Comparison

Computation

Communication

Declarations

203

700

600

500

400

552

300

200

92

100

0

60

58

Fortran w/ MPI

Titanium

36

Productive Lines of Code

160

Computation

Communication

Declarations

800

140

120

100

99

80

44

60

28

40

27

20

35

14

0

Fortran w/ MPI

Titanium

Language

Language

CG Class D Speedup - G5/IB

Speedup (Over Best 64

Proc Case)

MG Class D Speedup - Opteron/IB

Speedup (Over Best 32

Proc Case)

Productive Lines of Code

900

140

Linear Speedup

Fortran w/MPI

Titanium

120

100

80

60

40

20

0

0

50

100

150

Processors

Joint work with Kaushik Datta, Dan Bonachea

400

350

300

250

200

150

100

50

0

Linear Speedup

Fortran w/MPI

Titanium

0

50

100

150

200

250

300

Processors

Kathy Yelick, 69

GASNet/X1 Performance

single word get

14

13

Shm em

GASNet

12

MPI

14

13

Get Lat ency (m icroseconds)

Put per m essage gap (m icroseconds)

single word put

11

10

9

8

7

6

5

4

3

2

1

12

Shm em

GASNet

11

10

9

8

7

6

5

4

3

2

1

0

0

1

2

RMW

4

Sc a la r

8

16

32

64

128

Ve c t or

256

512 1024 2048

b c op y()

1

RMW

Message Size (byt es)

2

4

Sc a la r

8

16

32

64

Ve c t or

128

256 512 1024 2048

b c op y()

Message Size (byt es)

• GASNet/X1 improves small message performance over shmem and MPI

• Leverages global pointers on X1

• Highlights advantage of languages vs. library approach

Joint work with Christian Bell, Wei Chen and Dan Bonachea

Kathy Yelick, 70

High Level Optimizations in Titanium

• Irregular communication can be expensive

• “Best” strategy differs by data size/distribution and machine

parameters

• E.g., packing, sending bounding boxes, fine-grained are

• Use of runtime

optimizations

Itanium/Myrinet Speedup Comparison

• Inspector-executor

Average and maximum speedup

of the Titanium version relative to

the Aztec version on 1 to 16

processors

Joint work with Jimmy Su

1.5

speedup

• Performance on

Sparse MatVec Mult

• Results: best strategy

differs within the

machine on a single

matrix (~ 50% better)

Speedup relative to MPI code (Aztec library)

1.6

1.4

1.3

1.2

1.1

1

1

2

3

4

5

6

7

8

9 10 11 12 13 14 15 16 17 18 19 20 21 22

matrix number

average speedup

maximum speedup

Kathy Yelick, 71

Source to Source Strategy

• Source-to-source translation strategy

• Tremendous portability advantage

• Still can perform significant optimizations

• Use of “restrict” pointers in C

• Understand Multi-D array

indexing (Intel/Itanium issue)

• Support for pragmas like

IVDEP

• Robust vectorizators (X1,

SSE, NEC,…)

Performance Ratio C / UPC

• Relies on high quality back-end compilers and some

coaxing in code generation

Livermore Loops on Cray X1 (single Node)

48x

4

3.5

3

2.5

2

1.5

1

0.5

0

1 2 3 4 5

6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

• On machines with integrated shared memory

hardware need access to shared memory operations

Joint work with Jimmy Su

Kathy Yelick, 72