IntrospectionZP - Center for Cognitive Science

Notes: Lecture 1

Lecture 1: Problems of Consciousness: Introspection

04/11/2020 4:52 PM

The views of consciousness held by philosophers and by cognitive scientists are, with a few exceptions, quite different.[1]

I will begin with the cognitive scientists’ views, which over the last century have tended to focus on methodological issues, in particular on how we can study consciousness even when we don’t know in any detail what a theory in this area would need to explain. We scarcely have any idea what consciousness is, except, possibly, when it occurs in the mind of the investigator – and even that assumes that it, whatever it is, is something that has a location, at least in the sense entailed by saying it is “in the mind”. In speaking about conscious states we generally begin with first-person descriptions – of what it’s like to be conscious of

we want quickly to go beyond describing what it’s like to be in a particular sort of state. The fervent hope is that the description we start off with will capture a natural kind that will eventually connect with other parts of cognitive science and neuroscience. So even when we give first-person accounts we expect there to be core properties that generalize across instances and across individuals and that will eventually ground out in some way in causal properties.

In the first part of the course we will begin with a view from psychology. For at least the first half of the 20th century, psychology was focused on describing states of consciousness as such. The method associated with this movement was called introspection, and many proponents of this method argued that, with a proper understanding of the term and with proper training, the method could be an objective way of observing consciousness: its structure and its content (what phenomenal properties it embodies, such as color, shape, movement, and so on). A great deal of time was spent on making introspection reliable (repeatable) and valid (related to the object of the experience in a consistent way). There was also concern with what Titchener called the Stimulus Error, in which observers reported properties of what their conscious states were about (what things in the actual or possible world[2] they depicted) rather than the experience itself. We will have a lot more to say about that when we discuss mental images. We will read parts of papers that argue for the Introspective Method, as well as papers that argue that introspection was doomed as a scientific method because the object of study was essentially private and could not be publicly shared. These arguments were conducted with considerable passion and, as far as I can see, did not affect the science of the time. What did affect the science was the obvious historical fact that theories based on introspection seemed to go nowhere, and eventually this was seen (correctly) as a lack of progress in a science based on introspection (if it was a science).

In this course we will encounter many instances of arguments against introspection as a way of accessing mental states. Yet we need to know what mental states the mind goes through in order to understand mental processes. We will present a number of arguments against the assumption that introspection gives us direct access to mental states (and therefore mental processes) that are not available by any other means. While the examples illustrate how accepting introspection as a uniquely privileged source of evidence about mental states carries the risk of leading us astray, they fail to do what early stages of science typically do: provide ways to narrow, circumscribe, or idealize the relevant sources of evidence and the relevant phenomena. In the case of consciousness as a natural domain, one might have to confine the study to empirically derived domains within which some empirical regularities could be found and to which the grand arguments did not apply (e.g., domains that did not subsume what some have called the “hard problem” of consciousness).[3] But this is Fodor’s territory.

1 Parts of these notes are taken from Pylyshyn (2003, Chapters 6-8).

2 The very idea of a logically possible but nonexistent (imaginary) world that we can experience consciously is problematic. For example, could we imagine invisible physical objects, or objects that cannot be examined from different perspectives (e.g., that have no backs or sides)? Or worlds in which there are 4-dimensional objects (as assumed by some Inca mythologies or by special relativity)? Or even higher-dimensional spacetime manifolds, such as the 26-dimensional spacetime hypothesized by one version of String Theory?

Suppose we accept that there are conscious phenomena that form the core of the patterns of cognition that can be explained in naturalistic terms. There still remains the problem of specifying what these conscious observations (introspections) tell us about mental processes. What they don’t tell us is what the most obvious interpretation of our first-person observations would suggest. For example, they do not tell us the form and content of our thoughts. Even less do they tell us why we believe one thing rather than another. Take the question: what are you thinking? People used to say, when they saw you staring into the middle distance, “a penny for your thoughts.” A penny doesn’t buy much these days, but you should still take the dare, because neither they nor you can say with any confidence whether your report of your thoughts of the moment is veridical. The evidence suggests that what you are doing in answering the question is pretty much the same as what a bystander would do in answering the same question about your thoughts, given that he knows the same relevant details as you do. In other words, what both you and the third person would do is compute (or reason, or infer) what must have been going through your mind. There is even evidence (discussed by

are doing when we tell someone (e.g., a psychologist studying thinking) our thoughts is that we are being scientists ourselves: we infer what thoughts might have led to the experiences and statements we make when asked about our thoughts. Indeed in the Problem Behavior Graph that Allen Newell and Herb Simon

states involved in solving a problem. It does this by highlighting observed transitions that lack an appropriate operation or sequence of operations to change the ‘knowledge state’ associated with that transition. Some of the assumptions mentioned above will become clearer and more directly relevant later when we speak of reports of particular sorts of conscious contents, namely those we call mental imagery.

Do we need to appeal to conscious contents in cognitive science?

The shadow of Behaviorism no longer haunts cognitive science. When the human observer that we are studying (often called the “subject” but more recently referred to by the more acceptable term “observer”) utters something, we do not merely record it as a response; we consider what the subject was trying to tell us (e.g., “I didn’t hear what you said” or “Did you have garlic for lunch?”). As with many seemingly radical changes, this rediscovery of mentalistic or folk psychology initially produced a science as radically confused as the one it displaced. In this brave new world we typically take a subject’s statement as a literal report of what he or she was thinking (even putting aside self-promotion or acquiescence). In studying such cognitive processes as those involved in problem solving we often appeal to subjective states of consciousness, and we are equally often led astray when we do so, since reports of the content of conscious mental states are inevitably reconstructions that depend on what the observers believe might have been involved in the mental processes, or on tacit theories we hold about the role of mental states. And we will see that such tacit theories, often based on folk psychology, are more often than not likely to be false. Yet there are contexts where we appeal to properties of conscious states, because there is no other way to obtain evidence of mental processes and because the states that we advert to are our very own mental states; and why would we mislead ourselves? (We will see some reasons why later in this chapter.) True, in some of these cases the consciously accessible states might provide initial hypotheses that must subsequently be validated. On the other hand, so might the day’s news, the weather, recalling a missed appointment, or any other fact you may have in mind.

3 An excellent summary of some of the arguments can be found in Fodor’s notes for this class, as well as in Wikipedia (http://tinyurl.com/nmpm64e).

That’s what makes prediction difficult – once you get past the early-vision stage the mind is ever so holistic!

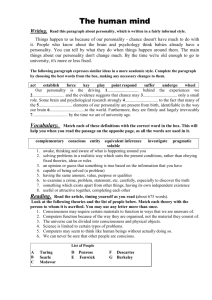

Let’s look at some examples of the sorts of theories one might come up with if one takes the content of our conscious experience as primary (and privileged). The description of your experience might be a complex one, and it might be an accurate characterization of your conscious experience but not of the way you solve the problem. Consider, for example, this description of how we solve “visual analogy” problems like those shown in Figure 1. The art critic and writer Rudolf Arnheim (1969) criticized contemporary views (based on information-processing ideas) of how visual analogy problems might be solved. Arnheim rejects the idea (which cognitivist theories typically assume) that the mind has to engage in what might be viewed as a great deal of ratiocination, involving symbol manipulation, with its pattern matching, comparison, and inference. Not so, claims Arnheim. When people solve such problems they go through a quite different sequence of mental states; for example, ones that involve a “rich and dazzling experience” of “instability,” of “fleeting, elusive resemblances,” and of being “suddenly struck by” certain perceptual relationships. Such symbol-processing theories, according to Hubert Dreyfus (1979), fail to take seriously such distinctions as that between “focal” and “fringe” consciousness, and they ignore a “sense of oddness” about the information-processing story. We are guilty as charged. But so is every theory ever developed in physics or chemistry – it’s hard to imagine a theory with more “sense of oddness” than quantum mechanics or string theory. This is what happens when one takes the theoretical task in cognitive science to be, among other things, the rational reconstruction of processes that are sufficient to carry out the task. Explaining how it feels is certainly not a primary goal – and in fact many of us think it’s a distraction that more often takes us away from the goal of explaining how we think than illuminates it.

Consider how one goes about applying the evidence of introspection to the task discussed by Rudolf Arnheim: solving visual analogy problems of the sort commonly found in IQ tests. In the examples below, (1a) is an easy case, while (1b) is somewhat harder because it is ambiguous. To see what may be involved, try to imagine a simple introspective narrative of what went on in your mind as you examined the figures while trying to solve problem (1a), completing “A is to B as 1 is to what figure?” Then repeat this exercise for panel (1b), which continues the analogy of panel (1a) but presents two different possible solutions: do you prefer the solution labeled 1 or the one labeled 2? How is this reflected in your introspective report? Try solving the puzzles and see whether doing so produces the flourish of pyrotechnics described by Arnheim – not because we doubt his description, but to see whether it enhances your understanding of how you solve problems of this kind.

Figure 1. Illustration of some geometrical analogy problems: (1a) ‘easy’ geometrical analogies; (1b) more difficult analogies.
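The cognitivist account that Arnheim objects to treats such a problem as symbol manipulation: describe how A turns into B, apply that description to the third figure, and pattern-match the result against the candidate answers. The following is a hypothetical, much-simplified sketch of that idea in Python, not any particular published model. The figure encoding (a dictionary from the role of each part, such as `outer` or `inner`, to its shape), the function names, and the scoring rule are all assumptions made for illustration, and the example figures are invented rather than taken from Figure 1.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: each figure maps the role of a part ("outer", "inner", ...)
# to the shape occupying that role. Real models would use much richer descriptions.
Figure = Dict[str, str]
Rule = Tuple[List[str], Figure]


def transformation(a: Figure, b: Figure) -> Rule:
    """Describe A -> B symbolically: which roles B deletes, and what B puts in the others."""
    deleted = sorted(set(a) - set(b))
    # Roles that B adds or whose shape B changes; unchanged roles are left out.
    set_by_b = {role: shape for role, shape in b.items() if a.get(role) != shape}
    return deleted, set_by_b


def apply_rule(rule: Rule, c: Figure) -> Figure:
    """Apply the A -> B description to C, predicting the missing figure."""
    deleted, set_by_b = rule
    predicted = {role: shape for role, shape in c.items() if role not in deleted}
    predicted.update(set_by_b)
    return predicted


def similarity(x: Figure, y: Figure) -> int:
    """Pattern matching: +1 per agreeing role, -1 per role found in only one figure."""
    agree = sum(1 for role, shape in x.items() if y.get(role) == shape)
    unmatched = len(set(x) ^ set(y))
    return agree - unmatched


def solve(a: Figure, b: Figure, c: Figure, options: List[Figure]) -> int:
    """Return the index of the candidate answer closest to the predicted figure."""
    predicted = apply_rule(transformation(a, b), c)
    scores = [similarity(predicted, option) for option in options]
    return scores.index(max(scores))  # ties go to the first option


# Invented example: "remove the inner dot", applied to a triangle with a dot inside.
A = {"outer": "square", "inner": "dot"}
B = {"outer": "square"}
C = {"outer": "triangle", "inner": "dot"}
OPTIONS = [{"outer": "triangle", "inner": "dot"}, {"outer": "triangle"}, {"outer": "square"}]
print(solve(A, B, C, OPTIONS))  # 1
```

Nothing in this sketch resembles the “dazzling” experience Arnheim describes, which is exactly the point at issue: a theory of this kind aims at a procedure sufficient to carry out the task, not at a description of how carrying it out feels. Ambiguous problems like (1b) show up here as ties, which this sketch simply breaks in favor of the first option.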


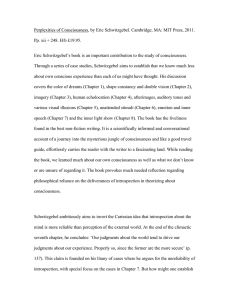

To illustrate the inability of introspection to provide an understanding of a task carried out in perception, including in the short-term memory (STM) that receives the sensory information, consider what goes on in a rapid search task where the target item may or may not be in the STM store. Sternberg (1966) showed that rapid search through recently memorized sets of items obeys surprising regularities. Take the case where a person is asked to memorize a list of some 3 to 7 letters or digits or other individual objects such as words. In the experiment the observer is asked to indicate as quickly as possible whether a “probe” item from the same class as the memorized list (e.g., a letter or digit) was in the memorized list. People can do this very rapidly and accurately. But asked how they did it, they are either at a loss or they confabulate some superficially plausible story. Standard psychophysical methods, however, can disentangle the plausible intuitive or introspection-based options. The standard way of doing this is by using a reaction-time measure. There is independent evidence that the time taken to carry out a task is generally a good indicator of how many steps or subtasks had to be taken to complete it. So, using reaction-time methodology, Sternberg and others showed that the reaction time to respond “yes” or “no” to the question “Was a token of the probe in the memory set?” provided a good measure of how the search was conducted, even though the search itself may not have been observed consciously. The critical variables were (1) the number of items in the memory set, (2) whether a token of the probe was identical to one of the items in the memory set, (3) the ordinal position of the matching memory item in the presentation of the memory set[4], and (4) the reaction time to respond in each case.

Considering only the set size, the present-vs-absent status of the probe item, and reaction time, we can expect the results to fit one of three patterns, shown in Figures 2a, 2b and 2c, which plot reaction time as the number of items in the memory set increases from 2 to 5. The question we can now ask is: which of these is most consonant with one’s introspection in doing the task?

Figure 2. Several of the possible functions relating reaction time to the number of search items and to the presence or absence of a matching probe item in the memory set. The case that matches one’s experience in doing the task is a serial self-terminating search, shown in either (a) or (c), whereas the actual observed result is the least plausible case, (b), which indicates a serial exhaustive search (based on Sternberg, 1966).

4 Additional data, not mentioned here, show a serial order effect, suggesting that the search is a serial one that scans through the memory set as initially presented.


Subjects debriefed after the experiment rarely opt for (b) or (c): the impression is that the task is extremely fast and not obviously sensitive to the number of potential matches (the number of items in the search set). A pattern in which only the absence of a matching item in the memory set slows the response, partially fulfilled by case (a), is selected by subjects, particularly ones who correctly anticipate that absence, even if it makes the heart grow fonder, is a condition that generally requires a second look (an effect observed in many experiments).

But figure (b) is more puzzling. It shows that the time taken increases linearly as the cardinality of the search set increases, at the same rate whether or not the probe matches a memory item. Figure (a) shows merely that it takes longer to report the non-occurrence of a match, which is a widely observed phenomenon. In Figure (c), the difference in slope between the case where the probe item matches some memory item and the case where it matches none is also understandable, insofar as observers are in a position to announce a match as soon as they encounter it in their search. As long as the matching item is equally likely to occupy any position in the memory set, an observer who stops at the first match will on average need about half as many comparisons on match trials ((n + 1)/2 for a set of n items) as on non-match trials, since a non-match cannot be decided until all n items have been examined. This would lead us to expect the slope of the match cases to be approximately half of that for the non-match cases, i.e., we would expect figure (c) to apply. What is observed, however, is a pattern resembling figure (b), which has been referred to as “exhaustive search”. In this type of search the sequential matching process does not stop even after a matching item is found. This option is never experienced, yet the data support it.
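The slope argument can be checked with a little arithmetic. The sketch below, in Python, computes the mean reaction time predicted by a serial self-terminating search and by a serial exhaustive search. It assumes that reaction time is a fixed base time plus a constant time per comparison, and that on match trials the matching item is equally likely to be at any position; the constants `BASE_MS` and `COMPARE_MS` are hypothetical illustration values, not Sternberg’s estimates.

```python
# Predicted mean reaction times (RT) for two serial models of memory scanning.
# Assumptions (hypothetical, for illustration only): RT = base time + comparisons * time
# per comparison, and on "present" trials the match is equally likely at any position.
BASE_MS = 400.0          # hypothetical time for encoding the probe and producing the response
COMPARE_MS = 40.0        # hypothetical time for one probe-to-item comparison
SET_SIZES = range(2, 6)  # memory-set sizes 2 to 5, as in the figure


def mean_comparisons(set_size: int, probe_present: bool, exhaustive: bool) -> float:
    """Average number of comparisons made before responding."""
    if exhaustive or not probe_present:
        # Exhaustive search always scans every item, and so does any "absent" response.
        return float(set_size)
    # Self-terminating search stops at the match: mean position (1 + ... + n) / n = (n + 1) / 2.
    return (set_size + 1) / 2


def predicted_rt(set_size: int, probe_present: bool, exhaustive: bool) -> float:
    return BASE_MS + COMPARE_MS * mean_comparisons(set_size, probe_present, exhaustive)


def slope(probe_present: bool, exhaustive: bool) -> float:
    """Least-squares slope of predicted RT against set size, in ms per item."""
    xs = list(SET_SIZES)
    ys = [predicted_rt(n, probe_present, exhaustive) for n in xs]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


for name, exhaustive in (("self-terminating", False), ("exhaustive", True)):
    print(f"{name}: present slope {slope(True, exhaustive):.0f} ms/item, "
          f"absent slope {slope(False, exhaustive):.0f} ms/item")
```

Under these assumptions the self-terminating model gives a match-trial slope of half the non-match slope (20 vs. 40 ms per item here, the pattern of figure (c)), while the exhaustive model gives equal slopes (the pattern of figure (b)). The ratio does not depend on the particular values chosen for the two hypothetical constants, since the match-trial slope is always `COMPARE_MS / 2`.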

In general we do not experience phenomena that take place in a time span of less than about 300 ms. Indeed, Newell (1990) has argued that a duration somewhere around 300 ms marks a boundary between levels of architecture, corresponding to the biological on the one hand and the symbolic on the other (but not yet what he calls the knowledge level).

References cited

Dunlap, K. (1912). The case against introspection. Psychological Review, 19, 404-413.

Gopnik, A. (1993). How we know our minds: The illusion of first-person knowledge of intentionality. Behavioral and Brain Sciences, 16(1), 1-14.

James, W. (1982 / 1890). Text Book of Psychology (Chapter 7: The Methods and Snares of Psychology). London: MacMillan.

Nagel, T. (1974). What is it like to be a bat? Philosophical Review, 83, 435-450.

Newell, A. (1990). Unified Theories of Cognition. Cambridge, MA: Harvard University Press.

Newell, A., & Simon, H. A. (1972). Human Problem Solving. Englewood Cliffs, NJ: Prentice-Hall.

Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84(3), 231-259.

Pylyshyn, Z. W. (2003). Seeing and visualizing: It's not what you think. Cambridge, MA: MIT Press/Bradford Books.

Sternberg, S. (1966). High-speed scanning in human memory. Science, 153, 652-654.

Titchener, E. B. (1912). The schema of introspection. American Journal of Psychology, 23, 485-508.

Washburn, M. F. (1922). Introspection as an objective method. Psychological Review, 29, 89-112.


Consciousness Notes: Some topics to be covered


Introspection and its problems

The early goal of pure introspection (Wundt, Titchener,…)

Examples of hybrid descriptions (DES)[5] (Russell T. Hurlburt vs. Eric Schwitzgebel)

Recordings of Melanie and what they tell us about the nature of mind and mental processes.

The experience of thinking or of our thoughts?

Do we think in our natural language? (The Linguistic Code?)

Do we think in Mental Images? (The Visual-Image Code)

Do we think in terms of other sensory experiences? (Or other sensory codes & schemas).

Are the sensory objects epiphenomenal? (and what does that mean?)

Is the experience of hearing speech or seeing an image from memory an illusion?

The experience of memory (recall)

Is the sequence of recall governed by the architecture of the cognitive/memory system, or by how the world is?

The most common new theory (announced frequently) fits the template “Consciousness is having an X in your brain”, where at various times X has been “working memory,” synchronous activation (or 30 Hz standing wave patterns) in the brain, or an anticipatory motor plan for acting upon the object of one’s conscious experience. None of these proposals addresses the “explanatory gap”: none bridges to the qualitative feel (or the qualia), which is what is needed to answer the question of why being stimulated this way, or in this location, or with this pattern, results in the experience of (say) red or of ice-cold, as opposed to green, or to loud or high-pitched, or sweet, or tired, and so on.

References cited

5 Descriptive Experience Sampling (see Hurlburt &)
