Designing Online Advertising Markets

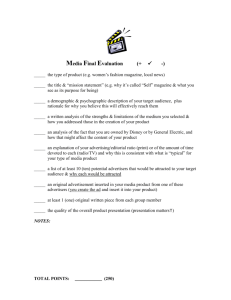


Data-Driven Market Design
Susan Athey, The Economics of Technology Professor, Stanford GSB
Consulting researcher, Microsoft Research
Based in part on joint work with: Denis Nekipelov (UVa, MSR), Guido Imbens (Stanford GSB), Stefan Wager (Stanford), Dean Eckles (MIT)

Introduction

Marketplaces
• Uber, Lyft, Airbnb, TaskRabbit, Rover, Zeel, Urbansitter
• Two groups of customers
  – Cross-side network effects
Auction-based platforms
• Online advertising
• Used cars
• eBay
Market design matters

Market Design Examples: Short Run v. Long Run
eBay examples
• Eliminate listing fees
• Make pictures free
• Change search algorithm
  – Emphasize price
  – Force sellers into more uniform categories
• Shipping costs
Search advertising examples
• Change broad match criteria for looser matching
• Change pricing
See, e.g.:
• “Asymmetric Information, Adverse Selection and Online Disclosure: The Case of eBay Motors,” Lewis, 2014
• “Consumer Price Search and Platform Design in Internet Commerce,” Dinerstein, Einav, Levin, Sundaresan, 2014
• “A Structural Model of Sponsored Search Auctions,” Athey & Nekipelov, 2012
STANFORD GRADUATE SCHOOL OF BUSINESS

Influencing Market Design
Theoretical framework is key
• Makes arguments coherent and precise
• Identifies equilibrium/long-term effects
• Incorporates advertiser/seller and consumer choices
Complement with data

Data, Experimentation, and Counterfactuals
Data can inform which kinds of designs will work better or worse in a range of environments similar to the existing one.
Advocacy on design issues is much more effective when theory and data are combined.
Experimentation is crucial but also has limitations
• Short-term experiments can’t show long-term outcomes or feedback effects
• Can help gain insight by analyzing heterogeneity of effects
One part of empirical economics imposes structure on empirical analysis in order to learn model “primitives” and perform “counterfactuals”
• Learn bidder values, predict equilibrium responses
• Map between short-run user experience and long-term willingness to click

A/B Testing
[Diagram: 100% of users are split 50%/50% into Control (existing system) and Treatment (modified system); user interactions are instrumented, analyzed, and compared; the control and treatment average outcomes feed results analysis and future design decisions.]

Short-Term A/B Tests Have Limitations: Search Advertising Case Study
Standard industry practice
– Run A/B tests on users or page views to test new algorithms
– Make the ship decision based on revenue and user impact
Multiple errors
– Unit of experimentation is not the unit of analysis
  • Each advertiser only sees the change on 1% of traffic
– Interaction effects ignored, with bids and budgets held fixed
  • When the change is released to the market, other advertisers might hit budget constraints, causing a given advertiser to hit their own
– Reactions of bids and budgets ignored
– Long-term participation ignored

Cheaper fixes (motivated by theory)
- Modify evaluation criteria (short-term metrics)
  - Instead of measuring actual short-term performance, focus on the part that is correlated with long-term outcomes
  - E.g., only count good clicks
  - Theory: advertisers won’t pay for bad clicks in equilibrium
- Do a small number of long-term studies to relate short-term metrics to long-term outcomes
  - An up-front study only captures responsiveness to the types of changes observed in the study; it can’t answer all questions
- Add in constraints that “protect” advertisers
  - E.g., constrain price increases at the advertiser level
  - But advertisers are very heterogeneous in preferences and responsiveness

Expensive solution I: Long-term advertiser-based A/B test
- Apply treatments to randomly selected advertisers (stratify)
- Watch for a long time
- Problems:
  • Ignores advertiser interactions!!
  • Unclear whether conclusions will generalize once other advertisers respond
  • Expensive and disruptive to advertisers under experimentation
  • Takes a long time (advertisers respond slowly), interferes with ongoing innovation

eCommerce Markets as Bipartite Graphs
[Figure: sellers linked to Query A, Query B, and Query C]

Testing Interactions: Athey, Eckles, Imbens (2015)
• How to do inference properly for various hypotheses?
  – Method for exact p-values for a class of non-sharp null hypotheses
    • Exact: no large-sample approximations
    • Sharp: outcome for each unit known under the null for different treatment assignments
  – Test hypotheses of the form
    • “Treating units j related to unit i in way Z has no effect on i”
    • “Only the fraction of neighbors treated matters, not their identity or network position”
  – Novel insight
    • Can turn a non-sharp null into a sharp one by defining an artificial experiment and analyzing only a subset of the units as “focal” units
• Best ways to analyze existing experiments
  – Most powerful test statistics
    • Develop model-based test statistics that perform better than commonly used heuristics
    • Insight: write down a structural model and use a score-based test
  – How to use the exogenous variation in the data most effectively

Testing Hypotheses About Friends of Friends
[Figure: a network in which A and B are focal units; C is auxiliary to focal unit A, D and F are auxiliary to focal units A and B, and G is auxiliary to focal unit B; the remaining nodes (E, H, I) serve as buffers for focal unit A, focal unit B, or both.]

Focal-unit outcomes (*holding fixed own treatment and friends’ treatment):

| Focal unit | $Y_i(0\,\text{FOF}^*)$ | $Y_i(>1\,\text{FOF}^*)$ |
|---|---|---|
| A | 3 | 3 |
| B | 2 | 2 |

Alternative assignments of FOF $W_i$ to the auxiliary units (observed FOF treated v. control: A has C, F v. D; B has F v. D, G):

| Aux unit | Observed $W_i$ | Alt. 1 | Alt. 2 | Alt. 3 | Alt. 4 | Alt. 5 | Alt. 6 |
|---|---|---|---|---|---|---|---|
| C | 1 | 1 | 0 | 1 | 0 | 0 | 1 |
| D | 0 | 1 | 0 | 0 | 1 | 1 | 0 |
| F | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| G | 0 | 0 | 1 | 1 | 0 | 1 | 0 |
| Probability | | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 |
| Test statistic | 1/3 | 8/3 − 7/3 = 1/3 | 7/3 − 8/3 = −1/3 | 5/2 − 5/2 = 0 | 5/2 − 5/2 = 0 | 7/3 − 8/3 = −1/3 | 8/3 − 7/3 = 1/3 |

Test statistic: edge-level contrast for FOF links between focal and auxiliary units, i.e., the average focal-unit outcome over links to treated auxiliary units minus the average over links to control auxiliary units. Observed value: 1/3.

Community Detection
Modularity-based algorithm identifies clusters (Newman 2006):
$$\text{Modularity} = \sum_{i=1}^{C} \left( e_{ii} - a_i^2 \right)$$
• $e_{ii}$: fraction of links with both nodes inside community $i$
• $a_i$: fraction of link endpoints attached to nodes in community $i$

Clustered Randomization and Community-Level Analysis
[Figure: communities labeled Treated or Control]
Ugander et al. (2013) proposal:
• Define “treatment” as having a high share of friends treated
• Use propensity score weighting to adjust for non-random assignment to this condition
This is an open research area.

Search Advertising Case Study, Expensive solution II: Long-term market-based A/B test
- Cluster advertisers
  - Bipartite graph weighting links between advertisers and queries
  - Weights are clicks or revenue
  - Issue: spillovers are large
- Experiment at the cluster level
- Watch for a long time
- Problems:
  • Expensive and disruptive to advertisers under experimentation
  • Takes a long time (advertisers respond slowly), interferes with ongoing innovation

Further Approaches
1. Gain insight from A/B tests by studying heterogeneity of effects
   – Understand how an innovation affects different advertisers and queries
   – Relate to other work showing which types of advertisers are most responsive
2. Build a structural econometric model and make counterfactual predictions
   – Requires investment in model development and validation
   – Relies on assumptions; may not be accepted even if validated
   – Can also explore a variety of scenarios interacted with advertiser characteristics

Experiments and Data-Mining
• Concerns about ex-post “data-mining”
  – In medicine, scholars are required to pre-specify an analysis plan
  – In economics, there are calls for similar protocols
• But how is a researcher to predict all forms of heterogeneity in an environment with many covariates?
• Goal of Athey & Imbens 2015, Wager & Athey 2015:
  – Allow the researcher to specify a set of potential covariates
  – Data-driven search for heterogeneity in causal effects with valid standard errors
  – See also Langford et al., Multi-World Testing

Segments versus Personalized Predictions
Segments with similar treatment effects
• Data-driven search for subgroups
• Pro: interpretability, communicability, policy, inference with moderate sample sizes
• Con: not the best predictor for any individual; segments are unstable
Fully personalized predictions
• Non-parametric estimator of the treatment effect as a function of covariates
• Pro: best possible prediction for each individual, and inference is possible as per Wager & Athey 2015
• Con: confidence intervals have poor coverage with too many covariates; hard to interpret and communicate
Our contributions:
• Optimize existing ML methods for each of these goals
• Deal with the issue that there is no observed ground truth
• Methods provide valid confidence intervals without sparsity

Regression Trees for Prediction

Using Trees to Estimate Causal Effects
Model:
$$Y_i = Y_i(W_i) = \begin{cases} Y_i(1) & \text{if } W_i = 1, \\ Y_i(0) & \text{otherwise.} \end{cases}$$
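To make the switching equation concrete, here is a minimal Python sketch (NumPy assumed) that simulates a randomized experiment. The data-generating process, including the effect function tau(x) = -0.1 - 0.1x, the noise level, and the sample size, is hypothetical and chosen only for illustration; it is not taken from the search experiments discussed here. Each simulated unit reveals only one of its two potential outcomes, so τ_i cannot be computed from the observed data, yet the difference in means still recovers the average effect because W_i is randomly assigned.

```python
import numpy as np


def simulate_experiment(n: int, seed: int = 0):
    """Simulate a randomized experiment under the model Y_i = Y_i(W_i).

    Hypothetical data-generating process (illustration only): one covariate
    X_i ~ Uniform(0, 1), Y_i(0) = 0.25 + 0.05 * X_i + noise, tau(x) = -0.1 - 0.1 * x.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=n)
    y0 = 0.25 + 0.05 * x + rng.normal(0.0, 0.05, size=n)  # potential outcome Y_i(0)
    tau = -0.1 - 0.1 * x                                    # unit-level effect tau_i
    y1 = y0 + tau                                           # potential outcome Y_i(1)
    w = rng.integers(0, 2, size=n)                          # random assignment of W_i
    y_obs = np.where(w == 1, y1, y0)                        # only one outcome is revealed
    return x, w, y_obs, tau


def difference_in_means(w: np.ndarray, y_obs: np.ndarray) -> float:
    """Estimate the average treatment effect using observed data only."""
    return float(y_obs[w == 1].mean() - y_obs[w == 0].mean())


x, w, y_obs, tau = simulate_experiment(100_000)
print(f"true average effect (uses unobservable tau): {tau.mean():.4f}")
print(f"difference in means (uses observed data):    {difference_in_means(w, y_obs):.4f}")
```

The two printed numbers should agree closely under random assignment. What the observed data cannot reveal is τ_i for any single unit, which is the gap that tree-based estimates of τ(x) are meant to address.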
• Random assignment of $W_i$
• Want to predict individual $i$’s treatment effect $\tau_i = Y_i(1) - Y_i(0)$
  – This is not observed for any individual
• Let $\mu(w, x) = \mathbb{E}[Y_i \mid W_i = w, X_i = x]$ and $\tau(x) = \mu(1, x) - \mu(0, x)$
• Approach 1: Analyze the two groups separately
  – Estimate $\mu(1, x)$ using the dataset where $W_i = 1$
  – Estimate $\mu(0, x)$ using the dataset where $W_i = 0$
  – Do within-group cross-validation to choose tuning parameters
  – Construct the prediction using $\mu(1, x) - \mu(0, x)$
• Approach 2: Estimate $\mu(w, x)$ using a single tree with both $w$ and $x$ as covariates
  – Choose tuning parameters as usual
  – Construct the prediction using $\mu(1, x) - \mu(0, x)$
  – The estimate is zero for any $x$ where the tree does not split on $w$
Observations
Problem 1: Estimation and cross-validation are not optimized for the goal
  – Lots of segments in Approach 1: it combines two distinct ways to partition the data
What is a candidate estimator for $\tau_i(x_i)$?
Problem 2: How do you evaluate goodness of fit for tree splitting and cross-validation?
  – $\tau_i = Y_i(1) - Y_i(0)$ is not observed, so there is no ground truth for any unit

Causal Trees
• Directly estimate treatment effects within each leaf
• Modify the splitting criterion to focus on treatment effect heterogeneity
• The cross-validation criterion must estimate the ground truth
  – Build on statistical theory
• Honest trees: one sample to split, another to estimate effects; this yields valid confidence intervals
  – Anticipating honesty changes the algorithms
• Result: for any ratio of covariates to observations, and without sparsity assumptions, we can discover meaningful heterogeneity and produce valid confidence intervals

Swapping Positions of Algo Links: Basic Results
[Chart: click-through rate of the top link when moved to a lower position (US, all non-navigational queries). Position 1 (natural): 25.4%; Position 3: 11.8%; Position 5: 7.5%; Position 10: 3.8%]
Moving a link from position 1 to position 3 decreases CTR by 13.6 percentage points.

Search Experiment Tree: Effect of Demoting Top Link
• Some data excluded with probability p(x): proportions do not match the population
• Highly navigational queries excluded
• Use the test sample for segment means and standard errors to avoid bias
• Variance of estimated treatment effects in the training sample is 2.5 times that in the test sample

| Test: treatment effect | Test: standard error | Test: proportion | Training: treatment effect | Training: standard error | Training: proportion |
|---|---|---|---|---|---|
| -0.124 | 0.004 | 0.202 | -0.124 | 0.004 | 0.202 |
| -0.134 | 0.010 | 0.025 | -0.135 | 0.010 | 0.024 |
| -0.010 | 0.004 | 0.013 | -0.007 | 0.004 | 0.013 |
| -0.215 | 0.013 | 0.021 | -0.247 | 0.013 | 0.022 |
| -0.145 | 0.003 | 0.305 | -0.148 | 0.003 | 0.304 |
| -0.111 | 0.006 | 0.063 | -0.110 | 0.006 | 0.064 |
| -0.230 | 0.028 | 0.004 | -0.268 | 0.028 | 0.004 |
| -0.058 | 0.010 | 0.017 | -0.032 | 0.010 | 0.017 |
| -0.087 | 0.031 | 0.003 | -0.056 | 0.029 | 0.003 |
| -0.151 | 0.005 | 0.119 | -0.169 | 0.005 | 0.119 |
| -0.174 | 0.024 | 0.005 | -0.168 | 0.024 | 0.005 |
| 0.026 | 0.127 | 0.000 | 0.286 | 0.124 | 0.000 |
| -0.030 | 0.026 | 0.002 | -0.009 | 0.025 | 0.002 |
| -0.135 | 0.014 | 0.011 | -0.114 | 0.015 | 0.010 |
| -0.159 | 0.055 | 0.001 | -0.143 | 0.053 | 0.001 |
| -0.014 | 0.026 | 0.001 | 0.008 | 0.050 | 0.000 |
| -0.081 | 0.012 | 0.013 | -0.050 | 0.012 | 0.013 |
| -0.045 | 0.023 | 0.001 | -0.045 | 0.021 | 0.001 |
| -0.169 | 0.016 | 0.011 | -0.200 | 0.016 | 0.011 |
| -0.207 | 0.030 | 0.003 | -0.279 | 0.031 | 0.003 |
| -0.096 | 0.011 | 0.023 | -0.083 | 0.011 | 0.022 |
| -0.096 | 0.005 | 0.069 | -0.096 | 0.005 | 0.070 |
| -0.139 | 0.013 | 0.013 | -0.159 | 0.013 | 0.013 |
| -0.131 | 0.006 | 0.078 | -0.128 | 0.006 | 0.078 |

What if we want personalized predictions?

From Trees to Random Forests (Breiman, 2001)
“Adaptive” nearest neighbors algorithm

Causal Forests
Random forest, with:
• Subsampling to create alternative trees
  – Plus a lower bound on the probability that each feature is sampled
• Causal tree: splitting based on treatment effects, estimating treatment effects in the leaves
• Honest: two subsamples, one for tree construction, one for estimating treatment effects at the leaves
  – Alternative for observational data: construct the tree based on the propensity for assignment to treatment (outcome is W)
• Output: predictions for $\tau(x)$
Main results (Wager & Athey, 2015)
• First asymptotic normality result for random forests (prediction); extends to causal inference and the observational setting
• Confidence intervals for causal effects

Applying Method to Long-Term Prediction
• Estimate a model relating short-term metrics to long-term behavior, incorporating advertiser characteristics
• Estimate heterogeneous effects of the treatment in the short-term test
• Map effects to long-term impact on actors (advertisers)
• Predict long-run responses based on the responsiveness of the affected advertisers
• This method has difficulty capturing the full equilibrium response

Approach 2: Build a Structural Model
• Athey & Nekipelov (2012):
  – Assume profit maximization, estimate values
• Athey & Nekipelov (2014, in progress):
  – Specify a set of objectives
  – Estimate a Bayesian model of objective type and parameters
    • Use experiments and algorithmic releases to identify objectives: different objectives predict different reactions to change
  – Model the decision to change bid
• Both: use the model to predict how advertisers respond to short-term metrics
  – Do a short-term experiment
  – Calculate the new equilibrium based on the changes
  – Assume small interactions among advertisers are zero to approximate numerically

Summary: A Structural Model
• Findings
  – A substantial set of bidders is predictably non-responsive in the medium term (high implied cost of changing bids)
  – Exact match advertisers optimize for position, while broad match advertisers optimize for ROI from clicks
  – Short-term experiments and long-term counterfactuals can point in opposite directions
  – Examples: switching to a Vickrey auction, improving the accuracy of the click predictor

Conclusions
• The power of A/B testing leads to a culture of relying heavily on experiments
• Standard experiments are not appropriate for many problems
• The correct experimentation approach is expensive
• Layering analytics and structural models on top of experiments is cheaper
• But a culture of short-term experiments leads to resistance to non-experimental analytics, and hence to a short-term focus for innovation
• Advice:
  – Use rules of thumb to decide when to bring more costly long-term approaches to bear
  – Take every opportunity to study long-term effects, e.g., use staggered rollouts
  – Study heterogeneity and build insight