
Techniques and Applications for Audio

695410106

695410121

謝育任

劉威麟

Outline

Audio Watermark

Audio Classification:

Security Monitoring Using Microphone Arrays and Audio Classification

A Generic Audio Classification and Segmentation Approach for Multimedia Indexing and Retrieval

Introduction

What is a watermark?

Paper watermarks appeared nearly 700 years ago

A technique for data hiding

The oldest known one dates from 1292

The idea of digital image watermarking arose independently in 1990

Around 1993, the term “water mark” was coined

Terminology

Steganography refers in general to techniques that allow secret communication

Watermarking, as opposed to steganography, has the additional notion of robustness against attacks

Fingerprinting and labeling are terms that denote special applications of watermarking, e.g., copyright protection

Bit-stream watermarking is sometimes used for data hiding or watermarking of compressed data

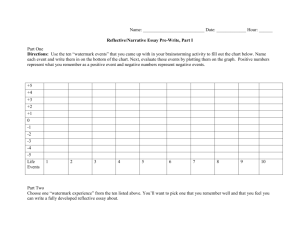

Requirements

A watermark should convey as much information as possible

A watermark should in general be secret and accessible only to authorized parties

A watermark should stay in the host data regardless of what happens to the host data

A watermark should be imperceptible

Requirements depend on the media to be watermarked

Blind vs. non-blind extraction (without or with the original)

May be required to run in real time

Low time complexity

Basic Watermarking Principle

There are three main issues in the design of a watermarking system:

Design of the watermark signal W to be added to the host signal. Typically, the watermark signal depends on a key K and watermark information I; possibly, it may also depend on the host data X into which it is embedded

Design of the embedding method itself, which incorporates the watermark signal W into the host data X, yielding the watermarked data Y

Design of the corresponding extraction method, which recovers the watermark information from the signal mixture using the key, with or without the help of the original
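The three design issues can be made concrete with a minimal NumPy sketch. Everything specific here is an assumption for illustration, not a method from the slides: W is a key-seeded ±1 chip sequence, modulated by the information bits I and scaled by |X| (so it depends on K, I and X); embedding is additive, Y = X + W; and extraction is the non-blind variant that subtracts the original X before correlating with the key's chips. The function names and the `strength` parameter are hypothetical.

```python
import numpy as np

def make_watermark(key, info_bits, host, strength=0.01):
    """Hypothetical W = f(K, I, X): key-driven +/-1 chips, one block per bit, scaled by |X|."""
    host = np.asarray(host, dtype=float)
    block = len(host) // len(info_bits)
    n = block * len(info_bits)
    chips = np.random.default_rng(key).choice([-1.0, 1.0], size=n)   # depends on K
    symbols = np.repeat([1.0 if b else -1.0 for b in info_bits], block)  # depends on I
    w = np.zeros_like(host)
    w[:n] = strength * np.abs(host[:n]) * symbols * chips             # depends on X
    return w

def embed(host, w):
    """Additive embedding: Y = X + W."""
    return np.asarray(host, dtype=float) + w

def extract_non_blind(marked, host, key, n_bits):
    """Non-blind extraction: remove the original X, then correlate with the key's chips."""
    residual = np.asarray(marked, dtype=float) - np.asarray(host, dtype=float)
    block = len(residual) // n_bits
    chips = np.random.default_rng(key).choice([-1.0, 1.0], size=block * n_bits)
    corr = (residual[: block * n_bits] * chips).reshape(n_bits, block).sum(axis=1)
    return [1 if c > 0 else 0 for c in corr]
```

A blind extractor would have to work from Y alone, treating X as interference; the spread spectrum scheme discussed later is one example.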

Embedding Technologies for Audio

Low-bit coding

Replaces the least significant bit of each sample with one bit of a coded binary string

The major disadvantage of this method is its poor immunity to manipulation

This method is useful only in a closed, digital-to-digital environment
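A minimal sketch of low-bit coding on integer PCM samples: each message bit overwrites the LSB of one sample, so each sample changes by at most one quantization step. The helper names are hypothetical, and a practical scheme would add a secret key and error correction.

```python
def lsb_embed(samples, bits):
    """Replace the LSB of the first len(bits) integer samples with the message bits."""
    if len(bits) > len(samples):
        raise ValueError("message longer than cover signal")
    out = list(samples)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit  # clear the LSB, then set it to the data bit
    return out

def lsb_extract(samples, n_bits):
    """Read the message back from the LSBs of the first n_bits samples."""
    return [s & 1 for s in samples[:n_bits]]

# lsb_embed([1000, -3, 7, 0], [1, 0, 1, 1]) returns [1001, -4, 7, 1]
```

Adding 1 to every sample, an almost inaudible change, flips every extracted bit, which illustrates the poor immunity to manipulation.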

Phase coding

Substitutes the phase of an initial audio segment with a reference phase that represents the data

Procedure

Break the sound sequence s[i], 0 ≤ i ≤ I−1, into a series of N short segments, sₙ[i], where 0 ≤ n ≤ N−1

Apply a K-point discrete Fourier transform (DFT) to the n-th segment, sₙ[i], where K = I/N, and create a matrix of the phases, ψₙ(ωₖ), and magnitudes, Aₙ(ωₖ), for 0 ≤ k ≤ K−1

Store the phase difference between each pair of adjacent segments, Δψₙ₊₁(ωₖ) = ψₙ₊₁(ωₖ) − ψₙ(ωₖ), for 0 ≤ n ≤ N−2

Represent each data bit as a phase ψ_data = π/2 or −π/2, representing 0 or 1, respectively

Re-create the phase matrices for n > 0 using the stored phase differences: ψ′ₙ(ωₖ) = ψ′ₙ₋₁(ωₖ) + Δψₙ(ωₖ)

Use the modified phase matrix and the original magnitude matrix to reconstruct the sound signal by applying the inverse DFT
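The procedure above can be sketched with NumPy's real FFT. Assumptions of ours: the data phases go into bins 1..len(bits) of the first segment, the segment length plays the role of K = I/N, and no smoothing is applied at segment boundaries (a practical phase coder would smooth the phase transitions). The function names are hypothetical.

```python
import numpy as np

def phase_encode(signal, bits, seg_len):
    """Hypothetical phase-coding sketch: hide bits in the phase of segment 0."""
    n_seg = len(signal) // seg_len                          # N segments of K samples
    segs = np.asarray(signal[: n_seg * seg_len], dtype=float).reshape(n_seg, seg_len)
    spec = np.fft.rfft(segs, axis=1)                        # DFT of every segment (real input)
    mag, phase = np.abs(spec), np.angle(spec)               # A_n(w_k) and psi_n(w_k)
    dphase = np.diff(phase, axis=0)                         # psi_{n+1} - psi_n
    if len(bits) >= spec.shape[1] - 1:
        raise ValueError("too many bits for this segment length")
    # Bit 0 -> +pi/2, bit 1 -> -pi/2, written into bins 1..len(bits) of segment 0
    phase[0, 1 : len(bits) + 1] = np.where(np.asarray(bits) == 0, np.pi / 2, -np.pi / 2)
    for n in range(1, n_seg):                               # rebuild later phases from differences
        phase[n] = phase[n - 1] + dphase[n - 1]
    # Original magnitudes + modified phases -> inverse DFT, segments concatenated
    return np.fft.irfft(mag * np.exp(1j * phase), n=seg_len, axis=1).ravel()

def phase_decode(encoded, n_bits, seg_len):
    """Read the bits back from the sign of the phase in the first segment."""
    first = np.fft.rfft(np.asarray(encoded[:seg_len], dtype=float))
    return [0 if p > 0 else 1 for p in np.angle(first[1 : n_bits + 1])]
```

Because only phases change and the inter-segment phase differences are preserved, the magnitude spectrum of every segment stays the same.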

Spread spectrum coding

In the decoding stage, the following is assumed:

The pseudorandom key is maximal

The key stream used for encoding is known by the receiver; signal synchronization is done, and the start/stop points of the spread data are known

The following parameters are known by the receiver: chip rate, data rate, and carrier frequency

To keep the noise level low and inaudible, the spread code is attenuated to roughly 0.5 percent of the dynamic range of the host sound file
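A baseband direct-sequence sketch under the decoding assumptions listed above (shared key, known chip rate, synchronized start point). Assumptions of ours: a seeded NumPy generator stands in for a maximal-length PN sequence, each bit is spread over `chips_per_bit` samples with no carrier modulation, and the 0.5 percent attenuation is applied to the host's peak-to-peak range. Detection is blind: it despreads with the key's chips and sums per bit. Function names are hypothetical.

```python
import numpy as np

def pn_sequence(key, length):
    """Stand-in for a maximal-length PN sequence: +/-1 chips from a seeded RNG."""
    return np.random.default_rng(key).choice([-1.0, 1.0], size=length)

def ss_embed(host, bits, key, chips_per_bit):
    host = np.asarray(host, dtype=float)
    n = len(bits) * chips_per_bit
    # Map bits to +/-1 symbols and hold each symbol for chips_per_bit samples
    symbols = np.repeat(np.where(np.asarray(bits) == 1, 1.0, -1.0), chips_per_bit)
    alpha = 0.005 * (host.max() - host.min())     # ~0.5 % of the host's dynamic range
    out = host.copy()
    out[:n] += alpha * symbols * pn_sequence(key, n)
    return out

def ss_extract(marked, n_bits, key, chips_per_bit):
    n = n_bits * chips_per_bit
    # Receiver knows the key, the chip rate and the start point (synchronized)
    despread = np.asarray(marked[:n], dtype=float) * pn_sequence(key, n)
    sums = despread.reshape(n_bits, chips_per_bit).sum(axis=1)
    return [1 if s > 0 else 0 for s in sums]
```

The low embedding level is paid for with many chips per bit: the host acts as noise in the correlation, so detection only becomes reliable when the despread sum of the watermark clearly exceeds that of the host.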

Echo data hiding

The data are hidden by varying three parameters of the echo: initial amplitude, decay rate, and offset

Example

Decoding:

magnitude of the autocorrelation of the encoded signal’s cepstrum:
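A simplified echo-hiding sketch: each segment carries one bit as a single echo with amplitude `alpha` at offset `d0` (bit 0) or `d1` (bit 1), and decoding compares the real cepstrum at the two offsets. This is a simplification of the autocorrelation-of-cepstrum detector described above; the parameter values, the per-segment echo without mixing between segments, and the function names are all assumptions.

```python
import numpy as np

def echo_embed(host, bits, seg_len, d0=50, d1=100, alpha=0.4):
    host = np.asarray(host, dtype=float)
    out = host.copy()
    for i, bit in enumerate(bits):
        seg = host[i * seg_len : (i + 1) * seg_len]
        d = d1 if bit else d0                  # the echo offset encodes the bit
        echo = np.zeros_like(seg)
        echo[d:] = seg[:-d]                    # delayed copy inside the segment
        out[i * seg_len : (i + 1) * seg_len] = seg + alpha * echo
    return out

def real_cepstrum(x):
    spectrum = np.abs(np.fft.fft(x)) + 1e-12   # small offset avoids log(0)
    return np.fft.ifft(np.log(spectrum)).real

def echo_extract(marked, n_bits, seg_len, d0=50, d1=100):
    bits = []
    for i in range(n_bits):
        c = real_cepstrum(marked[i * seg_len : (i + 1) * seg_len])
        bits.append(1 if c[d1] > c[d0] else 0)  # larger cepstral peak wins
    return bits
```

An echo of amplitude a at delay d adds a peak of about a/2 to the real cepstrum at quefrency d, which is why the comparison works.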

Classification of attacks

“Simple attacks” (other possible names include “waveform attacks” and “noise attacks”) are conceptually simple attacks that attempt to impair the embedded watermark by manipulating the whole watermarked data, without an attempt to identify and isolate the watermark

“Detection-disabling attacks” (other possible names include “synchronization attacks”) are attacks that attempt to break the correlation and to make the recovery of the watermark impossible or infeasible for a watermark detector

Classification of attacks (continued)

“Ambiguity attacks” (other possible names include “deadlock attacks”, “inversion attacks”, “fake-watermark attacks”, and “fake-original attacks”) are attacks that attempt to confuse by producing fake original data or fake watermarked data

“Removal attacks” are attacks that attempt to analyze the watermarked data, estimate the watermark or the host data, separate the watermarked data into host data and watermark, and discard only the watermark

Watermarking algorithms

LSB

Works in the time domain and embeds the watermark in the least significant bits

The message is embedded many times into the audio signal

Parameters

Secret key, error correction code, embedded message, etc.

Microsoft

Works in the frequency domain and embeds the watermark in the frequency coefficients using a spread spectrum technique

Only one parameter: the embedded message

VAWW ─ Viper Audio Water Wavelet

Works in the wavelet domain and embeds the watermark in selected coefficients

Parameters:

Secret key

Threshold, which selects the coefficients for embedding. The default value is 40

Scale factor, which sets the embedding strength. The default value is 0.2

Publimark

Open-source tool

Parameters:

Embedded message

Public/private key


Audio Classification


Security

Multimedia Indexing and Retrieval

Other

Introduction

The proposed system:

Location

Type of sound

SNR (signal-to-noise ratio):

Reflection coefficient: a reflection coefficient describes either the amplitude or the intensity of a reflected wave relative to an incident wave.

Proposed security monitoring instrument

Center Clipping

c(n) is the center-clipped sample at time index n

s(n) is the audio sample at time index n
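The slide's center-clipping formula did not survive extraction. A common form of the center clipper used before autocorrelation pitch detection is sketched here: samples inside ±C_L are set to zero, and samples outside are shifted toward zero by C_L. Setting C_L to 30 % of the peak amplitude is an assumption, as is the function name.

```python
import numpy as np

def center_clip(s, ratio=0.3):
    """c(n) = s(n) - C_L if s(n) > C_L; s(n) + C_L if s(n) < -C_L; 0 otherwise."""
    s = np.asarray(s, dtype=float)
    c_l = ratio * np.max(np.abs(s))   # clipping level C_L (assumed: 30 % of peak)
    c = np.zeros_like(s)
    c[s > c_l] = s[s > c_l] - c_l
    c[s < -c_l] = s[s < -c_l] + c_l
    return c
```

Clipping removes much of the formant structure that could otherwise produce spurious autocorrelation peaks, leaving the periodic pitch pulses.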

PR algorithm

The PR algorithm divides the audio segment into frames, estimates the presence of human pitch in each frame, and calculates a PR parameter.

PR = NP / NF

NP: the number of frames that contain human pitch

NF: the total number of frames

Pitch Value

$$\text{Pitch} = \arg\max_{\tau} \left\{ R_{xx}(\tau) : R_{xx}(\tau) \ge 0.4\,\mathrm{RMSE} \right\}$$

Human Pitch = {Pitch : 70 Hz < Pitch < 280 Hz}
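A sketch of the PR computation for one audio segment. Assumptions of ours: frames do not overlap, pitch is taken from the autocorrelation peak over lags corresponding to 70 to 280 Hz, and the "0.4 · RMSE" test is interpreted as the peak reaching 0.4 of the zero-lag autocorrelation (the frame energy). Center clipping, which would normally precede this step, is omitted for brevity; function names are hypothetical.

```python
import numpy as np

def frame_pitch(frame, fs, f_lo=70.0, f_hi=280.0, thresh=0.4):
    """Return the pitch in Hz if a human-range autocorrelation peak passes the test, else None."""
    x = np.asarray(frame, dtype=float) - np.mean(frame)
    r = np.correlate(x, x, mode="full")[len(x) - 1 :]   # R_xx(tau) for tau >= 0
    if r[0] <= 0:
        return None                                      # silent frame
    lag_min, lag_max = int(np.ceil(fs / f_hi)), int(fs / f_lo)
    lags = np.arange(lag_min, min(lag_max, len(x) - 1) + 1)
    best = lags[np.argmax(r[lags])]
    # Assumed voicing test: the peak must reach thresh * R_xx(0)
    return fs / best if r[best] >= thresh * r[0] else None

def pitch_ratio(segment, fs, frame_len):
    """PR = NP / NF over non-overlapping frames."""
    frames = [segment[i : i + frame_len] for i in range(0, len(segment) - frame_len + 1, frame_len)]
    n_p = sum(frame_pitch(f, fs) is not None for f in frames)   # NP
    return n_p / len(frames)                                     # NF = len(frames)
```

A segment with a high PR is likely to contain speech; the non-speech branch handles the rest.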

Proposed system

Non-speech Classification

A Time Delay Neural Network (TDNN) is used to classify a non-speech audio segment into an audio type (e.g., door opening, fan noise)

MFCC (Mel-Frequency Cepstral Coefficients):

ΔMFCC (delta Mel-Frequency Cepstral Coefficients):
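The MFCC and ΔMFCC formulas are missing from the slides. As an illustration only, this sketch computes delta features from an existing MFCC matrix with the widely used regression formula d_t = Σₙ n (c_{t+n} − c_{t−n}) / (2 Σₙ n²), repeating edge frames at the borders. The half-width of 2 and the function name are assumptions; computing the MFCCs themselves is out of scope here.

```python
import numpy as np

def delta(features, width=2):
    """Regression-based delta coefficients; features has shape (frames, coeffs)."""
    c = np.asarray(features, dtype=float)
    padded = np.pad(c, ((width, width), (0, 0)), mode="edge")  # repeat first/last frame
    denom = 2 * sum(n * n for n in range(1, width + 1))
    T = len(c)
    d = np.zeros_like(c)
    for n in range(1, width + 1):
        # padded[width + t + n] is c_{t+n}; padded[width + t - n] is c_{t-n}
        d += n * (padded[width + n : width + n + T] - padded[width - n : width - n + T])
    return d / denom
```

Stacking the MFCC and ΔMFCC vectors gives the TDNN both the spectral shape and its rate of change over time.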

Simulation Environment

Simulation Environment :

Simulation Results

OR (overlap ratio) = 0.85

SD (segment duration) = 400 ms
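Assuming OR is the fraction of each segment shared with the next one, a 400 ms segment with OR = 0.85 advances by 400 × (1 − 0.85) = 60 ms. The hypothetical helper below turns those two settings into sample ranges for a given sampling rate.

```python
def segment_bounds(n_samples, fs, sd_ms=400, overlap=0.85):
    """(start, end) sample indices of overlapping analysis segments."""
    seg = int(round(fs * sd_ms / 1000))             # samples per segment (SD)
    hop = max(1, int(round(seg * (1 - overlap))))   # step between segment starts
    return [(s, s + seg) for s in range(0, n_samples - seg + 1, hop)]

# At 16 kHz: 6400-sample segments starting every 960 samples (60 ms)
```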

Introduction

A Generic Audio Classification and Segmentation Approach for Multimedia Indexing and Retrieval

Bi-modal:

Bit-stream mode: operates on the compressed bit-stream (a series of bits)

Generic mode: temporal and spectral information is extracted from the PCM samples.

Classification :

Speech

Music

Silence

Fuzzy

Erroneous classification

Critical errors: one pure class is misclassified as another pure class.

Semi-critical errors: a fuzzy class is misclassified as one of the pure classes.

Non-critical errors: a pure class is misclassified as a fuzzy class.
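A small sketch of the three error categories, assuming speech, music and silence are the pure classes and "fuzzy" is the only non-pure label (the slide does not say so explicitly). The function name is hypothetical.

```python
PURE = {"speech", "music", "silence"}  # assumed pure classes

def error_type(true_cls, predicted):
    """Return the error category for one classification, or None if correct."""
    if true_cls == predicted:
        return None
    if true_cls in PURE and predicted in PURE:
        return "critical"
    if true_cls == "fuzzy" and predicted in PURE:
        return "semi-critical"
    if true_cls in PURE and predicted == "fuzzy":
        return "non-critical"
    raise ValueError("unknown class label")
```

The ordering reflects how much damage each error does to indexing: confusing two pure classes is worst, while labeling a pure segment as fuzzy loses only precision.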

Classification and Segmentation framework

Spectral Template

Pulse Code Modulation: PCM is a common method of storing and transmitting uncompressed digital audio. It is also a very common format for AIFF and WAV files.

1: positive voltage pulse

0: absence of pulse

Spectral template: formed from the input audio source; it can be obtained from the MDCT coefficients of MP3 granules.

Power spectrum: obtained from the PCM samples.

About MP3

The Layer III encoding process starts by dividing the audio signal into frames, each of which corresponds to one or two granules.

Each granule has 576 PCM samples.

There are three windowing modes in the MPEG Layer III encoding scheme: long, short, and mixed.
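With 576 PCM samples per granule, the frame length follows directly. This helper assumes two granules per frame (MPEG-1 Layer III) or one (the MPEG-2 lower-sampling-rate extension); at 44.1 kHz a two-granule frame holds 1152 samples, about 26.1 ms. The function names are hypothetical.

```python
GRANULE_SAMPLES = 576  # PCM samples per granule

def frame_samples(granules_per_frame):
    return granules_per_frame * GRANULE_SAMPLES

def frame_duration_ms(granules_per_frame, fs_hz):
    return 1000.0 * frame_samples(granules_per_frame) / fs_hz
```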

Bit-Stream Mode

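MDCT (Modified discrete cosine transform):

$$X_k = \sum_{n=0}^{2N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \frac{1}{2} + \frac{N}{2}\right)\left(k + \frac{1}{2}\right)\right], \quad k = 0, \ldots, N-1$$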
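A direct O(N²) NumPy transcription of the MDCT formula above, useful for checking small cases. It is a sketch, not how an MP3 encoder computes the transform (real encoders use windowed, fast algorithms); the name `mdct` is ours.

```python
import numpy as np

def mdct(x):
    """Direct MDCT: 2N input samples -> N coefficients X_k."""
    x = np.asarray(x, dtype=float)
    two_n = len(x)
    if two_n % 2:
        raise ValueError("MDCT input length must be even (2N)")
    n_half = two_n // 2                      # N
    n = np.arange(two_n)
    k = np.arange(n_half)
    # basis[k, n] = cos(pi/N * (n + 1/2 + N/2) * (k + 1/2))
    basis = np.cos(np.pi / n_half * np.outer(k + 0.5, n + 0.5 + n_half / 2))
    return basis @ x
```

In Layer III long blocks, each of the 32 subbands produces 18 MDCT lines from 36 windowed inputs (N = 18), which gives the 576 lines per granule.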

Bit-Stream Mode

MDCT (w, f)

w represents the window number

f represents the line frequency index

Frame Features

Total Frame Energy (TFE) calculation: used to detect silent frames

$$TFE_j = \sum_{w}^{NoW} \sum_{f}^{NoF} \left(SPEQ_j(w, f)\right)^2$$

Band Energy Ratio (BER) calculation: the energy ratio between two spectral regions that are separated by a single cut-off frequency f_c.

$$BER_j(f_c) = \frac{\sum_{w}^{NoW} \sum_{f=0}^{f_c} \left(SPEQ_j(w, f)\right)^2}{\sum_{w}^{NoW} \sum_{f=f_c}^{NoF} \left(SPEQ_j(w, f)\right)^2}$$
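A sketch of the two frame features, assuming SPEQ_j is available as a NumPy array of shape (NoW, NoF) (windows × frequency lines) and f_c is given as a line index. To avoid counting line f_c in both sums, the sketch assigns it to the upper band only; that tie-breaking choice and the function names are ours.

```python
import numpy as np

def total_frame_energy(speq):
    """TFE_j: sum of squared spectral coefficients over all windows w and lines f."""
    return float(np.sum(np.square(speq)))

def band_energy_ratio(speq, fc):
    """BER_j(fc): energy of lines below fc divided by energy of lines fc and above."""
    speq = np.asarray(speq, dtype=float)   # shape (NoW, NoF)
    low = np.sum(speq[:, :fc] ** 2)
    high = np.sum(speq[:, fc:] ** 2)
    return float(low / high) if high > 0 else float("inf")
```

A frame whose TFE falls below a chosen threshold can be marked silent before the other features are computed.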

Frame Features

Fundamental Frequency Estimation: if the input audio is harmonic over a fundamental frequency, the real fundamental frequency (FF) value can be estimated from the spectral coefficients SPEQ(w, f)

Subband Centroid Frequency Estimation: Subband Centroid (SC) is the first moment of the spectral distribution.

$$f_{SC} = \frac{\sum_{w}^{NoW} \sum_{f}^{NoF} SPEQ(w, f) \cdot FL(f)}{\sum_{w}^{NoW} \sum_{f}^{NoF} SPEQ(w, f)}$$
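A sketch of the subband centroid as a weighted mean of line frequencies. Because MDCT coefficients can be negative, this sketch uses their magnitudes as SPEQ(w, f), and it takes FL(f), the frequency of line f, as a caller-supplied array; both choices are assumptions, as is the function name.

```python
import numpy as np

def subband_centroid(speq, line_freqs):
    """First moment of the (magnitude) spectrum over all windows and lines."""
    speq = np.abs(np.asarray(speq, dtype=float))    # shape (NoW, NoF)
    fl = np.asarray(line_freqs, dtype=float)        # FL(f), shape (NoF,)
    total = speq.sum()
    return float((speq * fl).sum() / total) if total > 0 else 0.0
```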

Initial Classification

Segment Features

Transition Rate (TR): the rate of transitions between consecutive frames. TR has a forced-classification region for speech.

$$TR(S) = \frac{NoF + \sum_{i}^{NoF} TP_i}{2\,NoF}$$

Fundamental Frequency Segment Feature: FF has a forced-classification region for music.

Subband Centroid Segment Feature: SC has two forced-classification regions, one for music and the other for speech content.

Step 2

Generic Decision Table

Step 3