University Assessment Committee Report

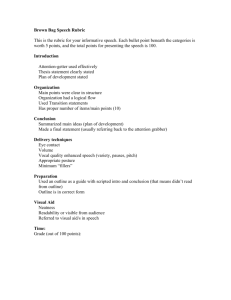

Institutional Planning, Assessment & Research

Assessment Review Committee Report
College of Education
Diana B. Lys, Chair
Anticipated Presentation, February 2013

Mentoring/Review Process
• The COE ARC held four meetings as part of its review process:
  – October 10
    • Introduced ARC task. Commenced review of COE exemplars to build rating consistency. Time did not allow for a full review of a report.
  – October 16
    • Reviewed ARC scoring assignments and timeline. Collaboratively developed COE ARC Guidelines for Rubric Review Process. Scoring assignments were paired, but completed independently.
  – November 18
    • Discussed questions and concerns and revised Guideline accordingly. COE ARC began to enter results in online rubric.
  – December 10
    • Reflected on ARC process and began drafting COE ARC report. Full feedback was collected via Qualtrics and compiled by OAA.

2012-13 Component Data
College of Education as of January 10, 2014

| Component | Developing | Acceptable | Proficient |
| --- | --- | --- | --- |
| Outcome | 23 | 33 | 109 |
| Means of Assessment | 81 | 123 | 57 |
| Criteria for Success | 59 | 66 | 137 |
| Results | 49 | 96 | 116 |
| Actions Taken | 83 | 72 | 104 |
| Follow-Up to Actions Taken | 125 | 51 | 75 |

Data from Alex Senior, IPAR. January 10, 2014

Data Visualization
[Chart: vertical axis from 0% to 100%; legend: Proficient, Acceptable, Developing]
Data from Alex Senior, IPAR. January 10, 2014

2012-13 Best Practices – “Closing the Loop”
ELMID - Elementary Education (MAEd)
• Outcome: Reflective Practitioner: Elementary Education (MAEd) candidates will reflect on their teaching practice by developing and implementing an action research project in an elementary classroom.
• Means of Assessment:
  Means of Assessment #1: MAEd students will complete the Exit Survey for Advanced Program Graduates upon graduation.
  Responses to the action research items will be calculated. The responses range from 1 - strongly disagree to 4 - strongly agree.
  Means of Assessment #2: MAEd students will complete GEP 3 - Action Research Project in ELEM 6001. The evidence will be scored using a common, clearly defined rubric. ELEM 6001 instructors will score the evidence. The rubric scale is 1 - Below Proficient, 2 - Proficient, 3 - Accomplished. The rubric criteria include 1) Introduction, 2) Literature Review and Statement of Research Question, 3) Methodology, 4) Findings/Results, 5) Discussion and Conclusions, 6) Reflection, 7) Reference List, 8) Mechanics, 9) Appendices.
• Criteria for Success:
  Criterion for Success #1: The mean rating for all graduates will be 3.30 or higher. The responses range from 1 - strongly disagree to 4 - strongly agree.
  Criterion for Success #2: Students will earn an overall mean score of 2.0 or higher. The rubric scale is 1 - Below Proficient, 2 - Proficient, 3 - Accomplished. The rubric criteria include 1) Introduction, 2) Literature Review and Statement of Research Question, 3) Methodology, 4) Findings/Results, 5) Discussion and Conclusions, 6) Reflection, 7) Reference List, 8) Mechanics, 9) Appendices.
• 2012-2013 Results:
  Results #1: Spring 2013: A total of 7 students completed the Graduate Exit Survey. For item #9 (The program developed or enhanced my ability to apply research to my professional practice), the mean was 3.57 out of 4.00. Five students strongly agreed with the statement, 1 agreed with the statement, and 1 disagreed with the statement. For item #10 (The program developed or enhanced my ability to conduct research in my professional field), the mean was also 3.57 out of 4.00. Five students strongly agreed with the statement, 1 agreed with the statement, and 1 disagreed with the statement. Based upon the criterion for success of a mean of 3.30 or higher, the criterion was met.
  Results #2: A mean score of 77.25 out of 100 for 14 students was reported.
  The overall mean score on the rubric was 2.32 out of 3.0. The criterion for success was identified as an overall mean score of 2.0 or higher. This indicates that the outcome was successfully met. Individual mean scores for each section are as follows:
    1) Introduction - Mean 2.5
    2) Literature Review and Statement of Research Question - Mean 2.43
    3) Methodology - Mean 2.29
    4) Findings/Results - Mean 2.0
    5) Discussion and Conclusions - Mean 2.21
    6) Reflection - Mean 2.43
    7) Reference List - Mean 2.21
    8) Mechanics - Mean 2.21
    9) Appendices - Mean 2.32
  The criterion for success was met.
• Actions Taken (based on analysis of results):
  Actions Taken #1: Faculty met and discussed the results and concluded that the criterion for success had been met based upon the students completing the Spring 2013 survey. Faculty expressed concern that the response rate was low and provided inconclusive results based upon the objective. As a result of the limited data gathered in prior semesters, the faculty developed a strategy to involve more students in providing responses in order to create a more robust data set. This strategy includes identifying reporting times and reminding students and faculty that participation is important to provide an accurate representation of our program. The faculty also discussed the creation and implementation of a student reflection activity to be administered at the beginning of the ELEM MAEd program as well as a culminating activity in ELEM 6001.
  Actions Taken #2: Faculty met and discussed the results. The faculty emphasized the rubric scale for this item. A score of 2 out of 3 represents proficiency. As students are learning to become action researchers, the goal of being proficient is a realistic one for students at this stage in their development as researchers. The mean for each part of the action research project was 2.0 or greater.
  Faculty discussed providing additional support for students in the areas of methodology and findings/results.
• Initiative/Change/Strategy Implemented for Program/Unit improvement:
  Follow-up to Actions Taken #1: The action implemented was a success. The criterion for success was still met. The faculty continued to support students in their journey to become reflective practitioners through the process of designing and conducting action research. The faculty implemented revisions based upon initial course discussion to provide further clarity of the process.
  Follow-up to Actions Taken #2: The action implemented was successful in enhancing student reflection on teaching practice. The faculty continued discussion regarding an increase in the criterion for success and determined that, given the rubric scale of 1 - Below Proficient, 2 - Proficient, 3 - Accomplished, a goal for students should be to become proficient as they are learning to become action researchers.

2012-13 Best Practices – “Closing the Loop”
HACE - Counselor Education (MS)
• Outcome: Impact on Student Learning: Candidates completing the MS in Counselor Education should demonstrate a readiness to effectively work with diverse populations in the role of a counselor.
• Means of Assessment: Counselor Education Comprehensive Examination
• Criteria for Success: Students completing the Counselor Education Comprehensive Examination for the MSEd degree should answer questions related to diversity in counseling at a satisfactory level as determined by the scoring rubric. All students completing the degree must take this exam, and successful completion is based on a scoring rubric determined by the faculty. More specifically, students are expected to attain a score that is no less than one standard deviation below the mean for the national norm group for the version of the Counselor Preparation Comprehensive Exam (CPCE) they complete.
• 2012-2013 Results: Of 47 students taking the CPCE in the academic year 2012-2013, only 2 students failed to score at least within one standard deviation of the national mean on the subscale corresponding to Social and Cultural Foundations.
• Actions Taken (based on analysis of results): The Counselor Education Faculty decided to continue to use the Counselor Preparation Comprehensive Exam (CPCE) as the criterion for success. In addition, faculty decided to require students who score lower than one standard deviation below the national mean on the Social and Cultural Foundations subscale of the CPCE to complete a remediation activity designed by the faculty.
• Initiative/Change/Strategy Implemented for Program/Unit improvement: The strategy implemented for program improvement includes developing a remediation activity to help students who do not score better than 1 standard deviation below the mean on the Social and Cultural Foundations subscale of the CPCE. The purpose is to help students be better prepared to work with diverse populations as they move into counseling careers.

2012-13 Best Practices – “Closing the Loop”
ILS - Library Science (MLS)
• Outcome: Content knowledge: Library Science (MLS) candidates develop information literacy, reference inquiry, or information organizational skills.
• Means of Assessment: Candidates complete an assignment dealing with eight problem sets using a variety of reference sources.
• Criteria for Success: 95 percent of students will score at the proficient or above proficient level, as defined by the assignment rubric.
• 2012-2013 Results: 100% of students scored proficient or above proficient.
  Feedback from students was positive, undoubtedly because students felt a sense of accomplishment when they learned the basic information literacy skills of answering questions by employing successful strategies and using eight categories of reference works that they will use in their careers.
• Actions Taken (based on analysis of results): The assignment was retained. The program faculty decided to examine those problem sets with lower candidate achievement to determine areas for improvement within the assignment. This outcome is critical to program candidate development of information literacy skills in other program courses.
• Initiative/Change/Strategy Implemented for Program/Unit improvement: The program faculty decided to examine those problem sets with lower candidate achievement to determine areas for improvement within the assignment. This outcome is critical to program candidate development of information literacy skills in other program courses.

2012-13 Best Practices – “Closing the Loop”
LEED - School Administration (MSA)
• Outcome: MSA candidates develop leadership skills and ability to lead complex educational organizations.
• Means of Assessment: The MSA candidate's leadership portfolio is assessed using the service leadership rubrics that demonstrate proficiency of the leadership skills associated with the North Carolina State Standards for School Executive competencies. These competencies include: strategic leadership, instructional leadership, human resource leadership, managerial leadership, cultural leadership, and external development leadership.
• Criteria for Success: 100% of the students completing the program of study will demonstrate a minimum numerical score of 3 (which indicates proficiency) on a 4-point rubric.
• 2012-2013 Results: 100% of the students (N=85) scored a level 3 or higher on the 4-point rubric.
• Actions Taken (based on analysis of results): The scoring rubrics were finalized to be used in the portfolio reviews. In developing the rubrics, the program was improved because the discussions about proficiency levels were then shared with students as they were completing their projects. These discussions helped develop a consensus among faculty about the expectations for proficiency. In addition, students were also able to understand more clearly the program's expectations for proficiency in the portfolio.
• Initiative/Change/Strategy Implemented for Program/Unit improvement: Faculty indicated that the formative completed at the end of year 1 of the program alerted the program adviser to potential learning issues. As a result, the internship supervisor contacted the student and the site supervisor to discuss the areas of concern and to develop plans for improvement. As a team with the student, the areas of concern were addressed during the internship with positive results.

2012-13 Best Practices – “Closing the Loop”
LEHE - History Education (BS)
• Outcome: Evidence of Planning: HIED BS candidates demonstrate the ability to plan focused, sequenced instruction for a specific learning segment.
• Means of Assessment:
  1. Senior II interns are rated by their university supervisor a minimum of four times during the senior II semester. Students are assessed in six areas: (A) professional dispositions and relationships; (B) classroom climate and culture; (C) instructional planning; (D) implementation of instruction; (E) classroom management; and (F) evaluate/assessment - impact on student learning. Senior II interns are rated on a 3 point scale ranging from 1 (below proficient) to 3 (above proficient). A score of 2 indicates "proficient." Items C1-C14 assess senior II interns' proficiency in planning instruction and assessment.
  2.
  Undergraduate faculty have met and determined that the identified items in this outcome and means of assessment are appropriate and relevant for programmatic feedback and growth and that they will continue to be used. Faculty discussed how data collected from the progress reports are indicative of positive practice in the program, yet there will need to be additional supports provided through methods courses and intern support protocols to assure that students are performing at the highest level with reference to this outcome.
• Criteria for Success:
  1. 90% of HIED senior II interns will score 2.0 or higher (on a 3 point scale) on the final (fourth) intern progress report.
  2. 90% of HIED senior II interns will score at level 3 (on a 5 level scale) on rubrics 1-5 of the edTPA portfolio.
• 2012-2013 Results:
  1. 100% (36 of 36) of interns scored at 2.0 or higher (on a 3 point scale) on items C1, C2, C3, C4, C5, C6, C9, C10, and C13 of the fourth intern progress report. 97.2% (35 of 36) of interns scored at 2.0 or higher (on a 3 point scale) on items C7, C8, C11, C12, and C14 of the fourth intern progress report.
  2. 97.2% of HIED interns (35 of 36) scored at level 3 (on a 5 level scale) on rubric 1 of the edTPA portfolio. 100% of HIED interns (36 of 36) scored at level 3 (on a 5 level scale) on rubric 2 of the edTPA portfolio. 100% of HIED interns (36 of 36) scored at level 3 (on a 5 level scale) on rubric 3 of the edTPA portfolio. 94.4% of HIED interns (34 of 36) scored at level 3 (on a 5 level scale) on rubric 4 of the edTPA portfolio. 94.4% of HIED interns (34 of 36) scored at level 3 (on a 5 level scale) on rubric 5 of the edTPA portfolio.
• Actions Taken (based on analysis of results):
  1. Undergraduate faculty have met and determined that the identified items in this outcome and means of assessment are appropriate and relevant for programmatic feedback and growth and that they will continue to be used.
  Faculty discussed how data collected from the progress reports are indicative of positive practice in the program, yet there will need to be additional supports provided through methods courses and intern support protocols to assure that students are performing at the highest level with reference to this outcome.
  2. Undergraduate faculty have met and determined that this is an effective measure of student development in this area and that this means of assessment should continue into the 2013-2014 academic year. Program faculty have also determined that the edTPA should continue to be used by the program as a means of systematic and ongoing program assessment.
• Initiative/Change/Strategy Implemented for Program/Unit improvement: Change from 2011-12 to 2012-13: The professional portfolio was replaced by a more comprehensive performance assessment called the TPA assessment. Tasks 1 and 2 (rubrics 1-5) address students' instructional planning and implementation and, therefore, those components relate to this outcome. In spring 2012, 89.5% of students (17 of 19) earned a rating of 3.0 (proficient) or higher on rubrics 1-5 of TPA.

2012-13 Best Practices – “Closing the Loop”
MSITE - Mathematics, Secondary Education (BS)
• Outcome: Global Perspective: MATE BS candidates communicate, interact and work positively with individuals from other cultural groups.
• Means of Assessment: Two measures will be used for this outcome:
  (1) Section B of the students’ 4th Progress Report during the Senior II internship is: “Classroom Climate and Culture.” This section has 8 items. Each item is evaluated on a 3-point scale: 1 = below proficient; 2 = proficient; 3 = above proficient.
  (2) UG Exit Survey items where students rate their confidence from (1) Not confident at all to (5) Very confident on the items: (a) “I respect cultural backgrounds different from my own”; and (b) “I am able to communicate with students and families from other cultural groups.”
• Criteria for Success:
  (1) (a) 100% of students will score a 2 or higher on each item; (b) the overall class average for each item will be at least 2.5.
  (2) (a) 100% of students will score a 2 or higher on each item; (b) the overall class average for each item will be at least 2.5.
• 2012-2013 Results:
  (1) For AY 2012-2013, 13 students completed their internship. 100% of students scored a 2 or higher on each item in Section B: “Classroom Climate and Culture”. Students scored a class average of 2.5 or higher on 6 of 8 items. The average score for item 5, “Demonstrates knowledge of diverse cultures,” was 2.15. The average score for item 6, “Demonstrates culturally responsive teaching,” was 2.15. Criterion (1) not met.
  (2) For AY 2012-2013, 8 students completed the UG Exit Survey. (a) 100% of students scored a 3 or higher on the item “I respect cultural backgrounds different from my own” with an overall class average of 4. (b) 100% of students scored a 3 or higher on the item “I am able to communicate with students and families from other cultural groups” with an overall class average of 3.63. Criterion (2) met.
• Actions Taken (based on analysis of results): The MATE faculty met and reviewed results of data collected from AY 2012-2013. Because communicating and working positively with diverse individuals is essential to being a successful teacher, MATE faculty decided on the following actions:
  (1) Provide greater focus on classroom management and working with students from diverse backgrounds in MATE 4323, 4324, and 4325 by the addition of readings, activities, and field assignments focusing on diversity.
  (2) For Means of Assessment 1, add statements A3 (“Collaborate with colleagues to monitor student performance and make instruction responsive to cultural differences and individual learning needs”) and C10 (“Appropriately uses materials or lessons that counteract stereotypes and acknowledges the contributions of all cultures and incorporates different points of view in instruction”) to the items in Section B from the candidates’ 4th progress report.
• Initiative/Change/Strategy Implemented for Program/Unit improvement:
  (1) For AY 2012-2013, 13 students completed their internship. 100% of students scored a 2 or higher on each item in Section B: “Classroom Climate and Culture”. Students scored a class average of 2.5 or higher on 6 of 8 items. Item 1, which was previously 2.38, increased to 2.62. However, the average score for item 5, “Demonstrates knowledge of diverse cultures,” fell (2.15). In addition, the average score for item 6, “Demonstrates culturally responsive teaching,” fell (2.15). The action was not successful.
  (2) For AY 2012-2013, 8 students completed the UG Exit Survey. (a) 100% of students scored a 3 or higher on the item “I respect cultural backgrounds different from my own” with an overall class average of 4. (b) 100% of students scored a 3 or higher on the item “I am able to communicate with students and families from other cultural groups” with an overall class average of 3.63. The latter action was not successful.

2012-13 Best Practices – “Closing the Loop”
SEFR - Special Education, General/Adapted Curriculum (BS)
• Outcome: SPED-BS candidates plan focused, sequenced instruction including method of assessment of student learning.
• Means of Assessment:
  1: SPED Faculty, University supervisors and approved volunteers will be responsible for scoring Senior II's Teacher Performance Assessment using the TPA Planning Rubric 1: Planning Instruction.
  Scoring is based on a clearly defined rubric upon which all scorers are trained.
  2: University faculty will score the edTPA trial run embedded in the spring semester of the junior year (SPED 3100/3200) using the clearly defined rubric for edTPA located in TaskStream.
• Criteria for Success:
  1: 80% of Senior II interns will score a Level 3 or higher on a scale of 1-5.
  2: 80% of students will earn an overall rating of proficient or higher.
  Criteria: The mean response rating for each item will be 3.0 (proficiency) or higher on a scale of 1 to 5.
• 2012-2013 Results:
  1: Results from the 2012/2013 Senior II students’ edTPA Instruction Commentary and Rubric indicated that 83% of students achieved level 3 or better (proficient) on a 5-point scale on the October 2011 version of the SPED edTPA, which evaluated Planning for Developing Content Understandings (Rubric SEIS1).
  2: Results for AY 2012/2013: Fourteen (14) of juniors who are GC majors for whom scores were reported demonstrated proficiency in the edTPA trial run embedded in the spring semester of the junior year (SPED 3100) using the clearly defined rubric for edTPA located in TaskStream. On average, students scored a 2.9 on a 3-point scale.
• Actions Taken (based on analysis of results):
  1: During the 2012/2013 academic year, assignments from SPED 2123, SPED 2109/2209, and 3109 were piloted to integrate more from the edTPA. The revised assignments were not piloted in 3209. In the 2013/2014 academic year, in order to better develop student understanding and ability to elicit and build on student responses related to knowledge, skills, or strategies to provide access for learning, additional reflections and activities in the junior-level courses SPED 3100, 3109, 3200, and 3209 were further revised to incorporate more information from the edTPA Planning Commentary.
  2: Based on the data from the edTPA overall and the observations of students in 3109 and seniors in the edTPA, the assignments for 3200/3209 and 3100/3109 were revised in the 2013-2014 academic year to further integrate the edTPA components and build student understanding and expectations.
• Initiative/Change/Strategy Implemented for Program/Unit improvement: Increase in specificity in the language of the stated goal, assignment/course alignment, criteria, analysis, and data reporting.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
ELMID - Middle Grades Education (BS)
• Program/Unit: Middle Grades Education (BS)
• Description of Changes: Faculty began preparations for the implementation of a new portfolio assessment. It was decided that all MIDG interns would complete a Teaching Performance Assessment (TPA) in Spring 2012. The nationally validated and reliable assessment included 12 new rubrics. Assessment design would be a focus in those rubrics. The adoption of the edTPA necessitated that changes in course assignments be made in order to align program instruction and prepare students for successful completion of the edTPA.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  Faculty discussed the inconsistency with previous portfolio assessments and possible flaws in data collection of those measures, specifically how the data was quantified. Due to the reporting structure for the data, it was not possible to drill down further. Additional data was needed to further direct ongoing programmatic improvement. Faculty made the change from the previous portfolio to the edTPA because it was a more reliable and valid indicator of student performance.
  It would also allow interns from ECU to be compared to other teacher education candidates nationally.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
HACE - Community College Instruction (G-Cert)
• Program/Unit: Community College Instruction (G-Cert)
• Description of Changes: In the CCI certificate, we had an outcome related to leadership, which stated: Prepare community college instructional personnel to be effective leaders in their institutions. The CCI faculty assessed this outcome using an assignment that students completed for one of the core courses in the program: a case study they wrote about an instructional leader. However, it was determined by faculty last year that this wasn't a great measure of leadership (being able to write about a leader doesn't make one a great leader). So the faculty decided to discontinue this measure, and are now looking at more specific components of leadership (focusing more on what the student learns about leadership in the program) that are included in the student's culminating portfolio for the CCI certificate.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  This change was made in an effort to better measure the intended outcome. Including components related to leadership in the student's culminating portfolio for the CCI certificate gives faculty a clearer picture of what the student learned, rather than having the focus be on what they observed from another person. This makes the learning more personal and reflective, and a better measure of the student's learning related to this outcome.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
ILS - Masters of Library Science (MLS)
• Program/Unit: Masters of Library Science (MLS)
• Description of Changes: Increase in specificity in the language of the stated goal, assignment/course alignment, criteria, analysis, and data reporting.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  Changes allow faculty to be consistent in program assessment expectations and provide/demand a level of detail that gives more relevant information for ongoing program assessment.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
LEED - Education Leadership K-12 (EdD)
• Program/Unit: Education Leadership K-12 (EdD)
• Description of Changes: With the redesign of the EdD (K12 concentration), a new outcome, associated means of assessment, and criterion for success were developed and implemented. The new outcome was directly related to the measure of how candidates' doctoral dissertation studies resolved problems of practice in the educational setting.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  These changes are designed to improve the unit performance by collecting data on how the doctoral candidate's research impacted a problem of practice. Additionally, the assessment of the doctoral candidates' skills associated with the dissertation in practice will be used to inform program activities and curriculum.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
LEHE - Reading Education (MAEd)
• Program/Unit: Reading Education (MAEd)
• Description of Changes: The Reading Education faculty (together with the other areas in LEHE) aligned Program Outcomes to be consistent across the College of Education by adding additional outcomes (Evidence of Planning; Impact of Student Learning; Content Knowledge; Diverse Clinical/Field Experience; 21st Century Skills). These additional measures will provide additional information on which to base program area decisions.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  Using information from an in-house assessment audit, the Reading Education faculty (together with the other areas in LEHE) aligned Program Outcomes to be consistent across the College of Education. These additional measures will provide additional starting points for information to be analyzed and on which to base program area decisions. The additional data collected and reported will promote a more consistent baseline for course modifications and thus in turn improve program quality.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
MSITE - Mathematics, Secondary Education (BS)
• Program/Unit: Mathematics, Secondary Education (BS)
• Description of Changes: MATE faculty developed the SLO Evidence of Planning to focus on an area where students reported the need for additional work. MATE faculty collect end-of-program assessment information, which indicates that graduating students report the need for more attention on planning.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  Modifications to the outcomes were made due to the implementation of the edTPA.

Substantive Changes
(For example: All new Outcomes/MOAs or a reorganization of the assessment unit)
SEFR - Special Education, General/Adapted Curriculum, (BS)
• Program/Unit: Special Education, General/Adapted Curriculum, (BS)
• Description of Changes: No substantive changes have been made. As noted in the outcome improvement, the program did create a process for examining assignments for improvement.
• Justification for Changes:
  – Describe how change(s) is/are designed to improve student learning, program quality, or unit performance in response to the previous year’s assessment results.
  The program has had an extensive amount of work with our Task Stream rubrics and process last year and the year before in preparation for our accreditation.

Rubric and Review Process Feedback
• Provide feedback on the rubric and review process.
  – For example:
    • What worked for your ARC
    • What difficulties were encountered?

Rubric and Review Process Feedback
What worked.
• Resources
  – Having a rubric
  – Having samples/exemplars to review prior to rating
• Conversations with ARC colleagues
• Simple rubric submission process
• Multiple reviewers for each report
Difficulties.
• Rubric
  – Limits “Proficient” rating to outcome with 2 Means of Assessment
  – Piecemeal, not holistic review of each outcome
• Time
  – End of semester challenge
  – Limited time to train on rubric and develop inter-rater reliability

Rubric and Review Process Feedback
• Describe your ARC’s process and progress towards addressing comments for “developing” and “acceptable” components received in the 2013 assessment review.

Rubric and Review Process Feedback
• Feedback on ARC actions:
  – Decided to “err on low side” when reviewing report elements
  – Streamlined terminology brought consistency to the unit’s reports
  – Reviewed reports as group to build consensus, but limited time did not allow for full reviews
• Additional issues to address with future reviews:
  – Inherently subjective process is challenging without more time or consensus building opportunities
  – “More time to reflectively analyze only enhances the quality of the product.”
  – “important to have ARC members to serve multiple years to help develop a consistency in the process.”