ppt - Modeling the Internet and the Web

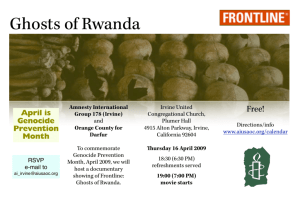

Basic WWW Technologies
2.1 Web Documents
2.2 Resource Identifiers: URI, URL, and URN
2.3 Protocols
2.4 Log Files
2.5 Search Engines

Modeling the Internet and the Web
School of Information and Computer Science
University of California, Irvine

What Is the World Wide Web?
The World Wide Web (web) is a network of information resources. The web relies on three mechanisms to make these resources readily available to the widest possible audience:
1. A uniform naming scheme for locating resources on the web (e.g., URIs).
2. Protocols, for access to named resources over the web (e.g., HTTP).
3. Hypertext, for easy navigation among resources (e.g., HTML).

Internet vs. Web
Internet:
• Internet is a more general term
• Includes the physical aspect of the underlying networks and mechanisms such as email, FTP, HTTP…
Web:
• Associated with information stored on the Internet
• Also refers to a broader class of networks, e.g., the Web of English Literature
Both the Internet and the web are networks

Essential Components of WWW
Resources:
• Conceptual mappings to concrete or abstract entities, which do not change in the short term
• Example: the ICS website (web pages and other kinds of files)
Resource identifiers (hyperlinks):
• Strings of characters that represent generalized addresses and may contain instructions for accessing the identified resource
• Example: http://www.ics.uci.edu is used to identify the ICS homepage
Transfer protocols:
• Conventions that regulate the communication between a browser (web user agent) and a server

Standard Generalized Markup Language (SGML)
• Based on GML (generalized markup language), developed by IBM in the 1960s
• An international standard (ISO 8879:1986) that defines how descriptive markup should be embedded in a document
• Gave birth to the extensible markup language (XML), a W3C recommendation in 1998

SGML Components
SGML documents have three parts:
• Declaration: specifies which characters and delimiters may appear in the application
• DTD / style sheet: defines the syntax of markup constructs
• Document instance: the actual text (with the tags) of the document
More information: http://www.W3.Org/markup/SGML

DTD Example One
<!ELEMENT UL - - (LI)+>
• ELEMENT is a keyword that introduces a new element type, here the unordered list (UL)
• The two hyphens indicate that both the start tag <UL> and the end tag </UL> for this element type are required
• The content model (LI)+ states that the element must contain at least one list item (LI)

DTD Example Two
<!ELEMENT IMG - O EMPTY>
• The element type being declared is IMG
• The hyphen and the following "O" indicate that the end tag can be omitted
• Together with the content model "EMPTY", this is strengthened to the rule that the end tag must be omitted (no closing tag)

HTML Background
• HTML was originally developed by Tim Berners-Lee while at CERN, and popularized by the Mosaic browser developed at NCSA.
• The Web depends on Web page authors and vendors sharing the same conventions for HTML. This has motivated joint work on specifications for HTML.
• HTML standards are organized by W3C: http://www.w3.org/MarkUp/

HTML Functionalities
HTML gives authors the means to:
• Publish online documents with headings, text, tables, lists, photos, etc.
  – Include spreadsheets, video clips, sound clips, and other applications directly in their documents
• Link information via hypertext links, at the click of a button
• Design forms for conducting transactions with remote services, for use in searching for information, making reservations, ordering products, etc.

HTML Versions
• HTML 4.01 (W3C Recommendation, 24 December 1999) is a revision of the HTML 4.0 Recommendation, which was first released on 18 December 1997.
  – HTML 4.01 Specification: http://www.w3.org/TR/1999/REC-html401-19991224/html40.txt
• HTML 4.0 was first released as a W3C Recommendation on 18 December 1997
• HTML 3.2 was W3C's first Recommendation for HTML, which represented the consensus on HTML features for 1996
• HTML 2.0 (RFC 1866) was developed by the IETF's HTML Working Group, which set the standard for core HTML features based upon current practice in 1994.

[Figure: Sample Webpage]

Sample Webpage HTML Structure
<HTML>
<HEAD>
<TITLE>The title of the webpage</TITLE>
</HEAD>
<BODY>
<P>Body of the webpage
</BODY>
</HTML>

HTML Structure
• An HTML document is divided into a head section (here, between <HEAD> and </HEAD>) and a body (here, between <BODY> and </BODY>)
• The title of the document appears in the head (along with other information about the document)
• The content of the document appears in the body.
The body in this example contains just one paragraph, marked up with <P>

HTML Hyperlink
<a href="relations/alumni">alumni</a>
• A link is a connection from one Web resource to another
• It has two ends, called anchors, and a direction
• It starts at the "source" anchor and points to the "destination" anchor, which may be any Web resource (e.g., an image, a video clip, a sound bite, a program, an HTML document)

Resource Identifiers
URI: Uniform Resource Identifiers
• URL: Uniform Resource Locators
• URN: Uniform Resource Names

Introduction to URIs
Every resource available on the Web has an address that may be encoded by a URI.
URIs typically consist of three pieces:
• The naming scheme of the mechanism used to access the resource.
(e.g., HTTP, FTP)
• The name of the machine hosting the resource
• The name of the resource itself, given as a path

URI Example
http://www.w3.org/TR
• There is a document available via the HTTP protocol
• Residing on the machine hosting www.w3.org
• Accessible via the path "/TR"

Protocols
Describe how messages are encoded and exchanged
Different Layering Architectures
• ISO OSI 7-Layer Architecture
• TCP/IP 4-Layer Architecture

[Figure: ISO OSI Layering Architecture]

ISO’s Design Principles
• A layer should be created where a different level of abstraction is needed
• Each layer should perform a well-defined function
• The layer boundaries should be chosen to minimize information flow across the interfaces
• The number of layers should be large enough that distinct functions need not be thrown together in the same layer, and small enough that the architecture does not become unwieldy

[Figure: TCP/IP Layering Architecture]

TCP/IP Layering Architecture
• A simplified model that provides an end-to-end reliable connection
• The network layer
  – Hosts drop packets into this layer, which routes them toward the destination
  – Only promises best-effort delivery (“Try my best”)
• The transport layer
  – Reliable byte-oriented stream

Hypertext Transfer Protocol (HTTP)
• An application-layer protocol, carried over a connection-oriented transport (TCP), used to carry WWW traffic between a browser and a server
• One of the application-layer protocols supported by the Internet
• HTTP communication is established via a TCP connection to the server, by default on port 80

[Figure: GET Method in HTTP]

Domain Name System
DNS (Domain Name System): mapping from domain names to IP addresses
IPv4:
• IPv4 was initially deployed on January 1, 1983 and is still the most commonly used version.
• 32-bit address, written as four decimal numbers separated by dots, ranging from 0.0.0.0 to 255.255.255.255.
IPv6:
• Revision of IPv4 with 128-bit addresses

Top Level Domains (TLD)
Top-level domain names include .com, .edu, .gov, and the ISO 3166 country codes
There are three types of top-level domains:
• Generic domains were created for use by the Internet public
• Country code domains were created to be used by individual countries
• The .arpa (Address and Routing Parameter Area) domain is designated to be used exclusively for Internet-infrastructure purposes

Registrars
• Domain names ending with .aero, .biz, .com, .coop, .info, .museum, .name, .net, .org, or .pro can be registered through many different companies (known as "registrars") that compete with one another
• InterNIC at http://internic.net
• Registrars Directory: http://www.internic.net/regist.html

Server Log Files
Server Transfer Log: transactions between a browser and server are logged
• IP address, the time of the request
• Method of the request (GET, HEAD, POST…)
• Status code, a response from the server
• Size in bytes of the transaction
Referrer Log: where the request originated
Agent Log: browser software making the request (spider)
Error Log: requests that resulted in errors (404)

Server Log Analysis
• Most and least visited web pages
• Entry and exit pages
• Referrals from other sites or search engines
• Which keywords were searched
• How many clicks/page views a page received
• Error reports, like broken links

[Figure: Server Log Analysis]

Search Engines
According to the Pew Internet Project Report (2002), search engines are the most popular way to locate information online
• About 33 million U.S. Internet users query search engines on a typical day.
• More than 80% have used search engines
Search engines are measured by coverage and recency

Coverage
Overlap analysis is used for estimating the size of the indexable web
• W: set of webpages
• Wa, Wb: pages crawled by two independent engines a and b
• P(Wa), P(Wb): probabilities that a page was crawled by a or b
• P(Wa) = |Wa| / |W|
• P(Wb) = |Wb| / |W|

Overlap Analysis
• P(Wa ∩ Wb | Wb) = P(Wa ∩ Wb) / P(Wb) = |Wa ∩ Wb| / |Wb|
• If a and b are independent: P(Wa ∩ Wb) = P(Wa) * P(Wb)
• P(Wa ∩ Wb | Wb) = P(Wa) * P(Wb) / P(Wb) = P(Wa) = |Wa| / |W|

Overlap Analysis
P(Wa) can therefore be estimated from the measured overlap as |Wa ∩ Wb| / |Wb|. Using |W| = |Wa| / P(Wa), the researchers found:
• The web had at least 320 million pages in 1997
• 60% of the web was covered by six major engines
• Maximum coverage of a single engine was 1/3 of the web

How to Improve the Coverage?
• Meta-search engine: dispatch the user query to several engines at the same time, then collect and merge the results into one list for the user.
• Any suggestions?
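
Worked sketch of the overlap analysis above, in Python. It only illustrates the arithmetic |W| ≈ |Wa| · |Wb| / |Wa ∩ Wb| under the slides' independence assumption. The function name `estimate_web_size` and the toy page identifiers are made up for this example and are not data from the study.

```python
def estimate_web_size(crawl_a: set, crawl_b: set) -> float:
    """Estimate |W| from two crawls assumed to be independent samples of W."""
    overlap = len(crawl_a & crawl_b)  # |Wa ∩ Wb|
    if overlap == 0:
        raise ValueError("no overlap between the crawls: estimate undefined")
    # P(Wa) is approximated by |Wa ∩ Wb| / |Wb|, and |W| = |Wa| / P(Wa),
    # which simplifies to |Wa| * |Wb| / |Wa ∩ Wb|.
    return len(crawl_a) * len(crawl_b) / overlap


# Toy data (hypothetical page IDs, not real crawl results).
crawl_a = {f"page{i}" for i in range(0, 60)}   # 60 pages
crawl_b = {f"page{i}" for i in range(40, 90)}  # 50 pages, 20 shared with crawl_a

estimate = estimate_web_size(crawl_a, crawl_b)
print(estimate)  # 60 * 50 / 20 = 150.0
```

If the two engines are positively correlated (both tend to crawl the same popular pages), the overlap is larger than independence would predict and the estimate comes out too small, which is consistent with the slides reporting "at least" 320 million pages.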

Web Crawler
• A crawler is a program that picks up a page and follows all the links on that page
• Crawler = Spider
• Types of crawler:
  – Breadth First
  – Depth First

Breadth First Crawlers
Use the breadth-first search (BFS) algorithm
• Get all links from the starting page, and add them to a queue
• Pick the 1st link from the queue, get all links on that page, and add them to the queue
• Repeat the above step until the queue is empty

[Figure: Breadth First Crawlers]

Depth First Crawlers
Use the depth-first search (DFS) algorithm
• Get the 1st non-visited link from the start page
• Visit the link and get its 1st non-visited link
• Repeat the above step until there are no non-visited links
• Go to the next non-visited link in the previous level and repeat the 2nd step

[Figure: Depth First Crawlers]
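
The two crawl orders described above can be sketched in Python over a small in-memory link graph. This is a minimal illustration, not a real crawler: the `LINKS` dictionary is a hypothetical stand-in for fetching a page and extracting its links, and the `visited` set is an addition the slides do not spell out, included so that the crawl terminates when pages link back to each other.

```python
from collections import deque

# Hypothetical link graph: page -> links in the order they appear on the page.
LINKS = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F"],
    "D": [],
    "E": ["F"],
    "F": [],
}


def crawl_bfs(start, links):
    """Visit pages level by level using a FIFO queue."""
    visited = {start}
    order = []
    queue = deque([start])
    while queue:
        page = queue.popleft()          # pick the 1st link from the queue
        order.append(page)
        for target in links.get(page, []):
            if target not in visited:   # skip pages already queued or visited
                visited.add(target)
                queue.append(target)
    return order


def crawl_dfs(start, links):
    """Follow the first non-visited link as deep as possible, then back up."""
    visited = set()
    order = []
    stack = [start]
    while stack:
        page = stack.pop()
        if page in visited:
            continue
        visited.add(page)
        order.append(page)
        # Push links in reverse so the page's first link is popped first.
        stack.extend(reversed(links.get(page, [])))
    return order


print(crawl_bfs("A", LINKS))  # ['A', 'B', 'C', 'D', 'E', 'F']
print(crawl_dfs("A", LINKS))  # ['A', 'B', 'D', 'E', 'F', 'C']
```

The only structural difference between the two functions is the container: a queue yields breadth-first order, a stack yields depth-first order.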