Conflict-free Replicated Data Types

MARC SHAPIRO, NUNO PREGUIÇA, CARLOS BAQUERO AND MAREK ZAWIRSKI

Presented by: Ron Zisman

2

Motivation

Replication and Consistency: essential features of large distributed systems such as the WWW, P2P, and cloud computing

Lots of replicas

Great for fault tolerance and read latency

× Problematic when updates occur

• Slow synchronization

• Conflicts in case of no synchronization

3

Motivation

We look for an approach that:

supports Replication

guarantees Eventual Consistency

is Fast and Simple

Conflict-free objects = no synchronization whatsoever

Is this practical?

4

Contributions

Theory

Strong Eventual Consistency (SEC)

A solution to the CAP problem

Formal definitions

Two sufficient conditions

Strong equivalence between the two

Incomparable to sequential consistency

Practice

CRDTs = Convergent or Commutative Replicated Data Types

Counters

Set

Directed graph

5

Strong Consistency

Ideal consistency: all replicas know about the update immediately after it executes

Preclude conflicts

Replicas update in the same total order

Any deterministic object

Consensus

Serialization bottleneck

Tolerates < n/2 faults

Correct, but doesn’t scale

16

Eventual Consistency

Reconcile

Update local and propagate

No foreground synch

Eventual, reliable delivery

On conflict

Arbitrate

Roll back

Consensus moved to background

Better performance

×

Still complex

17

Strong Eventual Consistency

Update local and propagate

No synch

Eventual, reliable delivery

No conflict: deterministic outcome of concurrent updates

No consensus: ≤ n-1 faults

Solves the CAP problem

22

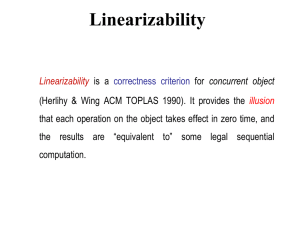

Definition of EC

Eventual delivery: An update delivered at some correct replica is eventually delivered to all correct replicas

Termination: All method executions terminate

Convergence: Correct replicas that have delivered the same updates eventually reach equivalent state

Doesn’t preclude rollbacks and reconciling

23

Definition of SEC

Eventual delivery: An update delivered at some correct replica is eventually delivered to all correct replicas

Termination: All method executions terminate

Strong Convergence: Correct replicas that have delivered the same updates have equivalent state

24

System model

System of non-Byzantine processes interconnected by an asynchronous network

Partition-tolerance and recovery

What are the two simple conditions that guarantee strong convergence?

25

Query

Client sends the query to any of the replicas

Local at source replica

Evaluate synchronously, no side effects

28

State-based approach

An object is a tuple $(S, s^0, q, u, m)$:

payload set $S$

initial state $s^0$

query $q$

update $u$

merge $m$

Local queries, local updates

Send full state; on receive, merge

An update is said to be ‘delivered’ at some replica when it is included in its causal history

Causal history: $C = [c_1, \dots, c_n]$, where each $c_i$ goes through a sequence of states $c_i^0, \dots, c_i^k, \dots$

31

State-based replication

Local at source: $s_1.u(a)$, $s_2.u(b)$, …

Precondition, compute

Update local payload

Convergence

Episodically: send $s_i$ payload

On delivery: merge payloads

Causal history:

on query: $c_i^k = c_i^{k-1}$

on update: $c_i^k = c_i^{k-1} \cup \{u_i^k(a)\}$

on merge: $c_i^k = c_i^{k-1} \cup c_{i'}^{k'}$

34

Semi-lattice

A poset $(S, \le)$ is a join-semilattice if for all $x, y \in S$ a LUB exists

$\forall x, y \in S,\ \exists z:\ x, y \le z \ \land\ \nexists z':\ x, y \le z' < z$

LUB = Least Upper Bound

Associative: $(x \sqcup y) \sqcup z = x \sqcup (y \sqcup z)$

Commutative: $x \sqcup y = y \sqcup x$

Idempotent: $x \sqcup x = x$

Examples:

$(int, \le)$: $x \sqcup y = \max(x, y)$

$(sets, \subseteq)$: $x \sqcup y = x \cup y$

State-based: monotonic semilattice CvRDT

If:

payload type forms a semi-lattice

updates are increasing

merge computes Least Upper Bound

then replicas converge to LUB of last values
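The three join laws above can be checked mechanically on a finite sample of states. The following is a minimal sketch, not part of the original slides: the helper name `is_semilattice_join` and the sample values are illustrative assumptions, and passing the check on a sample is evidence, not a proof.

```python
from itertools import product

def merge_max(x: int, y: int) -> int:
    """Candidate LUB for integers ordered by <=."""
    return max(x, y)

def merge_union(x: frozenset, y: frozenset) -> frozenset:
    """Candidate LUB for sets ordered by inclusion."""
    return x | y

def is_semilattice_join(join, samples) -> bool:
    """Check commutativity, associativity and idempotence on the samples."""
    for x, y, z in product(samples, repeat=3):
        if join(x, y) != join(y, x):
            return False
        if join(join(x, y), z) != join(x, join(y, z)):
            return False
        if join(x, x) != x:
            return False
    return True

ints = [0, 1, 5, 7]
sets = [frozenset(), frozenset({"a"}), frozenset({"a", "b"}), frozenset({"c"})]

max_ok = is_semilattice_join(merge_max, ints)
union_ok = is_semilattice_join(merge_union, sets)
# Addition is commutative and associative but not idempotent,
# so it cannot serve as a state-based merge.
plus_ok = is_semilattice_join(lambda a, b: a + b, ints)
```

Idempotence is what lets a replica receive the same state twice without harm, which is why plain addition fails as a merge function.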

35

36

Operation-based approach

An object is a tuple $(S, s^0, q, t, u, P)$:

payload set $S$

initial state $s^0$

query $q$

prepare-update $t$

effect-update $u$

delivery precondition $P$

prepare-update

Precondition at source

1st phase: at source, synchronous, no side effects

effect-update

Precondition against downstream state (P)

2nd phase: asynchronous, side effects to downstream state

38

Operation-based replication

Local at source

Precondition, compute

Broadcast to all replicas

Eventually, at all replicas:

Downstream precondition

Assign local replica

Causal history:

on query/prepare-update: $c_i^k = c_i^{k-1}$

on effect-update: $c_i^k = c_i^{k-1} \cup \{u_i^k(a)\}$

Op-based: commute CmRDT

If:

Liveness: all replicas execute all operations in delivery order where the downstream precondition (P) is true

Safety: concurrent operations all commute

then replicas converge
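The two-phase structure and the commutativity condition can be illustrated with a very small op-based counter. This is a sketch under stated assumptions: the class `OpCounter` and helper `deliver_all` are hypothetical names, the broadcast layer is assumed to deliver every operation exactly once, and the downstream precondition is trivially true.

```python
from itertools import permutations

class OpCounter:
    """Op-based counter: prepare at the source, effect at every replica."""

    def __init__(self):
        self.value = 0

    def prepare_add(self, amount: int):
        # First phase: no side effects, only builds the message to broadcast.
        return ("add", amount)

    def effect(self, op):
        # Second phase: applied at each replica when the operation is delivered.
        kind, amount = op
        assert kind == "add"
        self.value += amount

def deliver_all(ops):
    """Apply the operations to a fresh replica in the given delivery order."""
    replica = OpCounter()
    for op in ops:
        replica.effect(op)
    return replica.value

source = OpCounter()
ops = [source.prepare_add(3), source.prepare_add(-1), source.prepare_add(10)]

# Every delivery order yields the same final value because additions commute.
results = {deliver_all(order) for order in permutations(ops)}
```

Unlike the state-based merge, `effect` is not idempotent here, so this sketch depends on the exactly-once delivery assumption.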

Monotonic semi-lattice

Commutative

A state-based object can emulate an operation-based object, and vice versa

Use state-based reasoning and then convert to operation-based for better efficiency

41

42

Comparison

State-based

Update ≠ merge operation

Simple data types

State includes preceding updates; no separate historical information

Inefficient if payload is large

File systems (NFS, Dynamo)

Operation-based

Update operation

Higher level, more complex

More powerful, more constraining

Small messages

Collaborative editing (Treedoc), Bayou, PNUTS

State-based or op-based, as convenient

SEC is incomparable to sequential consistency

43

There is a SEC object that is not sequentially-consistent:

Consider a Set CRDT S with operations add(e) and remove(e)

remove(e) → add(e) ⟹ e ∈ S

add(e) ║ remove(e’) ⟹ e ∈ S ∧ e’ ∉ S

add(e) ║ remove(e) ⟹ e ∈ S (suppose add wins)

Consider the following scenario with replicas $p_0$, $p_1$, $p_2$:

1. $p_0$ [add(e); remove(e’)] ║ $p_1$ [add(e’); remove(e)]

2. $p_2$ merges the states from $p_0$ and $p_1$

$p_2$: e ∈ S ∧ e’ ∈ S

The state of replica $p_2$ will never occur in a sequentially-consistent execution (either remove(e) or remove(e’) must be last)
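The claim that no sequentially-consistent execution ends with both elements present can be checked by brute force. The sketch below is an illustration only: it enumerates every interleaving of the two replicas' programs that preserves each program's order and runs it against an ordinary sequential set. The names `interleavings` and `run_sequential` are hypothetical helpers.

```python
def interleavings(a, b):
    """Yield every merge of sequences a and b that preserves each one's order."""
    if not a or not b:
        yield list(a) + list(b)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest

def run_sequential(ops):
    """Execute add/remove operations one after another on a plain set."""
    s = set()
    for kind, elem in ops:
        if kind == "add":
            s.add(elem)
        else:
            s.discard(elem)
    return frozenset(s)

p0 = [("add", "e"), ("remove", "e'")]
p1 = [("add", "e'"), ("remove", "e")]

orders = list(interleavings(p0, p1))
final_states = {run_sequential(order) for order in orders}
both_present = frozenset({"e", "e'"})
```

Since the last operation in any interleaving is one of the two removes, the state reached by $p_2$ is not among `final_states`.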

SEC is incomparable to sequential consistency

∎

44

There is a sequentially-consistent object that is not SEC:

If no crashes occur, a sequentially-consistent object is SEC

Generally, sequential consistency requires consensus to determine the single order of operations. Consensus cannot be solved if n-1 crashes occur (while SEC can tolerate n-1 crashes)

45

Example

CRDTs

Multi-master counter

Observed-Remove Set

Directed Graph

46

Multi-master counter

Increment

Payload: $P = [int, int, \dots]$

Partial order: $x \le y \iff \forall i:\ x[i] \le y[i]$

value() = $\sum_i P[i]$

increment() = $P[MyID]{+}{+}$

merge(x, y) = $x \sqcup y = [\dots, \max(x.P[i], y.P[i]), \dots]$
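The increment-only counter above translates almost line for line into code. This is a minimal sketch assuming a fixed, known number of replicas; the class name `GCounter` and the constructor parameters are illustrative choices, not taken from the slides.

```python
class GCounter:
    """State-based increment-only counter: one slot per replica."""

    def __init__(self, replica_id: int, n_replicas: int):
        self.my_id = replica_id
        self.P = [0] * n_replicas

    def increment(self) -> None:
        # A replica only ever bumps its own slot.
        self.P[self.my_id] += 1

    def value(self) -> int:
        return sum(self.P)

    def leq(self, other: "GCounter") -> bool:
        # Partial order: component-wise comparison.
        return all(a <= b for a, b in zip(self.P, other.P))

    def merge(self, other: "GCounter") -> None:
        # Least upper bound: component-wise maximum.
        self.P = [max(a, b) for a, b in zip(self.P, other.P)]

a, b = GCounter(0, 2), GCounter(1, 2)
a.increment()
a.increment()
b.increment()
a.merge(b)
b.merge(a)
a.merge(b)  # merging again changes nothing (idempotent)
```

Keeping one slot per replica is what makes the component-wise maximum safe: two replicas never write to the same slot, so no increment is lost in a merge.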

47

Multi-master counter

Increment / Decrement

Payload: $P = [int, int, \dots]$, $N = [int, int, \dots]$

Partial order: $x \le y \iff \forall i:\ x.P[i] \le y.P[i] \land x.N[i] \le y.N[i]$

value() = $\sum_i P[i] - \sum_i N[i]$

increment() = $P[MyID]{+}{+}$

decrement() = $N[MyID]{+}{+}$

merge(x, y) = $x \sqcup y = ([\dots, \max(x.P[i], y.P[i]), \dots], [\dots, \max(x.N[i], y.N[i]), \dots])$
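A sketch of the increment/decrement counter follows, again assuming a fixed number of replicas. The class name `PNCounter` is an illustrative choice. Decrements are recorded as increments of a second vector so that both vectors only ever grow.

```python
class PNCounter:
    """State-based counter with increments (P) and decrements (N)."""

    def __init__(self, replica_id: int, n_replicas: int):
        self.my_id = replica_id
        self.P = [0] * n_replicas
        self.N = [0] * n_replicas

    def increment(self) -> None:
        self.P[self.my_id] += 1

    def decrement(self) -> None:
        # Decrementing grows N, so the payload stays monotonic.
        self.N[self.my_id] += 1

    def value(self) -> int:
        return sum(self.P) - sum(self.N)

    def merge(self, other: "PNCounter") -> None:
        # Component-wise maximum on both vectors.
        self.P = [max(a, b) for a, b in zip(self.P, other.P)]
        self.N = [max(a, b) for a, b in zip(self.N, other.N)]

a, b = PNCounter(0, 2), PNCounter(1, 2)
a.increment()
a.increment()
b.decrement()
a.merge(b)
b.merge(a)
```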

48

Set design alternatives

Sequential specification:

{true} add(e) {e ∈ S}

{true} remove(e) {e ∉ S}

Concurrent: {true} add(e) ║ remove(e) {???}

linearizable?

error state?

last writer wins?

add wins?

remove wins?

54

Observed-Remove Set

Payload: added, removed (element, unique token)

add(e) = $A := A \cup \{(e, \alpha)\}$

Remove all unique elements observed: remove(e) = $R := R \cup \{(e, -) \in A\}$

lookup(e) = $\exists (e, -) \in A \setminus R$

merge(S, S’) = $(A \cup A', R \cup R')$
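The payload and operations above can be sketched as a small state-based class. This is an illustration under assumptions: the class name `ORSet` is hypothetical, and random UUIDs stand in for the unique tokens, which the slides do not specify.

```python
import uuid

class ORSet:
    """State-based Observed-Remove Set with tombstones."""

    def __init__(self):
        self.A = set()  # (element, token) pairs ever added
        self.R = set()  # (element, token) pairs that were removed

    def add(self, e) -> None:
        # Each add gets a fresh token (assumption: a random UUID).
        self.A.add((e, uuid.uuid4().hex))

    def remove(self, e) -> None:
        # Tombstone only the pairs this replica has observed.
        self.R |= {(x, t) for (x, t) in self.A if x == e}

    def lookup(self, e) -> bool:
        return any(x == e for (x, _) in self.A - self.R)

    def merge(self, other: "ORSet") -> None:
        self.A |= other.A
        self.R |= other.R

p0, p1 = ORSet(), ORSet()
p0.add("e")
p1.merge(p0)
p0.add("e")      # concurrent with the remove below, fresh token
p1.remove("e")   # removes only the token p1 has observed
p0.merge(p1)
p1.merge(p0)
add_wins = p0.lookup("e") and p1.lookup("e")

p0.remove("e")   # now every token has been observed
p1.merge(p0)
gone = not p0.lookup("e") and not p1.lookup("e")
```

Because a remove only covers observed tokens, a concurrent add survives the merge, which gives the add-wins behaviour.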

57

OR-Set + Snapshot

Read consistent snapshots despite concurrent, incremental updates

Vector clock for each process (global time)

Payload: a set of (event, timestamp) pairs

Snapshot: vector clock value

lookup(e, t): $\exists (e, t, r) \in A:\ T[r] \ge t \ \land\ \nexists (e, t, r, t', r') \in R:\ T[r'] \le t'$

Garbage collection: retain tombstones until not needed

A log entry is discarded as soon as its timestamp is less than all remote vector clocks (delivered to all processes)

58

Sharded OR-Set

Very large objects

Independent shards

Static: hash, Dynamic: consensus

Statically-Sharded CRDT

Each shard is a CRDT

Update: single shard

No cross-object invariants

A combination of independent CRDTs remains a CRDT

Statically-Sharded OR-Set

Combination of smaller OR-Sets

Consistent snapshots: clock across shards

59

Directed Graph – Motivation

Design a web search engine: compute page rank by a directed graph

Efficiency and scalability

Asynchronous processing

Responsiveness

Incremental processing, as fast as each page is crawled

Operations

Find new pages: add vertex

Parse page links: add/remove arc

Add URLs of linked pages to be crawled: add vertex

Deleted pages: remove vertex (lookup masks incident arcs)

Broken links allowed: add arc works even if tail vertex doesn’t exist

60

Graph design alternatives

Graph = (V,A) where A ⊆ V × V

Sequential specification:

{v’,v’’ ∈ V} addArc(v’,v’’) {…}

{∄(v’,v’’) ∈ A} removeVertex(v’) {…}

Concurrent: removeVertex(v) ║ addArc(v’,v’’)

linearizable?

last writer wins?

addArc(v’,v’’) wins? – v’ or v’’ restored if removed

removeVertex(v) wins? - all edges to or from v are removed

61

Directed Graph (op-based)

Payload: OR-Set V (vertices), OR-Set A (arcs)
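The two-set payload, together with the earlier points that lookup masks incident arcs and that adding an arc needs no existing vertex, can be sketched as follows. This is a single-replica illustration only: plain Python sets stand in for the two OR-Sets, and the class and method names are hypothetical.

```python
class Graph:
    """Directed graph over two sets; plain sets stand in for OR-Sets."""

    def __init__(self):
        self.V = set()  # vertices (stand-in for OR-Set V)
        self.A = set()  # arcs as (tail, head) pairs (stand-in for OR-Set A)

    def add_vertex(self, v) -> None:
        self.V.add(v)

    def remove_vertex(self, v) -> None:
        # Incident arcs stay in A; lookup_arc hides them instead.
        self.V.discard(v)

    def add_arc(self, u, v) -> None:
        # No precondition: broken links are allowed.
        self.A.add((u, v))

    def remove_arc(self, u, v) -> None:
        self.A.discard((u, v))

    def lookup_arc(self, u, v) -> bool:
        # An arc is visible only while both endpoints are present.
        return (u, v) in self.A and u in self.V and v in self.V

g = Graph()
g.add_vertex("a")
g.add_arc("a", "b")
broken = g.lookup_arc("a", "b")    # "b" not crawled yet
g.add_vertex("b")
visible = g.lookup_arc("a", "b")
g.remove_vertex("a")
masked = g.lookup_arc("a", "b")    # arc kept but hidden
```

Masking in lookup, rather than deleting arcs on vertex removal, keeps vertex and arc updates independent of each other.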

63

Summary

Principled approach

Strong Eventual Consistency

Two sufficient conditions:

State: monotonic semi-lattice

Operation: commutativity

Useful CRDTs

Multi-master counter, OR-Set, Directed Graph

64

Future Work

Theory

Class of computations accomplished by CRDTs

Complexity classes of CRDTs

Classes of invariants supported by a CRDT

CRDTs and self-stabilization, aggregation, and so on

Practice

Library implementation of CRDTs

Supporting non-critical synchronous operations (committing a state, global reset, etc.)

Sharding

Extras: MV-Register and the Shopping Cart Anomaly

65

MV-Register ≈ LWW-Set Register

Payload = { (value, versionVector) }

assign: overwrite value, vv++

merge: union of every element in each input set that is not dominated by an element in the other input set

A more recent assignment overwrites an older one

Concurrent assignments are merged by union (VC merge)
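The assign and merge rules above can be sketched with version vectors stored as tuples. This is an illustration under assumptions: a fixed number of replicas, a hypothetical class name `MVRegister`, and a helper `dominates` that implements strict version-vector dominance.

```python
def dominates(a, b) -> bool:
    """True if vector a is >= b in every slot and differs from b."""
    return all(x >= y for x, y in zip(a, b)) and a != b

class MVRegister:
    """Multi-value register: payload is a set of (value, version vector)."""

    def __init__(self, replica_id: int, n_replicas: int):
        self.my_id = replica_id
        self.n = n_replicas
        self.payload = set()

    def assign(self, value) -> None:
        # New vector covers everything seen so far, then bumps the own slot.
        vv = [0] * self.n
        for _, v in self.payload:
            vv = [max(a, b) for a, b in zip(vv, v)]
        vv[self.my_id] += 1
        self.payload = {(value, tuple(vv))}

    def values(self) -> set:
        return {value for value, _ in self.payload}

    def merge(self, other: "MVRegister") -> None:
        mine, theirs = self.payload, other.payload
        keep = {(x, v) for (x, v) in mine
                if not any(dominates(w, v) for (_, w) in theirs)}
        keep |= {(y, w) for (y, w) in theirs
                 if not any(dominates(v, w) for (_, v) in mine)}
        self.payload = keep

r0, r1 = MVRegister(0, 2), MVRegister(1, 2)
r0.assign("x")
r1.merge(r0)
r0.assign("y")   # concurrent with the assignment below
r1.assign("z")
r0.merge(r1)
r1.merge(r0)
concurrent = r0.values()

r0.assign("w")   # causally after both "y" and "z"
r1.merge(r0)
latest = r1.values()
```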

Extras: MV-Register and the Shopping Cart Anomaly

Shopping cart anomaly

deleted element reappears

MV-Register does not behave like a set

Assignment is not an alternative to proper add and remove operations

66

67

The problem with eventual consistency jokes is that you can't tell who doesn't get it from who hasn't gotten it.