Strategies towards improving the utility of scientific big data

Evan Bolton, PhD

National Center for Biotechnology Information (NCBI)

National Library of Medicine (NLM)

National Institutes of Health (NIH)

Sep. 4, 2014

http://www.nlm.nih.gov/

U.S. National Center for Biotechnology Information

https://www.ncbi.nlm.nih.gov/

PubChem website

https://pubchem.ncbi.nlm.nih.gov/

PubChem primary goal

… to be an on-line resource providing comprehensive information on the biological activities of substances

where “substance” means any biologically testable entity

Small molecules, RNAs, carbohydrates, peptides, plant extracts, etc.

PubChem data growth over ten years

[Figure panels: Contributors; Chemicals; Protein Targets; Tested Chemicals; Biological Assays; Bioactivity Results]

+280 substance contributors, +60 assay contributors, +150M substances, +50M compounds, +1.0M bioassays, +6.1T protein targets, +2.9M tested substances, +2.0M tested compounds, +225M bioactivity result sets

[M=millions, T=thousands, MLP = Molecular Libraries Program]

CAVEAT!

All data has “errors”

Big data has “big errors”

Hypothetical

If your average data error rate is 1 in 1,000,000, you have 99.9999% data accuracy

If you have one trillion facts (10^12), can you accept one million errors (10^6)?
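The arithmetic behind this hypothetical can be checked with a few lines of Python. This is only a worked example of the numbers on the slide; the function name `expected_errors` is made up for illustration.

```python
from fractions import Fraction


def expected_errors(total_facts: int, error_rate: Fraction) -> Fraction:
    """Expected number of erroneous facts at a given per-fact error rate."""
    return total_facts * error_rate


# Hypothetical from the slide: one error per million facts.
error_rate = Fraction(1, 1_000_000)
accuracy_percent = float((1 - error_rate) * 100)

# One trillion facts (10^12) at that error rate.
errors = expected_errors(10**12, error_rate)

print(f"accuracy: {accuracy_percent:.4f}%")
print(f"expected errors in 10^12 facts: {int(errors):,}")
```

Exact fractions are used so that the result is not blurred by floating-point rounding: a rate of 10^-6 applied to 10^12 facts gives 10^6 expected errors.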

Strategies to mitigate errors?

Manual curation has its limits (accuracy, cost, time)

So .. what do you do?

Error suppression strategies for scientific big data

1. Identify quality {un}known known/unknowns

use to formulate an error suppression strategy

2. Perform data normalization

improves utility by helping to refine identification

3. “Trust but verify”

cross compare authoritative and curated data

4. Consistency filtering

improves precision by removal of outliers

5. Address error feedback loops

use “is”, “can be”, and, if all else fails, “is not” lists

1. Identify quality {un}known known/unknowns

use to formulate an error suppression strategy

“there are known knowns; there are things that we know that we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns, the ones we don't know we don't know”

Donald Rumsfeld, Feb. 2002 news briefing

Image credit: http://en.wikipedia.org/wiki/Donald_Rumsfeld

(+)-Iridodial: Ring Closed vs. Ring Open (Defense chemicals from abdominal glands of 13 rove beetle species of subtribe Staphylinina)

Salt-form drawing variations are common

Chemical meaning of a substance may change upon context

Tautomers and resonance forms of same chemical structure are prolific

2. Perform data normalization

improves utility by helping to refine identification

• Verify chemical content

– Atoms defined/real

– Implicit hydrogen

– Functional group

– Atom valence sanity

• Calculate

– Coordinates

– Properties

– Descriptors

• Normalize representation

– Tautomer invariance

– Aromaticity detection

– Stereochemistry

– Explicit hydrogen

• Detect components

– Isolate covalent units

– Neutralize (+/- proton)

– Reprocess

– Detect unique
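The "Detect components" step above can be illustrated with a deliberately small sketch. It assumes records arrive as SMILES strings, where a dot separates disconnected components, and it only does string handling. The function names are hypothetical and this is not PubChem's pipeline; real normalization (valence checks, tautomers, aromaticity, neutralization) needs a cheminformatics toolkit.

```python
def covalent_units(smiles: str) -> list[str]:
    """Split a SMILES string into its dot-separated components.

    Assumption: no ring-closure digits span the dot, which SMILES
    technically allows but which is rare in practice.
    """
    return [part for part in smiles.split(".") if part]


def largest_unit(smiles: str) -> str:
    """Pick the component with the most letters as a crude size proxy."""
    return max(covalent_units(smiles), key=lambda s: sum(c.isalpha() for c in s))


def unique_units(records: list[str]) -> list[str]:
    """Collect distinct components across records, in first-seen order."""
    seen: dict[str, None] = {}
    for record in records:
        for unit in covalent_units(record):
            seen.setdefault(unit, None)
    return list(seen)


# Illustrative records: two acetate salts and acetic acid.
records = ["CC(=O)[O-].[Na+]", "CC(=O)[O-].[K+]", "CC(=O)O"]

units = unique_units(records)
parent = largest_unit(records[0])
print(units)
print(parent)
```

Note that acetate and acetic acid remain two distinct strings here. Merging them is what the "Neutralize (+/- proton)" step is for, and that is out of reach of plain string comparison.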

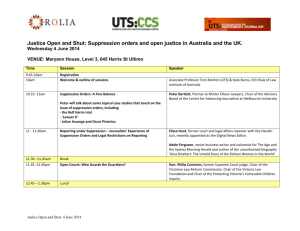

3. “Trust but verify”

cross compare authoritative and curated data

“Доверяй, но проверяй” (doveryai, no proveryai: “trust, but verify”)

Russian proverb used extensively by Ronald Reagan when discussing relations with the Soviet Union, or John Kerry’s more recent adaptation of the phrase when discussing Syria’s chemical weapons disposal: “Verify and verify”

Image credit: http://en.wikipedia.org/wiki/Ronald_Reagan

Image credit: http://en.wikipedia.org/wiki/John_Kerry

Cross concept count %

       CTD     HDO     KEG     MED     NDF     ORD
CTD   100.0    14.3    79.1    40.7    49.7    35.8
HDO    26.0   100.0    38.7    52.4    48.3    26.2
KEG    24.8     6.7   100.0    10.7     6.4    25.2
MED    97.2    68.9    81.6   100.0    93.8    79.6
NDF    30.4    16.3    12.5    24.0   100.0    10.8
ORD    31.9    12.8    29.7       ?       ?   100.0

(The ORD row’s MED and NDF cells hold the values 15.7 and 71.6; the extracted slide text does not preserve which value belongs to which column.)

Cross-reference overlaps between various disease resources: Human Disease Ontology (HDO), NCBI MedGen (MED), CTD MEDIC (CTD), KEGG Disease (KEG), NDF-RT (NDF), and OrphaNet (ORD) using NLM Medical Subject Headings (MeSH) as the basis of comparison.
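A cross-comparison of this kind can be sketched with plain set operations once every resource is mapped to a shared identifier space (MeSH on the slide). The sketch below is an illustration under assumptions: the identifiers are invented, the function name `cross_coverage` is hypothetical, and the direction of the percentage (share of the column resource's concepts found in the row resource) is a guess, since the slide does not state it.

```python
def cross_coverage(resources: dict[str, set[str]]) -> dict[str, dict[str, float]]:
    """Percent of each column resource's concepts also present in the row resource."""
    table: dict[str, dict[str, float]] = {}
    for row, row_ids in resources.items():
        table[row] = {}
        for col, col_ids in resources.items():
            if not col_ids:
                table[row][col] = 0.0
                continue
            shared = len(row_ids & col_ids)
            table[row][col] = round(100.0 * shared / len(col_ids), 1)
    return table


# Invented identifiers standing in for MeSH concept IDs.
resources = {
    "A": {"D001", "D002", "D003", "D004"},
    "B": {"D002", "D003"},
    "C": {"D004", "D005"},
}

table = cross_coverage(resources)
for row, cells in table.items():
    print(row, cells)
```

An asymmetric table like this makes it easy to spot a resource that nearly contains another (a row of high values) and pairs that barely overlap, which are the places where verification effort is best spent.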

4. Consistency filtering

improves precision by removal of outliers

[Figure: Histogram of MNIDs per CID. Series: Original; Many votes, 70%; Many votes, 60%; One Vote, 70%; One Vote, 60%]

[Figure: Histogram of Fate of CID-MNID Pairs. Categories: Many votes, 70%; Many votes, 60%; One Vote, 70%; One Vote, 60%]

Keep consensus, remove the rest

Image credit: http://withfriendship.com/images/c/11229/Accuracy-and-precision-picture.png
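"Keep consensus, remove the rest" can be sketched as a simple voting filter. The slide does not spell out its exact rules, so everything here is an assumption: each assertion of a CID-MNID pair counts as one vote, `threshold` is the share of a CID's votes the leading MNID must reach (compare the 60% and 70% labels), and `min_votes` is a hypothetical way to distinguish "many votes" from "one vote".

```python
from collections import Counter, defaultdict


def consensus_pairs(assertions, threshold=0.7, min_votes=2):
    """Keep, per CID, the MNID that wins a sufficient share of the votes."""
    votes = defaultdict(Counter)
    for cid, mnid in assertions:
        votes[cid][mnid] += 1

    kept = {}
    for cid, counter in votes.items():
        mnid, top = counter.most_common(1)[0]
        total = sum(counter.values())
        # Drop the CID entirely when there are too few votes or no clear winner.
        if total >= min_votes and top / total >= threshold:
            kept[cid] = mnid
    return kept


# Invented votes: CID 1 has a clear winner, CID 2 is split, CID 3 has one vote.
assertions = [
    (1, "A"), (1, "A"), (1, "A"), (1, "B"),
    (2, "C"), (2, "D"),
    (3, "E"),
]

strict = consensus_pairs(assertions, threshold=0.7, min_votes=2)
lenient = consensus_pairs(assertions, threshold=0.6, min_votes=1)
print(strict)
print(lenient)
```

The trade-off is the usual one: a stricter threshold or vote minimum improves precision by discarding outliers, at the cost of dropping some correct but weakly supported pairs.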

5. Address error feedback loops

use “is”, “can be”, and, if all else fails, “is not” lists

Prevent error proliferation at the data source, when possible
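One way to read the "is", "can be", and "is not" lists is as three curated sets that every incoming assertion is screened against before it is allowed to propagate. The slide does not define the lists, so the interpretation, the order of precedence, and all names below are assumptions made for illustration only.

```python
def screen(pair, is_list, can_be_list, is_not_list):
    """Classify an incoming (name, record) assertion against curated lists."""
    if pair in is_not_list:
        return "rejected"    # known-bad association: never let it back in
    if pair in is_list:
        return "confirmed"   # curated as correct
    if pair in can_be_list:
        return "allowed"     # plausible, accepted without being asserted as fact
    return "unreviewed"      # not covered by any list


# Invented identifiers; not real PubChem records.
is_list = {("name-1", "record-1")}
can_be_list = {("name-1", "record-2")}
is_not_list = {("name-1", "record-9")}

incoming = [
    ("name-1", "record-1"),
    ("name-1", "record-2"),
    ("name-1", "record-9"),
    ("name-2", "record-3"),
]

results = [screen(p, is_list, can_be_list, is_not_list) for p in incoming]
print(results)
```

Checking the "is not" list first means a known error stays suppressed even if an upstream source keeps resubmitting it, which is the feedback loop the slide warns about.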

Okay … now what?

… you have cleaned up your data

… but it is huge, unwieldy, unstructured

How can it be made more useful?

Data organization strategies for scientific big data

1. Crosslink and annotate data

provides context and identifies associated concepts

2. Establish similarity schemes

enables identification of related records

3. Associate to concept hierarchies

improves navigation between related records

4. Perform data reduction

suppresses “redundant” information

5. Be succinct

simplifies presentation by hiding details

1. Crosslink and annotate data

provides context and identifies associated concepts

[Diagram: crosslinks among Substance, Compound, Protein, Gene, Pathway, Disease, Drug, Patent, and Publication records, with relationship labels such as “treat”, “cites”, and “ingredient”]

2. Establish similarity schemes

enables identification of related records

Vioxx
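The slide does not name a particular similarity scheme. As one common illustration, the Tanimoto coefficient compares two records by the features they share. The sketch below assumes each record has already been reduced to a set of "on" feature positions (a fingerprint); the fingerprints and record names are invented.

```python
def tanimoto(a: set[int], b: set[int]) -> float:
    """Shared features divided by all features present in either record."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def neighbors(query: set[int], library: dict[str, set[int]], cutoff: float) -> list[str]:
    """Names of library records at or above the similarity cutoff, best first."""
    scored = [(tanimoto(query, fp), name) for name, fp in library.items()]
    return [name for score, name in sorted(scored, reverse=True) if score >= cutoff]


# Invented fingerprints: sets of feature positions that are switched on.
query = {1, 2, 3, 4}
library = {
    "record-x": {2, 3, 4, 5},
    "record-y": {1, 2, 3, 4, 6},
    "record-z": {7, 8, 9},
}

score_x = tanimoto(query, library["record-x"])
hits = neighbors(query, library, cutoff=0.5)
print(score_x)
print(hits)
```

Whatever the scheme, the point is the same: a fixed, computable notion of "related" lets a user start from one record and reach its neighbors without knowing their identifiers in advance.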

3. Associate to concept hierarchies

improves navigation between related records

[Diagram: records (chemical, protein, gene, patent, publication, pathway, …) are matched to concepts in an independent hierarchy, yielding organized records]

4. Perform data reduction

suppresses “redundant” information

5. Be succinct

simplifies presentation by hiding details

“subject-predicate-object”

“atorvastatin may treat hypercholesterolemia”

subject: atorvastatin; predicate: may treat; object: hypercholesterolemia

Provenance information

Evidence citation (PMID)

From whom? (Data Source)
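The subject-predicate-object form with attached provenance maps naturally onto a small record type. The sketch below is illustrative only: the class name, field names, and the placeholder source and citation values are assumptions, not a PubChem data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    subject: str
    predicate: str
    obj: str
    data_source: str                 # "From whom?"
    pmids: tuple[str, ...] = ()      # evidence citations

    def sentence(self) -> str:
        """Succinct view: the assertion alone, with provenance hidden."""
        return f"{self.subject} {self.predicate} {self.obj}"

    def detail(self) -> str:
        """Full view: the assertion plus who said it and on what evidence."""
        cites = ", ".join(self.pmids) if self.pmids else "no citation"
        return f"{self.sentence()} [source: {self.data_source}; evidence: {cites}]"


# Placeholder provenance values; not real identifiers.
stmt = Statement(
    subject="atorvastatin",
    predicate="may treat",
    obj="hypercholesterolemia",
    data_source="ExampleSource",
    pmids=("PMID-PLACEHOLDER",),
)

print(stmt.sentence())
print(stmt.detail())
```

Keeping the short sentence and the provenance in one record lets the presentation stay succinct while the details remain one step away for anyone who needs to check them.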

Concluding remarks

Scientific “big data” …

… contains an amazing amount of information

… provides opportunities to make discoveries

… benefits from strategies to massage it

PubChem is doing its part …

… making chemical substance data broadly accessible

… cross-integrating it to key scientific resources

… suppressing errors and their propagation

… organizing the data and making it available

https://pubchem.ncbi.nlm.nih.gov

PubChem Crew …

Steve Bryant

Tiejun Chen

Siqian He

Gang Fu

Sunghwan Kim

Lewis Geer

Ben Shoemaker

Renata Geer

Paul Thiessen

Asta Gindulyte

Jiyao Wang

Volker Hahnke

Yanli Wang

Lianyi Han

Bo Yu

Jane He

Jian Zhang

Special thanks to the NCBI Help Desk, especially Rana Morris

Any questions?

If you think of one later, email me:

bolton@ncbi.nlm.nih.gov