Midwest SPR - University of Michigan


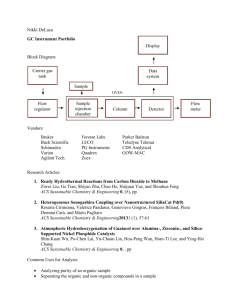

Creation of the Simulator Value Index Tool
(Adapted from workshop on 4.21.14 presented by American College of Surgeons Accreditation Education Institutes, Technologies & Simulation Committee)

Deborah Rooney PhD
James Cooke MD
Yuri Millo MD
David Hananel

MEDICAL SCHOOL
UNIVERSITY OF MICHIGAN

Disclosures
o David Hananel, No Disclosures
o Yuri Millo, No Disclosures
o James Cooke, No Disclosures
o Deborah Rooney, No Disclosures

Overview of Main Topics
o Introduction of project
o Overview of 2014 IMSH Survey results
o Summary of 2014 ACS Consortium results
o Working meeting to refine the AVI algorithm
o Apply AVI algorithm in group exercise
o Discuss next steps

Introduction: How it all started
o ACS AEI, Technologies and Simulation Committee
  o Guidelines for Simulation Development (Millo, George, Seymour and Smith)
o University of Michigan
  o Need to support faculty in sim purchase/decision-making process (Cooke)
o Discourse
  o Definition of “value”
  o Differences across stakeholder role (institution, administration, clinician, educator, researcher...)

Introduction: How it all started
o Reached consensus on factors used when considering a simulator purchase
o Survey 1
  o IMSH general membership, N = 2800
  o January, 2014
o Workshop 1, n = 16
  o IMSH, January, 2014
o Survey 2
  o ACS AEI Consortium membership, N = 455
  o March, 2014
o Workshop 2, n = ?
  o ACS AEI, March, 2014

Introduction: The Instrument
o Began with 31-item survey accessed via www (Qualtrics)
o 4-point rating scale
  o (1 = not considered/not important, 4 = critical to me when I consider a simulator purchase)
o 6 Domains
  o (Cost, Impact, Manufacturer, Utility, Assessment, Environment/Ergonomics)
o Demographics
  o Country/Institution
  o Stakeholder role
  o Involvement
  o Follow-up

IMSH Survey Sample: 67 institutions x 12 Countries
[Map of respondents by country. Labelled counts: Czech Republic 1, Chile 1, Peru 1, Grenada 1, Singapore 2, New Zealand 3]
o 95 total respondents, 72 individuals completed survey
o Approximately 2+% of IMSH membership (2,800)
o 7 undesignated/16 incomplete

IMSH Survey Sample: 44 institutions x 22 States/US
[Map of US respondents by state. Labelled counts: Massachusetts 1, Rhode Island 3, New Jersey 1]
o 50 participants from US

IMSH Survey Sample: Institution Affiliation
[Bar chart. Values: 46 (58%), 28 (35%), 26 (33%), 20 (25%), 6 (8%), 4 (5%), 1 (1%); category labels not recovered]
o n = 79, 1 undesignated

IMSH Survey Results: Rating Differences by Institutional Affiliation
o Cost
  o Commercial Skills Centers (CSCs) rated C1 (Purchase cost) lower than each of the other institutions, p = .001.
o Manufacturer
  o CSCs rated M1 (Reputation of manufacturer) lower than each of the other institutions, p = .001.
o Utility
  o CSCs rated U3 (Ease of data management) and U11 (Portability) lower than each of the other institutions, p = .001.
o Ergonomics
  o Medical Schools rated item E2 (Ergonomic risk factor) much higher than other institutions, p = .05.
  o CSCs rated E3 (Ease of ergonomic setup) lower than each of the other institutions, p = .001.

IMSH Survey Sample: Stakeholder Role
[Pie chart. Values: 7 (9%), 8 (10%), 31 (39%), 14 (18%), 19 (24%); categories: Administration, Clinician, Institution, Technician, Educator; value-to-category pairing not recoverable]
o n = 79, 1 undesignated

IMSH Survey Results: Rating Differences by Stakeholder Role
o Cost
  o Clinicians rated C2 (Cost of warranty) lower than the other stakeholders, p = .048.
o Utility
  o Clinicians rated U11 (Portability of simulator) higher than other stakeholders, p = .037.

IMSH Survey Sample: Involvement in Decision
[Pie chart. Values: 4 (5%), 37 (46%), 2 (3%), 37 (46%); categories: Contribute to Decision, Lead/Responsible, Approval Process, Not Involved; value-to-category pairing not recoverable]
o n = 80

IMSH Survey Results Summary:
o Although there are no differences across level of involvement,
o There are different considerations during simulator purchasing process across:
  o Country
  o Institutional affiliation (commercial skills center may have unique needs)
  o Stakeholder role (Clinicians may have unique needs)
o Keeping this in mind, let’s review the top factors considered

The SVI Factors: Top 15+1 Factors Ranked

| Average | Factor (survey item number, item description) | Domain |
| --- | --- | --- |
| 3.8 | 21- Technical stability/reliability of simulator | Utility |
| 3.7 | 10- Customer service | Manufacturer |
| 3.4 | 16- Ease of use for instructor/administrator | Utility |
| 3.4 | 19- Ease of use for learner | Utility |
| 3.3 | 6- Relevance of metrics to real life/clinical setting | Impact |
| 3.2 | 11- Ease of delivery and installation, orientation to sim | Manufacturer |
| 3.2 | 26- Reproducibility of task/scenario/curriculum | Assmnt/Res |
| 3.2 | 1- Purchase cost of simulator | Cost |
| 3.2 | 9- Reputation of manufacturer | Manufacturer |
| 3.1 | 8- Scalability | Impact |
| 3.1 | 20- Quality of tutoring/feedback from sim to learners | Utility |
| 3.1 | 7- Number of learners impacted | Impact |
| 3.0 | 2- Cost of warranty | Cost |
| 3.0 | 3- Cost of maintenance | Cost |
| 3.0 | 17- Ease of configuration/authoring sim's learning management system | Utility |
| - | Physical durability | Utility |

ACS Consortium Survey: Introduction
o Identical Survey items, ratings
o Added durability of simulator question
o 32-item survey accessed via www (Qualtrics)
o 4-point rating scale
  o (1 = not considered/not important, 4 = critical to me when I consider a simulator purchase)
o 6 Domains
  o (Cost, Impact, Manufacturer, Utility, Assessment, Environment/Ergonomics)
o Demographics
  o Country/Institution
  o Stakeholder role
  o Involvement
  o Follow-up

ACS Survey Sample: 41 institutions x 7 Countries
[Map of respondents by country. Labelled counts: Sweden 1, UK 1, France 1, Italy 1, Greece 1]
o 65 total respondents, 54 individuals completed survey
o Approximately 12% of ACS membership (455)
o 2 undesignated

ACS Survey Sample: 36 institutions x 17 States/US
[Map of US respondents by state. Labelled counts: Massachusetts 8, Rhode Island 1, Delaware 1, Maryland 1]
o 49 participants from US
o 47 indicated institution

ACS Survey Sample: Institution Affiliation
[Bar chart. Values: 37 (67%), 28 (51%), 24 (44%), 16 (29%), 2 (4%), 0 (0%), 0 (0%); categories: Commercial Skills Center, Industry, Academic (University) Hospital, Teaching Hospital, Medical School, Health Care Delivery System, Government or Military Center; value-to-category pairing not recoverable]
o n = 55

ACS Survey Sample: Stakeholder Role
[Pie chart. Values: 3 (<6%), 2 (<4%), 1 (<2%), 1 (<2%), 13 (23%), 9 (16%), 27 (48%); categories: Institution, Administration, Clinician, Technician, Educator, Researcher, Coordinator; value-to-category pairing not recoverable]
o n = 56

ACS Survey Sample: Involvement in Decision
[Pie chart. Values: 2 (3%), 29 (52%), 25 (45%); categories: Contribute to Decision, Lead/Responsible, Approval Process, Not Involved; value-to-category pairing not recoverable]
o n = 56

ACS Survey Results: Summary
o Although there are no differences across:
  o Institution
  o Stakeholder role
o There are different considerations during simulator purchasing process across:
  o Level of involvement
    o (Self-reported “Responsible” folks are more concerned about number of learners impacted and scalability)

ACS Survey Results: Summary
But are there differences across IMSH and ACS membership?

Survey Results: IMSH v. ACS
[Chart: average observation (axis 0 to 4.5) for 1. IMSH and 2. ACS on items 4 (C2), 7 (I2), 11 (M3), 15 (U4), 22 (U11)]

Survey Results: Rating Differences by Conference
o Cost
  o ACS members rated C2 (Cost of warranty) higher than the IMSH members, bias = .40, p = .04.
o Impact
  o ACS members rated I2 (Number of learners) higher than other stakeholders, bias = .53, p = .01.
o Utility
  o ACS members rated U4 (Ease of report generation) higher than the IMSH members, bias = .43, p = .02.
  o ACS members rated U11 (Portability of simulator) higher than other stakeholders, bias = .48, p = .01.
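
When comparing the two conferences, it helps to keep in mind how differently the samples cover their memberships. The short Python sketch below simply redoes the response-rate arithmetic from the completed-survey counts and membership sizes quoted in the survey sample slides (72 of 2,800 for IMSH, 54 of 455 for ACS). The function and variable names are illustrative assumptions, not part of the SVI tool.

```python
def response_rate(completed: int, membership: int) -> float:
    """Return completed surveys as a percentage of total membership."""
    if membership <= 0:
        raise ValueError("membership must be positive")
    return 100.0 * completed / membership


# Counts quoted in the IMSH and ACS survey sample slides.
SURVEYS = {
    "IMSH": {"completed": 72, "membership": 2800},
    "ACS": {"completed": 54, "membership": 455},
}

# Percentage of each membership that completed the survey.
rates = {name: response_rate(**counts) for name, counts in SURVEYS.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}% of membership completed the survey")
```

The results are in line with the "approximately 2+%" and "approximately 12%" figures on the slides.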
The SVI Factors: Top 15+1 Factors Ranked

| Factor | IMSH Avg (Rank) | ACS Avg (Rank) | P-value |
| --- | --- | --- | --- |
| 21- Technical stability/reliability of simulator | 3.8 (1) | 3.7 (1) | n.s |
| 10- Customer service | 3.7 (2) | 3.5 (2) | n.s |
| 16- Ease of use for instructor/administrator | 3.4 (3) | 3.3 (7) | n.s |
| 19- Ease of use for learner | 3.4 (4) | 3.4 (4) | n.s |
| 6- Relevance of metrics to real life/clinical setting | 3.3 (5) | 3.3 (6) | n.s |
| 11- Ease of delivery and installation, orientation to sim | 3.2 (6) | 2.7 (21) | 0.02 |
| 26/27- Reproducibility of task/scenario/curriculum | 3.2 (7) | 3.3 (8) | n.s |
| 1- Purchase cost of simulator | 3.2 (8) | 3.3 (5) | n.s |
| 4- Cost of disposables | 2.7 (22) | 3.0 (13) | n.s |
| 9- Reputation of manufacturer | 3.2 (9) | 2.8 (20) | n.s |
| 8- Scalability | 3.1 (10) | 3.2 (10) | n.s |
| 20- Quality of tutoring/feedback from sim to learners | 3.1 (11) | 3.1 (11) | n.s |
| 7- Number of learners impacted | 3.1 (12) | 3.4 (3) | 0.02 |
| 2- Cost of warranty | 3.0 (13) | 3.0 (14) | n.s |
| 3- Cost of maintenance | 3.0 (14) | 3.1 (12) | n.s |
| 17- Ease of configuration/authoring sim's learning management system | 3.0 (15) | 2.8 (17) | n.s |
| -/24- Physical durability | - | 3.2 (9) | n.s |

Applying the SVI Tool
o General impressions?
  o What stood out?
o What worked well?
o What could have gone better?
o Any surprises?
o Usefulness?
  o How might you use the SVI Tool at your institution?
o Please complete the questions on “Feedback” Tab on the SVI Worksheet

Thank you: Our Contact Information
o Deb Rooney, University of Michigan, dmrooney@med.umich.edu
o Jim Cooke, University of Michigan, cookej@med.umich.edu
o David Hananel, SimPORTAL & CREST, University of Minnesota Medical School, dhananel@umn.edu
o Yuri Millo, Millo Group, yuri.millo@millo-group.com
o Olivier Petinaux, ACS American College of Surgeons, Division of Education, opetinaux@facs.org