SIP Overload Control

Report from the IETF Design Team

Volker Hilt

volkerh@bell-labs.com

Bell-Labs/Alcatel-Lucent

All Rights Reserved © Alcatel-Lucent 2006

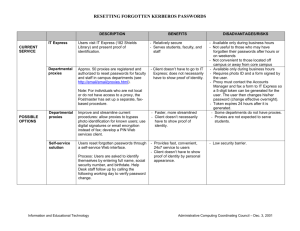

Overview

IETF SIP Overload Control Design Team

Simulation Results for SIP

SIP Overload Control

Conclusion

Slide 2 | Volker Hilt | March 2008

IETF SIP Overload Control Design Team

Team was founded at the beginning of 2007 by the SIPPING WG

Members

Eric Noel, Carolyn Johnson (AT&T Labs)

Volker Hilt, Indra Widjaja (Bell-Labs/Alcatel-Lucent)

Charles Shen, Henning Schulzrinne (Columbia University)

Ping Wu*, Tadeusz Drwiega*, Mary Barnes (Nortel)

Jonathan Rosenberg (Cisco)

Nick Stewart (British Telecom)

Developed four independent simulation tools

AT&T, Bell-Labs, Columbia, Nortel*

Simulation results for SIP

Proposals and initial results for overload-controlled SIP.

* Left design team in fall 2007


Simulation Results

Setup and Assumptions

SIP server topology consisting of UAs, edge proxies and core proxies.

UAs are connected to edge proxies.

Each UA creates a single call (INVITE followed by BYE transaction).

Poisson arrival rate.

Load is equally distributed across edge proxies.

Edge proxies forward requests to a core proxy.

Edge proxies reject a request if one/both core proxies are overloaded.

To break up the problem domain we assume edge proxies have infinite capacity.

Core proxies forward calls to the edge proxy of the destination.

Capacity: 500 messages per second at a constant rate.

[Figure: Server Topology]

All proxies are modeled as queuing systems.

Queue size: 500 messages

Media path congestion is not considered.
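The proxy model above can be sketched as a single finite queue with a constant service rate. This is a minimal illustration and not one of the design team's simulators: the function name `simulate_proxy`, the 10-second duration and the seed are assumptions, and it models plain message arrivals only (no SIP transactions, retransmissions or 503 handling).

```python
import random
from collections import deque


def simulate_proxy(arrival_rate, service_rate=500.0, queue_size=500,
                   duration=10.0, seed=1):
    """Finite FIFO queue with Poisson arrivals and constant service time.

    Returns (accepted, dropped) message counts. All parameter names are
    hypothetical; defaults mirror the slide (500 msg/s, 500 messages).
    """
    rng = random.Random(seed)
    service_time = 1.0 / service_rate
    departures = deque()      # departure times of messages still in the proxy
    last_departure = 0.0
    accepted = dropped = 0

    now = rng.expovariate(arrival_rate)        # first Poisson arrival
    while now < duration:
        # Remove messages that have finished service before this arrival.
        while departures and departures[0] <= now:
            departures.popleft()
        if len(departures) >= queue_size:
            dropped += 1                       # buffer full: message is dropped
        else:
            # FIFO with constant service time: start when the server is free.
            last_departure = max(now, last_departure) + service_time
            departures.append(last_departure)
            accepted += 1
        now += rng.expovariate(arrival_rate)   # next Poisson arrival
    return accepted, dropped
```

Below capacity the queue never fills; above capacity the excess is dropped once the 500-message buffer is full.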

[Figure: Proxy Model]

Simulation Results

Client-to-Server vs. Server-to-Server Communication

Server-to-Server Communication

A server sends a stream of SIP requests to other servers.

SIP request streams between servers are dynamic.

Load between servers can be reduced gradually by rejecting/retrying some of the requests.

Overload control can use feedback to request that an upstream server reduces traffic to a desired amount.

Client-to-Server Communication

UAs typically only initiate a single request at a time.

A UA can be told to wait a certain time before resending the request.

Feedback-based overload control does not prevent overload in the server.

Problem: a large number of UAs can cause overload even if all UAs are told to back off.

[Figure: Server-to-Server Communication]

[Figure: UA-to-Server Communication]

Simulation Results

Scenario 1: No Overload Control

Assumptions

Proxies do not use any overload control.

When the input buffer of a proxy is filled up, messages are dropped.

Requests that failed at one of the core proxies are not retried.

Results

Congestion collapse!

The number of INVITE transactions completed (i.e., calls set up) drops to zero.

[Figure: Sim1. Carried load (cps) vs. offered load (cps); series: AT&T Sim1 gput, CU Sim1 gput, BL Sim1 gput]

Simulation Results

Scenario 2: 503 (Service Unavailable) – Reject Requests

Assumptions

Proxies use 503 (Service Unavailable) responses to reject requests during overload.

Watermark-based (Bang-Bang) overload control algorithm:

Enter overload state when queue length reaches high watermark (400 messages)

Enter normal state when queue length drops below low watermark (300 messages)

When in overload state a proxy rejects all incoming requests with 503 responses.

Edge proxies do not retry requests that are rejected.
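The watermark (bang-bang) rule above can be sketched as a two-state controller with hysteresis. The class and method names are hypothetical; only the 400/300 thresholds and the reject-everything behaviour come from the slide.

```python
class WatermarkControl:
    """Bang-bang overload state driven by queue length (hypothetical names)."""

    def __init__(self, high=400, low=300):
        self.high = high          # enter overload at this queue length
        self.low = low            # leave overload below this queue length
        self.overloaded = False

    def update(self, queue_length):
        """Update and return the overload state for the current queue length."""
        if not self.overloaded and queue_length >= self.high:
            self.overloaded = True
        elif self.overloaded and queue_length < self.low:
            self.overloaded = False
        return self.overloaded

    def response_for(self, queue_length):
        """Return 503 if the incoming request is rejected, else None."""
        return 503 if self.update(queue_length) else None
```

Between the two watermarks the controller keeps its previous state, which is what distinguishes it from a single-threshold rule.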

Results

Congestion collapse!

Provides little or no improvement compared to no overload control.

Note: Performance can be improved by using other control algorithms.

[Figure: Stateful 503. Carried load (cps) vs. offered load (cps); series: AT&T Sim2 gput, CU Sim2 gput, BL Sim2 gput]

Simulation Results

Scenario 3: 503 (Service Unavailable) - Retry Requests

Assumptions

Same assumptions as in Scenario 2.

But: edge proxies retry all requests that have been rejected by one core proxy at the second core proxy.

Requests are rejected only if they fail at both core proxies.
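The retry rule above, and why it adds load, can be sketched as follows. The function `forward_with_retry` and its arguments are hypothetical; each core proxy is represented by a callable that returns True if it accepts the request.

```python
def forward_with_retry(core_accepts, retry=True):
    """Try core proxies in order; return (status, attempts).

    core_accepts: list of zero-argument callables, one per core proxy,
    returning True if that proxy accepts the request (assumed interface).
    retry=False corresponds to Scenario 2, retry=True to Scenario 3.
    """
    attempts = 0
    for accepts in core_accepts:
        attempts += 1                 # every attempt is extra work for a core proxy
        if accepts():
            return 200, attempts
        if not retry:
            break                     # Scenario 2: give up after the first 503
    return 503, attempts
```

When both core proxies are overloaded, each rejected call costs two attempts instead of one, which is the increased load caused by retries.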

Results

Congestion collapse!

Retrying requests decreases goodput.

Increased load caused by retries.

[Figure: Stateful 503. Carried load (cps) vs. offered load (cps); series: AT&T Sim3 gput, CU Sim3 gput, BL Sim3 gput]

SIP Overload Control

Mechanisms and Algorithms

The IETF SIP overload design team has started to investigate solutions for more effective SIP overload control mechanisms.

Current simulations of SIP overload control mechanisms are focusing on:

Server-to-server communication.

Feedback channel between core and edge proxy (hop-by-hop).

Four different overload control mechanisms:

on/off control,

rate-based control,

loss-based control,

window-based control.

Simulation results are available for these four mechanisms and for different control algorithm proposals.

Contributed by AT&T Labs, Bell-Labs/Alcatel-Lucent, Columbia University

Initial simulation results.


SIP Overload Control

Hop-by-hop vs. end-to-end

SIP requests for the same source/destination pair can travel along different paths, depending on provider policies, services invoked, forwarding rules, request forking, load balancing, etc.

A SIP proxy cannot make assumptions about which downstream proxies will be on the path of a SIP request.

Hop-by-hop overload control

Server provides overload control feedback to its direct upstream neighbor.

No knowledge about routing policies of neighbors needed.

Neighbor processes feedback and rejects/retries excess requests if needed.

[Figure: Hop-by-hop (nodes A, B, C, D, x)]

End-to-end overload control

Feedback from all servers on all paths between a source and a destination needs to be considered.

A server needs to track the load of all servers a request may traverse on its way to the target.

Complex and challenging since requests for the same destination may travel along very different paths.

May be applicable in limited, tightly controlled environments.

[Figure: End-to-end (nodes A, B, C, D, x)]

SIP Overload Control

AT&T Labs Simulation Results

[Figure: Stateless 503. Carried load (cps) vs. offered load (cps); series: Theoretical, AT&T Labs Sim3 no control gput, AT&T Labs Sim3 RetryAfter algo1 gput, AT&T Labs Sim3 Rate algo1 gput]

Every sampling interval, Core Proxies estimate optimal control parameters such that the queueing delay stays within a pre-defined target delay. Core Proxies rely solely on measured offered load and measured internal queueing delay (no signaling from Edge Proxies to Core Proxies).

On/off control builds upon the existing SIP 503 Retry-After capability. Each control interval, Core Proxies estimate the optimal Retry-After timer value and share it with Edge Proxies within the 503 messages.
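On the Edge Proxy side, on/off control amounts to pausing traffic towards a Core Proxy for the Retry-After duration. A minimal sketch, assuming a hypothetical `OnOffGate` class and an explicit `now` timestamp in seconds; how the Core Proxy computes the timer value is not shown here.

```python
class OnOffGate:
    """Tracks, per core proxy, until when no requests should be sent.

    Hypothetical helper; names and the explicit `now` argument are
    assumptions made for illustration.
    """

    def __init__(self):
        self.blocked_until = {}       # core proxy id -> time sending may resume

    def on_503(self, core, retry_after, now):
        """Record a 503 whose Retry-After value is `retry_after` seconds."""
        self.blocked_until[core] = now + retry_after

    def may_send(self, core, now):
        """True if the 'off' period for this core proxy has expired."""
        return now >= self.blocked_until.get(core, 0.0)
```

Usage: call `on_503("core1", 5, now)` when a 503 with Retry-After: 5 arrives, and check `may_send("core1", now)` before forwarding each request.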

In rate-based control, each control interval, Core Proxies estimate the optimal controlled load and active sources, then share them with Edge Proxies either through dedicated signaling or as overhead in response messages. Edge Proxies execute a percent-blocking throttling algorithm.
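Percent blocking at the Edge Proxy can be sketched as dropping a random fraction of requests so that the forwarded rate matches the allowed rate. The names below are hypothetical, and deriving the blocking fraction as 1 - allowed/offered is an assumption made for illustration, not the AT&T algorithm.

```python
import random


def blocking_fraction(offered_rate, allowed_rate):
    """Fraction of requests to block so that offered * (1 - f) <= allowed."""
    if offered_rate <= 0:
        return 0.0
    return max(0.0, 1.0 - allowed_rate / offered_rate)


class PercentBlockingThrottle:
    """Randomly blocks a fixed fraction of requests (hypothetical helper)."""

    def __init__(self, seed=None):
        self.fraction = 0.0
        self.rng = random.Random(seed)

    def set_rates(self, offered_rate, allowed_rate):
        """Update the blocking fraction from measured and allowed rates."""
        self.fraction = blocking_fraction(offered_rate, allowed_rate)

    def admit(self):
        """True if the next request is forwarded, False if it is blocked."""
        return self.rng.random() >= self.fraction
```

For example, an offered rate of 200 cps against an allowed rate of 150 cps gives a blocking fraction of 0.25.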


SIP Overload Control

Bell-Labs/Alcatel-Lucent Simulation Results

Loss-based overload control.

Feedback loop between receiver (core proxy) and sender (edge proxy).

Feedback in SIP responses.

Receiver-driven control algorithm.

Estimates current processor utilization.

Compares to target processor utilization.

Multiplicative increase and decrease of loss rate to reach target utilization.

Sender adjusts the load it sends to receiver based on the feedback received using percent-blocking.

Overload control algorithms:

Occupancy algorithm (OCC), Acceptance Rate/Occupancy algorithm (ARO)
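The receiver-side step (compare utilization to a target, then scale the loss rate multiplicatively) can be sketched as below. This is not the OCC or ARO algorithm; the function name, the 0.9 target, the 1.2 factor and the 0.01 floor are all assumptions chosen for illustration.

```python
def update_loss_rate(loss_rate, utilization, target=0.9, factor=1.2,
                     min_loss=0.01):
    """Return the new loss rate to feed back to the sender.

    loss_rate and utilization are fractions in [0, 1]. All defaults are
    hypothetical values, not taken from the simulations.
    """
    if utilization > target:
        # Overloaded: multiplicative increase, starting from a small floor
        # so that a loss rate of zero can grow.
        new_rate = max(loss_rate, min_loss) * factor
    else:
        # At or below target: multiplicative decrease, snapping to zero
        # once the rate falls below the floor.
        new_rate = loss_rate / factor
        if new_rate < min_loss:
            new_rate = 0.0
    return min(new_rate, 1.0)
```

The sender would then apply the returned loss rate as its percent-blocking fraction.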


SIP Overload Control

Columbia University Simulation Results

Window-based overload control

SIP session as control unit, dynamically estimated from processed SIP messages

Receiver (Core Proxy in the scenario)

decreases window on session arrival

dynamically computes available window

splits the available window and feeds it back to active Senders

Feedback piggybacked in responses/requests

Sender (Edge Proxy in the scenario)

forwards a session only if window slot available

[Figure: CU Overload Control Results. Goodput (cps) vs. load (cps); series: Sim1, Sim2, Sim3, CU-WIN-I, CU-WIN-II, CU-WIN-III, Theoretical]

Three different window adaptation algorithms work equally well in steady state

CU-WIN-I: keep current estimated sessions below total allowed sessions given target delay

CU-WIN-II: open up the window after a new session is processed

CU-WIN-III: discrete version of CU-WIN-I, divided into control intervals
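The window mechanics common to these schemes (the sender consumes a slot per session, the receiver splits its available window among active senders) can be sketched as follows. This does not implement CU-WIN-I, II or III; the names and the even split with remainder are assumptions.

```python
def split_window(available, senders):
    """Split the receiver's available window evenly among active senders.

    Remainder slots go to the first senders in the list (an assumption).
    Returns a dict mapping sender id to granted slots.
    """
    if not senders:
        return {}
    base, extra = divmod(available, len(senders))
    return {s: base + (1 if i < extra else 0) for i, s in enumerate(senders)}


class WindowSender:
    """Edge-proxy side: forward a session only if a window slot is available."""

    def __init__(self, window=0):
        self.window = window

    def on_feedback(self, slots):
        """Replace the current window with the slots granted by the receiver."""
        self.window = slots

    def try_forward(self):
        """Consume one slot and return True, or return False if none is left."""
        if self.window > 0:
            self.window -= 1
            return True
        return False
```

A sender with no slots left holds or rejects new sessions until the next feedback arrives.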


Conclusion

Current Status

The SIP protocol is vulnerable to congestion collapse under overload.

The 503 response mechanism is insufficient to avoid congestion collapse.

Simulation results confirm problems.

The IETF design team is investigating different mechanisms and algorithms for SIP overload control.

Initial simulation results are available.

Results show stable server-to-server goodput under overload.

Open Issues

Overload control with uneven distribution of load and fluctuating load conditions.

Dynamic arrival and departure of servers.

Fairness between upstream neighbors.

Comments and suggestions are very welcome!
