Nanotech 1AC Case Neg - Open Evidence Project

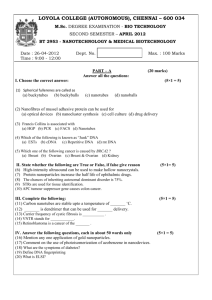

Nanotech 1AC Case Neg

Strat Sheet

Notes

Plan Text

Plan: The United States federal Government should provide uncapped NOAA Community Based Removal Grants for nanotechnological recycling programs.

CX Guide

1.

Your Courage 10 evidence at the top of the disease flow says that “Climate has a strong impact on the incidence of disease,” so which piece of evidence says that the affirmative solves climate change? Blah blah blah. None? Cool. (NOTE: AFF NOW HAS A WARMING ADVANTAGE)

2.

How do you make the nanotubes? (You are trying to draw a distinction between the aff’s tech and the CP’s tech to make the CP competitive.)

Marine Debris

1NC

1.

Cleaning the oceans of microplastics faces multiple solvency barriers – the aff can’t solve

Stiv Wilson, 07/17/13

(Associate Director at The 5 Gyres Institute, Freelance Writer, Photojournalist at Freelance Journalist, previously Rise Above Plastics Campaign Coordinator and Oceanic Voyage Ambassador at Surfrider Foundation, http://inhabitat.com/thefallacy-of-cleaning-the-gyres-of-plastic-with-a-floating-ocean-cleanup-array/, “The Fallacy of Cleaning the Gyres of Plastic With a Floating Ocean Cleanup Array,” SRB)

As the policy director of the ocean conservation nonprofit 5Gyres.org, I can tell you that the problem of ocean plastic pollution is massive. In case you didn’t know, an ocean gyre is a rotating current that circulates within one of the world’s oceans – and recent research has found that these massive systems are filled with plastic waste.

There are no great estimates (at least scientific) on how much plastic is in the ocean, but I can say from firsthand knowledge (after sailing to four of the world’s five gyres) that it’s so pervasive it confounds the senses.

Gyre cleanup has often been floated as a solution in the past, and recently Boyan Slat’s proposed ‘Ocean Cleanup Array’ went viral in a big way. The nineteen-year-old claims that the system can clean a gyre in 5 years with ‘unprecedented efficiency’ and then recycle the trash collected.

The problem is that the barriers to gyre cleanup are so massive that the vast majority of the scientific and advocacy community believe it’s a fool’s errand – the ocean is big, the plastic harvested is near worthless, and sea life would be harmed.

The solution starts on land.

2.

Their PR News 13 evidence says that disposable containers have been on the rise for 21 years with stagnant recycling – the impact should have already been triggered.

3.

Alt cause to recycling stagnation – their PR News 13 evidence concedes that a surge in bottled water sales and the failure of Congress to implement recycling regulations have stagnated plastic recycling – the aff can’t resolve either.

4.

Their third piece of evidence (Reuseit 14) was written by Reuseit, a company that sells reusable products – of course they are going to say that disposable resources are the worst things ever.

5.

The affirmative’s evidence all relies on inflated statistics and estimates – the amount of plastic in the ocean was greatly overestimated.

Ron Meador, 07/07/14

(writes Earth Journal for MinnPost. He is a veteran journalist whose last decade in a 25-year stint at the Star Tribune involved writing editorials and columns with environment, energy and science subjects as his major concentration, http://www.minnpost.com/earth-journal/2014/07/good-news-probably-ocean-plastic-lot-it-seems-be-disappearing, Good news, probably, on ocean plastic: A lot of it seems to be disappearing, SRB)

New research suggests that conventional estimates of the amount of plastic accumulating in the world's five great ocean gyres may be 100 times too high.

Writing in the Proceedings of the National Academy of Sciences last week, a Spanish-led research team says its nine-month sampling of seawater on a round-the-world research cruise in 2010-11 found floating plastic debris to be widespread: Of more than 3,000 samples collected, 88 percent contained bits of plastic. However, the samples showed a strange dropoff at particle sizes smaller than 1 millimeter, suggesting that as solar radiation and weathering break the wastes into smaller and smaller pieces, some of it may simply disappear from the ocean surface.

Where it goes remains a mystery.

The team's estimate of the current volume of plastic litter is on the order of 7,000 to 35,000 tons – less than 1 percent of what it calls a conservative estimate of the amount of plastic waste released into the ocean in the last several decades.

6.

Their NJ.gov 13 evidence indicates that NOAA-funded debris removal is already happening in the status quo – they don’t do anything to enhance this program, which means that the status quo solves the advantage.

7.

Plastics in the ocean are self-correcting – they are being broken down and naturally degraded now.

Ron Meador, 07/07/14

(writes Earth Journal for MinnPost. He is a veteran journalist whose last decade in a 25-year stint at the Star Tribune involved writing editorials and columns with environment, energy and science subjects as his major concentration, http://www.minnpost.com/earth-journal/2014/07/good-news-probably-ocean-plastic-lot-it-seems-be-disappearing, Good news, probably, on ocean plastic: A lot of it seems to be disappearing, SRB)

And while it is widely known that plastic materials can be obnoxiously persistent in all environments – including seawater, which has a remarkable ability to break down and absorb all kinds of waste, including crude oil – that doesn't mean the yoke from a six-pack of soda cans remains intact.

Plastics pollution found on the ocean surface is dominated by particles smaller than 1 cm in diameter, commonly referred to as microplastics. Exposure of plastic objects on the surface waters to solar radiation results in their photodegradation, embrittlement, and fragmentation by wave action.

However, plastic fragments are considered to be quite stable and highly durable, potentially lasting hundreds to thousands of years. Persistent nano-particles may be generated during the weathering of plastic debris, although their abundance has not been quantified in ocean waters. The Malaspina's surface tow nets were capable of collecting microplastics with diameters larger than 200 microns, or about four times the thickness of a human hair. Its sampling found the greatest abundance of microplastics in the size range around 2 mm (about one and a half times the thickness of a dime) and a dramatic falloff in particles smaller than 1 mm. And this was puzzling, because our knowledge of plastics degradation suggests that "progressive fragmentation of the plastic objects into more and smaller pieces should lead to a gradual increase of fragments toward small sizes" and a steadier distribution across all sizes.

So where is all the microplastic going?

One possibility is that something accelerates sunlight's degradation of microplastic fragments below 1 mm; another is that the finest bits of debris are washing ashore and remaining there.

In the absence of any observations to support either pathway, the research team considers them unlikely. Two other avenues seem more probable:

"Biofouling" by accumulations of bacteria could be hastening further breakdown of the plastics, or "ballasting" them with enough additional weight to cause the particles to sink below the surface.

Something could be eating the sub-millimeter particles, which just happen to be about the same size as the zooplankton that form a foundation of the ocean's food web. The paper notes other research documenting the presence of plastics in the guts of fish — especially "mesopelagic" fish, feeding at depths of 200 to 1,000 meters, where the light isn't so good — and also in the guts of birds and other predators that feed on fish (also, in their poop).

7. Nanotechnology has adverse environmental effects – replicates aff impacts

Zhang et al. 11 (B. Zhang¹, H. Misak¹, P.S. Dhanasekaran¹, D. Kalla² and R. Asmatulu¹; ¹Department of Mechanical Engineering, Wichita State University; ²Department of Engineering Technology, Metropolitan State College of Denver, “Environmental Impacts of Nanotechnology and Its Products,” Midwest Section Conference of the American Society for Engineering Education, 2011, https://www.asee.org/documents/sections/midwest/2011/ASEE-MIDWEST_0030_c25dbf.pdf)//rh

Nanoparticles have higher surface areas than the bulk materials which can cause more damage to the human body and environment compared to the bulk particles. Therefore, concern for the potential risk to the society due to nanoparticles has attracted national and international attentions. Nanoparticles are not only beneficial to tailor the properties of polymeric composite materials and environment in air pollution monitoring, but also to help reduce material consumption and remediation (Figure 1). For example, carbon nanotube and graphene based coatings have been developed to reduce the weathering effects on composites used for wind turbines and aircraft. Graphene has been chosen to be a better nanoscale inclusion to reduce the degradation of UV exposure and salt. By using nanotechnology to apply a nanoscale coating on existing materials, the material will last longer and retain the initial strength longer in the presence of salt and UV exposure. Carbon nanotubes have been used to increase the performance of data information system. However, there are few considerations of potential risks need to be considered using nanoparticles: The major problem of nanomaterials is the nanoparticle analysis method. As nanotechnology improves, new and novel nanomaterials are gradually developed. However, the materials vary by shape and size which are important factors in determining the toxicity. Lack of information and methods of characterizing nanomaterials make existing technology extremely difficult to detect the nanoparticles in air for environmental protection. Information of the chemical structure is a critical factor to determine how toxic a nanomaterial is, and minor changes of chemical function group could drastically change its properties. Full risk assessment of the safety on human health and environmental impact need to be evaluated at all stages of nanotechnology. The risk assessment should include the exposure risk and its probability of exposure, toxicological analysis, transport risk, persistence risk, transformation risk and ability to recycle. environmental impacts. Experimental design in advance of manufacturing a nanotechnology based product can reduce the material waste. Carbon nanotubes have applications in many materials for memory storage, electronic, batteries, etc. However, some scientists have concerns about carbon nanotubes because of unknown harmful impacts to the human body by inhalation into lungs, and initial data suggests that carbon nanotubes have similar toxicity to asbestos fiber [11]. Lam et al. and Warheit et al. studied on pulmonary toxicological evaluation of single-wall carbon nanotubes [12].

From Lam’s research, carbon nanotube showed to be more toxic than carbon black and quartz once it reaches lung [13], and Warheit found multifocal granulomas were produced when rats were exposure to single-wall carbon nanotubes [14]. Also, previous disasters need to be re-analyzed to compare with current knowledge as well. In the 1980s, a semiconductor plant contaminated the groundwater in Silicon Valley, California. This is a classic example of how nanotechnology can harm the environment even though there are several positive benefits.

As current nanoscale materials are becoming smaller, it is more difficult to detect toxic nanoparticles from waste which may contaminate the environment (Figure 2). Nanoparticles may interact with environment in many ways: it may be attached to a carrier and transported in underground water by bio-uptake, contaminants, or organic compounds. Possible aggregation will allow for conventional transportation to sensitive environments where the nanoparticles can break up into colloidal nanoparticles. As Dr. Colvin says, “we are concerned not only with where nanoparticles may be transported, but what they take with them” [16]. There are four ways that nanoparticles or nanomaterials can become toxic and harm the surrounding environment [17]: researchers are currently working on TiO2 powder as a coating inclusion that will reduce the weathering effects, such as salt rain degradation on composite materials. Ivana Fenoglio, et al. [18] expressed their concern that the effect of TiO2 nanoparticles to be assessed when leaked into the environment.

Mobility of contaminants: There are two general methods that nanoparticle can be emitted into atmosphere [19]. Nanoparticles are emitted into air directly from the source called primary emission, and are the main source of the total emissions. However, secondary particles are emitted naturally, such as homogeneous nucleation with ammonia and sulfuric acid presents. As Figure 2 demonstrates that nanoparticles can easily be attached to contaminations and transported to a more sensitive environment such as aqueous environments. For example, nuclear waste traveled almost 1 mile from a nuclear test site in 30 years [20]. However, after 40 years of the incident the first flow mechanism model is being developed to describe the methods of nanoparticle based waste travels [21].

Nanoparticles are invented and developed in advance of the toxic assessment by scientists. Many of the nanoparticles are soluble in water, and are hard to separate from waste if inappropriately handled. Any waste product, including nanomaterials, can cause environmental concerns/problems if disposed inappropriately.

8. Climate change is an alt cause to coral reef destruction

Roberts, 12

(Callum, marine conservation biologist at the University of York, “Corrosive Seas,” The Ocean of Life, May 31, pg. 109-110, it’s a book, AW)

As carbon dioxide levels in the sea rise, carbonate saturation will fall, and the depths at which carbonate dissolves will become shallower.

Recent estimates suggest that this horizon is rising by three feet to six feet per year in some places. So far, most carbon dioxide added by human activity remains near the surface. It has mixed more deeply, to depths of more than three thousand feet, in areas of intense downwelling in the polar North and South Atlantic, where deep bottom waters of the global ocean conveyor current are formed. Elsewhere the sea has been stirred to only a thousand feet deep or less. All tropical coral reefs inhabit waters that are less than three hundred feet deep, so they will quickly come under the influence of ocean acidification.

If carbon dioxide in the atmosphere doubles from its current level, all of the world's coral reefs will shift from a state of construction to erosion. They will literally begin to crumble and dissolve, as erosion and dissolution of carbonates outpaces deposition. What is most worrying is that this level of carbon dioxide will be reached by 2100 under a low-emission scenario of the Intergovernmental Panel on Climate Change.

The 2009 Copenhagen negotiations sought to limit carbon dioxide emissions so that levels would never exceed 450 parts per million in the atmosphere. That target caused deadlock in negotiations, but even that, according to some prominent scientists, would be too high for coral reefs. Just as Ischia's carbonated volcanic springs provide a warning of things to come, bubbling carbon dioxide released beneath reefs in Papua New Guinea give us tangible proof of the fate that awaits coral reefs. Reef growth has failed completely in places where gas bubbles froth vigorously, reducing pH there to levels expected everywhere by early in the twenty-second century under a business-as-usual scenario. The few corals that survive today have been heavily eroded by the corrosive water.

The collapse of coral reefs in the Galapagos following El Nino in the early 1980s was hastened by the fact that eastern Pacific waters are naturally more acid due to their deep-water upwelling than those in other parts of the oceans. Corals there were only loosely cemented into reef structures and collapsed quickly.

9. Climate change is an alt cause to biodiversity loss – laundry list of reasons it affects all levels of ecosystems

Bellard et al. 12 (Celine – PhD; postdoc work on impact of climate change at the Universite Paris, Cleo Bertelsmeier, Paul Leadley, Wilfried Thuiller, and Franck Courchamp, “Impacts of climate change on the future of biodiversity,” US National Library of Medicine National Institutes of Health – accepted for publication in a peer reviewed journal, January 4, http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3880584/, AW)

The multiple components of climate change are anticipated to affect all the levels of biodiversity, from organism to biome levels (Figure 1, and reviewed in detail in, e.g., Parmesan 2006). They primarily concern various strengths and forms of fitness decrease, which are expressed at different levels, and have effects on individuals, populations, species, ecological networks and ecosystems. At the most basic levels of biodiversity, climate change is able to decrease genetic diversity of populations due to directional selection and rapid migration, which could in turn affect ecosystem functioning and resilience (Botkin et al. 2007) (but, see Meyers & Bull 2002). However, most studies are centred on impacts at higher organizational levels, and genetic effects of climate change have been explored only for a very small number of species. Beyond this, the various effects on populations are likely to modify the “web of interactions” at the community level (Gilman et al. 2010; Walther 2010). In essence, the response of some species to climate change may constitute an indirect impact on the species that depend on them. A study of 9,650 interspecific systems, including pollinators and parasites, suggested that around 6,300 species could disappear following the extinction of their associated species (Koh et al. 2004). In addition, for many species, the primary impact of climate change may be mediated through effects on synchrony with species’ food and habitat requirements (see below). Climate change has led to phenological shifts in flowering plants and insect pollinators, causing mismatches between plant and pollinator populations that lead to the extinctions of both the plant and the pollinator with expected consequences on the structure of plant-pollinator networks (Kiers et al. 2010; Rafferty & Ives 2010). Other modifications of interspecific relationships (with competitors, prey/predators, host/parasites or mutualists) also modify community structure and ecosystem functions (Lafferty 2009; Walther 2010; Yang & Rudolf 2010).

At a higher level of biodiversity, climate can induce changes in vegetation communities that are predicted to be large enough to affect biome integrity. The Millennium Ecosystem Assessment forecasts shifts for 5 to 20% of Earth’s terrestrial ecosystems, in particular cool conifer forests, tundra, scrubland, savannahs, and boreal forest (Sala et al. 2005). Of particular concern are “tipping points” where ecosystem thresholds can lead to irreversible shifts in biomes (Leadley et al. 2010). A recent analysis of potential future biome distributions in tropical South America suggests that large portions of Amazonian rainforest could be replaced by tropical savannahs (Lapola et al. 2009). At higher altitudes and latitudes, alpine and boreal forests are expected to expand northwards and shift their tree lines upwards at the expense of low stature tundra and alpine communities (Alo & Wang 2008). Increased temperature and decreased rainfall mean that some lakes, especially in Africa, might dry out (Campbell et al. 2009). Oceans are predicted to warm and become more acid, resulting in widespread degradation of tropical coral reefs (Hoegh-Guldberg et al. 2007). The implications of climate change for genetic and specific diversity have potentially strong implications for ecosystem services. The most extreme and irreversible form of fitness decrease is obviously species extinction. To avoid or mitigate these effects, biodiversity can respond in several ways, through several types of mechanisms.

10. Ocean biodiversity is getting better – disproves their impact

Panetta 13

(Leon, former US secretary of defense, co-chaired the Pew Oceans Commission and founded the Panetta Institute at California State University, Monterey Bay, “Panetta: Don't take oceans for granted,” http://www.cnn.com/2013/07/17/opinion/panetta-oceans/index.html, ND)

Our oceans are a tremendous economic engine, providing jobs for millions of Americans, directly and indirectly, and a source of food and recreation for countless more. Yet, for much of U.S. history, the health of America's oceans has been taken for granted, assuming its bounty was limitless and capacity to absorb waste without end. This is far from the truth.

The situation the commission found in 2001 was grim. Many of our nation's commercial fisheries were being depleted and fishing families and communities were hurting. More than 60% of our coastal rivers and bays were degraded by nutrient runoff from farmland, cities and suburbs.

Government policies and practices, a patchwork of inadequate laws and regulations at various levels, in many cases made matters worse.

Our nation needed a wake-up call.

The situation, on many fronts, is dramatically different today because of a combination of leadership initiatives from the White House and old-fashioned bipartisan cooperation on Capitol Hill. Perhaps the most dramatic example can be seen in the effort to end overfishing in U.S. waters. In 2005, President George W. Bush worked with congressional leaders to strengthen America's primary fisheries management law, the Magnuson-Stevens Fishery Conservation and Management Act. This included establishment of science-based catch limits to guide decisions in rebuilding depleted species.

These reforms enacted by Congress are paying off.

In fact, an important milestone was reached last June when the National Oceanic and Atmospheric Administration announced it had established annual, science-based catch limits for all U.S. ocean fish populations.

We now have some of the best managed fisheries in the world. Progress also is evident in improved overall ocean governance and better safeguards for ecologically sensitive marine areas.

In 2010, President Barack Obama issued a historic executive order establishing a national ocean policy directing federal agencies to coordinate efforts to protect and restore the health of marine ecosystems.

President George W. Bush set aside new U.S. marine sanctuary areas from 2006 through 2009.

Today, the Papahanaumokuakea Marine National Monument, one of several marine monuments created by the Bush administration, provides protection for some of the most biologically diverse waters in the Pacific.

11. No extinction – we can isolate ourselves from the environment

Powers 2002

(Lawrence, Professor of Natural Sciences, Oregon Institute of Technology, The Chronicle of Higher Education, August 9, ND)

Mass extinctions appear to result from major climatic changes or catastrophes, such as asteroid impacts. As far as we know, none has resulted from the activities of a species, regardless of predatory voracity, pathogenicity, or any other interactive attribute. We are the first species with the potential to manipulate global climates and to destroy habitats, perhaps even ecosystems -- therefore setting the stage for a sixth mass extinction. According to Boulter, this event will be an inevitable consequence of a "self-organized Earth-life system."

This Gaia-like proposal might account for many of the processes exhibited by biological evolution before man's technological intervention, but ... the rules are now dramatically different. ... Many species may vanish, ... but that doesn't guarantee, unfortunately, that we will be among the missing. While other species go bang in the night, humanity will technologically isolate itself further from the natural world and will rationalize the decrease in biodiversity in the same manner as we have done so far. I fear, that like the fabled cockroaches of the atomic age, we may be one of the last life-forms to succumb, long after the "vast tracts of beauty" that Boulter mourns we will no longer behold vanish before our distant descendants' eyes.

2NC Extension Coral Reefs Impact D

1.

Coral is incredibly resilient. If it survived 300 million years of badness, it can survive anything we throw at it.

Ridd ’07

(Peter, Reader in Physics – James Cook U., specializing in Marine Physics, and Scientific Advisor – Australian Environment Foundation, “The Great Great Barrier Reef Swindle”, 7-19, http://www.onlineopinion.com.au/view.asp?article=6134)

In biological circles, it is common to compare coral reefs to canaries, i.e. beautiful and delicate organisms that are easily killed. The analogy is pushed further by claiming that, just as canaries were used to detect gas in coal mines, coral reefs are the canaries of the world and their death is a first indication of our apocalyptic greenhouse future. The bleaching events of 1998 and 2002 were our warning. Heed them now or retribution will be visited upon us.

In fact a more appropriate creature with which to compare corals would be cockroaches - at least for their ability to survive. If our future brings us total self-annihilation by nuclear war, pollution or global warming, my bet is that both cockroaches and corals will survive.

Their track-record is impressive. Corals have survived 300 million years of massively varying climate both much warmer and much cooler than today, far higher CO2 levels than we see today, and enormous sea level changes. Corals saw the dinosaurs come and go, and cruised through mass extinction events that left so many other organisms as no more than a part of the fossil record.

Corals are particularly well adapted to temperature changes and in general, the warmer the better. It seems odd that coral scientists are worrying about global warming because this is one group of organisms that like it hot. Corals are most abundant in the tropics and you certainly do not find fewer corals closer to the equator. Quite the opposite, the further you get away from the heat, the worse the corals.

A cooling climate is a far greater threat. The scientific evidence about the effect of rising water temperatures on corals is very encouraging. In the GBR, growth rates of corals have been shown to be increasing over the last 100 years, at a time when water temperatures have risen. This is not surprising as the highest growth rates for corals are found in warmer waters. Further, all the species of corals we have in the GBR are also found in the islands, such as PNG, to our north where the water temperatures are considerably hotter than in the GBR. Despite the bleaching events of 1998 and 2002, most of the corals of the GBR did not bleach and of those that did, most have fully recovered.

2.

Coral reef extinction is inevitable by 2100 – ocean acidification is destroying them, which means the aff can’t solve

Kintisch 12 (Eli, American Science Journalist, “Coral Reefs Could Be Decimated by 2100,” Science AAAS, http://news.sciencemag.org/sciencenow/2012/12/coral-reefs-could-bedecimated-b.html)//rh

Nearly every coral reef could be dying by 2100 if current carbon dioxide emission trends continue, according to a new review of major climate models from around the world. The only way to maintain the current chemical environment in which reefs now live, the study suggests, would be to deeply cut emissions as soon as possible. It may even become necessary to actively remove carbon dioxide from the atmosphere, say with massive tree-planting efforts or machines. The world's open-ocean reefs are already under attack by the combined stresses of acidifying and warming water, overfishing, and coastal pollution.

Carbon emissions have already lowered the pH of the ocean a full 0.1 unit, which has harmed reefs and hindered bivalves' ability to grow. The historical record of previous mass extinctions suggests that acidified seas were accompanied by widespread die-offs but not total extinction. To study how the world's slowly souring seas would affect reefs in the future, scientists with the Carnegie Institution for Science in Palo Alto, California, analyzed the results of computer simulations performed by 13 teams around the world. The models include simulations of how ocean chemistry would interact with an atmosphere with higher carbon dioxide levels in the future. This so-called "active biogeochemistry" is a new feature that is mostly absent in the previous generation of global climate models. Using the models' predictions for future physical traits such as pH and temperature in different sections of the ocean, the scientists were able to calculate a key chemical measurement that affects coral. Corals make their shells out of the dissolved carbonate mineral known as aragonite. But as carbon dioxide pollution steadily acidifies the ocean, chemical reactions change the extent to which the carbonate is available in the water for coral. That availability is known as its saturation, and is generally thought to be a number between 3 and 3.5.

No precise rule of thumb exists to link that figure and the health of reefs. But the Carnegie scientists say paleoclimate data suggests that the saturation level during preindustrial times—before carbon pollution began to accumulate in the sky and seas—was greater than 3.5. The models that the Carnegie scientists analyzed were prepared for the major global climate report coming out next year: the Intergovernmental Panel on Climate Change report. The team compared the results of those simulations to the location of 6000 reefs for which there is data, two-thirds of the world total.

That allowed them to do what amounted to a chemical analysis of future reef habitats. In a talk reviewing the study at the fall meeting of the American Geophysical Union earlier this month, senior author and Carnegie geochemist Ken Caldeira showed how the amount of carbon emitted in the coming decades could have huge impacts on reefs' fates. In a low-emissions trajectory in which carbon pollution rates were slashed and carbon actively removed from the air by trees or machines, between 77% and 87% of reefs that they analyzed stay in the safe zone with the aragonite saturation above 3. "If we are on the [business as usual] emissions trajectory, then the reefs are toast," Caldeira says. In that case, all the reefs in the study were surrounded by water with aragonite saturation below 3, dooming them. In that scenario, Caldeira says, "details about sensitivity of corals are just arguments about when they will die."

"In the absence of deep reductions in CO2 emissions, we will go outside the bounds of the chemistry that surrounded all open ocean coral reefs before the industrial revolution," says Carnegie climate modeler Katharine Ricke, the first author on the new study.

Greg Rau, a geochemist at Lawrence Livermore National Laboratory in California, says the work sheds new light onto the future of aragonite saturation levels in the ocean, also known as "omega." "There is a very wide coral response to omega—some are able to internally control the [relevant] chemistry," says Rau, who has collaborated with Caldeira in the past but did not participate in this research. Those tougher coral species could replace more vulnerable ones "rather than a wholesale loss" of coral. "[But] an important point made by [Caldeira] is that corals have had many millions of years of opportunity to extend their range into low omega waters. With rare exception they have failed. What are the chances that they will adapt to lowering omega in the next 100 years?"
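The card's "safe zone" is defined by the aragonite saturation state, Ω (the "omega" Rau refers to). Conventionally, Ω is the product of the dissolved calcium and carbonate ion concentrations divided by the stoichiometric solubility product of aragonite, and absorbed CO2 lowers the carbonate ion concentration. The sketch below is a minimal illustration, not the study's model: the calcium concentration (about 0.0103 mol/kg) and the solubility product (about 6.5 × 10⁻⁷ mol²/kg² at 25 °C and salinity 35) are approximate values assumed for the example, and the carbonate inputs are hypothetical. It only shows how a falling carbonate concentration pushes Ω below the threshold of 3 cited in the card.

```python
# Minimal sketch of the aragonite saturation (omega) check described above.
# ASSUMPTIONS: CA_MOL_PER_KG and KSP_ARAGONITE are approximate values for
# surface seawater at 25 C and salinity 35; carbonate inputs are hypothetical.

CA_MOL_PER_KG = 0.0103   # assumed dissolved calcium concentration, mol/kg
KSP_ARAGONITE = 6.5e-7   # assumed stoichiometric solubility product, mol^2/kg^2
SAFE_THRESHOLD = 3.0     # "safe zone" cutoff cited in the card


def aragonite_saturation(ca: float, co3: float, ksp: float) -> float:
    """Return omega = [Ca2+][CO3 2-] / K'sp, with concentrations in mol/kg."""
    if ksp <= 0:
        raise ValueError("solubility product must be positive")
    return ca * co3 / ksp


def in_safe_zone(omega: float, threshold: float = SAFE_THRESHOLD) -> bool:
    """True when omega stays above the card's threshold of 3."""
    return omega > threshold


if __name__ == "__main__":
    # Hypothetical carbonate ion concentrations in micromol/kg, highest to lowest.
    for co3_umol in (250, 200, 150):
        omega = aragonite_saturation(CA_MOL_PER_KG, co3_umol * 1e-6, KSP_ARAGONITE)
        label = "safe" if in_safe_zone(omega) else "below threshold"
        print(f"CO3 = {co3_umol} umol/kg -> omega = {omega:.2f} ({label})")
```

With these assumed inputs, the 250 and 200 µmol/kg cases stay above 3 while the 150 µmol/kg case falls below it; the real study used full ocean-chemistry simulations rather than fixed concentrations.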

2NC Extension Bio-D

Changes in biodiversity are both inevitable and unstoppable, and their impacts are empirically disproven.

Dodds 2k

(Donald J. Dodds, M.S. and P.E., former president of the North Pacific Research Board, “The Myth of Biodiversity,” published in 2000, available online at http://www.docstoc.com/docs/97436583/THE-MYTH-OF-BIODIVERSITY, ND)

Biodiversity is a corner stone of the environmental movement. But there is no proof that biodiversity is important to the environment.

Something without basis in scientific fact is called a Myth. Let's examine biodiversity throughout the history of the earth. The earth has been around for about 4 billion years. Life did not develop until about 500 million years later. Thus for the first 500 million years biodiversity was zero. The planet somehow survived this lack of biodiversity. For the next 3 billion years, the only life on the planet was microbial and not diverse. Thus, the first unexplainable fact is that the earth existed for 3.5 billion years, 87.5% of its existence, without biodiversity.

Somewhere around 500 million years ago life began to diversify and multicellular species appeared. Because these species were partially composed of solid material they left better geologic records, and the number of species and genera could be cataloged and counted. The number of genera on the planet is an indication of the biodiversity of the planet. Figure 1 is a plot of the number of genera on the planet over the last 550 million years. The little black line outside of the left edge of the graph is 10 million years.

Notice the left end of this graph. Biodiversity has never been higher than it is today. Notice next that at least ten times biodiversity fell rapidly; none of these extreme reductions in biodiversity were caused by humans. Around 250 million years ago the number of genera was reduced 85 percent from about 1200 to around 200, by any definition a significant reduction in biodiversity. Now notice that after this extinction came a steep and rapid rise of biodiversity.

In fact, if you look closely at the curve, you will find that every mass extinction was followed by a massive increase in biodiversity. Why was that? Do you suppose it had anything to do with the number of environmental niches available for exploitation? If you do, you are right.

Extinctions are necessary for creation. Each time a mass extinction occurs the world is filled with new and better-adapted species. That is the way evolution works; it's called survival of the fittest.

Those species that could not adapt to the changing world conditions simply disappeared and better species evolved. How efficient is that? Those that could adapt to change continued to thrive. For example, the cockroach and the shark have been around well over 300 million years. There is a pair to draw to, two successful species that any creator would be proud to produce. To date these creatures have successfully survived six extinctions, without the aid of humans or the EPA.

Now notice that only once in the last 500 million years did life ever exceed 1500 genera, and that was in the middle of the Cretaceous Period around 100 million years ago, when the dinosaurs exploded on the planet. Obviously, biodiversity has a bad side. The direct result of this explosion in biodiversity was the extinction of the dinosaurs that followed 45 million years later at the KT boundary. It is interesting to note that at the end of the extinction the number of genera had returned to the 1500 level almost exactly.

Presently biodiversity is at an all-time high and has again far exceeded the 1500-genera level. Are we overdue for another extinction?

A closer look at the KT extinction 65 million years ago reveals at least three things. First, the 1500 genera that remained had passed the test of environmental compatibility and remained on the planet. This was not an accident. Second, these extinctions freed niches for occupation by better-adapted species. The remaining genera now faced an environment with hundreds of thousands of vacant niches. Third, it took only about 15 million years to refill all of those niches and completely replace the dinosaurs with new and better species. In this context, a better species is by definition one that is more successful in dealing with a changing environment.

Many of those genera that survived the KT extinction were early mammals, a more sophisticated class of life that had developed new and better ways of facing the environment. These genera were now free to expand and diversify without the presence of the life-dominating dinosaurs. Thus, as a direct result of this mass extinction, humans are around to discuss the consequences of change. If the EPA had prevented the dinosaur extinction, neither the human race nor the EPA would have existed. The unfortunate truth is that the all-powerful human species does not yet have the intelligence or the knowledge to regulate evolution. It is even questionable that they have the skills to prevent their own extinction.

Change is a vital part of the environment. A successful species is one that can adapt to the changing environment, and the most successful species is one that can do that for the longest duration. This brings us back to the cockroach and the shark. This of course dethrones egotistical Homo sapiens sapiens as God’s finest creation and raises the cockroach to that exalted position, a fact that is difficult for the vain to accept. If humans are to replace the cockroach, we need to use our most important adaptation (our brain) to prevent our own extinction. Humans, like the koala bear, have become overspecialized; we require a complex energy-consuming social system to exist.

If one thing is constant in the universe, it is change. The planet has changed significantly over the last 4 billion years and it will continue to change over the next 4 billion years. The current human scheme for survival, stopping change, is not only wrong but futile, because stopping change is impossible.

Geologic history has repeatedly shown that species that become overspecialized are ripe for extinction. A classic example of overspecialization is the koala bears, which can only eat the leaves from a single eucalyptus tree. But because they are soft and furry, look like a teddy bear, and have big brown eyes, humans are artificially keeping them alive. Humans do not have the stomach or the brain for controlling evolution. Evolution is a simple process or it wouldn’t function. Evolution works because it follows the simple law: what works, works; what doesn’t work, goes away. There is no legislation, no regulations, no arbitration, no lawyers, scientists, or politicians. Mother Nature has no preference, no prejudices, no emotions, and no ulterior motives. Humans have all of those traits.

Humans are working against nature when they try to prevent extinctions and freeze biodiversity.

Examine the curve in Figure 1: at no time since the origin of life has biodiversity been constant. If this principle has worked for 550 million years on this planet, and science is supposed to find truth in nature, by what twisted reasoning can fixing biodiversity be considered science, let alone good for the environment? Environmentalists are now killing species that they arbitrarily term invasive, which are in reality simply better adapted to the current environment. Consider the Barred Owl: a superior species is being killed in the name of biodiversity because the Barred Owl is trying to replace a less environmentally adapted species, the Spotted Owl. This is more harmful to the ecosystem because it impedes the normal flow of evolution based on the idea that biodiversity must remain constant.

Human scientists have decided to take evolution out of the hands of Mother Nature and give it to the EPA.

Now there is a good example of brilliance. We all know what is wrong with lawyers and politicians, but scientists are supposed to be trustworthy. Unfortunately, they are all too often only people who think they know more than anybody else. Abraham Lincoln said, “Those who know not, and know not that they know not, are fools; shun them.” Civilization has fallen into the hands of fools. What is suggested by geologic history is that the world has more biodiversity than it ever had and that it may be overdue for another major extinction.

Unfortunately, today many scientists have too narrow a view. They are highly specialized. They have no time for geologic history. This appears to be a problem of inadequate education, not ignorance. What is abundantly clear is that artificially enforcing rigid biodiversity works against the laws of nature and will cause irreparable damage to the evolution of life on this planet and maybe beyond. The world and the human species may be better served if we stop trying to prevent change and begin trying to understand change, positioning the human species so that it survives the inevitable change of evolution.

If history is to be believed, the planet has 3 times more biodiversity than it had 65 million years ago. Trying to sustain that level is futile and may be dangerous.

The next major extinction, change in biodiversity, is as inevitable as climate change. We cannot stop either from occurring, but we can position the human species to survive those changes.

2NC Extension Environment Turn

1.

Aff replicates its impacts – Nanotech increases toxicological pollution

Zhang et al. 11 (B. Zhang, H. Misak, P.S. Dhanasekaran, D. Kalla, and R. Asmatulu; Zhang, Misak, Dhanasekaran, and Asmatulu: Department of Mechanical Engineering, Wichita State University; Kalla: Department of Engineering Technology, Metropolitan State College of Denver; “Environmental Impacts of Nanotechnology and Its Products,” Midwest Section Conference of the American Society for Engineering Education, 2011, https://www.asee.org/documents/sections/midwest/2011/ASEE-MIDWEST_0030_c25dbf.pdf)//rh

Nanotechnology increases the strengths of many materials and devices, as well as enhances efficiencies of monitoring devices, remediation of environmental pollution, and renewable energy production. While these are considered to be the positive effect of nanotechnology, there are certain negative impacts of nanotechnology on environment in many ways, such as increased toxicological pollution on the environment due to the uncertain shape, size, and chemical compositions of some of the nanotechnology products (or nanomaterials). It can be vital to understand the risks of using nanomaterials, and cost of the resulting damage. It is required to conduct a risk assessment and full life-cycle analysis for nanotechnology products at all stages of products to understand the hazards of nanoproducts and the resultant knowledge that can then be used to predict the possible positive and negative impacts of the nanoscale products. Choosing right, less toxic materials (e.g., graphene) will make huge impacts on the environment. This can be very useful for the training and protection of students, as well as scientists, engineers, policymakers, and regulators working in the field.

Disease

1NC

1.

Can’t solve disease - Tech barriers in squo

Cario 12 (Elke, Associate Editor of Mucosal Immunology, “Nanotechnology-based drug delivery in mucosal immune diseases: hype or hope?,” Mucosal Immunology (2012) 5, 2–3, http://www.nature.com/mi/journal/v5/n1/full/mi201149a.html)//rh

Despite this optimistic outlook on nanotechnology-based drug delivery, many hurdles must still be overcome. Naturally, the future nanoparticle-preparation process should be contaminant-free, standardized and reproducible, relatively inexpensive, and easy to scale up. To increase drug efficacy and ensure patient compliance, the nanocarrier formulation must be safe, simple to administer, and, most important, nontoxic. Very little is known about the potential effects of nanoparticles and individual components on the human immune system (not to mention with long-term administration). Protective mucus usually traps and removes foreign particles from the mucosal surface. Biodegradable polymeric particles of larger size (200 nm) have been shown to be capable of rapidly penetrating healthy (e.g., cervicovaginal[14]) or diseased (e.g., chronic rhinosinusitis[15]) human mucus barriers.

During this process, however, nanoparticles can alter the microstructure of the mucus barrier,[16] but the functional impact of this observation remains to be examined in vivo (e.g., do nanoparticle-induced “holes” disrupt the mucus barrier, allowing bacterial translocation?). Once the mucus is crossed, nanoparticles should not cause toxicity to the epithelial cells or immune hyper-/hyposensitivity in the underlying lamina propria.

2.

The first piece of evidence they read on this flow says that disease is inevitable in the squo due to climate change. Since the affirmative can’t solve climate change, it can’t solve disease.

3.

Ocean cleanup nanotech and medical nanotech are not the same; the plan only funds NOAA nanotech development.

4.

New cures solve all diseases

ASNS, 2008 (ASNS, Africa Science News Service, Uganda, 9-15-2008, "AIDS cure may lie in supercharged 'mineral water'")

Antibiotics and vaccines that prompt side effects, genetic mutations, and resistant germs may soon be obsolete pending the results of an AIDS trial sponsored by volunteers, humanitarian groups, and The Republic of Uganda. At the Victoria Medical Center, in this nation at the epicenter of the pandemic, a new type of "mineral water" will be tested to compete with the drug industry's most profitable weapons against disease. As governments worldwide are stockpiling defenses against bioterrorist attacks and deadly new outbreaks, Uganda will test a new possible cure for infectious diseases made from energized water and silver. It is called UPCOSH™, short for "Uniform Picoscaler Concentrated Oligodynamic Silver Hydrosol." OXYSILVER™ is the leading brand. The base formula was developed by NASA scientists to protect astronauts in space.

The solution of pure water and energized silver and oxygen uniquely boasts a covalent electromagnetic bond between these two non-toxic elements that kills most harmful germs, oxygenates the blood, alkalines the body, helps feed essential nutrients to healthy cells and desirable digestive bacteria, and even relays a musical note upon which active DNA depends. These factors are crucial for developing mega-immunity and winning the war against cancer and infectious diseases, experts say.

According to the product's developers, including famous health scientists, this entirely new class of liquids and gels is performing "miraculously" in killing HIV, the AIDS virus, tuberculosis, and malaria in initial tests. Africa's greatest killers (after starvation, dehydration, and resulting immunological destruction) are no match for a few drops of UPCOSH™. Even using a germ-infested glass, as is commonly the case in the poorest communities, you need not fear. This water safely disinfects everything it touches.

Ugandan officials were encouraged by the nation's leading AIDS activist, Peter Luyima, co-founder of the WASART African Youth Movement, to study OXYSILVER™. Mr. Luyima invited several humanitarian doctors, researchers, organizations, and corporations to sponsor this promising human experiment on 70 terminally-ill patients. If successful, the government plans to grant funding to Mr. Luyima's youth organization to establish an OXYSILVER™ manufacturing plant to supply this life-saving liquid to distributors across Africa.

"Better late than never, OXYSILVER™ may prove to be civilization's greatest hope for surviving against the current and coming plagues," says Dr. Leonard Horowitz, an award-winning public health and emerging diseases expert who contributed to the product's electro-genetic formulation.

Author of the American bestseller, Emerging Viruses: AIDS & Ebola--Nature, Accident or Intentional?, and the scientific text, DNA: Pirates of the Sacred Spiral, Dr. Horowitz is most critical of the drug cartel profiting from humanity's suffering.

5.

Empirics should really frame this debate

Richard Posner, Senior Lecturer in Law at the University of Chicago, judge on the United States Court of Appeals for the Seventh Circuit, January 1, 2005, Skeptic, “Catastrophe: the dozen most significant catastrophic risks and what we can do about them,” http://goliath.ecnext.com/coms2/gi_0199-4150331/Catastrophe-the-dozen-mostsignificant.html#abstract

Yet the fact that Homo sapiens has managed to survive every disease to assail it in the 200,000 years or so of its existence is a source of genuine comfort, at least if the focus is on extinction events.

There have been enormously destructive plagues, such as the Black Death, smallpox, and now AIDS, but none has come close to destroying the entire human race. There is a biological reason. Natural selection favors germs of limited lethality; they are fitter in an evolutionary sense because their genes are more likely to be spread if the germs do not kill their hosts too quickly.

The AIDS virus is an example of a lethal virus, wholly natural, that by lying dormant yet infectious in its host for years maximizes its spread. Yet there is no danger that AIDS will destroy the entire human race.

The likelihood of a natural pandemic that would cause the extinction of the human race is probably even less today than in the past (except in prehistoric times, when people lived in small, scattered bands, which would have limited the spread of disease), despite wider human contacts that make it more difficult to localize an infectious disease.

The reason is improvements in medical science.

But the comfort is a small one. Pandemics can still impose enormous losses and resist prevention and cure: the lesson of the AIDS pandemic. And there is always a first time.

6.

Nanotechnology does not improve health; in fact, it has been linked to numerous health problems.

Kevin Bullis, 3/22/08 (MIT Technology Review’s senior editor for energy, http://www.technologyreview.com/news/410172/somenanotubes-could-cause-cancer/, “Some Nanotubes Could Cause Cancer,” SRB)

Certain types of carbon nanotubes could cause the same health problems as asbestos, according to the results of two recent studies. In one, published yesterday, tests in mice showed that long and straight multiwalled carbon nanotubes cause the same kind of inflammation and lesions in the type of tissues that surround the lungs that is caused by asbestos. The other study, also done in mice, showed that similar carbon nanotubes eventually led to cancerous tumors.

Carbon nanotubes, tube-shaped carbon molecules just tens of nanometers in diameter, have excellent electronic and mechanical properties that make them attractive for a number of applications. They have already been incorporated into some products, such as tennis rackets and bicycles, and eventually they could be used in a wide variety of applications, including medical therapies, water purification, and ultrafast and compact computer chips.

“It’s a material that’s got many unique characteristics,” says Andrew Maynard, a coauthor of one of the studies, which appears in the current issue of Nature Nanotechnology. “But of course nothing comes along like this that is completely free from risk.” Carbon nanotubes that are straight and 20 micrometers or longer in length–qualities that are well suited for composite materials used in sports equipment–resemble asbestos fibers. This has long led many experts to suggest that these carbon nanotubes might pose the same health risks as asbestos, a fire-resistant material that can cause mesothelioma, a cancer of a type of tissue surrounding the lungs. But until now, strong scientific evidence for this theory was lacking.

The new studies partially confirm the carbon nanotubes’ similarity to asbestos by showing that long, straight carbon nanotubes injected into mesothelial tissues in mice cause the sort of lesions and inflammation that also develop as a result of asbestos.

Such reactions are a strong indicator that cancer will develop with chronic exposure. One of the studies, which appeared in the Journal of Toxicological Study and was done by researchers at Japan’s National Institute of Health Sciences, also showed actual cancerous tumors. The Nature Nanotechnology study was done primarily by researchers in the United Kingdom at the University of Edinburgh and elsewhere. What isn’t known is whether, during nanotubes’ manufacture, use, and disposal, they can become airborne and be inhaled in sufficient quantities to cause problems. Indeed, earlier work has shown that it is actually difficult to get carbon nanotubes airborne, since they tend to clump together, says Maynard, the chief science advisor for the Project on Emerging Nanotechnologies, at the Woodrow Wilson Center for Scholars, in Washington, DC. He says that this could decrease the chance that they will be inhaled. He adds that further research is needed to confirm this.

Not all types of carbon nanotubes behave like cancer-causing asbestos. The Nature Nanotechnology article showed that short nanotubes (those less than 15 micrometers long) and long nanotubes that have become very tangled do not cause inflammation and lesions. Also, while the study did not look explicitly at single-walled nanotubes, these tend to be shorter and more tangled than multiwalled nanotubes, so they probably won’t act like asbestos, the researchers say. The authors suggest that this could be because such nanotubes can easily be taken up by immune cells called macrophages, and long, straighter ones can’t. (Macrophages can only stretch to 20 micrometers, which makes it difficult for them to engulf nanotubes longer than that.) This finding is consistent with results published in January that suggest that certain types of short carbon nanotubes are nontoxic to mice, says Hongjie Dai, the professor of chemistry at Stanford University who published the earlier work. Short nanotubes are likely to be useful in electronics and medical applications, while long, multiwalled nanotubes are more attractive for composite materials because of their mechanical strength. Dai says that it’s important not to lump all carbon nanotubes together, since they can have very different characteristics depending on how they are manufactured.

The Nature Nanotechnology study is a strong one because it establishes the link between a particular type of nanotube and asbestos-like symptoms, while controlling for chemical impurities that are a by-product of manufacturing carbon nanotubes, says Vicki Colvin, a professor of chemistry and chemical and biological engineering at Rice University in Houston, TX. Such chemical impurities have led to contradictory results in earlier toxicity studies on nanoparticles. The Journal of Toxicological Study paper, which showed not only that long carbon nanotubes could cause lesions, but also that these can actually lead to cancerous tumors, had the drawback that the researchers used genetically modified mice that are particularly sensitive to asbestos, Colvin says. But that study still shows a relationship between these particular kinds of carbon nanotubes and mesothelioma. As is the case with asbestos, carbon nanotubes are not likely to cause problems while they’re embedded inside products. It’s most important to protect workers involved in the manufacturing and disposal of these products, at which point the nanotubes could be released into the air, the authors of the Nature Nanotechnology study say. This could be done with established methods for handling fibrous particles, Colvin says, and by starting to keep track of what products have the potentially dangerous nanotubes–something that’s not done systematically now. Armed with the results, engineers could possibly use types of carbon nanotubes that are safer, Maynard says.

Anthony Seaton, one of the authors of the Nature Nanotechnology paper, a researcher at the University of Aberdeen, and a medical doctor who has treated people exposed to asbestos, draws a connection between the promise of carbon nanotubes and the hope people once had for asbestos. Asbestos, like carbon nanotubes, was seemingly ideal for many applications. At one point, Seaton says, asbestos was “almost ubiquitous.” But whereas the dangers of asbestos weren’t recognized and dealt with until people got sick, the new findings present a chance to keep people from being hurt, he says, by taking preventative measures. “We’ve learned a serious lesson from asbestos,” Seaton says.

7.

Virulent diseases cannot cause extinction because of burnout theory

Gerber 5 (Leah R. Gerber, PhD, Associate Professor of Ecology, Evolution, and Environmental Sciences, “Exposing Extinction Risk Analysis to Pathogens: Is Disease Just Another Form of Density Dependence?,” Ecological Society of America, August 2005)//rh

The density of a population is an important parameter for both PVA and host–pathogen theory. A fundamental principle of epidemiology is that the spread of an infectious disease through a population is a function of the density of both susceptible and infectious hosts.

If infectious agents are supportable by the host species of conservation interest, the impact of a pathogen on a declining population is likely to decrease as the host population declines. A pathogen will spread when, on average, it is able to transmit to a susceptible host before an infected host dies or eliminates the infection (Kermack and McKendrick 1927, Anderson and May 1991). If the parasite affects the reproduction or mortality of its host, or the host is able to mount an immune response, the parasite population may eventually reduce the density of susceptible hosts to a level at which the rate of parasite increase is no longer positive. Most epidemiological models indicate that there is a host threshold density (or local population size) below which a parasite cannot invade, suggesting that rare or depleted species should be less subject to host-specific disease.

This has implications for small, yet increasing, populations. For example, although endangered species at low density may be less susceptible to a disease outbreak, recovery to higher densities places them at increasing risk of future disease-related decline (e.g., southern sea otters; Gerber et al. 2004). In the absence of stochastic factors (such as those modeled in PVA), and given the usual assumption of disease models that the chance that a susceptible host will become infected is proportional to the density of infected hosts (the mass action assumption), a host-specific pathogen cannot drive its host to extinction (McCallum and Dobson 1995). Extinction in the absence of stochasticity is possible if alternate hosts (sometimes called reservoir hosts) relax the extent to which transmission depends on the density of the endangered host species. Similarly, if transmission occurs at a rate proportional to the frequency of infected hosts relative to uninfected hosts (see McCallum et al. 2001), endangered hosts at low density may still face the threat of extinction by disease. These possibilities suggest that the complexities characteristic of many real host–pathogen systems may have very direct implications for the recovery of rare endangered species.
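The host threshold density in the card follows from the Kermack–McKendrick model it cites. Under mass-action (density-dependent) transmission, a pathogen invades only if β·S/γ > 1, so the threshold is S_T = γ/β; under frequency-dependent transmission the invasion ratio is β/γ regardless of host density, which is why the card says low-density hosts may still be at risk in that case. The Python sketch below assumes a closed SIR model (no births, no stochasticity) with hypothetical parameter values chosen only for illustration, and integrates the density-dependent version with a simple forward-Euler step to show the epidemic burning out while susceptible hosts remain.

```python
# Minimal sketch of the threshold-density ("burnout") argument, assuming a
# closed SIR model. All parameter values are hypothetical, for illustration only.


def r0_density_dependent(beta: float, gamma: float, host_density: float) -> float:
    """Mass-action transmission: the invasion ratio grows with host density."""
    return beta * host_density / gamma


def r0_frequency_dependent(beta: float, gamma: float) -> float:
    """Frequency-dependent transmission: the invasion ratio ignores host density."""
    return beta / gamma


def threshold_density(beta: float, gamma: float) -> float:
    """Host density below which a density-dependent pathogen cannot invade."""
    return gamma / beta


def simulate_sir(beta: float, gamma: float, s0: float, i0: float,
                 dt: float = 0.1, steps: int = 5000) -> tuple[float, float]:
    """Forward-Euler integration of dS/dt = -beta*S*I, dI/dt = beta*S*I - gamma*I.

    Returns the final (susceptible, infected) densities.
    """
    s, i = s0, i0
    for _ in range(steps):
        new_infections = beta * s * i * dt
        removals = gamma * i * dt  # hosts that die or clear the infection
        s -= new_infections
        i += new_infections - removals
    return s, i


if __name__ == "__main__":
    beta, gamma = 0.002, 0.1  # hypothetical transmission and removal rates
    print(f"threshold density: {threshold_density(beta, gamma):.1f}")
    for density in (200, 40):
        r0 = r0_density_dependent(beta, gamma, density)
        print(f"density {density}: R0 = {r0:.2f}, invades = {r0 > 1}")
    beta_f = 0.3  # hypothetical frequency-dependent transmission rate
    print(f"frequency-dependent R0 = {r0_frequency_dependent(beta_f, gamma):.2f} at any density")
    s_end, i_end = simulate_sir(beta, gamma, s0=200, i0=1)
    print(f"after the epidemic: S = {s_end:.2f}, I = {i_end:.2e}")
```

In the simulated run the infection dies out while a small but positive number of susceptible hosts survives, which is the deterministic "cannot drive its host to extinction" result; the sketch says nothing about stochastic effects or reservoir hosts, which the card flags as exceptions.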

Their impact is hype and out of context. Their impact card is about the chytrid fungus, which has several problems. First, fungi spread through spores that require air to live, which means these deadly fungi are found only on land, so exploring the ocean can’t solve. Second, the chytrid fungus only infects amphibians and therefore can’t cause human extinction. Third, the chytrid fungus has been infecting amphibians for over 50 years and there has not been any serious extinction scenario. Finally, current research into fungal pathogens solves.

Science Codex 12 (science news website, “Preserved Frogs Hold Clues to Deadly Pathogen,” June 20, 2012, http://www.sciencecodex.com/preserved_frogs_hold_clues_to_deadly_pathogen-93651)//rh

A Yale graduate student has developed a novel means for charting the history of a pathogen deadly to amphibians worldwide. Katy Richards-Hrdlicka, a doctoral candidate at the Yale School of Forestry & Environmental Studies, examined 164 preserved amphibians for the presence of Batrachochytrium dendrobatidis, or Bd, an infectious pathogen driving many species to extinction. The pathogen is found on every continent inhabited by amphibians and in more than 200 species. Bd causes chytridiomycosis, which is one of the most devastating infectious diseases to vertebrate wildlife. Her paper, "Extracting the Amphibian Chytrid Fungus from Formalin-fixed Specimens," was published in the British Ecological Society's journal Methods in Ecology and Evolution and can be viewed at http://onlinelibrary.wiley.com/doi/10.1111/j.2041-210X.2012.00228.x/full. Richards-Hrdlicka swabbed the skin of 10 species of amphibians dating back to 1963 and preserved in formalin at the Peabody Museum of Natural History.

Those swabs were then analyzed for the presence of the deadly pathogen. The frog being swabbed is a Golden Toad (Cranopsis periglenes) of Monteverde, Costa Rica. The species is extinct as a result of a lethal Bd infection. "I have long proposed that the millions of amphibians maintained in natural-history collections around the world are just waiting to be sampled," she said. The samples were then analyzed using a highly sensitive molecular test called quantitative polymerase chain reaction (qPCR) that can detect Bd DNA, even from specimens originally fixed in formalin. Formalin has long been recognized as a potent chemical that destroys DNA. "This advancement holds promise to uncover Bd's global or regional date and place of arrival, and it could also help determine if some of the recent extinctions or disappearances could be tied to Bd," said Richards-Hrdlicka.

"Scientists will also be able to identify deeper molecular patterns of the pathogen, such as genetic changes and patterns relating to strain differences, virulence levels and its population genetics." Richards-Hrdlicka found Bd in six specimens from Guilford, Conn., dating back to 1968, the earliest record of Bd in the Northeast. Four other animals from the 1960s were infected and came from Hamden, Litchfield and Woodbridge. From specimens collected in the 2000s, 27 infected with Bd came from Woodbridge and southern Connecticut.

In other related work, she found that nearly 30 percent of amphibians in Connecticut today are infected, yet show no outward signs of infection. Amphibian populations and species around the world are declining or disappearing as a result of land-use change, habitat loss, climate change and disease. The chytrid fungus, caused by Bd, suffocates amphibians by preventing them from respiring through their skin. Since Bd's identification in the late 1990s, there has been an intercontinental effort to document amphibian populations and species infected with it. Richards-Hrdlicka's work will enable researchers to look to the past for additional insight into this pathogen's impact.

2NC Extension Health Problems

1.

Nanotechnology has been linked to numerous health problems

Philip E. Ross, May 1, 2006 (Guest contributor for MIT Tech Review, http://www.technologyreview.com/featuredstory/405743/tinytoxins/page/3/, “Tiny Toxins?” SRB)

It was just the type of event that many in the nanotechnology community have feared – and warned against. In late March, six people went to the hospital with serious (but nonfatal) respiratory problems after using a German household cleaning product called Magic Nano. Though it was unclear at the time what had caused the illnesses – and even whether the aerosol cleaner contained any nanoparticles – the events reignited the debate over the safety of consumer products that use nanotechnology. The number of products fitting that description has now topped 200, according to a survey published in March by the Project on Emerging Nanotechnologies in Washington, DC. Among them are additives that catalyze combustion in diesel fuel, polymers used in vehicles, high-strength materials for tennis rackets and golf clubs, treated stain-resistant fabrics, and cosmetics. These products incorporate everything from buckyballs – soccer ball-shaped carbon molecules named after Buckminster Fuller – to less exotic materials such as nanoparticles of zinc oxide. But they all have one thing in common: their “nano” components have not undergone thorough safety tests.

Nanoparticles, which are less than 100 nanometers in size, have long been familiar as byproducts of combustion or constituents of air pollution; but increasingly, researchers are designing and synthesizing ultrasmall particles to take advantage of their novel properties.

Most toxicologists agree that nanoparticles are neither uniformly dangerous nor uniformly safe, but that the chemical and physical properties that make them potentially valuable may also make their toxicities differ from those of the same materials in bulk form.

One of the reasons for concern about nanoparticles’ toxicity has to do with simple physics.

For instance, as a particle shrinks, the ratio of its surface area to its mass rises. A material that’s seemingly inert in bulk thus has a larger surface area as a collection of nanoparticles, which can lead to greater reactivity. For certain applications, this is an advantage; but it can also mean greater toxicity. “The normal measure of toxicity is the mass of the toxin, but with nanomaterials, you need a whole different set of metrics,” says Vicki Colvin, a professor of chemistry at Rice University in Houston and a leading expert on nanomaterials.

Beyond the question of increased reactivity, the sheer tininess of nanoparticles is itself a cause for concern. Toxicologists have known for years that relatively small particles could create health problems when inhaled. Researchers have found evidence that the smaller particles are, the more easily they can get past the mucous membranes in the nose and bronchial tubes to lodge in the alveoli, the tiny sacs in the lungs where carbon dioxide in the blood is exchanged for oxygen.

In the alveoli, the particles face the white-cell scavengers known as macrophages, which engulf them and clear them from the body. But at high doses, the particles overload the clearance mechanisms.

It is the potential growth, however, of technologies involving precisely engineered nanoparticles, such as buckyballs and their near cousins, carbon nanotubes, and the use of these new materials in consumer products that has made the question of toxicity particularly urgent. In addition to questions about how easily nanoparticles can penetrate the body, there is also debate over where they could end up once inside. Günter Oberdörster, a toxicologist at the University of Rochester, found that various kinds of carbon nanoparticles, averaging 30 to 35 nanometers in diameter, could enter the olfactory nerve in rodents and climb all the way up to the brain. “There is a possibility that because of their small size, nanoparticles can reach sites in the body that large particles cannot, cross barriers, and react,” says Oberdörster. In 2004, Oberdörster’s daughter, Eva Oberdörster, a toxicology researcher at Duke University, put largemouth bass into water containing buckyballs at the concentration of one part per million. After two days, the lipids in the brains of the fish showed 17 times as much oxidative damage as those of unexposed fish.

Carbon nanotubes, which are basically cylindrical versions of the spherical buckyballs, are one of the stars of nanotech, with potential uses in everything from solar cells to computer chips. But in 2003, researchers at NASA’s Johnson Space Center in Houston, headed by Chiu-Wing Lam, showed that in the lungs of mice, carbon nanotubes caused lesions that got progressively worse over time. Under the conditions of the experiment, the researchers concluded, carbon nanotubes were “much more toxic than carbon black [that is, soot] and can be more toxic than quartz, which is considered a serious occupational health hazard.”

Another extremely promising nanoparticle is the fluorescent “quantum dot,” now being explored for use in bioimaging. Researchers envision applications in which they tag the glowing nanodots with antibodies, inject them into subjects, and watch as they selectively highlight certain tissues or, say, tumors. Quantum dots are typically made of cadmium selenide, which can be toxic as a bulk material, so researchers encase them in a protective coating. But it is not yet known whether the dots will linger in the body, or whether the coating will degrade, releasing its cargo.

Sensible regulation of nanoparticles will require new methods for assessing toxicity, which take into account the qualitative differences between nanoparticles and other regulated chemicals. Preferably, those methods will be generally applicable to a wide spectrum of materials. Today’s assays are not adequate for the purpose, says Oberdörster. “We have to formalize a tiered approach,” he says, “beginning with noncellular studies to determine the reactivity of particles, then moving on to in vitro cellular studies, and finally in vivo studies in animals. We have to establish that some particles are benign and others are reactive, then benchmark new particles against them.”

Separately testing every newly developed type of nanoparticle would be a Herculean task, so Rice’s Colvin wants to develop a model that indicates whether a particular nanoparticle deserves special screening. “My dream is that there would be a predictive algorithm that would say, for a certain size and surface coating, this particular type of material is one you’d want to stay away from,” she says. “We should be able to do it, with the advance we have made in computing power, but we have to ask the right questions. For instance, is it acute cytotoxicity, or is it something else?”

Amidst all the uncertainty about evaluating nanoparticles’ toxicity, regulatory agencies are in something of a quandary. In the United States, the Food and Drug Administration will assess medical products that incorporate nanoparticles, such as the quantum dots now being tested in animals; the Occupational Safety and Health Administration is responsible for the workplace environment in the factories that make the products involving nanoparticles; and the Environmental Protection Agency looks at products or chemicals that broadly permeate the environment, like additives to diesel fuel. In principle, these federal agencies have sweeping power over nanomaterials, but at the moment, their traditional focus, their limited resources, and the sheer lack of test tube and clinical data make effective oversight next to impossible. For example, the National Institute for Occupational Safety and Health, the part of the Centers for Disease Control and Prevention in Atlanta responsible for studying and tracking workplace safety, acknowledges that “minimal information” is available on the health risks of making nanomaterials. The agency also points out that there are no reliable figures on the number of workers exposed to engineered nanomaterials.

The EPA seems further along. In its draft “Nanotechnology White Paper,” issued in December, it proposed interagency negotiations to hammer out standards and pool resources. It acknowledged that at present, some nanoparticles that should be under its review are not, because they are not included in the inventory of chemicals controlled under the Toxic Substances Control Act. The EPA must defend the safety not only of human beings but of the natural environment – plants and ecological systems that may be exposed to a regulated material. There is scant data on the effects of nanomaterials in the environment, but some of it is troubling.

One study, for example, showed that alumina nanoparticles, which are already commonly used, inhibit root growth in some plants.

In a report written for the Project on Emerging Technologies, J. Clarence Davies, assistant administrator for policy, planning, and evaluation at the EPA from 1989 to 1991, advocates passing a new law assigning responsibility for nanomaterial regulation to a single interagency regulatory authority. Davies would also require manufacturers to prove their nanotech products safe until enough evidence had been gained to warrant exemptions. But some executives in the nanotech industry cringe at the prospect of such regulations. Alan Gotcher, head of Altair Nanotechnologies, a manufacturer in Reno, NV, that makes various types of nanoparticles, testified before the U.S. Senate in February and cited the Davies report. “To fall into ‘a one-size-fits-all’ approach to nanotechnology,” he said, “is irresponsible and counterproductive.” Gotcher would prefer a government-funded effort to amass the necessary data and build the necessary models before setting any standards.

It is doubtful, however, that the nanotech community will stop developing new products, or that the public will stop buying them, while awaiting a new regulatory framework that could take years and millions of dollars to finalize. While few agree on how to efficiently determine the toxicity of nanoparticles, or how to regulate them, nearly everyone agrees on the urgency of quickly tackling both questions. The use of nanoparticles in consumer products like cosmetics and cleaners represents only a tiny sliver of nanotech’s potential, but any unresolved safety concerns could cast a huge shadow. “If I was someone producing these materials, I would be afraid that one health problem, anywhere, would hurt the entire industry,” says Peter Hoet, a toxicologist at the Catholic University of Leuven, in Belgium. The large consumer corporations DuPont and Procter and Gamble participated in a study on nanoparticles’ toxicity. But the nanotech community needs to put pressure on manufacturers using the “nano” label for marketing purposes to stand up and take responsibility for their products. That means contributing resources and money to toxicity studies and freely disclosing which nanotechnologies they are relying on.

Warming

1NC

1.

CO2 is the ONLY solution to stop the impending food crisis that will kill millions.

Idso, 11 (Craig D., PhD, Center for the Study of Carbon Dioxide and Global Change, 6/15/11, Center for the Study of Carbon Dioxide and Global Climate Change, “Estimates of Global Food Production in the Year 2050: Will We Produce Enough to Adequately Feed the World?” AS)

As indicated in the material above, a very real and devastating food crisis is looming on the horizon, and continuing advancements in agricultural technology and expertise will most likely not be able to bridge the gap between global food supply and global food demand just a few short years from now.

However, the positive impact of Earth’s rising atmospheric CO2 concentration on crop yields will considerably lessen the severity of the coming food shortage. In some regions and countries it will mean the difference between being food secure or food insecure; and it will aid in lifting untold hundreds of millions out of a state of hunger and malnutrition, preventing starvation and premature death.

For those regions of the globe where neither enhancements in the techno-intel effect nor the rise in CO2 are projected to foster food security, an Apollo moon-mission-like commitment is needed by governments and researchers to further increase crop yields per unit of land area planted, nutrients applied, and water used. And about the only truly viable option for doing so (without taking enormous amounts of land and water from nature and driving untold numbers of plant and animal species to extinction) is to have researchers and governments invest the time, effort and capital needed to identify and to prepare for production the plant genotypes that are most capable of maximizing CO2 benefits for important food crops.

Rice, for example, is the third most important global food crop, accounting for 9.4% of global food production. Based upon data presented in the CO2 Science Plant Growth Database, the average growth response of rice to a 300-ppm increase in the air’s CO2 concentration is 35.7%. However, data obtained from De Costa et al. (2007), who studied the growth responses of 16 different rice genotypes, revealed CO2-induced productivity increases ranging from -7% to +263%. Therefore, if countries learned to identify which genotypes provided the largest yield increases per unit of CO2 rise, and then grew those genotypes, it is quite possible that the world could collectively produce enough food to supply the needs of all its inhabitants. But since rising CO2 concentrations are considered by many people to be the primary cause of global warming, we are faced with a dilemma of major proportions.

If proposed regulations restricting anthropogenic CO2 emissions (which are designed to remedy the potential global warming problem) are enacted, they will greatly exacerbate future food problems by reducing the CO2-induced yield enhancements that are needed to supplement increases provided by advances in agricultural technology and expertise. And as a result of such CO2