What is response to intervention?

Ingham ISD

RtI Initiative

November 6, 2009

Terri Metcalf

MiBLSi/Ottawa Area ISD

Terri Metcalf

Michigan’s Integrated Behavior and Learning Support Initiative (MiBLSi)

Technical Assistance Partner

Ottawa Area, Kent and Ionia ISDs

tmetcalf@oaisd.org

(616) 738-8940 ext 4112

www.cenmi.org/miblsi

www.oaisd.org/earlyintervention

What is RtI?

Memory box activity

Draw rectangle

What concepts, terms, vocabulary, theory, models, questions do you remember about RtI?

What is response to intervention?

Response to intervention is . . .

RtI

RTI

Three-tier model

Problem solving model

The Triangle model

Response to intervention is not . . .

Special education eligibility

Pre-referral model

Tier 2 pull-out

Just for reading

Just for learning disabilities

DIBELS

RtI in 6 “easy” steps . . .

1. Implement scientifically based general education instructional methods

2. Collect benchmarks of all student performance 3 times a year (“school-wide or universal screening”)

3. Identify students below benchmark

4. Provide scientifically based small-group instruction to students below benchmark (“tiered model of instruction”)

5. Monitor student progress (“progress monitoring”)

6. Review, revise or discontinue small-group instruction based on progress (“data-based decision making”)

RtI is . . .

. . . the practice of providing high-quality instruction and interventions matched to student need, monitoring progress frequently to make decisions about changes in instruction or goals and applying child response data to important educational decisions.

NASDSE, RtI: Policy Considerations and Implementation, 2005 (emphasis added).

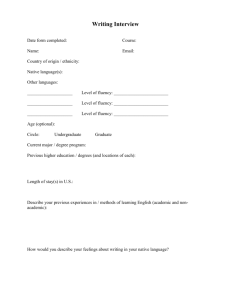

What to expect . . .

Reading research

◦ Beginning reading (K-3)

◦ Secondary reading (4+)

Role of assessment in reading instruction and Response to Intervention models

◦ Different types of assessments

◦ Curriculum-based measurement

Administration and scoring of Oral Reading Fluency and Maze

Logistics

Fidelity of the data

Exploring resources and online training materials on Aimsweb

What Makes a Big Idea a Big Idea?

A Big Idea is:

◦ Predictive of reading acquisition and later reading achievement

◦ Something we can do something about, i.e., something we can teach

◦ Something that improves outcomes for children if/when we teach it

© 2004, Dynamic

Measurement Group


“Big ideas” in reading instruction

Beginning reading (K-3)

◦ Phonemic awareness

◦ Alphabetic principle

◦ Accuracy and fluency with connected text

◦ Vocabulary

◦ Comprehension

Adolescent reading (4+)

◦ Word attack

◦ Fluency

◦ Vocabulary

◦ Comprehension

◦ Student engagement and motivation

Phonemic Awareness

The awareness and understanding of the sound structure of our language

Understanding that spoken words are made up of sequences of individual speech sounds

“cat” is composed of the sounds /k/ /a/ /t/


Why Phonemic Awareness?

Phonemic awareness is essential to learning to read in an alphabetic writing system.

◦ Words are made up of sounds/phonemes.

◦ Letters represent sounds/phonemes.

Without phonemic awareness, phonics makes little sense.


When do most students master phonemic awareness?

Greatest gains are between kindergarten and first grade (Chafouleas, Lewandowski, Smith, & Blachman, 1997; Fox & Routh, 1975; Liberman, Shankweiler, Fischer, & Carter, 1974)

One study found that 90% of students had mastery by age 7 (Chafouleas et al., 1997)

Beginning phonological awareness is needed to facilitate reading; once reading is established, each promotes the other (Daly, Chafouleas, & Skinner, Interventions for Reading Problems)

Read the following word to a partner: hypsilophodonts

The Children’s Dinosaur Encyclopedia, 2000.


Alphabetic Principle

Based on two parts:

◦ Alphabetic Understanding: Letters represent sounds in words.

◦ Phonological Recoding: Letter sounds can be blended together, and knowledge of letter-sound associations can be used to read/decode words.

Why Alphabetic Principle?

Letter-sound knowledge is prerequisite to word identification.

A primary difference between good and poor readers is the ability to use letter-sound correspondences to decode words.

Letter-sound knowledge can be taught.

Teaching the alphabetic principle leads to gains in reading acquisition/achievement.


Accuracy and Fluency with connected text

◦ Automaticity with fundamental skills so that reading occurs quickly and effortlessly

e.g., driving a car, playing a musical instrument, playing a sport


Why Fluency?

Fluency provides a bridge between word recognition and comprehension (National Institute for Literacy, 2001).

Proficient readers are so automatic with each component skill (phonological awareness, decoding, vocabulary) that they focus their attention on constructing meaning from the print (Kuhn & Stahl, 2000).

If a reader has to spend too much time and energy figuring out what the words are, she will be unable to concentrate on what the words mean (Coyne, Kame’enui, & Simmons, 2001).

Vocabulary

◦ Understanding and use of words:

Ability to produce a specific word for a particular meaning

Ability to understand spoken/written words


Why Vocabulary?

Learning is profoundly dependent upon vocabulary knowledge.

Children who enter kindergarten with limited vocabulary knowledge start behind their peers and grow much more discrepant from peers over time.

There are effective strategies for teaching vocabulary to children.

Teaching vocabulary increases children’s reading comprehension.


Comprehension

◦ The process of getting meaning from spoken language and/or print


Why Comprehension?

Comprehension is the essence of reading.

Through comprehension, meaning is constructed and students begin to read to learn.

Children can be taught comprehension strategies.

Comprehension instruction improves reading achievement and academic success.


Adolescent Big Ideas

Practice Guide for Adolescent Literacy

http://ies.ed.gov/ncee/wwc

Adolescent big ideas

◦ Word attack

◦ Fluency

◦ Vocabulary

◦ Comprehension

◦ Student engagement and motivation

Recommendations for adolescent literacy:

1. Provide explicit vocabulary instruction

2. Provide direct and explicit comprehension instruction

3. Provide opportunities for extended discussion of text meaning and interpretation

4. Increase student motivation and engagement in literacy learning

5. Make available intensive and individualized interventions for struggling readers that can be provided by trained specialists

Improving Adolescent Literacy: Effective Classroom and Intervention Practices (2008).

Team time

What do you currently use in 1st grade in your district to screen for the big ideas in reading?

5th or 6th grade?

Assessments for different purposes

Outcome assessments - Provide a bottom-line evaluation of the effectiveness of the reading program in relation to established performance levels

Screening assessments - Designed as a first step in identifying children who may be at high risk for delayed development or academic failure and in need of further diagnosis of their need for special services or additional reading instruction

Assessments for different purposes, cont’d

Diagnostic assessments - Help teachers plan instruction by providing in-depth information about students' skills and instructional needs.

Progress monitoring assessments - Determine through frequent measurement if students are making adequate progress or need more intervention to achieve grade-level reading outcomes.

Outcome | Screening | Diagnostic | Progress Monitor

School-wide or universal screening

What is it?

An assessment measure in an academic area, used to look at group and individual performance on a specific skill (e.g. reading)

Should provide information on two questions:

1. Is our core curriculum working?

2. Which students need further intervention?

Essential components of schoolwide screening measure

Accurate (reliable and valid)

Efficient

Based on mastery of skills vs performance of peers

Provide cut point or goal

What is Curriculum-based measurement or CBM?

CBM is a method of monitoring progress through direct, continuous assessment of basic skills

CBM probes require about 1 to 4 minutes to complete, depending on the skill

CBM probes can be given frequently to provide continuous progress data

Can graph results to look for trends in the data

Adapted from Shinn, Shinn & Langell.

CBM is available for:

*oral reading

*reading comprehension

*math computation

*written expression

*spelling

Origins of CBM

Originated by Stanley Deno and others at the University of Minnesota Institute for Research on Learning Disabilities

Looking for a way to evaluate basic skills

Supported by over 30 years of school-based research

CBM is endorsed by the USDOE as a method for monitoring student progress

Adapted from Shinn, Shinn & Langell.

Advantages of CBM

Direct measure of student performance

Focus is on repeated measures of performance

Simple, accurate, efficient, generalizable

Designed to be sensitive to growth over time

Adapted from Shinn, Shinn & Langell.

What are some General Outcome Measures from other fields?

Medicine?

Wall Street?

McDonald’s?

Adapted from Shinn, Shinn & Langell.

Can it predict performance on the MEAP?

Nine states have looked at CBM-Reading and its ability to predict a “pass” on their state test

All found the correlation between CBM-Reading and the state test to be statistically significant or high

Cut scores for predicting pass in Grade 3:

Ax & Bradley-Klug, Aimsweb website, research.

Things to remember about CBM:

Designed to be “indicators” of basic skills

Probes are all standardized

(administered, scored and interpreted the same way for all students)

Sensitive to individual growth

More information on validity

See Appendix C, Aimsweb Training Workbook

Four page jigsaw activity on Appendix C

Administration and scoring of Oral Reading Fluency

Student reads aloud for one minute

Examiner listens to student read and notes any errors

Student’s score is number of correct words in one minute

Administration and Scoring Procedures (Oral reading fluency)

You’ll need:

1. Materials – reading passages, stopwatch

2. Standardized directions

3. Scoring rules to obtain correct words read per minute

Administration directions

1. Place the unnumbered copy in front of the student.

2. Your copy is numbered.

3. Say “When I say ‘begin’, start reading aloud at the top of this page. Read across the page. Try to read each word. If you come to a word you don’t know, I’ll tell it to you. Be sure to do your best reading. Are there any questions? (Pause) Begin.”

4. Follow along on your copy. Put a slash ( / ) through words read incorrectly.

5. At the end of one minute, place a bracket ( ] ) after the last word and say, “Stop”.

6. Score and summarize by writing Words Read Correct (WRC) and errors.

Scoring rules, p. 32, Aimsweb Training Workbook

Correctly pronounced words are correct

Words self-corrected within 3 seconds are correct

Repeated words are counted as correct

Don’t take off for dialect

Inserted words are ignored

Errors:

Mispronounced or substituted words

Omitted words

Hesitations ( > 3 seconds)

Reversals

Some scoring hints . . .

If a student doesn’t begin in the first 3 seconds, give them the first word

If a student skips a line, the missed words count as errors

If a student has more than 5 errors in the first line, discontinue

Watch titles! If the title is included in the word count, the student needs to read it

Tips

It’s about testing, not teaching

It’s about best, versus fastest, reading

If possible, sit across from, not beside the student

Aimsweb Training Workbook, p. 10

Oral Reading Fluency Scoring

Score = words read correct (WRC) / errors

Give three passages and take the median score and the median number of errors

67/2

85/8

74/9

Why median versus average?

Averages are pulled by outliers; the median is more statistically reliable
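As a rough illustration of the median rule, the sketch below takes the three passage scores listed above and returns the median words read correct and the median errors. It assumes the two medians are taken independently of each other; the function name `orf_benchmark_score` is hypothetical and not from the Aimsweb materials.

```python
from statistics import median


def orf_benchmark_score(passages):
    """Return (median WRC, median errors) for a list of (wrc, errors) pairs.

    Assumption: the median WRC and the median error count are computed
    separately, so they may come from different passages.
    """
    wrc_values = [wrc for wrc, _ in passages]
    error_values = [errors for _, errors in passages]
    return median(wrc_values), median(error_values)


# The three passages from the slide: 67/2, 85/8, 74/9
scores = [(67, 2), (85, 8), (74, 9)]
print(orf_benchmark_score(scores))  # (74, 8)
```

With these numbers the reported score would be 74/8. A single unusually high or low passage (such as the 85) does not move the median the way it would move an average.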

Practice Session

Groups of two

Look through Qualitative Features Checklist on page 19

Look through Accuracy of Implementation Scale on p. 20

One person is Examiner (See Practice exercises, p. 21)

One person is Reader (See Answer Key, p. 29)

Do a few exercises, then switch

Maze

Approximately 150-400 word grade level passage

First and last sentence are left intact

Every seventh word is replaced by a group of three words

Student is asked to select the original word (circle it) from among three to five distractors

Administration and scoring: Reading Maze

Group administered

Give them the practice test (especially with younger students)

Say the standardized directions, see Maze Training workbook, p. 14

Start watch

Monitor students to make sure they are circling, not writing

Stop students after 3 minutes

Administration and scoring: Reading Maze

Training Workbook, p. 16

Answer is correct if student circles the word that matches the correct word on the scoring template

Answer is incorrect if student (1) circles an incorrect word or (2) omits a word selection

Administration and scoring: Reading Maze

Training Workbook, p. 16

Scoring:

Count the total number of items up to last circled word

Compare student answers to correct answers on template. Mark slash ( / ) through incorrect.

Subtract incorrect from total number attempted

Record score as total number of correct answers followed by errors (e.g. 35/2)
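The scoring steps above can be sketched in a few lines. This is only an illustration: the function name `score_maze` and the use of `None` to represent a skipped item are assumptions, not part of the Training Workbook.

```python
def score_maze(student_choices, answer_key):
    """Score a Maze probe; return (correct, incorrect).

    student_choices: the word circled for each item, in order, with None
    for an item where nothing was circled (hypothetical representation).
    answer_key: the correct word for each item, from the scoring template.
    """
    # Count items only up to the last circled word.
    circled = [i for i, choice in enumerate(student_choices) if choice is not None]
    attempted = circled[-1] + 1 if circled else 0

    # A wrong word or an omitted selection within that range is incorrect.
    incorrect = sum(
        1 for i in range(attempted) if student_choices[i] != answer_key[i]
    )
    correct = attempted - incorrect
    return correct, incorrect


choices = ["dog", "ran", None, "the", "big", None, None]
key = ["dog", "run", "to", "the", "big", "house", "was"]
correct, incorrect = score_maze(choices, key)
print(f"{correct}/{incorrect}")  # 3/2
```

In the example the student circled through the fifth item, so five items count as attempted; one wrong word and one skipped item give two errors and a recorded score of 3/2.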

Administration and scoring: Reading Maze

Training Workbook, p. 16

Prorating (for students who finish in less than 3 minutes)

Record their time

Convert their time to seconds (e.g. 2 minutes = 120 seconds)

Divide number of seconds by number correct (e.g. 120/40 = 3)

Divide the number of seconds in 3 minutes (180) by the calculated value (e.g. 180/3 = 60)
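The prorating arithmetic above can be written as a small helper. This is a sketch under the assumption that no rounding is applied; the name `prorate_maze` is hypothetical.

```python
def prorate_maze(correct, seconds_used, full_seconds=180):
    """Prorate a Maze score for a student who finished early.

    Follows the two divisions on the slide:
    seconds per correct item, then full time divided by that value.
    """
    if correct <= 0 or seconds_used <= 0:
        raise ValueError("correct and seconds_used must be positive")
    seconds_per_item = seconds_used / correct  # e.g. 120 / 40 = 3
    return full_seconds / seconds_per_item     # e.g. 180 / 3 = 60


# 40 correct in 2 minutes (120 seconds)
print(prorate_maze(40, 120))  # 60.0
```

The result is the same as scaling the score by the ratio of full time to time used (40 × 180 / 120 = 60).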

Administration and scoring of Maze

Training workbook, p. 9

What differences did you observe in Emma’s and Abby’s Maze performance?

What other conclusions can you draw?

Team Time: Logistics

How will you collect this data?

◦ Who will be on your assessment team?

◦ Who will be in charge of fidelity checks?

◦ Who will input the data into the website?

◦ How will you report the data back to the teachers? What training will they need in the beginning?

◦ How will you review the data as grade levels? As a building? As a district?

Use and interpretation of screening data

Use a Four Box Instructional Sort with your screening data

Group 1: Accurate and Fluent

Group 2: Accurate but Slow Rate

Group 3: Inaccurate and Slow Rate

Group 4: Inaccurate but High Rate
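One way to picture the four-box sort is as two yes/no questions per student: is the reading accurate, and is the rate at or above benchmark? The sketch below is illustrative only. The 95% accuracy cutoff, the rate cutoff of 70, and the function name `four_box_group` are all assumptions made for the example; the slides do not specify cut points, so teams would substitute their own.

```python
def four_box_group(wrc, errors, wrc_cutoff, accuracy_cutoff=0.95):
    """Return the four-box group number (1-4) for one student.

    wrc: words read correct in one minute
    errors: errors in one minute
    wrc_cutoff: benchmark rate for the grade and season (assumed input)
    accuracy_cutoff: assumed accuracy threshold, not from the slides
    """
    total_words = wrc + errors
    accuracy = wrc / total_words if total_words else 0.0
    accurate = accuracy >= accuracy_cutoff
    fluent = wrc >= wrc_cutoff

    if accurate and fluent:
        return 1  # Accurate and Fluent
    if accurate:
        return 2  # Accurate but Slow Rate
    if not fluent:
        return 3  # Inaccurate and Slow Rate
    return 4      # Inaccurate but High Rate


# Hypothetical benchmark of 70 WRC
for wrc, errors in [(85, 2), (67, 2), (30, 9), (74, 8)]:
    print(wrc, errors, four_box_group(wrc, errors, wrc_cutoff=70))
```

Sorting this way keeps the two dimensions separate, so a student who reads quickly but inaccurately is not grouped with one who reads accurately but slowly.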

Progress monitoring: What is it?

Assessment that determines if academic or behavior interventions are having the desired effect

Typically administered to students who did not meet the benchmark standard

Must align with the overall goal of the intervention

[Figure: GPS analogy for progress monitoring, labeled “GPS On”, “You are Here”, “Desired Course”, “Actual Course”, and “Port”]

Progress monitoring: What does it tell us?

Should provide a picture of student progress

◦ Graph form is very useful

Answers: Do we need to continue or revise the intervention for this student? How confident are you that the student will meet the next benchmark standard?

Typically use 3- or 4-point “decision” rules
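The slides do not spell out the decision rule, so the following is a sketch of one common formulation, stated here as an assumption: draw an aimline from the baseline score to the goal, then look at the most recent 3 or 4 data points. If all fall below the aimline, revise the intervention; if all fall above, consider raising the goal; otherwise continue. All names and the example numbers are hypothetical.

```python
def aimline_value(baseline, goal, total_weeks, week):
    """Expected score at a given week on a straight line from baseline to goal."""
    return baseline + (goal - baseline) * week / total_weeks


def decision(points, baseline, goal, total_weeks, n=4):
    """Apply an n-point decision rule (assumed formulation).

    points: list of (week, score) pairs in chronological order.
    """
    if len(points) < n:
        return "keep collecting data"
    recent = points[-n:]
    expected = [aimline_value(baseline, goal, total_weeks, w) for w, _ in recent]
    if all(score < exp for (_, score), exp in zip(recent, expected)):
        return "revise the intervention"
    if all(score > exp for (_, score), exp in zip(recent, expected)):
        return "consider raising the goal"
    return "continue the intervention"


# Hypothetical student: baseline 40 WRC, goal 70 WRC after 30 weeks
weekly_scores = [(1, 40), (2, 41), (3, 41), (4, 42)]
print(decision(weekly_scores, baseline=40, goal=70, total_weeks=30))
```

Passing `n=3` gives the 3-point version of the same rule.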

Progress monitoring:

Tier 1 or benchmark: Use screening and other assessment information, no need for more frequent progress monitoring

Tier 2 or strategic: Progress monitor in the deficit area approximately every 2 weeks (some sources say every 4 weeks)

Tier 3 or intensive: Progress monitor every week

Team Time

Progress monitoring

How might this work in your building?

Who would gather the information?

Who would they report it to? (e.g. teachers)

How would that data be reviewed and used?

Practice time with Aimsweb

Action planning

Conclusion

RtI is about systems change

Assessment is ONE PIECE of RtI

Usually a 3-5 year process

Look for the smallest change that will have the biggest impact

Thank you!

Terri Metcalf

Michigan’s Integrated Behavior and Learning Support Initiative (MiBLSi)

Technical Assistance Partner

Ottawa Area, Kent and Ionia ISDs

tmetcalf@oaisd.org

(616) 738-8940 ext 4112

www.cenmi.org/miblsi

www.oaisd.org/earlyintervention