Server Consolidation

Xiujiao Gao

xiujiaog@buffalo.edu

12/02/2011

Overview

• Introduction

• Server consolidation problems and solutions

– Static Server Allocation Problems (SSAP) and its

extensions[1]

– Shares and Utilities based Server Consolidation[2]

– Server Consolidation with Dynamic Bandwidth

Demand[3]

• Conclusion

2

Introduction

• Server Consolidation

– The process of combining the workloads of several

different servers or services on a set of target

(physical) servers

• The Gartner Group estimates that server utilization in data centers is below 20 percent.

[Figure: Server consolidation (VM 1, VM 2, VM 3, VM 4)]

3

Introduction

• Server Virtualization

– Provides the technical means to consolidate multiple servers, which increases the utilization of physical servers

– A virtual machine appears to a “guest” operating system as hardware, but it is simulated in a contained software environment by the host system

– Reduced deployment time and easier system management lead to lower hardware and operating costs

4


SSAP and its Extensions [1]

• Decision problems

• Available data in data centers

• Problem Formulation

• Complexity and Algorithms

• Experimental Setup

• Simulation Results

6

Decision Problems

• It applies to three widespread scenarios

– Investment decision

– Operational costs (i.e. energy, cooling and

administrative cost)

– Rack of identical blade servers (which subset of

servers to use)

• Minimize the sum of server costs $c_i$, where $c_i$ may stand for purchasing, maintenance, or administration cost, or the sum of them

7

Available Data in Data Centers

• Data centers reserve certain amounts of IT resources for each single service or server

– CPU capacity: SAPS or HP computons

– Memory: gigabytes

– Bandwidth: megabits per second

• Resource demand has seasonal patterns on a

daily, weekly or monthly basis

– large set of workload traces from their industry

partner

http://doi.ieeecomputersociety.org/10.1109/TSC.2010.25

8

An Example of Available Data

9

Available Data in Data Centers

• Workload traces can change over extended time periods

• IT service managers monitor workload developments regularly

• Servers are reallocated if necessary

• Models for initial and subsequent allocation

problems

10

Problem Formulation

• Static Server Allocation Problems (SSAP)

• Static Server Allocation Problem with variable

workload (SSAPv)

• Extensions of the SSAP

– Max-No. of services Constraints

– Separation Constraints

– Combination Constraints

– Technical Constraints and Preassignment Constraints

– Limit on the number of reallocations

11

SSAP

• n services $j \in J$ are to be served by m servers $i \in I$

• Different types of resources $k \in K$

• Server i has a certain capacity $s_{ik}$ of resource k

• $c_i$ describes the potential cost of server i

• Service j orders $u_{jk}$ units of resource k

• $y_i$ are binary decision variables indicating which servers are used

• $x_{ij}$ describes which service is allocated on which server

12

SSAP

The SSAP represents a single service’s resource demand

as constant over time (side constraints 2)
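
Using the notation defined on the previous slide, the model can be sketched as follows. This is a reconstruction from those definitions rather than a quotation of [1]; the constraint numbering is an assumption, chosen so that the capacity constraint is (2).

```latex
\begin{align*}
\min \quad & \sum_{i \in I} c_i \, y_i \\
\text{s.t.} \quad
  & \sum_{i \in I} x_{ij} = 1
      && \forall j \in J \tag{1} \\
  & \sum_{j \in J} u_{jk} \, x_{ij} \le s_{ik} \, y_i
      && \forall i \in I,\ \forall k \in K \tag{2} \\
  & y_i,\ x_{ij} \in \{0, 1\}
      && \forall i \in I,\ \forall j \in J
\end{align*}
```

Constraint (1) places every service on exactly one server, and constraint (2) keeps the constant demand $u_{jk}$ of the services on a server within its capacity, which is only available if the server is used ($y_i = 1$).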

13

SSAPv

• Consider variations in the workload

• Time is divided into a set of intervals T indexed by $t = 1, \ldots, r$

• $u_{jkt}$ describes how much capacity service j requires from resource type k in time interval t

• $u_{jkt}$ depends on the load characteristics of the servers to be consolidated
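
With time-indexed demands, the capacity constraint would plausibly be stated once per interval. The line below is a sketch derived from the SSAPv definitions on this slide, not a formula copied from [1]:

```latex
\begin{equation*}
\sum_{j \in J} u_{jkt} \, x_{ij} \le s_{ik} \, y_i
\qquad \forall i \in I,\ \forall k \in K,\ \forall t \in T
\end{equation*}
```

Because the assignment $x_{ij}$ does not depend on t, services whose peaks fall into different intervals can share a server even when the sum of their individual peaks would exceed its capacity.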

14

Extensions of SSAP

• Max No. of Services Constraints

• Separation Constraints

• Combination Constraints

• Technical Constraints

• Limits on the number of reallocations

15

Complexity and Algorithms

• SSAP is strongly NP-hard

• A straightforward proof reduces the multidimensional bin packing problem (MDBP) to SSAP

http://doi.ieeecomputersociety.org/10.1109/TSC.2010.25

• NP-hard does not necessarily mean that it is

intractable for practical problem sizes

• Open questions: which problem sizes can be solved exactly, and how far can heuristic solutions go in terms of both problem size and solution quality?

20

Complexity and Algorithms

• Polynomial-time approximation schemes

(PTAS) with worst-case guarantees on the

solution quality of MDBP have been published.

• The first important result was produced in

C. Chekuri and S. Khanna, “On Multi-Dimensional Packing Problems,”

Proc. ACM-SIAM Symp. Discrete Algorithms, pp. 185-194, 1999

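• For any fixed $\varepsilon > 0$, the algorithm delivers a $(1 + \varepsilon d + O(\ln \varepsilon^{-1}))$-approximate solution for constant d (d is the dimension of MDBP)

• The algorithm has two steps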

21

Algorithms for MDBP

• First step

– Solve a linear programming relaxation, which makes fractional assignments for at most dm vectors in d dimensions and m bins

• Second step

– The set of fractionally assigned vectors is assigned greedily, finding the largest possible set

22

Algorithms for SSAP(v)

• SSAP with only one resource

– Branch & Bound (SSAP B&B)

– First Fit (FF)

– First-Fit Decreasing (FFD)

• SSAPv

– Branch & Bound (SSAPv B&B)

– LP-relaxation-based heuristic (SSAPv Heuristic)

• Use the results of an LP-relaxation

• Use an integer program to find an integral assignment

(Compared to the PTAS)
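
The two simple heuristics in the list above can be sketched for the single-resource case as follows. This is a minimal illustration assuming identical servers; the function names and the list-of-lists return format are choices made here, not taken from [1].

```python
from typing import List


def first_fit(demands: List[int], capacity: int) -> List[List[int]]:
    """Put each demand on the first opened server that still has room."""
    servers: List[List[int]] = []  # demands placed on each opened server
    free: List[int] = []           # remaining capacity of each opened server
    for d in demands:
        if d > capacity:
            raise ValueError(f"demand {d} exceeds server capacity {capacity}")
        for i, room in enumerate(free):
            if d <= room:
                servers[i].append(d)
                free[i] -= d
                break
        else:
            # No opened server has enough room, so open a new one.
            servers.append([d])
            free.append(capacity - d)
    return servers


def first_fit_decreasing(demands: List[int], capacity: int) -> List[List[int]]:
    """First Fit applied after sorting the demands from largest to smallest."""
    return first_fit(sorted(demands, reverse=True), capacity)


if __name__ == "__main__":
    example = [2, 5, 4, 7, 1, 3, 8]
    print(len(first_fit(example, 10)), "servers with FF")
    print(len(first_fit_decreasing(example, 10)), "servers with FFD")
```

Sorting first tends to help because large services are placed while servers are still empty, and the small ones fill the gaps afterwards.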

23

Algorithms for SSAP(v)

• For SSAP B&B, SSAPv B&B and SSAPv Heuristic,

the number of servers used does have a

significant impact on the computation time

• Each additional server increases the number

of binary decision variables by n+1

• Use a specific iterative approach to keep the number of binary variables as low as possible

• A lower bound (LB) on the number of servers is obtained by assuming that all servers have the same capacity s and allowing fractional allocation of services

24

Algorithms for SSAP(v)

• Start to solve the problem with m being the LB

• If the problem is infeasible, m is incremented

by 1

• Repeat until a feasible solution is found

• The first feasible solution found in B&B search

tree is obviously an optimal solution
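
The iterative scheme above can be sketched for one resource and identical servers. The exhaustive search in `pack_into` is only a stand-in for the B&B feasibility check of [1] and is usable on tiny instances only; all names here are hypothetical.

```python
from typing import List, Optional


def lower_bound(demands: List[int], capacity: int) -> int:
    """LB on the server count: total demand over capacity, rounded up,
    as if services could be split across servers."""
    return -(-sum(demands) // capacity)


def pack_into(demands: List[int], capacity: int, m: int) -> Optional[List[int]]:
    """Search for an assignment of all services to m servers.

    Returns one server index per service (services sorted by decreasing
    demand), or None when no feasible assignment exists.
    """
    order = sorted(demands, reverse=True)
    free = [capacity] * m
    assignment: List[int] = []

    def place(j: int) -> bool:
        if j == len(order):
            return True
        tried = set()
        for i in range(m):
            # Skip servers that are too small or equivalent to one already tried.
            if order[j] > free[i] or free[i] in tried:
                continue
            tried.add(free[i])
            free[i] -= order[j]
            assignment.append(i)
            if place(j + 1):
                return True
            assignment.pop()
            free[i] += order[j]
        return False

    return assignment if place(0) else None


def min_servers(demands: List[int], capacity: int) -> int:
    """Start at the lower bound and add one server until the instance is feasible."""
    if not demands:
        return 0
    if max(demands) > capacity:
        raise ValueError("a single service exceeds the server capacity")
    m = lower_bound(demands, capacity)
    while pack_into(demands, capacity, m) is None:
        m += 1
    return m
```

For three services of size 6 and capacity 10 the lower bound is 2, the two-server problem is infeasible, and the loop stops at 3. Since every smaller m was shown to be infeasible, the first feasible m is optimal.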

25

Experimental Data

• Experimental Data (3 consecutive months measured in intervals of 5 minutes)

– 160 traces for the resource usage of Web/Application/Database servers (W/A/D)

– 259 traces describing the load of servers exclusively hosting ERP applications

– Resource demands are in terms of CPU and memory

– Strong diurnal seasonality for nearly all servers and some weekly seasonality

– CPU is the bottleneck resource for these types of applications

– CPU demand of ERP services is significantly higher than that of W/A/D

26

Data Preprocessing

• Data preprocessing: characterize the daily patterns in the workload traces discretely and solve the allocation problem as a discrete optimization problem

• Two-step process to derive the parameters $u_{jkt}$ for the optimization models from the original workload traces

– $u^{raw}_{jkt}$: original workload traces

– $u_{jkt}$: an estimator from the set of $u^{raw}_{jkt}$

27

Data Preprocessing

• First step

– Derive an estimator for each interval

– A day is the period of observation

– p: number of periods contained in the load data (p = 92)

– ϒ’: number of intervals in a single period (ϒ’ = 288)

– Derive $u_{jkt}$ from the above distribution

28

Data Preprocessing

The y-axis captures a sample of about 92 values

Risk attitude: the 0.95-quantile of $U_{jkt}$ is an estimator for the resource requirement of service j under which 95 percent of requests can be satisfied

29

Data Preprocessing

• Second step

– Aggregate these intervals to reduce the number of

parameters for the optimization
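
The two preprocessing steps can be sketched in plain Python for a single service and resource. Two assumptions are made here that the slides do not state: the quantile uses the nearest-rank convention, and aggregated intervals take the maximum of their members so that the estimate stays conservative.

```python
import math
from typing import List


def quantile(sample: List[float], q: float) -> float:
    """Nearest-rank empirical q-quantile of a sample (0 < q <= 1)."""
    ordered = sorted(sample)
    # The small epsilon guards against floating-point noise in q * n.
    rank = max(1, math.ceil(q * len(ordered) - 1e-9))
    return ordered[rank - 1]


def estimate_demand(raw: List[List[float]], q: float = 0.95) -> List[float]:
    """Step 1: raw[d][t] is the demand on day d in interval t.

    For each interval t, take the q-quantile over all observed days.
    """
    intervals = len(raw[0])
    return [quantile([day[t] for day in raw], q) for t in range(intervals)]


def aggregate(u: List[float], group: int) -> List[float]:
    """Step 2: merge every `group` consecutive intervals into one.

    Taking the maximum is an assumption of this sketch.
    """
    return [max(u[i:i + group]) for i in range(0, len(u), group)]
```

With 288 five-minute intervals per day, `aggregate(u, 12)` would give the 24 hourly parameters used later in the experiments.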

30

Experimental Design

• Experimental Design

– Model (SSAP and SSAPv)

– Algorithms (B&B, Heuristic, FF, FFD)

– Service type (W/A/D, ERP)

– Number of services

– Server capacity (CPU only)

– Risk attitude

– Number of time intervals considered in SSAPv

– Sensitivity with respect to additional allocation constraints

31

Experimental Design

• Experimental Design

– lp_solve 5.5.9: revised simplex and B&B

– COIN-OR CBC branch-and-cut IP solver with the CLP LP solver

– Java 1.5.0: FF and FFD

– Timeout is 20 minutes (already up to 700 servers)

32

Simulation Results

• Computation Time Depending on Problem Size

– Examine 24 time intervals

– 95th percentile of 5-minute intervals

– 5000 SAPS server capacity

– For each number of services, 20 instances have been sampled (with replacement)

– Number of services: x-axis

– Computation time: y-axis

– Proportion of instances solvable within 20 minutes: y-axis

33

Computation time Depending on

Problem Size

34

Computation time Depending on

Problem Size

35

Computation time Depending on

Problem Size

36

Proportion of solvable W/A/D instances

37

Proportion of solvable ERP instances

Only much smaller instances can be solved within 20 minutes compared with W/A/D services

38

Solution Quality Depending on

Problem Size

The computed number of required servers can exceed the lower bound on the number of servers

The ratio Q of the computed number to the lower bound is referred to as solution quality

The closer Q is to 1, the better the solution is

39

Solution Quality Depending on

Problem Size

40

Solution Quality Depending on

Problem Size

• W/A/D

• ERP

41

Impact of Risk Attitude on Solution Quality

• The previous simulations assumed that the decision maker selects the 95th percentile in data preprocessing

• That is, 95 percent of the historical service demand would have been satisfied without delay at this capacity

• Risk attitude

– Actual overbooking of server resources (aggregate

demands)

– More conservative estimate (reduction in variance)

• Analysis of capacity violations

– 10 different consolidation problems of 250 W/A/D

services

– Quantiles: 0.4, 0.45, ..., 1

– Use SSAPv B&B

42

Impact of Risk Attitude on Solution Quality

43

Influence of the Interval Size

SSAPv

SSAP

44

Influence of Additional Allocation

Constraints

• Upper bound on the number of services per server

– The number of servers increases

– Computation time increases

• Combination and separation constraints

– Little effect on the solution quality

– Negative impact on computation time

• Technical constraints

– Little effect on the number of servers needed

– Computation time decreases

45


Shares and Utilities based Server

consolidation [2]

• Min, max and shares

• Problem formulation

• Algorithms

– Basic Overprovision (BO)

– Greedy Max (GM)

– Greedy Min Max (GMM)

– Expand Min Max (EMM)

– Power Expand Min Max (PEMM)

– Hypothetical Upper Bound Algorithm (HUB)

• Experimental Evaluation

47

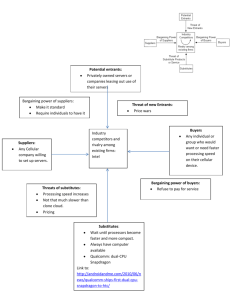

Min, Max and Shares

• Not all the applications are created equal.

• Different priority

– High priority applications : e-commerce web server

– Low priority applications: the intranet blogging server

• Different resource affinities

– Ex : web server may value additional CPU cycles much

more than a storage backup

• Under high load, CPU resources are best allocated to the higher-utility application, the web server

48

Min, Max and Shares

• Take advantage of the Min, Max and Shares

parameters

– Min: ensures that a VM receives at least that amount of resources when it is powered on

– Max: ensures that a low-priority application does not use more resources than that amount, keeping them available for high-priority applications

– Shares: advises the virtualization scheduler on how to distribute resources between contending VMs (e.g., a shares ratio of 1:4)
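
To make the interplay of the three parameters concrete, here is a simplified sketch for one contended resource on one host. It is an illustrative model only and not the scheduling algorithm of any real hypervisor: the assumption is that every VM first receives its Min, and the remainder is then split in proportion to Shares, with no VM exceeding its Max.

```python
from typing import List, Tuple

VM = Tuple[float, float, float]  # (min, max, shares), hypothetical layout


def share_out(capacity: float, vms: List[VM]) -> List[float]:
    """Give every VM its min, then split the rest by shares, capped at max."""
    alloc = [mn for mn, _, _ in vms]
    remaining = capacity - sum(alloc)
    if remaining < 0:
        raise ValueError("minimum reservations exceed capacity")
    active = [i for i, (mn, mx, _) in enumerate(vms) if mx > mn]
    while remaining > 1e-9 and active:
        total_shares = sum(vms[i][2] for i in active)
        handed_out = 0.0
        still_hungry = []
        for i in active:
            offer = remaining * vms[i][2] / total_shares
            room = vms[i][1] - alloc[i]
            give = min(offer, room)
            alloc[i] += give
            handed_out += give
            if room - give > 1e-9:
                still_hungry.append(i)
        remaining -= handed_out
        if len(still_hungry) == len(active):
            break  # nobody hit its max, so the whole remainder was handed out
        # Capacity left over by capped VMs is redistributed in the next round.
        active = still_hungry
    return alloc
```

With 100 units, two VMs with Min 10 and Max 100, and shares 1:4, the 80 units above the reservations are split 16 to 64, giving allocations of 26 and 74.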

49

Min, Max and Shares Impact Experiment

3 VMware ESX servers

12 VMs (6 low priority and 6 high priority)

– Low load: desire 35% of the total available CPU

– High load: desire 100% of the total available CPU

– Under high load conditions, MMS delivers 47%

more utility than BASE

50

Problem Formulation

• The set of VMs

– Vi.m minimum resources needed (CPU only)

– Vi.M maximum resources needed (CPU only)

– Vi.u utility derived from the VM when it is

allocated Vi.m

– Vi.U utility derived from the VM when it is

allocated Vi.M

• The set of physical servers

– Cj the CPU capacity of the server Sj

– Pj power cost for the server Sj if it is turned on

51

Problem Formulation

The set of VMs allocated to server Sj

52

Problem Formulation

• Maximize

• Subject to

Unique Multi-knapsack problem:

Items can be elastic between min and max

Try to find the best size

53

Algorithms-BO

Power-aware: choosing lower power cost per unit resource

First-fit

Packing VMs at their maximum requirements

Conservative use of 9/10 of servers’ capacities

Fail to choose higher utility VMs
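
The BO properties listed on this slide can be sketched as follows. The tuple layouts and names are hypothetical; only the ordering by power cost per unit of capacity, the first-fit placement at maximum size, and the 9/10 headroom come from the slide.

```python
from typing import Dict, List, Tuple


def basic_overprovision(
    vms: List[Tuple[str, float]],             # (vm name, max CPU requirement)
    servers: List[Tuple[str, float, float]],  # (server name, capacity, power cost)
    headroom: float = 0.9,
) -> Tuple[Dict[str, List[str]], List[str]]:
    """First-fit VMs at their max size onto the cheapest-per-unit servers first."""
    # Power-aware ordering: lowest power cost per unit of capacity first.
    ordered = sorted(servers, key=lambda s: s[2] / s[1])
    free = {name: headroom * cap for name, cap, _ in ordered}
    placement: Dict[str, List[str]] = {name: [] for name, _, _ in ordered}
    unplaced: List[str] = []
    for vm, need in vms:
        for name, _, _ in ordered:
            if need <= free[name]:
                placement[name].append(vm)
                free[name] -= need
                break
        else:
            unplaced.append(vm)  # BO does not look at utility when VMs are left out
    return placement, unplaced
```

The sketch never looks at VM utility, which mirrors the last point on the slide: which VMs end up unplaced depends only on arrival order and size.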

54

Algorithm-GM & GMM

• GM: Sorts the VMs by their profitability

– Provides higher utility for every unit of resource than BO

– Assigns the maximum requested allocation

– Some VMs are more profitable at another, smaller size

• GMM: Sorts the VMs by both Vi.U / Vi.M and Vi.u / Vi.m

– When it chooses the entry corresponding to Vi.U / Vi.M, it then deletes the entry Vi.u / Vi.m

55

Algorithm-GMM

• GMM

– Picks the right combination of VMs, especially when some VMs are more profitable at their min

– VMs fail to get additional resources even when the incremental value they provide beyond min is much higher than that of other VMs

– VMs fail to get more resources even when some nodes still have room left after first-fit placement
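
A compact sketch of the GMM idea may help: each VM contributes two candidate entries (at max and at min), the entries are sorted by utility per unit of resource, and a VM is placed first-fit at the size of the first of its entries that fits. The data layout and the single-resource view are assumptions of this sketch, not details from [2].

```python
from typing import Dict, List, Tuple


def greedy_min_max(
    vms: Dict[str, Tuple[float, float, float, float]],  # name -> (m, M, u, U)
    capacities: List[float],
) -> Dict[str, Tuple[int, float]]:
    """Return name -> (server index, allocated size) for every placed VM."""
    entries = []
    for name, (m, M, u, U) in vms.items():
        entries.append((U / M, name, M))  # profitability at max size
        entries.append((u / m, name, m))  # profitability at min size
    entries.sort(key=lambda e: e[0], reverse=True)

    free = list(capacities)
    placed: Dict[str, Tuple[int, float]] = {}
    for _, name, size in entries:
        if name in placed:
            continue  # the VM's other entry was already used
        for i, room in enumerate(free):
            if size <= room:
                placed[name] = (i, size)
                free[i] -= size
                break
        # If the entry does not fit anywhere, the VM's other entry stays in the list.
    return placed
```

In the example below, VM "a" is most profitable at its min and is packed at size 2, which leaves room for "b" at its max. Once placed, neither VM is expanded, which is the limitation described in the last two bullets above.

```python
print(greedy_min_max({"a": (2, 8, 6, 12), "b": (3, 6, 3, 12)}, [10]))
```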

56

Algorithms-EMM

• Does not place VMs in a first-fit fashion

• Compute an estimated utility for each node if the new VM were added to that node

• Choose the node that gives the best utility

• How to compute the utility of a node?

• Given a feasible set Q for Sj, use algorithm 2 to

estimate the utility of node Sj

57

Compute Node Utility

Expanding VMs that give the most

incremental utility per unit capacity until

either the node’s capacity is reached or

no more expansion is possible
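
The expansion step described above might look like the sketch below. Utility between min and max is interpolated linearly here, which is an assumption: the slides do not say how utility behaves between the two sizes. The tuple layout is also hypothetical.

```python
from typing import List, Optional, Tuple

VM = Tuple[float, float, float, float]  # (m, M, u, U)


def node_utility(capacity: float, vms: List[VM]) -> Optional[float]:
    """Estimate the utility of a node hosting `vms`.

    Every VM starts at its min size; leftover capacity is then given to the
    VMs with the highest incremental utility per unit of capacity.
    Returns None when even the min sizes do not fit.
    """
    used = sum(m for m, _, _, _ in vms)
    if used > capacity:
        return None
    utility = float(sum(u for _, _, u, _ in vms))
    room = capacity - used

    expandable = [v for v in vms if v[1] > v[0]]
    # Highest incremental utility per unit of capacity first.
    expandable.sort(key=lambda v: (v[3] - v[2]) / (v[1] - v[0]), reverse=True)
    for m, M, u, U in expandable:
        if room <= 0:
            break
        grow = min(M - m, room)
        utility += (U - u) * grow / (M - m)  # linear interpolation (assumption)
        room -= grow
    return utility
```

EMM would call such a function once per candidate node with the new VM added to the node's current set, and pick the node whose estimate is best.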

58

Algorithms-EMM

• Limitation of EMM

• It tends to use all the servers that it has access to (an empty server is considered whenever one is available)

• This is a disadvantage in terms of power costs

• How to detect whether to start a new node or

not?

59

Algorithms-PEMM

• One important change compared with EMM

• Use the node utility gain minus the proportional

power cost incurred on the new node as the

comparative measure

• If Sj is an already open node

• If Sj is a new node

60

Algorithms-HUB

• Provides an upper bound

– Relax multi-bin packing constraint by “lining up

the servers end-to-end”

– Allow single VMs to be placed over multiple

servers

– Charge only for each VM’s fractional power

consumption over its respective machines

• Allow achieving 100% capacity usage on each

machine thus providing an upper bound

61

Experimental Evaluation

• Large synthetic data center experiments

– Simulate thousands of VMs and hundreds of servers

– Utility and Min-Max inputs: normal distribution

– Power cost is a percentage of total utility received

• Real testbed experiments

– Three VMware ESX 3.5 servers

– 12 VMs run RedHat Enterprise Linux (RHEL)

– Low priority (shares 1) and high priority (shares 4), 6 VMs each

– Workloads in each VM were generated using HPCC

HPC Challenge Benchmark, http://icl.cs.utk.edu/hpcc/.

62

Utility under Different Number of Servers

MM: packing VMs at other sizes (compared with BO, which uses the maximum size)

150-200 servers: resources given to highly profitable VMs shrink in favor of fitting more of the less profitable VMs

200-300 servers: more room to expand high-utility VMs

300-350 servers: steady state in which all the VMs are placed; PEMM achieves higher utility

63

Number of VMs Excluded under Different

Number of Servers

All algorithms leave many VMs out up to 150 servers

PEMM and EMM are able to fit all the VMs earliest

64

Utility under Different Number of VMs (at

min) per Server

Lower numbers: more servers are needed, so power costs rise, leading to lower utility

Higher numbers: fewer servers are needed and utility goes up

PEMM yields the highest-utility placements

65

Utility under Different Standard Deviation

of Server Capacity

As the standard deviation increases, certain servers will have high capacity and a lower power-to-capacity ratio and will be filled first, so the utility goes up

66

Computation Time under Different VMs

EMM has a greater execution time as it attempts to use

many more servers than PEMM

PEMM can easily scale to larger systems

67

Utility under Different Percentage of

Low Priority Load

GMM places all VMs in a single server allocating only the

min amount of resources resulting in poor utility

PEMM uses fewer servers than EMM to generate more utility

68

Utility under Different Percentage

of Power Cost

As the power cost increases, it becomes increasingly expensive to use extra servers, causing drops in utility

PEMM does the best by adjusting to the increasing costs

69


Server Consolidation with Dynamic

Bandwidth Demand [3]

• Dynamic bandwidth demand: normal distribution

• Model the problem as Stochastic Bin Packing (SBP)

• Propose an online algorithm to solve SBP by which the number of servers required is within $(1+\varepsilon)(\sqrt{2}+1)$ of the optimal for any $\varepsilon > 0$

• Simulation Results

71

Dynamic Bandwidth Demand

• Normal Distribution

– The mean is positive

– The standard deviation is small enough compared with the mean

– The probability of bandwidth demand being negative is very small

72

Stochastic Bin Packing

The total size of the items in a bin follows a normal distribution whose mean and variance are the sums of the item means and item variances (assuming independent demands)

73

Stochastic Bin Packing

74

Stochastic Bin Packing

• Equivalent Size

• Classical Bin Packing:

– The number of bins used is the sum of the

item sizes plus the sum of the residual

capacity of each bin

– Pack each bin in a compact way so as to

reduce the residual capacity of each bin

75

Stochastic Bin Packing

• SBP

– Reducing the residual capacity does not

necessarily reduce the number of bins used

since the equivalent size can change

• Solution

– Reduce the residual capacity

– Reduce the equivalent size at the same time

while packing items
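
The effect described above can be illustrated with a small sketch. It assumes independent normal demands and a constraint of the form "the probability that a bin overflows is at most p", which for a normal total is equivalent to mean + β·std ≤ capacity with β the (1 − p)-quantile of the standard normal. Treating that quantity as the equivalent size of a bin's contents is an assumption of this sketch, and the first-fit packing is a simple baseline, not the online algorithm of [3].

```python
import math
from statistics import NormalDist
from typing import List, Tuple

Item = Tuple[float, float]  # (mean, standard deviation) of one VM's demand


def equivalent_size(items: List[Item], p: float) -> float:
    """Capacity needed so that the summed demand overflows with probability <= p."""
    beta = NormalDist().inv_cdf(1.0 - p)
    mean = sum(m for m, _ in items)
    std = math.sqrt(sum(s * s for _, s in items))
    return mean + beta * std


def first_fit_sbp(items: List[Item], capacity: float, p: float = 0.01) -> List[List[Item]]:
    """First Fit where 'fits' means the overflow probability stays within p."""
    bins: List[List[Item]] = []
    for item in items:
        for contents in bins:
            if equivalent_size(contents + [item], p) <= capacity:
                contents.append(item)
                break
        else:
            if equivalent_size([item], p) > capacity:
                raise ValueError("item does not fit into an empty bin")
            bins.append([item])
    return bins
```

Because standard deviations add in quadrature, the equivalent size of two items packed together is smaller than the sum of their individual equivalent sizes. This is why the grouping of items, and not only the residual capacity, affects the number of bins.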

76

Stochastic Bin Packing

• One way: 1-4 (full), 5-13 (full), 14-35

• Second way: 1-2 & 5-8 & 14 (full), 3-4 & 9-10 & 15 (full), the rest must be in two bins

• Both ways pack the bins as compactly as possible

• The total equivalent size in the second way is larger than in the first

77

Online Algorithm

• Find methods of dividing groups

– The items with both means and standard deviations

in the same interval belong to the same group

• Run Group Packing algorithm

78

Online Algorithm

• Two scenarios

– Finite possibilities of means

– Generic scenarios

• Worst case performance ratio

– B(L) is the number of bins used by the packing

algorithm

• First scenario

• Second scenario

79

Simulation Setup

• Use traffic dataset from global operational

data centers.

• 9K VMs; servers are equipped with 1 Gbps Ethernet cards

The bandwidth requirement for VM i

80

Simulation Results

• The number of servers used: 421 for our algorithm, 609 for HARMONIC

C. C. Lee and D. T. Lee, "A simple on-line bin-packing algorithm," J. ACM, vol.

32, no. 3, pp. 562-572, 1985

• Use

as the bandwidth requirement for VM i

• The number of servers becomes 402 for HARMONIC

• But the violation probability exceeds 0.01

81

Simulation Results

82

Simulation Setup

• Number of items: 2000-20,000

•

•

•

for FF and FFD

83

Simulation Results

84


Conclusion

• Except for SSAP, the approaches no longer use deterministic values to characterize the demands over time

– Some model the problem as an ILP while considering real-world constraints, then solve the ILP with heuristic algorithms [1]

– Some take advantage of the Min, Max and Shares features inherent in server consolidation [2]

– Some use SBP to consolidate servers with dynamic bandwidth demand [3]

86

References

[1] Speitkamp, B.; Bichler, M., “A Mathematical Programming Approach for Server Consolidation Problems in Virtualized Data Centers,” IEEE Transactions on Services Computing, vol. 3, no. 4, pp. 266-278, Oct.-Dec. 2010

[2] Cardosa, M.; Korupolu, M.R.; Singh, A., “Shares and utilities based power consolidation in virtualized server environments,” IM ’09. IFIP/IEEE International Symposium on Integrated Network Management, pp. 327-334, 1-5 June 2009

[3] Meng Wang; Xiaoqiao Meng; Li Zhang, “Consolidating virtual machines with dynamic bandwidth demand in data centers,” 2011 Proceedings IEEE INFOCOM, pp. 71-75, 10-15 April 2011

[4] Ying Song; Yanwei Zhang; Yuzhong Sun; Weisong Shi, “Utility analysis for Internet-oriented server consolidation in VM-based data centers,” CLUSTER ’09. IEEE International Conference on Cluster Computing and Workshops, pp. 1-10, Aug. 31-Sept. 4, 2009

[5] C. Chekuri and S. Khanna, “On Multi-Dimensional Packing Problems,” Proc. ACM-SIAM Symp. Discrete Algorithms, pp. 185-194, 1999

87

Questions and Answers

• Q1: How to understand the combination constraints in the extensions of SSAP? [1]

• Answer: If $x_{ie}$ is zero, service e is not allocated on server i, and from the formulation the allocation variables of the other services in the combination set must be zero as well; in other words, the other services are not allocated on server i either. If $x_{ie}$ is one, service e is allocated on server i, and all the other services have to be allocated on the same server i.

88

Questions and Answers

• Q2: Explain the constraints that limit the number of reallocations. [1]

• Professor Qiao explained this in a much easier way: reformulate the constraint as $n - \sum_{i,j} x_{ij} X_{ij} \le r$, where r is the limit on the number of reallocations and n is the size of the set of pairs with $X_{ij} = 1$ in the current allocation.

Questions and Answers

• Q3: How does GMM work if Vi.U / Vi.M is higher, but the corresponding max size cannot be satisfied? [2]

• If the max size cannot be satisfied, GMM does not allocate this service at max; it keeps traversing the list, leaving the corresponding Vi.u / Vi.m in the list.

90

Questions and Answers

• Q4: How are the utility inputs obtained in the Min, Max and Shares paper? [2]

• They use a normal distribution to generate the utility. First, they generate the utility at min, and then, on top of this, they derive the utility at max using a certain variance. For sizes between min and max, the paper does not explain the utility very clearly.

91

Questions and Answers

• Q5: How is the HUB bound obtained? [2]

• HUB is idealized. It allows VMs to use different servers in different time intervals and to be allocated fractionally, which real systems cannot do. If we assume that migration over time costs no time and that VMs can be fractionally allocated, we obtain this upper bound.

92

Questions and Answers

• Q6: Since the “dynamic bandwidth demand” paper is quite abstract and theoretical, just introduce the main ideas. [3]

• The core idea is to pack items as compactly as possible while also minimizing the equivalent size of the items. First, the items are divided into groups according to the intervals their mean and variance fall into, and then an online algorithm packs the items in each group. The authors proved a bound on the worst-case performance ratio.

93

Any Questions?

Thank you!