History of LEP data archiving: ALEPH Statement (1)


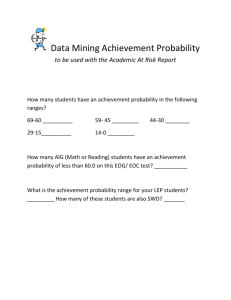

LEP data preservation
- 11 years of data taking
- 4 experiments
- Large luminosity
- ~1200 scientific papers

ALEPH data volumes (similar for the other experiments):
- Raw data: 5 TB
- DST: 800 GB
- Mini: 80 GB
- MC files: 16 TB + 9 TB

History of LEP data archiving (1)
- Interest triggered by the CERN directorate in 2000.
- Formal agreement between the LEP experiments and the IT department in 2001: http://committees.web.cern.ch/Committees/RB/RC.html
- Working group active until 2004, with partial success.

History of LEP data archiving (2)
Development by IT of a "museum computing system", based on and frozen at the existing lxplus technology and software, with access to the mass storage (at present CASTOR) where all the data are stored. These activities were started by Andreas Pfeiffer and Tony Cass.
http://pfeiffer.home.cern.ch/pfeiffer/LEP-Data-Archive/Scenarios.html

History of LEP data archiving (3)
- The safeguarding of "standard" analysis framework software and of mini-data on a number of PCs
- The development of a modern C++ analysis framework (in some cases)
- The establishment of rules for access to the data by non-members of the Collaboration

History of LEP data archiving: ALEPH Statement (1)
The data collected by the ALEPH experiment in the years 1990-2000 have been archived to allow their use for physics analyses after the closure of the Collaboration. The archiving includes the last set of simulated events and the most up-to-date version of the analysis software.
Limitations. The available information is not sufficient to repeat all analyses, particularly when systematic effects play an important role, as for instance in precision measurements in the electroweak sector.
Examples of physics analyses that cannot be repeated on the archived data are:
- the measurement of the Z lineshape
- the measurement of the W mass
- the measurement of the tau polarization
- the measurements of lepton and quark forward-backward asymmetries
- most heavy-flavour measurements, such as the measurements of Rb, of the CKM matrix elements, and of Bd and Bs oscillations
- the searches for the Higgs boson
- many searches in the SUSY sector

History of LEP data archiving: ALEPH Statement (2)
Authorized users. The use of archived ALEPH data is authorized for former members of the ALEPH Collaboration and their collaborators. The use of a subset of the data for teaching and pedagogical purposes, under the guidance of former members of the Collaboration, is allowed.
Authorship. The publication of results based on archived ALEPH data is not allowed until 1 year after the official termination of the Collaboration, foreseen for the end of 2004. The authors of the analysis take full responsibility for the publication. Any figure, plot or table using ALEPH data should contain the label "ALEPH Archived Data". A reference to the present document, "Statement on the use of Aleph data for long-term analyses", must be present in the publication.

Special case: ALEPH QCD archive
http://aleph.web.cern.ch/aleph/

The problem of HEP data preservation
- The HEP data model is highly complex (from the start, difficult to export to open access (OA) à la astronomy): raw data -> calibrated data -> skimmed data -> high-level objects.
- Final results depend on all the grey literature on constants, on human knowledge and on the algorithms needed for each pass.
- Experiment lifetimes exceed computing-environment lifetimes: there are many migrations within the lifetime of an experiment (in this sense, preservation is not an issue!).

Lesson learned from LEP
- Apart from the publication of numbers or tables, there has been no real OA.
- What is available is either of little use or hardly usable (with small exceptions): there is a continuous need for additional knowledge, which is difficult to encode and store.
- Regardless of the community's openness in preprinting, the wide circulation of preliminary results at conferences and insider information, little priority was given to OA, leading to the partial failure of LEP data archiving for the "general" public.
- A force majeure (a discovery at the LHC of something we should have seen at LEP?) would be needed to access the data again.
- Final results (containing additional unpublished information), but also high-level objects, have already been combined (LEP Electroweak vs LEP Higgs).

The "parallel way" to archiving and publishing data
- In addition to the internal data models, elaborate a parallel format for useful and usable high-level objects.
- Publish the high-level objects behind each scientific paper (after a time lapse?).
- Publish all high-level objects after the end of the collaboration.
- Address issues of accountability, reproducibility of results, "careless discoveries" and "careless measurements".

A possible R&D program
- Use LEP as a case study for information retrieval, to better assess the different methods.
- Define some high-level objects that make an OA-based analysis possible for an "external" but "motivated" researcher in the field.
- Propose strategies to define "parallel" high-level objects to be included in the LHC data model: not post mortem, but as part of the data-model design process. This is very timely.
- Imagine solutions to expand the digital-library records of experimental results to include the OA data behind the results.
- Initiate a discussion on priority issues and time delays in making these "parallel" high-level objects available. This is very timely.

Credit: Salvatore Mele, for many of the ideas in these slides.