DAC2012-Tutorial - Computer Science and Engineering


System-Level Exploration of Power, Temperature, Performance, and Area for Multicore Architectures

Houman Homayoun1, Manish Arora1, Luis Angel D. Bathen2

1 Department of Computer Science and Engineering, University of California San Diego

2 Department of Computer Science, University of California, Irvine

1

Outline

Why power, temperature and reliability? (H. Homayoun)

Tools for architects? (H. Homayoun)

Thermal simulation using HotSpot (H. Homayoun)

Power/performance modeling for NVMs using NVSim (H. Homayoun)

Cycle-level NoC simulation using DARSIM (H. Homayoun)

Using bottleneck analysis and McPAT for efficient CPU design space exploration (M. Arora)

SimpleScalar: a computer system design and analysis infrastructure (L. Bathen)

PHiLOSoftware: Software-Controlled Memory Virtualization (L. Bathen)

SimpleScalar + CACTI + NVSIM + SystemC

2

Power

Power consumption: a first-order design constraint

Embedded systems: battery lifetime

High-performance systems: heat dissipation

Large-scale systems: billing cost

[Figure: Power, Thermal, Reliability triangle. Consequences shown: short-lived batteries, huge heatsinks, high electric bills, unreliable microprocessors]

3

Power Importance – Data Center Example

2006: Data centers in the US consumed 61 billion kWh, an electricity cost of about $9 billion [1]

2012: Doubles! $18 billion [1]

Impact of a 10% reduction in processor and RAM power consumption

6.5% reduction in total power consumption

$18B x 6.5% ≈ $1.17 billion in savings

[Figure: data center power breakdown. Processor + RAM (65%), Storage (21%), Network (9%), Others (5%)]

[1] Environmental Protection Agency (EPA), “Report to Congress on Server and Data Center Energy Efficiency”, August 2, 2007
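The savings estimate on this slide is plain arithmetic and can be reproduced in a few lines. The sketch below uses only the figures quoted on the slide (65% processor + RAM share, a 10% component-level reduction, $18 billion annual cost); the variable names are illustrative.

```python
# Figures quoted on the slide (EPA report, 2007).
annual_cost_billion = 18.0   # projected 2012 US data center electricity cost, in $B
cpu_ram_share = 0.65         # fraction of total power drawn by processor + RAM
component_reduction = 0.10   # 10% cut in processor + RAM power

# A 10% cut in a component that draws 65% of the power cuts the total by 6.5%.
total_reduction = cpu_ram_share * component_reduction
savings_billion = annual_cost_billion * total_reduction

print(f"total power reduction: {total_reduction:.1%}")
print(f"annual savings: ${savings_billion:.2f} billion")
```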

4

Temperature Trend – Temperature Crisis

Energy = Heat

Heat dissipation is costly

Increasing power dissipation in computer systems

Ever increasing cooling costs

[Figure: power density (W/cm2, log scale from 1 to 10,000) versus year (1975 to 2015). Processors plotted: 8008, 8080, 8085, 8086, 286, 386, 486, Pentium®, P6, POWER2, POWER3, POWER4, POWER6, Itanium 2, AMD K8, Athlon II, Core 2 Duo, Nehalem. Reference levels: hot plate, nuclear reactor, rocket nozzle. Annotation: max power consumption.]

5

Reliability Trend – Reliability Crisis

Decreased lifetime reliability

High power densities: high temperatures

Every 10 °C temperature increase doubles the failure rate

Technology scaling

Manufacturing defects, process variation

[Figure: probability of failure (log scale, 1.00E-08 to 1.00E+00) versus relative cell size (0.8 to 1.8)]

Source: N. Kim, et al., Analyzing the Impact of Joint Optimization of Cell Size, TVLSI 2011
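The rule of thumb that every 10-degree rise doubles the failure rate corresponds to an exponential multiplier, 2^(ΔT/10). The sketch below evaluates that multiplier for a few temperature rises; the function name and the sample rises are illustrative, and the rule itself is only a first-order approximation.

```python
def relative_failure_rate(delta_t: float, doubling_step: float = 10.0) -> float:
    """Failure-rate multiplier for a temperature rise of `delta_t` degrees,
    assuming the rate doubles every `doubling_step` degrees."""
    return 2.0 ** (delta_t / doubling_step)

# Sample temperature rises (illustrative values, not from the slides).
for rise in (0, 10, 20, 35):
    print(f"+{rise:>2} degrees -> {relative_failure_rate(rise):.2f}x failure rate")
```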

6

Efficiency Crisis

Moore’s law allows the transistor budget to double every 18 months

The power and thermal budgets have not changed significantly

Efficiency problem in new generations of microprocessors

[Figure: Intel Teraflops many-core chip, 8x10 cores, with regions labeled “Power Down”]

7

What Can We Do About it?

To achieve “sustainable computing” and fight back against the “Power Problem”, we need to rethink design from a “Green Computing” perspective.

Understand the levels of design abstraction: technology level, circuit level, and architecture level.

Understand where power is dissipated and temperature rises, where reliability issues are exposed, and where performance bottlenecks exist.

Think about ways to tackle these issues at all levels.

8

Tools for Architects

Performance

CPU: SimpleScalar, GEMS5, SMTSIM

GPU: GPGPU-SIM

Network: DARSIM, NoC-SIM

Power

CPU: Wattch

SRAM/CAM, eDRAM Cache: CACTI

Non-Volatile Memory: NVSIM

Reliability: VARIUS

Temperature: HotSpot

Power, Timing, Area for CPU + Cache + Main Memory: McPAT

9

Thermal Simulation Using HOTSPOT

Slides Courtesy of

A Quick Thermal Tutorial by Kevin Skadron and Mircea Stan

10

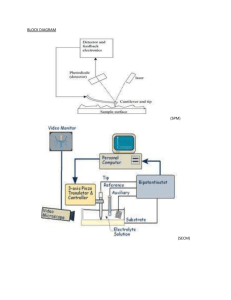

Thermal modeling [1]

A fine-grained, dynamic model for temperature

Architects can use it

Accounts for adjacency and package

Requires no detailed designs

Provides detailed temperature distribution

Fast

HotSpot - a compact model based on thermal R, C

Parameterized for various

Architectures

Power models

Floorplans

Thermal Packages

[1] A Quick Thermal Tutorial, Kevin Skadron and Mircea Stan

11

HotSpot

Time evolution of temperature is driven by unit activities and power dissipations averaged over N cycles (10K shown to provide enough accuracy)

Power dissipations can come from any power simulator and act as “current sources” in the RC circuit ('P' vector in the equations)

Simulation overhead in Wattch/SimpleScalar: <1%

Requires models of

Floorplan: important for adjacency

Package: important for spreading and time constants

R and C matrices are derived from the above

12

Example System [1]

[Figure: cross-section of a packaged chip showing the heat sink, heat spreader, interface material, die, IC package, pins, and PCB]

[1] A Quick Thermal Tutorial, Kevin Skadron and Mircea Stan

13

HotSpot Implementation

Primarily a circuit solver

Steady state solution

Mainly matrix inversion, done in two steps

Decomposition of the matrix into lower and upper triangular matrices

Successive backward substitution of solved variables

Implements the pseudocode from CLR (Cormen, Leiserson, and Rivest, Introduction to Algorithms)

Transient solution

Inputs: current temperature and power

Output: temperature for the next interval

Computed using a fourth-order Runge-Kutta (RK4) method
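The two solver paths above can be illustrated on a toy two-node RC network (die and heat sink). This is a minimal sketch, not HotSpot's code: the resistance and die capacitance values are hypothetical, the LU routine omits pivoting, and temperatures are rises above ambient governed by C dT/dt = P - G T.

```python
def lu_decompose(a):
    """Doolittle LU decomposition without pivoting; returns (L, U)."""
    n = len(a)
    lower = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    upper = [list(map(float, row)) for row in a]
    for k in range(n):
        for i in range(k + 1, n):
            lower[i][k] = upper[i][k] / upper[k][k]
            for j in range(k, n):
                upper[i][j] -= lower[i][k] * upper[k][j]
    return lower, upper

def lu_solve(lower, upper, b):
    n = len(b)
    y = [0.0] * n
    for i in range(n):                      # forward substitution: L y = b
        y[i] = b[i] - sum(lower[i][j] * y[j] for j in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):            # backward substitution: U x = y
        x[i] = (y[i] - sum(upper[i][j] * x[j] for j in range(i + 1, n))) / upper[i][i]
    return x

def heat_flow(temps, power, g, c):
    """dT/dt for each node: (P - G T) / C."""
    n = len(temps)
    return [(power[i] - sum(g[i][j] * temps[j] for j in range(n))) / c[i]
            for i in range(n)]

def rk4_step(temps, power, g, c, h):
    """Advance the temperatures by one interval h with classic RK4."""
    def shifted(k, scale):
        return [t + scale * ki for t, ki in zip(temps, k)]
    k1 = heat_flow(temps, power, g, c)
    k2 = heat_flow(shifted(k1, h / 2), power, g, c)
    k3 = heat_flow(shifted(k2, h / 2), power, g, c)
    k4 = heat_flow(shifted(k3, h), power, g, c)
    return [t + h / 6 * (a + 2 * b + 2 * cc + d)
            for t, a, b, cc, d in zip(temps, k1, k2, k3, k4)]

# Hypothetical network: die -> sink (0.5 K/W), sink -> ambient (0.1 K/W).
R_DIE_TO_SINK, R_SINK_TO_AMBIENT = 0.5, 0.1
G = [[1 / R_DIE_TO_SINK, -1 / R_DIE_TO_SINK],
     [-1 / R_DIE_TO_SINK, 1 / R_DIE_TO_SINK + 1 / R_SINK_TO_AMBIENT]]
C = [0.5, 140.4]       # J/K; die value is made up, sink value matches c_convec
P = [50.0, 0.0]        # W dissipated in the die only

steady = lu_solve(*lu_decompose(G), P)      # steady state: solve G T = P

temps = [0.0, 0.0]                          # transient: start at ambient
for _ in range(4000):                       # 4000 steps of 0.05 s = 200 s
    temps = rk4_step(temps, P, G, C, 0.05)

print("steady-state rise:", steady)
print("transient after 200 s:", temps)
```

After enough simulated time the transient solution settles onto the steady-state one, which is a quick sanity check for either path.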

14

Validation

Validated and calibrated using MICRED test chips

Within 7% for both steady-state and transient step-response

Interface material (chip/spreader) matters

Also validated against an FPGA

9x9 array of power dissipators and sensors

Compared to HotSpot configured with same grid, package

Can instantiate a temperature sensor based on a ring oscillator and counter

Also validated against IBM ANSYS FEM simulations

15

HotSpot Interface

Inputs

a power trace file

a floorplan file

A config file (package information)

Outputs

the corresponding transient temperatures

steady state temperatures

Thermal map (perl script)

16

Config File

# thermal model parameters
# chip specs
# chip thickness in meters
-t_chip             0.00015
# silicon thermal conductivity in W/(m-K)
-k_chip             100.0
# silicon specific heat in J/(m^3-K)
-p_chip             1.75e6
# temperature threshold for DTM (kelvin)
-thermal_threshold  354.95
# heat sink specs
# convection capacitance in J/K
-c_convec           140.4
# convection resistance in K/W
-r_convec           0.1
# heatsink side in meters
-s_sink             0.06
# heatsink thickness in meters
-t_sink             0.0069
# heatsink thermal conductivity in W/(m-K)
-k_sink             400.0
# heatsink specific heat in J/(m^3-K)
-p_sink             3.55e6
# heat spreader specs
# spreader side in meters
-s_spreader         0.03
# spreader thickness in meters
-t_spreader         0.001
# heat spreader thermal conductivity in W/(m-K)
-k_spreader         400.0
# heat spreader specific heat in J/(m^3-K)
-p_spreader         3.55e6
# interface material specs
# interface material thickness in meters
-t_interface        2.0e-05
# interface material thermal conductivity in W/(m-K)
-k_interface        4.0
# interface material specific heat in J/(m^3-K)
-p_interface        4.0e6
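To get a feel for what these parameters mean, the sketch below parses a few of the `-name value` pairs and computes the vertical conduction resistance of the die and the interface material with the one-dimensional slab formula R = t / (k · A). This is a simplification for intuition, not HotSpot's full model; the 16 mm x 16 mm die area and the helper names are assumptions.

```python
CONFIG_TEXT = """\
# chip thickness in meters
-t_chip 0.00015
# silicon thermal conductivity in W/(m-K)
-k_chip 100.0
# interface material thickness in meters
-t_interface 2.0e-05
# interface material thermal conductivity in W/(m-K)
-k_interface 4.0
# convection resistance in K/W
-r_convec 0.1
"""

def parse_config(text):
    """Read '-name value' pairs, skipping comments and blank lines."""
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, value = line.split()
        params[name.lstrip("-")] = float(value)
    return params

def slab_resistance(thickness, conductivity, area):
    """1-D conduction resistance of a uniform slab, in K/W."""
    return thickness / (conductivity * area)

params = parse_config(CONFIG_TEXT)
die_area = 0.016 * 0.016   # assumption: 16 mm x 16 mm die, in m^2

r_chip = slab_resistance(params["t_chip"], params["k_chip"], die_area)
r_interface = slab_resistance(params["t_interface"], params["k_interface"], die_area)

print(f"silicon:   {r_chip:.5f} K/W")
print(f"interface: {r_interface:.5f} K/W")
print(f"convection: {params['r_convec']} K/W")
```

Under these assumptions the thin interface layer contributes more resistance than the silicon itself, which is consistent with the later remark that the interface material matters.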

17

FLP File

# Floorplan close to the Alpha EV6 processor
# Line Format: <unit-name>\t<width>\t<height>\t<left-x>\t<bottom-y>
# all dimensions are in meters
# comment lines begin with a '#'
# comments and empty lines are ignored
L2_left	0.004900	0.006200	0.000000	0.009800
L2	0.016000	0.009800	0.000000	0.000000
L2_right	0.004900	0.006200	0.011100	0.009800
Icache	0.003100	0.002600	0.004900	0.009800
Dcache	0.003100	0.002600	0.008000	0.009800
Bpred_0	0.001033	0.000700	0.004900	0.012400
Bpred_1	0.001033	0.000700	0.005933	0.012400
Bpred_2	0.001033	0.000700	0.006967	0.012400
DTB_0	0.001033	0.000700	0.008000	0.012400
DTB_1	0.001033	0.000700	0.009033	0.012400
DTB_2	0.001033	0.000700	0.010067	0.012400
FPAdd_0	0.001100	0.000900	0.004900	0.013100
FPAdd_1	0.001100	0.000900	0.006000	0.013100
FPReg_0	0.000550	0.000380	0.004900	0.014000
FPReg_1	0.000550	0.000380	0.005450	0.014000
FPMul_0	0.001100	0.000950	0.004900	0.014380
FPMul_1	0.001100	0.000950	0.006000	0.014380
FPMap_0	0.001100	0.000670	0.004900	0.015330
FPMap_1	0.001100	0.000670	0.006000	0.015330
IntMap	0.000900	0.001350	0.007100	0.014650
IntQ	0.001300	0.001350	0.008000	0.014650
IntReg_0	0.000900	0.000670	0.009300	0.015330
IntReg_1	0.000900	0.000670	0.010200	0.015330
IntExec	0.001800	0.002230	0.009300	0.013100
FPQ	0.000900	0.001550	0.007100	0.013100
LdStQ	0.001300	0.000950	0.008000	0.013700
ITB_0	0.000650	0.000600	0.008000	0.013100
ITB_1	0.000650	0.000600	0.008650	0.013100

[Figure: rendered floorplan with both axes in meters (0 to about 0.016), showing L2, L2_left, L2_right, Icache, Dcache, and the core units (Bpred, DTB, ITB, FPAdd, FPReg, FPMul, FPMap, FPQ, IntQ, IntReg, IntExec, LdStQ)]
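The floorplan line format above is easy to read programmatically. The sketch below parses a few of the EV6 rows, computes each unit's area, and derives the bounding box of the listed units; the `Unit` type and helper names are illustrative, and only five rows are embedded to keep the example short.

```python
from typing import NamedTuple

class Unit(NamedTuple):
    name: str
    width: float      # meters
    height: float     # meters
    left_x: float     # meters
    bottom_y: float   # meters

    @property
    def area(self) -> float:
        return self.width * self.height

# A subset of the floorplan rows shown above.
FLP_TEXT = """\
# all dimensions are in meters
L2_left   0.004900 0.006200 0.000000 0.009800
L2        0.016000 0.009800 0.000000 0.000000
L2_right  0.004900 0.006200 0.011100 0.009800
Icache    0.003100 0.002600 0.004900 0.009800
Dcache    0.003100 0.002600 0.008000 0.009800
"""

def parse_floorplan(text):
    """Parse '<unit-name> <width> <height> <left-x> <bottom-y>' lines."""
    units = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue                      # comments and empty lines are ignored
        fields = line.split()
        units.append(Unit(fields[0], *map(float, fields[1:5])))
    return units

units = parse_floorplan(FLP_TEXT)
by_name = {u.name: u for u in units}

# Bounding box of the listed units.
die_width = max(u.left_x + u.width for u in units) - min(u.left_x for u in units)
die_height = max(u.bottom_y + u.height for u in units) - min(u.bottom_y for u in units)

for u in units:
    print(f"{u.name:9s} area = {u.area * 1e6:.2f} mm^2")
print(f"bounding box: {die_width * 1e3:.1f} mm x {die_height * 1e3:.1f} mm")
```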

18

HotSpot Modes of running

Block Level

fast

less accurate

Example: hotspot -c hotspot.config -f ev6.flp -p gcc.ptrace -o gcc.ttrace -steady_file gcc.steady

Grid-Level

slow

more accurate

Example: hotspot -c hotspot.config -f ev6.flp -p gcc.ptrace -steady_file gcc.steady -model_type grid

Change the grid size to trade off speed against accuracy

default grid size is 64x64

19

3D Modeling with HotSpot

HotSpot's grid model is capable of modeling stacked 3D chips

HotSpot needs a Layer Configuration File (LCF) for 3D simulation.

The LCF specifies the set of vertical layers to be modeled, including their physical properties (thickness, conductivity, etc.)

20

Example: LCF file for two layers

#<Layer Number>

#<Lateral heat flow Y/N?>

#<Power Dissipation Y/N?>

#<Specific heat capacity in J/(m^3K)>

#<Resistivity in (m-K)/W>

#<Thickness in m>

#<floorplan file>

# Layer 0: Silicon

0

Y

Y

1.75e6

0.01

0.00015

ev6.flp

# Layer 1: thermal interface material (TIM)

1

Y

N

4e6

0.25

2.0e-05

ev6.flp

For instance, the above sample file shows an LCF corresponding to

the default HotSpot configuration with two layers: one layer of silicon

and one layer of Thermal Interface Material (TIM)

Command line example:

hotspot -c hotspot.config -f <some_random_file> -p example.ptrace

-o example.ttrace -model_type grid -grid_layer_file example.lcf
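The LCF values line up with the default configuration: the resistivity field is the reciprocal of the conductivity in the config file (1/100 = 0.01 for silicon, 1/4 = 0.25 for the TIM). The sketch below generates LCF layer records from conductivities to make that relationship explicit; the `Layer` class and its field names are hypothetical helpers, not part of HotSpot.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    number: int
    lateral_heat_flow: bool
    dissipates_power: bool
    specific_heat: float    # J/(m^3-K)
    conductivity: float     # W/(m-K), as in hotspot.config
    thickness: float        # m
    floorplan: str

    def to_lcf(self) -> str:
        """One LCF record: one field per line, in the order of the header."""
        fields = [
            str(self.number),
            "Y" if self.lateral_heat_flow else "N",
            "Y" if self.dissipates_power else "N",
            f"{self.specific_heat:g}",
            f"{1.0 / self.conductivity:g}",   # LCF stores resistivity, (m-K)/W
            f"{self.thickness:g}",
            self.floorplan,
        ]
        return "\n".join(fields)

silicon = Layer(0, True, True, 1.75e6, 100.0, 0.00015, "ev6.flp")
tim = Layer(1, True, False, 4e6, 4.0, 2.0e-05, "ev6.flp")

lcf_text = "\n\n".join([
    "# Layer 0: Silicon\n" + silicon.to_lcf(),
    "# Layer 1: thermal interface material (TIM)\n" + tim.to_lcf(),
])
print(lcf_text)
```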

21

Example: Modeling memory

peripheral temperature

22

Power/Performance Modeling for Non-Volatile Memories Using NVSim

23

Why Yet Another Circuit-Level Estimation Tool for Cache Memories?

Emerging non-volatile memory devices show a large variation in performance, energy, and density.

Some of them are performance-optimized; some of them are area-optimized…

For system-level research, it is NOT correct to pick random device parameters from multiple sources.

24

NVSim [1]

NVSim is designed to be a general circuit-level performance, power, and area model

Emerging memory technologies supported:

NAND

PCM

MRAM(STT-RAM)

Memristor

SRAM

DRAM

eDRAM

[1] C. Xu, X. Dong, N. P. Jouppi, and Y. Xie, "Design Implications of Memristor-Based RRAM Cross-Point Structures", DATE 2011

25

NVSim Model

Developed on the basis of CACTI

CACTI models SRAM and DRAM caches

CACTI does NOT support eNVM.

NVSim made modifications at the subarray level and at the bank level

[Figure: CACTI-modeled memory subarray. Memory cells: 2D array of memory cells. Peripheral circuitry: row decoders, wordline drivers, precharge & equalization, bitline mux, sense amplifiers, sense amplifier mux, output/write drivers]

26

Tricks (Subarray-Level)

Why is the circuit design space large?

Many design tricks

• Array

o MOS-accessed

o Crosspoint

• Transistor type

o High-performance

o Low-power

o Low-standby

• Interconnect type

o Wire pitch

o Repeater design

• Sense amp

o Current-sensing

o Voltage-sensing

• Driver

o Area-opt

o Latency-opt

[Figure: memory subarray with row decoders, wordline drivers, precharge & equalization, 2D array of memory cells, bitline mux, sense amplifiers, sense amplifier mux, output/write drivers]

27

Configuring NVSim

NVSim provides a variety of functionalities by supporting two categories of configuration input files: <.cfg> files and <.cell> files.

<.cfg> Configuration: <.cfg> files are used to specify the non-volatile memory module parameters and tune the design exploration knobs. The details of how to configure <.cfg> files are under the cfg files page.

<.cell> Configuration: <.cell> files are used to specify the non-volatile memory cell properties. The information held in these files usually comes from the device level. NVSim provides default <.cell> files for PC-RAM, STT-RAM, and R-RAM, and allows advanced users to tailor their own cell properties by adding new <.cell> files. The details of how to configure <.cell> files are under the cell files page.

28

NVSIM Interface

-DesignTarget: cache

//-DesignTarget: RAM

//-DesignTarget: CAM

-CacheAccessMode: Normal

//-CacheAccessMode: Fast

//-CacheAccessMode: Sequential

//-OptimizationTarget: ReadLatency

//-OptimizationTarget: WriteLatency

//-OptimizationTarget: ReadDynamicEnergy

//-OptimizationTarget: WriteDynamicEnergy

//-OptimizationTarget: ReadEDP

//-OptimizationTarget: WriteEDP

//-OptimizationTarget: LeakagePower

-OptimizationTarget: Area

//-OptimizationTarget: Exploration

//-ProcessNode: 200

//-ProcessNode: 120

//-ProcessNode: 90

//-ProcessNode: 65

-ProcessNode: 45

//-ProcessNode: 32

-Capacity (KB): 128

//-Capacity (MB): 1

-WordWidth (bit): 512

-Associativity (for cache only): 8

-DeviceRoadmap: HP

//-DeviceRoadmap: LSTP

//-DeviceRoadmap: LOP

-Routing: H-tree

//-Routing: non-H-tree

-MemoryCellInputFile: SRAM.cell

//-MemoryCellInputFile: Memristor_3.cell

//-MemoryCellInputFile: PCRAM_JSSC_2007.cell

//-MemoryCellInputFile: PCRAM_JSSC_2008.cell

//-MemoryCellInputFile: PCRAM_IEDM_2004.cell

//-MemoryCellInputFile: MRAM_ISSCC_2007.cell

//-MemoryCellInputFile: MRAM_ISSCC_2010_14_2.cell

//-MemoryCellInputFile: MRAM_Qualcomm_IEDM.cell

//-MemoryCellInputFile: SLCNAND.cell

-Temperature (K): 380
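The configuration above follows a simple pattern: active settings are `-Key: value` lines and alternatives are commented out with `//`. The sketch below reads the active settings into a dictionary and derives the number of words in the array; the parser is a hypothetical helper for scripting sweeps, not part of NVSim, and only a few of the lines above are embedded.

```python
CFG_TEXT = """\
-DesignTarget: cache
//-DesignTarget: RAM
-CacheAccessMode: Normal
//-OptimizationTarget: ReadLatency
-OptimizationTarget: Area
-ProcessNode: 45
-Capacity (KB): 128
-WordWidth (bit): 512
-Associativity (for cache only): 8
-MemoryCellInputFile: SRAM.cell
-Temperature (K): 380
"""

def parse_nvsim_cfg(text):
    """Collect active '-Key: value' settings; '//' lines are comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("//"):
            continue
        key, _, value = line.lstrip("-").partition(":")
        settings[key.strip()] = value.strip()
    return settings

settings = parse_nvsim_cfg(CFG_TEXT)

capacity_bits = int(settings["Capacity (KB)"]) * 1024 * 8
word_bits = int(settings["WordWidth (bit)"])
num_words = capacity_bits // word_bits

print(settings["DesignTarget"], settings["OptimizationTarget"])
print(f"{num_words} words of {word_bits} bits")
```

Generating one such file per design point (for example, swapping the `-MemoryCellInputFile` line) is one way to script a sweep over cell technologies.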

29

Example 1: PCRAM [1]

32-nm 16MB 8-way L3 caches (with different PCRAM design optimizations)

[1] PCRAMsim: System-Level Performance, Energy, and Area Modeling for Phase-Change RAM, Xiangyu Dong, et al. ICCAD 2009

30

Cycle-Level NoC Simulation Using DARSIM

31

DARSIM [1]

A parallel, highly configurable, cycle-level network-on-chip simulator based on an ingress-queued wormhole router architecture

Most hardware parameters are configurable, including geometry, bandwidth, and crossbar dimensions

Packets arrive flit-by-flit on ingress ports and are buffered in ingress virtual channel (VC) buffers until they have been assigned a next-hop node and VC; they then compete for the crossbar and, after crossing, depart from the egress ports.

[Figure: basic datapath of a NoC router modeled by DARSIM]

[1] Darsim: A Parallel Cycle-Level NoC Simulator, Lis, Mieszko; Shim, Keun Sup; Cho, Myong Hyon; Ren, Pengju; Khan, Omer; Devadas, Srinivas, ISPASS 2011.

32

DARSIM Simulation Parameters

--cycles arg          simulate for arg cycles (0 = until drained)
--packets arg         simulate until arg packets arrive (0 = until drained)
--stats-start arg     start statistics after cycle arg (default: 0)
--no-stats            do not report statistics
--no-fast-forward     do not fast-forward when system is drained
--memory-traces arg   read memory traces from file arg
--log-file arg        write a log to file arg
--random-seed arg     set random seed (default: use system entropy)
--version             show program version and exit
-h [ --help ]         show this help message and exit

33

Sample Config File

[geometry]

height = 8

width = 8

[routing]

node = weighted

queue = set

one queue per flow = false

one flow per queue = false

[node]

queue size = 8

[bandwidth]

cpu = 16/1

net = 16

north = 1/1

east = 1/1

south = 1/1

west = 1/1

[queues]

cpu = 0 1

net = 8 9

north = 16 18

east = 28 30

south = 20 22

west = 24 26

[core]

default = injector

34

Network Configuration

-x (arg) : network width (8)

-y (arg) : network height (8)

-v (arg) : number of virtual channels per set (1)

-q (arg) : capacity of each virtual channel in flits(4)

-c (arg) : core type (memtraceCore)

-m (arg) : memory type (privateSharedMSI)

-n (arg) : number of VC sets

-o (arg) : output filename (output.cfg)

35

Sample Flow Event

tick 12094

flow 0x001b0000 size 13

tick 12140

flow 0x00001f00 size 5

tick 12141

flow 0x001f0000 size 5

tick 12212

flow 0x00002100 size 5

tick 12212

flow 0x00210000 size 13

The first two lines indicate that at cycle 12094, a packet consisting of 13 flits is injected at Node 27 (0x1b), and its destination is Node 0 (0x00).
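The explanation above suggests how a flow ID encodes its endpoints. The sketch below decodes the sample events under the assumption, inferred only from that one example, that bits 16 to 23 hold the source node and bits 8 to 15 hold the destination node; the function names are illustrative and the layout should be checked against the DARSIM documentation.

```python
EVENTS = """\
tick 12094
flow 0x001b0000 size 13
tick 12140
flow 0x00001f00 size 5
tick 12141
flow 0x001f0000 size 5
tick 12212
flow 0x00002100 size 5
tick 12212
flow 0x00210000 size 13
"""

def decode_flow(flow_id: int):
    """Split a flow ID into (source, destination).
    Assumed layout: source in bits 16-23, destination in bits 8-15."""
    src = (flow_id >> 16) & 0xFF
    dst = (flow_id >> 8) & 0xFF
    return src, dst

def parse_events(text):
    """Return a list of (tick, source, destination, size_in_flits)."""
    events, tick = [], None
    for line in text.splitlines():
        words = line.split()
        if not words:
            continue
        if words[0] == "tick":
            tick = int(words[1])
        elif words[0] == "flow":
            src, dst = decode_flow(int(words[1], 16))
            events.append((tick, src, dst, int(words[3])))
    return events

events = parse_events(EVENTS)
for tick, src, dst, size in events:
    print(f"cycle {tick}: node {src} -> node {dst}, {size} flits")
```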

36

Statistics

flit counts:

flow 00010000: offered 58, sent 58, received 58 (0 in flight)

flow 00020000: offered 44, sent 44, received 44 (0 in flight)

flow 00030000: offered 34, sent 34, received 34 (0 in flight)

flow 00040700: offered 32, sent 32, received 32 (0 in flight)

flow 00050700: offered 34, sent 34, received 34 (0 in flight)

.....

all flows counts: offered 109724, sent 109724, received 109724 (0 in flight)

in-network sent flit latencies (mean +/- s.d., [min..max] in # cycles):

flow 00010000: 4.06897 +/- 0.364931, range [4..6]

flow 00020000: 5.20455 +/- 0.756173, range [5..9]

flow 00030000: 6.38235 +/- 0.874969, range [6..10]

flow 00040700: 6.1875 +/- 0.526634, range [6..8]

flow 00050700: 5.11765 +/- 0.32219, range [5..6]

.....

all flows in-network flit latency: 9.95079 +/- 20.5398

37

Example: Effect of Routing and VC [1]

The effect of routing and VC configuration on network transit latency in a relatively congested network on the WATER benchmark: while O1TURN and ROMM clearly outperform XY, the margin is not particularly impressive.

[1] "Scalable Accurate Multicore Simulation in the 1000 core era", M. Lis et al. ISPASS 2011

38

Design Flow

39


Trace-Driven NUCA Non-Volatile Cache Simulation in 3D

[Figure: tool flow. Blocks: cycle-accurate simulator (such as SimpleScalar or SMTSIM), NVSim-based simulator, DARSIM network simulation, HotSpot 3D (Virginia) 3D thermal simulation. Arrow labels: “Feed cache trace”, “Feed new cache latency for performance impact”, “Feed temperature for accurate leakage power modeling”]

41

Questions?


42

Using Bottlenecks Analysis and McPAT for Efficient CPU Design Space Exploration

Manish Arora*

Computer Science and Engineering

University of California, San Diego

* Credit also goes to my co-authors: Feng Wang (Qualcomm), Bob Rychlik (Qualcomm) and Dean Tullsen (UC San Diego)

Tackling Design Complexity

Increasingly complex design decisions

Multicore exacerbates the problem

Accurate simulation is slow

Simulation of all design points not feasible

Commonly followed techniques inadequate

Sensitivity analysis

Vary a single parameter while keeping other parameters fixed

E.g. L2 performance by varying size and keeping all else constant

Dependent on the choice of fixed point of reference

L2 performance correlated with L1 size

June 4 2012

44

Accelerating Design Space Exploration

Speeding up individual simulations

Benchmark subsetting (SMARTS [1], SimPoint [2] and MinneSPEC [3])

Analytical models instead of cycle-accurate simulation (Karkhanis et al. [4])

Regression models to derive performance models (Lee et al. [5])

Design space pruning

Hill-climbing (Systems et al. [6])

Tabu search (Axelsson et al. [7])

Genetic search (Palesi et al. [8])

Plackett and Burman (P&B) based design (Yi et al. [9] and Arora et al. [10])

45

Plackett and Burman (P&B) Based Design

Advantages

Exploration over ranges of parameter values

Linear or near-linear number of experiments

Non-iterative technique (exploits cluster parallelism)

Workings

Provide a high value (+1) and a low value (-1) for each component

CPU Freq 2GHz (+1) / 1GHz (-1)

L2 Cache 1MB (+1) / 256KB (-1) and so on…

Run a P&B-specified set of experiments

Evaluate the “Impact” of each component

E.g. CPU frequency has a 30% influence when CPU Freq is changed from 1GHz to 2GHz AND L2 is changed from 256KB to 1MB AND …
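The workings above can be sketched with the classic 8-run Plackett and Burman design, which covers up to 7 two-level components. The design matrix is built from cyclic shifts of a standard generator row plus an all-low row; the response function below is a made-up stand-in for a simulator run, and the impact here is the plain signed sum, which may differ from the exact normalization used in the talk.

```python
# Standard generator row for the 8-run Plackett-Burman design.
GENERATOR = [+1, +1, +1, -1, +1, -1, -1]

def plackett_burman_8():
    """8 experiments x 7 components; entries are +1 (high) or -1 (low)."""
    n = len(GENERATOR)
    rows = [GENERATOR[-i:] + GENERATOR[:-i] for i in range(n)]  # cyclic shifts
    rows.append([-1] * n)                                       # all-low run
    return rows

def impacts(design, responses):
    """Impact of each component: sum of (sign x response) over all runs."""
    columns = len(design[0])
    return [sum(row[c] * y for row, y in zip(design, responses))
            for c in range(columns)]

def simulate(row):
    """Hypothetical response: component 0 (say CPU frequency) matters most,
    component 1 (say L2 size) matters less, the rest not at all."""
    return 100 + 15 * row[0] + 5 * row[1]

design = plackett_burman_8()
responses = [simulate(row) for row in design]   # runs are independent
effect = impacts(design, responses)

total = sum(abs(e) for e in effect)
for index, e in enumerate(effect):
    print(f"component {index}: impact {e:+d} ({abs(e) / total:.0%} of total)")
```

Because every run is independent of the others, all 8 experiments can be launched at once on a cluster, which is the non-iterative property listed under the advantages.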

46

System Under Design - 1

Sub-system consisting of 11 components

Up to 10 choices per component

47

System Under Design - 2

12 Mobile CPU centric benchmarks

48

51

Using P&B for Cost-Optimized Designs

Recapping P&B

P&B yields unit-less “Impact” (influence of changing a component)

Provides “Impact” trends by changing upper bounds

Constrained systems

Most systems are cost constrained (area, power or energy)

Need to look at cost together with performance

Use Cost Normalized Marginal Impact

Impact gained / Cost incurred

L2 cache size has higher impact than L2 associativity

L2 associativity might still provide the best “bang for the buck”

Use McPAT [11, 12] to evaluate baseline and marginal costs
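The ratio "impact gained / cost incurred" is simple to compute once both numbers are available. The sketch below ranks two upgrades by that ratio; the impact and area figures are invented purely for illustration and are not results from the talk.

```python
# Hypothetical marginal impacts (unit-less, from P&B) and marginal costs
# (extra area in mm^2, e.g. from a McPAT run). All numbers are made up.
upgrades = {
    "L2 size 256KB -> 1MB":        {"impact": 30.0, "cost": 6.0},
    "L2 associativity 4 -> 8 way": {"impact": 12.0, "cost": 1.5},
}

def cost_normalized_impact(entry):
    """Impact gained per unit of cost incurred."""
    return entry["impact"] / entry["cost"]

ranked = sorted(upgrades, key=lambda name: cost_normalized_impact(upgrades[name]),
                reverse=True)

for name in ranked:
    ratio = cost_normalized_impact(upgrades[name])
    print(f"{name}: {ratio:.1f} impact per mm^2")
```

With these made-up numbers the cache size upgrade has the larger raw impact, yet the associativity upgrade ranks first once cost is taken into account.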

52

McPAT: High Level Features

Integrated modeling framework

Power (Peak, Dynamic, Short-circuit and Leakage)

Area

Critical path timing

Hardware validated (~20% error)

Configurable system components

Cores, NOC, clock tree, PLL, caches and memory controllers etc.

Technology nodes 90nm to 22nm

Device types Bulk CMOS, SOI and Double Gate

Flexible XML interface

Standalone and performance simulator integration

53

McPAT 1.0 Overview (from [11])

[Figure: McPAT block diagram]

Unspecified parameters filled, structures optimized to satisfy timing

Use models and configurations to evaluate numbers

54

Framework Components

Hierarchical power, area and timing model

Model structures at a low level but allow high-level configuration

Model core details rigorously and allow multiple cores to be connected

Optimizer for circuit-level implementations

Determines unspecified parameters in the internal chip representation

User specifies cache size and number of banks but optimizer specifies cache bank wordline and bitline lengths

User can choose to specify everything themselves

Internal chip representation

Driven by user inputs and those generated by the optimizer

55

Hierarchical Modeling (from [11])


56

Power, Area and Timing Modeling

Power Modeling

Dynamic power using load capacitance modeling, supply voltage, clock frequency and activity factors

Short-circuit power using published models

Leakage using published data and existing models such as MASTAR

Timing Modeling

Estimate critical path

Use RC delays to estimate time, similar to CACTI

Area Modeling

Similar to CACTI to model gates and regular structures

Empirical modeling techniques for non-regular structures
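The four inputs listed for dynamic power combine in the standard first-order CMOS switching formula, P = α · C · V² · f. The sketch below evaluates it for made-up values; it illustrates the textbook relation only and is not McPAT's internal code.

```python
def dynamic_power(activity: float, capacitance: float,
                  voltage: float, frequency: float) -> float:
    """First-order CMOS switching power in watts: alpha * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency

# Illustrative values: 20% activity, 1 nF switched capacitance, 2 GHz clock.
nominal = dynamic_power(0.2, 1e-9, 1.0, 2e9)
half_voltage = dynamic_power(0.2, 1e-9, 0.5, 2e9)

print(f"at 1.0 V: {nominal:.3f} W")
print(f"at 0.5 V: {half_voltage:.3f} W")
```

The quadratic dependence on supply voltage is why halving the voltage cuts the switching power to a quarter.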

57

Multicore Architectural Modeling

Core

Configurable models of fetch, execute, load-store and OOO etc.

Reservation station style and physical-register-file architectures

In-order, OOO and multithreaded architectures

NOC

Signal link and router models

Shared and private cache hierarchies

Memory Controller

Front end, transaction processing and PHY models

Clocking

PLL and clock tree models

58

Circuit and Technology Level Modeling

Wires

Hierarchical repeated wires for local and global wires

Short wires using pi-RC models

Latches automatically inserted and modeled to satisfy clock rates

Devices

Use ITRS 2007 roadmap data

90nm, 65nm, 45nm, 32nm and 22nm nodes supported

Planar bulk (up to 36nm), SOI (up to 25nm) and double-gate (22nm) modeled

Support for power-saving modes

McPAT 1.0 supports multiple sleep states

Coming this summer (v0.8 current)

59

McPAT Operation

Requires input from user and simulator

Target clock rate, architectural and technology parameters

Optimization function (timing or ED^2)

Unit activity factors

McPAT optimizes structures to satisfy timing

Configurations not satisfying timing are discarded

Optimization functions are applied to all timing-satisfying configurations

Numbers calculated using remaining configurations + activity factors

60

Downloading and Installing

Current version 0.8 available from HP Labs website

http://www.hpl.hp.com/research/mcpat/

Download and build the tool (“make” works)

Works on Unix-compatible systems

Command line operation (standalone XML input)

Print levels provide verbose results

Alternatively can build together with simulator

61

Running McPAT

Standalone mode (with XML input file)

Architectural and technology details specified within XML

Find correspondence between McPAT stats and simulator stats

Run performance simulation and pass counters to XML

<component id="system.core0.icache" name="icache">
<param name="icache_config" value="131072,32,8,1,8,3,32,0"/>
<stat name="read_accesses" value="2000"/>
<stat name="read_misses" value="116"/>
<stat name="conflicts" value="9"/>
</component>

Integrated with multiple simulators (M5, SMTSIM, Multi2SIM etc.)

Documentation gives tips on building together with simulator
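Passing simulator counters into the XML can be scripted with a standard XML library. The sketch below loads the icache component shown above, overwrites its `stat` values from a dictionary of counters, and computes the resulting miss rate; the `update_stats` helper and the counter values are hypothetical, and a real McPAT input file contains many more components.

```python
import xml.etree.ElementTree as ET

ICACHE_XML = """\
<component id="system.core0.icache" name="icache">
  <param name="icache_config" value="131072,32,8,1,8,3,32,0"/>
  <stat name="read_accesses" value="2000"/>
  <stat name="read_misses" value="116"/>
  <stat name="conflicts" value="9"/>
</component>
"""

def read_stats(component):
    """Return the component's <stat> entries as a name -> int dictionary."""
    return {s.get("name"): int(s.get("value")) for s in component.findall("stat")}

def update_stats(component, counters):
    """Overwrite <stat> values with counters from a performance simulator."""
    for name, value in counters.items():
        stat = component.find(f"stat[@name='{name}']")
        if stat is None:
            raise KeyError(f"no stat named {name!r} in {component.get('id')}")
        stat.set("value", str(value))

component = ET.fromstring(ICACHE_XML)
before = read_stats(component)

# Hypothetical counters from one simulation run.
update_stats(component, {"read_accesses": 500000, "read_misses": 12500})
after = read_stats(component)

miss_rate = after["read_misses"] / after["read_accesses"]
print(f"icache miss rate: {miss_rate:.3f}")
print(ET.tostring(component, encoding="unicode"))
```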

62

XML Specification (Top Level)

63

XML Specification (Core)

64

XML Specification (Memory Controller)

65

Results (Top Level)

66

Results (Core)

67

Results (Memory Controller)

68

Cost Normalized Marginal Impact

Created XML specification for our processor system

Modeled a mobile processor and obtained activity factors from a custom cycle-accurate simulator

Obtained baseline power and area

Obtained marginal costs

Obtained cost normalized marginal impact

69

Results: Cost Normalized Impact


70

Obtaining Cost Optimized Designs

Make design decisions utilizing the marginal impact and marginal cost information

71

Results: Cost Optimized Designs

Set budgets to 70% - 40% of highest end system

Selection algorithm minimizes impact loss while reducing cost as much as possible

Performance within 16% of peak @ 40% area

Performance within 19% of peak @ nearly half the power

72

To Summarize

Looked at the problem of efficient design space exploration

Used the Plackett and Burman method to yield “Impact”, a measure of bottleneck for components

Understood the basic workings of McPAT

Understood the use of McPAT to obtain area and power costs for various system configurations

Used cost numbers to obtain cost normalized impact

Used cost normalized impact values to obtain efficient design choices

73

References

[1] Wunderlich et al. “SMARTS: Accelerating microarchitectural simulation via rigorous statistical sampling”, ISCA 2003.

[2] Sherwood et al. “Automatically characterizing large scale program behavior”, ASPLOS 2002.

[3] KleinOsowski et al. “MinneSPEC: A new SPEC benchmark workload for simulation based computer architecture research”, CAL 2002.

[4] Karkhanis et al. “A first-order superscalar processor model”, ISCA 2004.

[5] Lee et al. “Accurate and efficient regression modeling for microarchitectural performance and power prediction”, ASPLOS 2006.

[6] Systems et al. “Spacewalker: Automated design space exploration”, HP Labs 2001.

[7] Axelsson et al. “Architecture synthesis and partitioning of realtime systems”, CODES 1997.

[8] Palesi et al. “Multi-objective design space exploration using genetic algorithms”, CODES 2002.

[9] Yi et al. “A statistically rigorous approach for improving simulation methodology”, HPCA 2003.

[10] Arora et al. “Efficient system design using the SAAB methodology”, SAMOS 2012.

[11] S. Li et al. “McPAT: An integrated Power, Area and Timing framework”, MICRO 2009.

[12] S. Li et al. McPAT 1.0 technical report, HP Labs 2009.

74

Questions?

SimpleScalar Simulator (ISS) and PHiLOSoftware Framework (SystemC)

Luis Angel D. Bathen (Danny)3

Slides Courtesy of: Kyoungwoo Lee1, Aviral Shrivastava2, and Nikil Dutt3

1 Dept. of Computer Science, Yonsei University

2 Dept. of Computer Science & Engineering, Arizona State University

3 Dept. of Computer Science, University of California at Irvine

Contents

SimpleScalar Overview

Demo 1: a simple simulation

PHiLOSoftware Simulator

(w/ 3.1 version of SimpleScalar)

SimpleScalar + CACTI + SystemC TLM

Demo 2: Bus Protocol Selection

Demo 3: Software-Controlled Memory Virtualization

77


Overview

What is an architectural simulator?

A tool that reproduces the behavior of a computing device

Why do we use a simulator?

Leverage a faster, more flexible software development cycle

Permit more design space exploration

Facilitates validation before H/W becomes available

Level of abstraction is tailored by design task

Possible to increase/improve system instrumentation

Usually less expensive than building a real system

79

A Taxonomy of Simulation Tools

Shaded tools are included in SimpleScalar Tool Set

80

Functional vs. Performance

Functional simulators implement the architecture.

Perform real execution

Implement what programmers see

Performance simulators implement the microarchitecture.

Model system resources/internals

Concerned with timing

Do not implement what programmers see

81

Trace- vs. Execution-Driven

Trace-Driven

Simulator reads a ‘trace’ of the instructions captured during a previous execution

Easy to implement, no functional components necessary

Execution-Driven

Simulator runs the program (trace-on-the-fly)

Difficult to implement

Advantages

Faster than tracing

No need to store traces

Register and memory values usually are not in trace

Support mis-speculation cost modeling

82

SimpleScalar Tool Set Overview

Computer architecture research test bed

Compilers, assembler, linker, libraries, and simulators

Targeted to the virtual SimpleScalar architecture

Hosted on almost any Unix-like machine

83

SimpleScalar Suite

84

Strength of SimpleScalar

Highly flexible

Portable

Host: virtual target runs on most Unix-like systems

Target: simulators can support multiple ISAs

Extensible

functional simulator + performance simulator

Source is included for compiler, libraries, simulators

Easy to write simulators

Performance

Runs codes approaching ‘real’ sizes

85


./sim-safe helloworld

Helloworld!

Create a new file, hello.c, that has the following code:

#include <stdio.h>

int main(void)
{
    /* print a greeting so the run is visible in the simulator output */
    printf("Hello World!\n");
    return 0;
}

then compile it using the following command:

$ $IDIR/bin/sslittle-na-sstrix-gcc -o hello hello.c

That should generate a file hello, which we will run on the simulator:

$ $IDIR/simplesim-3.0/sim-safe hello

In the output, you should be able to find the following:

sim: ** starting functional simulation **

Hello World!

87

./sim-safe test-math

TESTS-PISA – test-math

Simple set of test executables

./tests-pisa/bin.little/

anagram, test-fmath, test-lswlr, test-printf, test-llong, test-math

Run test-math

$ $IDIR/simplesim-3.0/sim-safe tests-pisa/bin.little/test-math

88

./sim-safe test-math

In the output (1)

sim: ** starting functional simulation **

pow(12.0, 2.0) == 144.000000

pow(10.0, 3.0) == 1000.000000

pow(10.0, -3.0) == 0.001000

str: 123.456

x: 123.000000

str: 123.456

x: 123.456000

str: 123.456

x: 123.456000

123.456 123.456000 123 1000

sinh(2.0) = 3.62686

sinh(3.0) = 10.01787

h=3.60555

atan2(3,2) = 0.98279

pow(3.60555,4.0) = 169

169 / exp(0.98279 * 5) = 1.24102

3.93117 + 5*log(3.60555) = 10.34355

cos(10.34355) = -0.6068, sin(10.34355) = -0.79486

x 0.5x

x0.5 x

x 0.5x

-1e-17 != -1e-17 Worked!

89

./sim-safe test-math

In the output (2)

sim: ** simulation statistics **

sim_num_insn          213703       # total number of instructions executed
sim_num_refs          56899        # total number of loads and stores executed
sim_elapsed_time      1            # total simulation time in seconds
sim_inst_rate         213703.0000  # simulation speed (in insts/sec)
ld_text_base          0x00400000   # program text (code) segment base
ld_text_size          91744        # program text (code) size in bytes
ld_data_base          0x10000000   # program initialized data segment base
ld_data_size          13028        # program init'ed `.data' and uninit'ed `.bss' size in bytes
ld_stack_base         0x7fffc000   # program stack segment base (highest address in stack)
ld_stack_size         16384        # program initial stack size
ld_prog_entry         0x00400140   # program entry point (initial PC)
ld_environ_base       0x7fff8000   # program environment base address address
ld_target_big_endian  0            # target executable endian-ness, non-zero if big endian
mem.page_count        33           # total number of pages allocated
mem.page_mem          132k         # total size of memory pages allocated
mem.ptab_misses       34           # total first level page table misses
mem.ptab_accesses     1546771      # total page table accesses
mem.ptab_miss_rate    0.0000       # first level page table miss rate
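Several of the sim-safe statistics are simple functions of the raw counters. The following sketch recomputes three of them from the values printed for test-math. The 4 KB page size is an assumption made here; it is consistent with 33 pages being reported as 132k, but the slide does not state it.

```python
# Counters copied from the sim-safe statistics for test-math.
sim_num_insn = 213703        # instructions executed
sim_elapsed_time = 1         # seconds
page_count = 33              # mem.page_count
ptab_misses = 34             # mem.ptab_misses
ptab_accesses = 1546771      # mem.ptab_accesses

# Assumption: 4 KB simulator pages, which matches 33 pages -> 132k.
PAGE_SIZE_BYTES = 4096

inst_rate = sim_num_insn / sim_elapsed_time
page_mem_kb = page_count * PAGE_SIZE_BYTES // 1024
ptab_miss_rate = ptab_misses / ptab_accesses

print(f"sim_inst_rate      {inst_rate:.4f}")
print(f"mem.page_mem       {page_mem_kb}k")
print(f"mem.ptab_miss_rate {ptab_miss_rate:.4f}")
```

The page table miss rate is not exactly zero (34 misses); it only rounds to 0.0000 at four decimal places.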


./sim-cache test-math

Cache Simulator

Run test-math with sim-cache

$ $IDIR/simplesim-3.0/sim-cache tests-pisa/bin.little/test-math

./sim-cache test-math

In the output

sim: ** simulation statistics **

sim_num_insn        213703       # total number of instructions executed
sim_num_refs        56899        # total number of loads and stores executed
sim_elapsed_time    1            # total simulation time in seconds
sim_inst_rate       213703.0000  # simulation speed (in insts/sec)
il1.accesses        213703       # total number of accesses
il1.hits            189940       # total number of hits
il1.misses          23763        # total number of misses
il1.replacements    23507        # total number of replacements
il1.writebacks      0            # total number of writebacks
il1.invalidations   0            # total number of invalidations
il1.miss_rate       0.1112       # miss rate (i.e., misses/ref)
il1.repl_rate       0.1100       # replacement rate (i.e., repls/ref)
il1.wb_rate         0.0000       # writeback rate (i.e., wrbks/ref)
il1.inv_rate        0.0000       # invalidation rate (i.e., invs/ref)
dl1.accesses        57480        # total number of accesses
dl1.hits            56675        # total number of hits
dl1.misses          805          # total number of misses
dl1.replacements    549          # total number of replacements
dl1.writebacks      416          # total number of writebacks
dl1.invalidations   0            # total number of invalidations
dl1.miss_rate       0.0140       # miss rate (i.e., misses/ref)
dl1.repl_rate       0.0096       # replacement rate (i.e., repls/ref)
dl1.wb_rate         0.0072       # writeback rate (i.e., wrbks/ref)
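The rate lines in the sim-cache output are each a counter divided by the number of accesses, as the "(i.e., misses/ref)" comments indicate. A minimal sketch that reproduces the il1 and dl1 rates from the counters shown above (the helper names are illustrative, not part of SimpleScalar):

```python
def rate(events: int, accesses: int) -> float:
    """Events per access; 0.0 when the cache saw no accesses."""
    return events / accesses if accesses else 0.0

# Counters copied from the sim-cache run of test-math with default caches.
caches = {
    "il1": {"accesses": 213703, "hits": 189940, "misses": 23763,
            "replacements": 23507, "writebacks": 0},
    "dl1": {"accesses": 57480, "hits": 56675, "misses": 805,
            "replacements": 549, "writebacks": 416},
}

def derived(c: dict) -> dict:
    """Recompute the rate statistics from the raw counters."""
    return {
        "miss_rate": rate(c["misses"], c["accesses"]),
        "repl_rate": rate(c["replacements"], c["accesses"]),
        "wb_rate": rate(c["writebacks"], c["accesses"]),
    }

for name, c in caches.items():
    # Sanity check: every access is either a hit or a miss.
    assert c["hits"] + c["misses"] == c["accesses"]
    for stat, value in derived(c).items():
        print(f"{name}.{stat} {value:.4f}")
```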

./sim-cache -cache:dl1 dl1:32:32:32:f test-math

Cache Configuration

Cache configuration

<name>:<nsets>:<bsize>:<assoc>:<repl>

<name> - name of the cache being defined

<nsets> - number of sets in the cache

<bsize> - block size of the cache

<assoc> - associativity of the cache

<repl> - block replacement strategy, 'l'-LRU, 'f'-FIFO, 'r'-random

Example: -cache:dl1 dl1:4096:32:1:l

Run test-math with sim-cache

$ $IDIR/simplesim-3.0/sim-cache -cache:dl1 dl1:32:32:32:f tests-pisa/bin.little/test-math
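To see what a configuration string implies, it helps to split it into its five fields and compute the capacity as sets x block size x associativity. The sketch below does that for the two strings on this slide; `parse_cache_config` and `capacity_bytes` are hypothetical helper names, and the block size is assumed to be in bytes.

```python
from typing import NamedTuple

class CacheConfig(NamedTuple):
    name: str
    nsets: int
    bsize: int   # block size, assumed to be in bytes
    assoc: int
    repl: str

REPLACEMENT = {"l": "LRU", "f": "FIFO", "r": "random"}

def parse_cache_config(spec: str) -> CacheConfig:
    """Parse '<name>:<nsets>:<bsize>:<assoc>:<repl>'."""
    name, nsets, bsize, assoc, repl = spec.split(":")
    if repl not in REPLACEMENT:
        raise ValueError(f"unknown replacement policy: {repl!r}")
    return CacheConfig(name, int(nsets), int(bsize), int(assoc), repl)

def capacity_bytes(cfg: CacheConfig) -> int:
    """Total data capacity: sets x block size x ways."""
    return cfg.nsets * cfg.bsize * cfg.assoc

for spec in ("dl1:4096:32:1:l", "dl1:32:32:32:f"):
    cfg = parse_cache_config(spec)
    print(f"{spec}: {capacity_bytes(cfg) // 1024} KB, "
          f"{cfg.assoc}-way, {REPLACEMENT[cfg.repl]}")
```

Under these assumptions the example string describes a 128 KB direct-mapped LRU cache, and the string used in the run describes a 32 KB, 32-way FIFO cache.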

./sim-cache -cache:dl1 dl1:32:32:32:f test-math

In the output

sim: ** simulation statistics **

sim_num_insn        213703       # total number of instructions executed
sim_num_refs        56899        # total number of loads and stores executed
sim_elapsed_time    1            # total simulation time in seconds
sim_inst_rate       213703.0000  # simulation speed (in insts/sec)
il1.accesses        213703       # total number of accesses
il1.hits            189940       # total number of hits
il1.misses          23763        # total number of misses
il1.replacements    23507        # total number of replacements
il1.writebacks      0            # total number of writebacks
il1.invalidations   0            # total number of invalidations
il1.miss_rate       0.1112       # miss rate (i.e., misses/ref)
il1.repl_rate       0.1100       # replacement rate (i.e., repls/ref)
il1.wb_rate         0.0000       # writeback rate (i.e., wrbks/ref)
il1.inv_rate        0.0000       # invalidation rate (i.e., invs/ref)
dl1.accesses        57480        # total number of accesses
dl1.hits            56938        # total number of hits
dl1.misses          542          # total number of misses
dl1.replacements    0            # total number of replacements
dl1.writebacks      0            # total number of writebacks
dl1.invalidations   0            # total number of invalidations
dl1.miss_rate       0.0094       # miss rate (i.e., misses/ref)
dl1.repl_rate       0.0000       # replacement rate (i.e., repls/ref)
dl1.wb_rate         0.0000       # writeback rate (i.e., wrbks/ref)
dl1.inv_rate        0.0000       # invalidation rate (i.e., invs/ref)
…

Different Cache Configurations

Different Configurations

Difference

< -cache:dl1 dl1:32:32:32:f # l1 data cache config, i.e., {<config>|none}
> -cache:dl1 dl1:256:32:1:l # l1 data cache config, i.e., {<config>|none}

dl1 output differences

< dl1.hits          56938  # total number of hits
< dl1.misses        542    # total number of misses
< dl1.replacements  0      # total number of replacements
< dl1.writebacks    0      # total number of writebacks
> dl1.hits          56675  # total number of hits
> dl1.misses        805    # total number of misses
> dl1.replacements  549    # total number of replacements
> dl1.writebacks    416    # total number of writebacks
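The diff can be turned into per-counter deltas to quantify the effect of the configuration change. This is a small sketch using only the four counters shown; the dictionary names are illustrative.

```python
# dl1 counters for the two runs of test-math ('<' and '>' sides of the diff).
fifo_32x32x32 = {"hits": 56938, "misses": 542, "replacements": 0, "writebacks": 0}
lru_256x32x1 = {"hits": 56675, "misses": 805, "replacements": 549, "writebacks": 416}

# Change in each counter when moving from dl1:32:32:32:f to dl1:256:32:1:l.
delta = {k: lru_256x32x1[k] - fifo_32x32x32[k] for k in fifo_32x32x32}

# Fraction of the dl1:256:32:1:l misses that dl1:32:32:32:f avoids.
miss_reduction = 1 - fifo_32x32x32["misses"] / lru_256x32x1["misses"]

print(delta)
print(f"misses avoided by dl1:32:32:32:f: {miss_reduction:.1%}")
```

With zero replacements in the dl1:32:32:32:f run, no block was ever evicted, so each of its 542 misses filled an empty block. Note that the two configurations differ in capacity and associativity as well as replacement policy, so the difference cannot be attributed to FIFO versus LRU alone.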


./sim-outorder test-math

Performance Simulation

sim-outorder

Performance simulation

Out-of-Order Issue

Run test-math with sim-outorder

$ $IDIR/simplesim-3.0/sim-outorder tests-pisa/bin.little/test-math

./sim-outorder test-math

In the output

sim: ** simulation statistics **

sim_num_insn        213703       # total number of instructions committed
sim_num_refs        56899        # total number of loads and stores committed
sim_num_loads       34105        # total number of loads committed
sim_num_stores      22794.0000   # total number of stores committed
sim_num_branches    38594        # total number of branches committed
sim_elapsed_time    1            # total simulation time in seconds
sim_inst_rate       213703.0000  # simulation speed (in insts/sec)
sim_total_insn      233029       # total number of instructions executed
sim_total_refs      61927        # total number of loads and stores executed
sim_total_loads     37545        # total number of loads executed
sim_total_stores    24382.0000   # total number of stores executed
sim_total_branches  42770        # total number of branches executed
sim_cycle           224302       # total simulation time in cycles
sim_IPC             0.9527       # instructions per cycle
sim_CPI             1.0496       # cycles per instruction
sim_exec_BW         1.0389       # total instructions (mis-spec + committed) per cycle
sim_IPB             5.5372       # instruction per branch
IFQ_count           352201       # cumulative IFQ occupancy
IFQ_fcount          74028        # cumulative IFQ full count
ifq_occupancy       1.5702       # avg IFQ occupancy (insn's)
ifq_rate            1.0389       # avg IFQ dispatch rate (insn/cycle)
ifq_latency         1.5114       # avg IFQ occupant latency (cycle's)
ifq_full            0.3300       # fraction of time (cycle's) IFQ was full
RUU_count           1440457      # cumulative RUU occupancy
RUU_fcount          45203        # cumulative RUU full count
ruu_occupancy       6.4220       # avg RUU occupancy (insn's)
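The headline sim-outorder metrics follow directly from the committed and executed instruction counts and the cycle count. The sketch below recomputes them from the counters in this output; the formulas are inferred from the statistic descriptions and agree with the printed values to four decimal places.

```python
# Counters copied from the sim-outorder run of test-math.
sim_num_insn = 213703       # committed instructions
sim_total_insn = 233029     # executed instructions (committed + mis-speculated)
sim_num_branches = 38594    # committed branches
sim_cycle = 224302
IFQ_count, IFQ_fcount = 352201, 74028
RUU_count = 1440457

metrics = {
    "sim_IPC": sim_num_insn / sim_cycle,
    "sim_CPI": sim_cycle / sim_num_insn,
    "sim_exec_BW": sim_total_insn / sim_cycle,
    "sim_IPB": sim_num_insn / sim_num_branches,
    "ifq_occupancy": IFQ_count / sim_cycle,
    "ifq_full": IFQ_fcount / sim_cycle,
    "ruu_occupancy": RUU_count / sim_cycle,
}

# Instructions that were executed but never committed.
mis_speculated = sim_total_insn - sim_num_insn

for name, value in metrics.items():
    print(f"{name:<14} {value:.4f}")
print(f"mis-speculated instructions: {mis_speculated}")
```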


A Taxonomy of Simulation Tools


Software-Controlled Memories

Two types of memory subsystems

Hardware-controlled Caches

Software-controlled SRAMs: ScratchPad Memories (SPMs), Tightly Coupled Memories (ARM), Local Stores (Cell), Streaming Memories, Software Caches

SPMs are preferred over caches due to:

Smaller area footprint

Lower power consumption

More predictable

Allow explicit control of their data

[Leverich et al., ISCA ‘07]

SPMs lack hardware support

Compiler and programmer need to explicitly manage them

Accessed through physical addresses

Difficult to capture irregularity at compile time

What about sharing their address space?

New memory subsystems are deploying distributed on-chip software-controlled memories

[Figure: ARM Cortex-M3 Address Map]

Goal: Abstracting Physical Characteristics from the Device

Challenges:

Software-controlled memories are explicitly accessed

Programmers assume full access to their physical space

Sharing issues and security risks (open environments)

Different physical characteristics (mutually dependent)

Due to voltage scaling: increased latencies, higher error rates

Due to interconnection network: different access latencies, local vs. remote

Due to technology: process variations, SRAM vs. NVM characteristics

Virtualization as a viable solution

Traditional virtualization makes no difference between address spaces

Can we exploit virtualization to minimize programmer burden while opportunistically exploiting the variation in physical address spaces?

PHiLOSoftware’s Heterogeneous Virtual Address Space

Propose the idea of virtual address spaces with different characteristics

[Figure labels: CPU, SPM (voltage scaled and nominal voltage), NVM, Interconnection Network, Memory Controller, Low Power DIMM, High Power DIMM, Locally Allocated On-Chip Space, Remotely Allocated On-Chip Space, SRAM Preferred]

Numbered regions of the virtual address space:

(1) Voltage Scaled: Low-Power / Mid Latency
(2) Voltage Scaled: Fault-tolerant
(3) Nominal-Voltage: High Power / Low Latency
(4) Nominal-Voltage: Low-Priority
(5) Nominal-Voltage: Higher Power / Latency
(6) NVM: High Write Power / Latency
(7) NVM: Higher Write Power / Latency
(8) Low-Power DRAM
(9) High-Power DRAM

Design concerns shown around the figure:

Performance: Multi-processing and multi-tasking, Shared Memory, Distributed Memory
Low Power/Energy: Leakage Power, Voltage Scaling, Hybrid Memories, Process Variations
Security: Open Environments, Shared Memory, Encryption
Reliability: Memory Most Vulnerable (Large On-Chip Area), Process Variations, Soft-errors

PHiLOSoftware Framework

Software Annotations

@LowPower(arr,a,64)

@Reliable(arr,b,64)

@Secure(arr,c,128)

Application Layer (Static): Compiler (Static Analysis), Allocation Policies

Run-Time Layer (Dynamic): Run-Time System (OS/Hypervisor)

Platform Configuration (Variable): Platform Type (CMP, NoC, MPSoC), Memory Tech. (SRAM, NVMs, DRAM)

[Figure labels: Application Market, Over the Air Update, Applications, Services, User Profile Manager, Third Party, App. OS, Services OS, Private OS, Third Party OS, RTS; platform with CPUs, SPMs, NVM, DMA, DRAM, HDD; voltage-scaled and emerging memories; low-, medium-, and high-power CMP]

PHiLOSoftware Simulator

[Figure labels: Annotations (@LowPower(arr,a,64), @Reliable(arr,b,64), @Secure(arr,c,128)); Application Policies; SimpleScalar Traces for SHA, MOTION, AES, JPEG, BLOWFISH, GSM, H263, ADPCM; Simulated RTOS Environment (A1, A2 on an RTOS); Simulated Virtualized Environment (A1 to A8 on GuestOS1 to GuestOS4 over a Hypervisor); SystemC TLM platform with CPUs, SPMs, S-DMA, HDD; Platform DB; Memory Technology; Fault & Variability Models; Power/Perf. Models (CACTI/NVSim)]

Sample architecture configuration file for 2 core CMP with SPMs

// create cpus (callouts on the slide: Priority, HW ID)
gen_0 = new generator("gen_0", MIN_PRIO+1, NORMAL, 2, 0x00);
gen_1 = new generator("gen_1", MIN_PRIO+2, NORMAL, 2, 0x01);
spmvisor_0 = new spmvisor("spmvisor_0", SPMVISORBASE, SPMVISORBASE + SPMVISORSPACESIZE - 1,
                          1, 1, MIN_PRIO+0, NORMAL, 2, 0x04);

// mem (callouts on the slide: Physical Start Address, Physical End Address)
spm_0 = new spm("spm_0", SPMBASE + 0*SPM_SIZE, SPMBASE + 1*SPM_SIZE-1, 1, 1);
spm_1 = new spm("spm_1", SPMBASE + 1*SPM_SIZE, SPMBASE + 2*SPM_SIZE-1, 1, 1);

// bus (callout on the slide: Arbitration Policy)
bus_0 = new channel_amba2("bus_amba2_0", false);
bus_arbiter_0 = new arbiter("arbiter_amba2_0", STATIC, false);
bus_1 = new channel_amba2("bus_amba2_1", false);
bus_arbiter_1 = new arbiter("arbiter_amba2_1", STATIC, false);

Module Connectivity

// connect modules to the bus
bus_0->arbiter_port(*bus_arbiter_0);
gen_0->off_bus_port(*bus_0);
gen_0->on_bus_port(*bus_1);
gen_1->off_bus_port(*bus_0);
gen_1->on_bus_port(*bus_1);
spmvisor_0->bus_port_req(*bus_0);
spmvisor_0->bus_port_spm(*bus_1);
bus_0->slave_port(*spmvisor_0);
bus_0->slave_port(*ram);
bus_1->slave_port(*spm_0);
bus_1->slave_port(*spm_1);

Trace execution loop (excerpt as shown on the slide)

while(...) {
    ...
    if(!p.is_finished() && p.has_more())
    {
        inst = p.get_next_inst();
#ifdef VERBOSE
        printf("%g %s : EXECUTE_INST <%s, 0x%lx, 0x%lx>\n",
               sc_simulation_time(), name(), inst.op.c_str(), inst.pc, inst.address);
#endif
#ifdef LOAD_INSTRUCTIONS
        entry_p = p.v_lut.lookup(inst.pc);
        if(entry_p!=NULL)
        {
            if(entry_p->in_spm)
            {
                spm_ax_inc(p.id);
#ifdef VSPM_ENABLED
                read(&off_bus_port,entry_p->pa,packet);
#else
                read(&on_bus_port,entry_p->pa,packet);
#endif
            }
#ifdef RISC_CYCLES_EN
        wait(RISC_CYCLES(S_ISA_TO_INT_ISA(inst.op))+inst.cycles, SC_NS);
#else
        wait(1, SC_NS);
#endif
        ...


PHiLOSoftware DEMO1: Bus Protocol Selection

SimpleScalar Trace

Instruction records (instruction, operands, PC):

lw     r16,0(r29)        0x400140
lui    r28,0x1001        0x400148
addiu  r28,r28,-26160    0x400150
addiu  r17,r29,4         0x400158
addiu  r3,r17,4          0x400160
sll    r2,r16,2          0x400168
addu   r3,r3,r2          0x400170
addu   r18,r0,r3         0x400178
sw     r18,-32412(r28)   0x400180
addiu  r29,r29,-24       0x400188
addu   r4,r0,r16         0x400190
addu   r5,r0,r17         0x400198
addu   r6,r0,r18         0x4001a0
jal    0x403800          0x4001a8
addiu  r29,r29,-24       0x403800

Memory access records (PC, Access Type, Address, Number of bytes, trailing field as shown on the slide):

0x400140  _YY_R_  0x7fff8000  4  0
0x400180  _YY_W_  0x10001b34  4  0

SystemC - TLM Model: four SimpleScalar CPUs, each with an 8KB I$ and an 8KB D$ (CACTI), on an AMBA AHB TLM bus
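The write address 0x10001b34 in the trace can be cross-checked against the instructions that build r28 before the sw at PC 0x400180. The sketch below replays lui and addiu with 32-bit wraparound. It assumes PISA follows the usual MIPS semantics for these two instructions, and that the write record belongs to that sw; both are readings of the slide rather than statements from it.

```python
MASK32 = 0xFFFFFFFF

def lui(imm16: int) -> int:
    """Load upper immediate: place the 16-bit value in the high half-word."""
    return (imm16 << 16) & MASK32

def addiu(reg: int, imm: int) -> int:
    """Add a signed immediate with 32-bit wraparound (no overflow trap)."""
    return (reg + imm) & MASK32

r28 = lui(0x1001)                 # lui   r28,0x1001
r28 = addiu(r28, -26160)          # addiu r28,r28,-26160
store_addr = addiu(r28, -32412)   # effective address of sw r18,-32412(r28)

print(hex(r28), hex(store_addr))
```

The computed store address matches the 0x10001b34 that appears next to the _YY_W_ record, which supports pairing that record with the sw instruction.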

DEMO1: Running

Usage: testbench.x <arb protocol> <cycles to simulate>

./cmp_2cpu_cache.x STATIC 1000000

<arb protocol> can be STATIC, RANDOM, ROUNDROBIN, TDMA, or TDMA_RR

<cycles to simulate>: from 10,000; use -1 for a full application run

Sample run output:

501858 ram : read 0x1046370347 at address 0xc14
501940 ram : write 0x364210008 at address 0xffdfe20
501946 simple_cpu_0 : EXECUTE_INST <lw, 0x4005c8, 0x2147450764>
501951 simple_cpu_0 : EXECUTE_INST <addiu, 0x0, 0x11032>
501951 simple_cpu_0 : EXECUTE_INST <sw, 0x4005d8, 0x2147450764>
502022 ram : read 0x628966950 at address 0xc18
502114 simple_cpu_0 : EXECUTE_INST <sw, 0x4005e8, 0x268445784>

DEMO1: Output Analysis

Effect of arbitration protocol:

Each of the different arbitration protocols has different behavior

Can be observed by number of arbitration wait cycles from each cache interface

STATIC is blocking (e.g., CPU 0 has highest priority)

May lead to starvation of other CPUs

Run 10000 cycles with STATIC arb protocol to see example

./cmp_2cpu_cache.x STATIC 1000000

cache_0: Arbitration wait cycles (Reads) = 0
cache_0: Arbitration wait cycles (Writes) = 0
cache_1: Arbitration wait cycles (Reads) = 4581
cache_1: Arbitration wait cycles (Writes) = 391

./cmp_2cpu_cache.x TDMA 1000000

cache_0: Arbitration wait cycles (Reads) = 103313
cache_0: Arbitration wait cycles (Writes) = 128494
cache_1: Arbitration wait cycles (Reads) = 148688
cache_1: Arbitration wait cycles (Writes) = 28329
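One way to compare the two runs is to total the read and write wait cycles per cache and look at how the waiting is split between the two caches. This is a small sketch over the numbers printed above; the data layout is illustrative.

```python
# Arbitration wait cycles (reads, writes) per cache interface, from the two runs.
waits = {
    "STATIC": {"cache_0": (0, 0), "cache_1": (4581, 391)},
    "TDMA": {"cache_0": (103313, 128494), "cache_1": (148688, 28329)},
}

def totals(protocol: str) -> dict:
    """Total wait cycles (reads + writes) for each cache under a protocol."""
    return {cache: r + w for cache, (r, w) in waits[protocol].items()}

def share(protocol: str, cache: str) -> float:
    """Fraction of all wait cycles under a protocol that fall on one cache."""
    t = totals(protocol)
    return t[cache] / sum(t.values())

for protocol in waits:
    print(protocol, totals(protocol),
          f"cache_1 share = {share(protocol, 'cache_1'):.1%}")
```

Under STATIC all of the waiting falls on cache_1, the lower-priority interface. Under TDMA both caches wait, and they wait far more in absolute terms, which is the fairness versus execution-time trade-off the slide describes.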

DEMO1: Output Analysis

TDMA

Fair

Increases total execution time because of time-slots

FULL SIM: 8.44379e+06

STATIC

Might cause starvation

Processes with high priority finish faster

E.g. CPU0 has highest priority

FULL SIM: 5.93757e+06

Reverse priorities (CPU1>CPU0): 6.0784e+06

CPU0 has the longest task to execute

CPU0 suffers performance degradation because CPU1 has higher priority
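The three FULL SIM figures are easier to compare as ratios against the fastest run. A minimal sketch follows; the values are used in whatever time unit the simulator reports, which the slide does not state.

```python
# FULL SIM results from the slide (simulator time units, not stated on the slide).
full_sim = {
    "STATIC (CPU0 > CPU1)": 5.93757e6,
    "STATIC (CPU1 > CPU0)": 6.0784e6,
    "TDMA": 8.44379e6,
}

baseline = full_sim["STATIC (CPU0 > CPU1)"]
slowdown = {name: t / baseline for name, t in full_sim.items()}

for name, factor in slowdown.items():
    print(f"{name:<22} {factor:.3f}x ({factor - 1:+.1%})")
```

By this measure TDMA takes roughly 42% longer than STATIC with CPU0 at the highest priority, while reversing the STATIC priorities costs only about 2%.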

DEMO1: 8 Core CMP

./cmp_8cpu_cache.x STATIC 1000000

1 Million cycles and STATIC arbitration protocol

ram: |cache_0| Reads: 2240 Writes: 4936
ram: |cache_1| Reads: 3072 Writes: 1518
ram: |cache_2| Reads: 475  Writes: 19

Transaction Starvation (no transactions are listed for cache_3 to cache_7)

./cmp_8cpu_cache.x RANDOM 1000000

Same as above, RANDOM arbitration

ram: |cache_0| Reads: 1150 Writes: 416
ram: |cache_1| Reads: 1445 Writes: 198
ram: |cache_2| Reads: 1088 Writes: 492
ram: |cache_3| Reads: 768  Writes: 679
ram: |cache_4| Reads: 576  Writes: 880
ram: |cache_5| Reads: 576  Writes: 901
ram: |cache_6| Reads: 768  Writes: 784
ram: |cache_7| Reads: 1088 Writes: 470

Greater fairness

DEMO1: 8 Core CMP - Protocol Comparison

Protocol    Reads  Writes  Total  Average Transactions  Average Arb. Wait Cycles  $ Read STDev  $ Write STDev
STATIC      5787   6473    12260  766.25                9802.5                    1145.329531   1636.738941
RANDOM      7459   4820    12279  767.4375              10051.125                 288.6667358   232.6671442
ROUNDROBIN  7076   4460    11536  721                   68994.75                  238.1438641   238.1438641
TDMA        7076   4460    11536  721                   68994.75                  238.1438641   238.1438641

- Almost same number of accesses for all protocols

- Higher STDev means higher variation in the number of transactions across caches

- E.g., STATIC vs. RANDOM, RR, TDMA
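The STATIC and RANDOM rows of the comparison can be reproduced from the per-cache counts printed by the two 8-core runs. The sketch below assumes that caches missing from the STATIC output performed zero transactions, and that the average is taken over 16 read/write ports (8 caches x 2); with those assumptions the population standard deviation matches the table.

```python
from statistics import pstdev

NUM_CACHES = 8

# Per-cache transaction counts from the 8-core runs. Assumption: caches that
# are not listed in the STATIC output performed zero transactions.
reads = {
    "STATIC": [2240, 3072, 475, 0, 0, 0, 0, 0],
    "RANDOM": [1150, 1445, 1088, 768, 576, 576, 768, 1088],
}
writes = {
    "STATIC": [4936, 1518, 19, 0, 0, 0, 0, 0],
    "RANDOM": [416, 198, 492, 679, 880, 901, 784, 470],
}

def summarize(protocol: str) -> dict:
    """Rebuild one row of the protocol comparison table."""
    r, w = reads[protocol], writes[protocol]
    total = sum(r) + sum(w)
    return {
        "reads": sum(r),
        "writes": sum(w),
        "total": total,
        "average": total / (2 * NUM_CACHES),   # per read/write port
        "read_stdev": pstdev(r),               # population standard deviation
        "write_stdev": pstdev(w),
    }

for protocol in reads:
    s = summarize(protocol)
    print(f"{protocol:<7} {s['reads']:>5} {s['writes']:>5} {s['total']:>6} "
          f"{s['average']:>9.4f} {s['read_stdev']:>12.6f} {s['write_stdev']:>12.6f}")
```

The large STATIC deviations come from three caches carrying all the traffic while five carry none; under RANDOM the counts are spread across all eight caches.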


Shared SPMs in Heterogeneous Multi-tasking Environments (Open Environment)

Problem: Fixed allocation policies

RTOS: known single/multiple apps

Enforce pre-defined policies

Reduced power consumption/better performance…

Want to launch newly downloaded App!

No SPM space available, map all data off-chip

What about data and task criticality/priority?

Need dynamic and selective enforcement of allocation policies

Fixed policies do not work well in open environments where the number of applications running concurrently is unknown!

[Figure labels: App1 to App5 with task priority (High, Medium, Low) on CPU0 and CPU1 under an RTOS; Crypto, DMA, SPM0, SPM1, and MM on an AMBA AHB bus; MM-SPM transfers]

Virtual ScratchPad Memories (vSPMs)

A1 and A2 compete for same SPM

Applications see their own dedicated SPM(s): A1 uses vSPM1, A2 uses vSPM2

Block-based priority-driven allocation policies

[Figure: vSPM1 and vSPM2 are built from 1K blocks mapped onto three regions of the physical address space: SPM Space (0 to 4K-1), Protected Evict Memory (PEM) Space (4K to 8K-1), and MM Space (up to nGB-1)]

Priority-based Dynamic Memory Allocation

Create vSPM(s) prior to running applications

May have data/application based priorities

Selective eviction (data-priority)

No need to evict data from SPMs on every context switch

Need to launch new (trusted) application

Low priority data is mapped to PEM space!

ALL data is protected through vSPMs

Support priority-based selective allocation (data and application driven)

Low-priority vSPM blocks mapped to PEM space (data protected through SPMVisor)

Minimize overheads of generating trusted environments!

[Figure labels: task priority (High, Medium, Low) for App1 to App4 on CPU0 and CPU1; SPM0, SPM1, PEM; MM-SPM transfers; data priority (Normal, Selective Allocation, Selective Eviction) for blocks S1 to S6 and P1 to P3]

DEMO3: Setup

Simulated Virtualized Environment: applications A1 to A8 run on GuestOS1 to GuestOS4 over a Hypervisor

Total of 4 active cores

8KB SPM space (per core)

Total of 32KB on-chip space

Simulated 1 vSPM per app

Total of 8 * 8KB = 64KB on-chip virtualized space

Simulated Virtualized Environment:

Generates input for SystemC model

Annotated traces with context switch information per application

name  time_slot  cx_cost  mem_sz(MB)  n_app  {program name, ...}
os_a  10000      4000     128         2      adpcm/mem.trace aes/mem.trace
os_b  10000      4000     128         2      blowfish/mem.trace gsm/mem.trace
os_c  10000      4000     128         2      h263/mem.trace jpeg/mem.trace
os_d  10000      4000     128         2      motion/mem.trace sha/mem.trace
hyp   20000      6000     128

name  entries  policy (supported: full assoc(fifo) = 1, not supported yet: 2-set asso = 2, 4-set asso = 4)
dtlb  12       1

[Figure labels: 4 CPUs, each with an SPM; SPMVisor; Crypto; S-DMA; MM]

DEMO3: Hypervisor - CX vs. vSPMs

Hypervisor CX: on a context-switch, evict data from SPMs

Load data for new tasks onto SPMs

Hypervisor w/vSPMs: no need to evict data from SPMs

Protects integrity of SPM data

Each Application has a dedicated virtual space

At run-time load SPM allocation tables

Object Name      T_Start  T_End   Lifetime  Addr_Start  Addr_End    # of Accesses
Buffer_0x7f<7>:  1        87      86        2147450879  2147454974  7
Buffer_0x7f<8>:  16       233608  233592    2147446783  2147450878  84825
Buffer_0x10<0>:  1295     232119  230824    268435456   268439551   3822
Buffer_0x10<1>:  89       233598  233509    268439552   268443647   370
Buffer_0x10<2>:  9        233169  233160    268443648   268447743   25088
Buffer_0x10<3>:  226971   233166  6195      268447744   268451839   1058
Buffer_0x10<4>:  228507   230038  1531      268451840   268455935   1024
Buffer_0x10<5>:  230043   231574  1531      268455936   268460031   1024
Buffer_0x10<6>:  231816   233043  1227      268460032   268464127   17
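The columns of the SPM allocation table are related: Lifetime is T_End minus T_Start, and every address range spans the same number of bytes. The sketch below checks both relations and ranks the buffers by access count. The tuple layout is an illustration chosen here, not the simulator's file format.

```python
BLOCK_SIZE = 4096  # bytes; each Addr_Start..Addr_End range in the table spans this much

# (object, T_Start, T_End, Lifetime, Addr_Start, Addr_End, accesses)
table = [
    ("Buffer_0x7f<7>", 1, 87, 86, 2147450879, 2147454974, 7),
    ("Buffer_0x7f<8>", 16, 233608, 233592, 2147446783, 2147450878, 84825),
    ("Buffer_0x10<0>", 1295, 232119, 230824, 268435456, 268439551, 3822),
    ("Buffer_0x10<1>", 89, 233598, 233509, 268439552, 268443647, 370),
    ("Buffer_0x10<2>", 9, 233169, 233160, 268443648, 268447743, 25088),
    ("Buffer_0x10<3>", 226971, 233166, 6195, 268447744, 268451839, 1058),
    ("Buffer_0x10<4>", 228507, 230038, 1531, 268451840, 268455935, 1024),
    ("Buffer_0x10<5>", 230043, 231574, 1531, 268455936, 268460031, 1024),
    ("Buffer_0x10<6>", 231816, 233043, 1227, 268460032, 268464127, 17),
]

for name, t_start, t_end, lifetime, a_start, a_end, _ in table:
    assert t_end - t_start == lifetime, name        # Lifetime = T_End - T_Start
    assert a_end - a_start + 1 == BLOCK_SIZE, name  # each buffer is one 4 KB block

total_accesses = sum(row[6] for row in table)
by_accesses = sorted(table, key=lambda row: row[6], reverse=True)
hottest = by_accesses[0]

print(f"total accesses: {total_accesses}")
for name, *_, accesses in by_accesses[:3]:
    print(f"{name:<16} {accesses:>6} ({accesses / total_accesses:.1%})")
```

A ranking like this suggests which blocks an allocator would most want to keep on-chip; two of the nine buffers account for the large majority of accesses.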

DEMO3: Hypervisor - CX vs. vSPMs (Cont.)

Comparison of cx and vSPMs

E1:

spmvisor_e1.x - 2 Applications, 1 OS, Hypervisor, 1 Core, 1 SPM, 2 x vSPM

rtos_e1.x - 2 Applications, 1 OS, Hypervisor, 1 Core, 1 SPM

                   Traditional (CX)  SPMVisor     Improvements
Execution Time     2.89E+07          1.46E+07     49 % Lower Execution Time
Total Energy (nJ)  677486.6228       52877.27006  92 % Energy Savings

DEMO3: Hypervisor - CX vs. vSPMs (Cont.)

Comparison of cx and vSPMs

E4:

spmvisor_e4.x - 8 Applications, 4 OSes, Hypervisor, 4 Cores, 4 SPMs, 8 vSPMs

rtos_e4.x - 8 Applications, 4 OSes, Hypervisor, 4 Cores, 4 SPMs

                   Traditional (CX)  SPMVisor     Improvements
Execution Time     6.25E+07          2.18E+07     65 % Lower Execution Time
Total Energy (nJ)  2475745.172       465330.5984  81 % Energy Savings

- The number of data evictions/loads due to context switching hurts both performance and energy
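The improvement percentages for E1 and E4 are relative reductions against the traditional context-switch (CX) baseline. A short sketch that recomputes them from the reported execution times and energies; the energy values are entered to the precision that is unambiguous on the slide.

```python
# (Traditional CX, SPMVisor) pairs from the E1 and E4 experiments.
results = {
    "E1": {"execution_time": (2.89e7, 1.46e7),
           "energy_nJ": (677486.6228, 52877.2700)},
    "E4": {"execution_time": (6.25e7, 2.18e7),
           "energy_nJ": (2475745.172, 465330.598)},
}

def reduction_pct(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (1.0 - improved / baseline)

for experiment, metrics in results.items():
    for metric, (cx, spmvisor) in metrics.items():
        print(f"{experiment} {metric:<15} {reduction_pct(cx, spmvisor):5.1f} % lower")
```

Rounded to whole percentages, these reproduce the 49 % and 92 % figures for E1 and the 65 % and 81 % figures for E4.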

Questions?


Contact Information

Houman Homayoun

Email: hhomayou@eng.ucsd.edu

http://cseweb.ucsd.edu/~hhomayoun/

Manish Arora

Email: marora@eng.ucsd.edu

http://cseweb.ucsd.edu/~marora/

Luis Angel Bathen

Email: lbathen@uci.edu

www.ics.uci.edu/~lbathen/


Architecture Tools Publicly Available for Download

HotSpot: http://lava.cs.virginia.edu/HotSpot/

DARSIM (Hornet): http://csg.csail.mit.edu/hornet/

GPGPU-SIM: http://www.gpgpu-sim.org/

SimpleScalar: http://www.simplescalar.com/

GEM5: http://www.m5sim.org/Main_Page

CACTI: http://www.hpl.hp.com/research/cacti/

McPAT: http://www.hpl.hp.com/research/mcpat/

NVSIM: http://www.rioshering.com/nvsimwiki/index.php?title=Main_Page

VARIUS: http://iacoma.cs.uiuc.edu/varius/