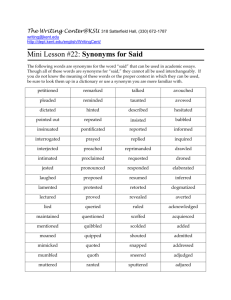

relations

Relations Between Words
CS 4705

Today
• Word Clustering
• Words and Meaning
• Lexical Relations
• WordNet
• Clustering for word sense discovery

Related Words: Clustering
• Clustering feature vectors to ‘discover’ word senses using some similarity metric (e.g. cosine similarity)
  – Represent each cluster as the average of the feature vectors it contains
  – Label clusters by hand with known senses
  – Classify unseen instances by proximity to these known and labeled clusters
• Evaluation problem
  – What are the ‘right’ senses?
  – Cluster impurity
  – How do you know how many clusters to create?
  – Some clusters may not map to ‘known’ senses

Related Words: Dictionary Entries
• Lexeme: an entry in the lexicon that includes
  – an orthographic representation
  – a phonological form
  – a symbolic meaning representation or sense
• Some typical dictionary entries:
  – Red (‘red) n: the color of blood or a ruby
  – Blood (‘bluhd) n: the red liquid that circulates in the heart, arteries and veins of animals
  – Right (‘rIt) adj: located nearer the right hand esp. being on the right when facing the same direction as the observer
  – Left (‘left) adj: located nearer to this side of the body than the right
• Can we get semantics directly from online dictionary entries?
  – Some are circular
  – All are defined in terms of other lexemes
  – You have to know something to learn something
• What can we learn from dictionaries?
  – Relations between words:
    • Oppositions, similarities, hierarchies

Homonymy
• Homonyms: words with the same form (orthography and pronunciation) but different, unrelated meanings, or senses (multiple lexemes)
  – A bank holds investments in a custodial account in the client’s name.
  – As agriculture is burgeoning on the east bank, the river will shrink even more.
• Word sense disambiguation: what clues?
• Related phenomena
  – homophones: read and red (same pron/different orth)
  – homographs: bass and bass (same orth/different pron)

Ambiguity: Which applications will these cause problems for?
A bass, the bank, red/read
• General semantic interpretation
• Machine translation
• Spelling correction
• Speech recognition
• Text to speech
• Information retrieval

Polysemy
• Word with multiple but related meanings (same lexeme)
  – They rarely serve red meat.
  – He served as U.S. ambassador.
  – He might have served his time in prison.
• What’s the difference between polysemy and homonymy?
• Homonymy:
  – Distinct, unrelated meanings
  – Different etymology? Coincidental similarity?
• Polysemy:
  – Distinct but related meanings
  – idea bank, sperm bank, blood bank, bank bank
  – How different?
    • Different subcategorization frames?
    • Domain specificity?
    • Can the two candidate senses be conjoined? ?He served his time and as ambassador to Norway.
• For either, practical task:
  – What are its senses? (related or not)
  – How are they related? (polysemy ‘easier’ here)
  – How can we distinguish them?

Tropes, or Figures of Speech
• Metaphor: one entity is given the attributes of another (tenor/vehicle/ground)
  – Life is a bowl of cherries. Don’t take it serious….
  – We are the eyelids of defeated caves. ??
• Metonymy: one entity used to stand for another (replacive)
  – GM killed the Fiero.
  – The ham sandwich wants his check. (deferred reference)
• Both extend existing sense to new meaning
  – Metaphor: completely different concept
  – Metonymy: related concepts

Synonymy
• Substitutability: different lexemes, same meaning
  – How big is that plane?
  – How large is that plane?
  – How big are you? Big brother is watching.
• What influences substitutability?
  – Polysemy (large vs. old sense)
  – register: He’s really cheap/?parsimonious.
  – collocational constraints: roast beef, ?baked beef; economy fare, ?economy price

Finding Synonyms and Collocations Automatically from a Corpus
• Synonyms: Identify words appearing frequently in similar contexts
  – Blast victims were helped by civic-minded passersby.
  – Few passersby came to the aid of this crime victim.
• Collocations: Identify synonyms that don’t appear in some specific similar contexts
  – Flu victims, flu sufferers, …
  – Crime victims, ?crime sufferers, …

Hyponymy
• General: hypernym (superordinate)
  – dog is a hypernym of poodle
• Specific: hyponym (underneath)
  – poodle is a hyponym of dog
• Test: That is a poodle implies that is a dog
• Ontology: set of domain objects
• Taxonomy? Specification of relations between those objects
• Object hierarchy? Structured hierarchy that supports feature inheritance (e.g. poodle inherits some properties of dog)

Semantic Networks
• Used to represent lexical relationships
  – e.g. WordNet (George Miller et al.)
  – Most widely used hierarchically organized lexical database for English
  – Synset: set of synonyms, a dictionary-style definition (or gloss), and some examples of uses --> a concept
  – Databases for nouns, verbs, and modifiers
• Applications can traverse network to find synonyms, antonyms, hierarchies, ...
  – Available for download or online use
  – http://www.cogsci.princeton.edu/~wn

Using WN, e.g. in Question-Answering
• Pasca & Harabagiu ’01 results on TREC corpus
  – Parses questions to determine question type, key words (Who invented the light bulb?)
  – Person question; invent, light, bulb
  – The modern world is an electrified world. It might be argued that any of a number of electrical appliances deserves a place on a list of the millennium's most significant inventions. The light bulb, in particular, profoundly changed human existence by illuminating the night and making it hospitable to a wide range of human activity. The electric light, one of the everyday conveniences that most affects our lives, was invented in 1879 simultaneously by Thomas Alva Edison in the United States and Sir Joseph Wilson Swan in England.
• Finding named entities is not enough
• Compare expected answer ‘type’ to potential answers
  – For questions of type person, the expected answer is a person
  – Identify potential person names in passages retrieved by IR
  – Check in WN to find which of these are hyponyms of person
• Or, consider reformulations of the question: Who invented the light bulb?
  – For key words in query, look for WN synonyms
  – E.g. Who fabricated the light bulb?
  – Use this query for initial IR
• Results: improve system accuracy by 147% (on some question types)

Next time
• Chapter 18.10
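
The answer-type check from the question-answering slide (keep only candidates that are hyponyms of the expected type) can be sketched roughly as follows. This is a minimal illustration, not the Pasca & Harabagiu system: the `HYPERNYM` table, the category labels attached to each candidate, and the function names `is_hyponym_of` and `filter_candidates` are all assumptions made up for this example, standing in for a real WordNet lookup.

```python
# Toy hypernym links (child -> parent). These entries are hand-written
# assumptions for illustration; they are not data taken from WordNet.
HYPERNYM = {
    "poodle": "dog",
    "dog": "animal",
    "inventor": "person",
    "physicist": "scientist",
    "scientist": "person",
    "light bulb": "device",
}


def is_hyponym_of(word, ancestor):
    """Return True if `ancestor` is reachable from `word` via hypernym links."""
    seen = set()  # guards against accidental cycles in the table
    current = word
    while current in HYPERNYM and current not in seen:
        seen.add(current)
        current = HYPERNYM[current]
        if current == ancestor:
            return True
    return False


def filter_candidates(candidates, expected_type):
    """Keep candidates whose category equals or is a hyponym of expected_type."""
    return [
        name
        for name, category in candidates
        if category == expected_type or is_hyponym_of(category, expected_type)
    ]


# Candidate answers for "Who invented the light bulb?", each paired with a
# hypothetical category label (an assumption, as if supplied by an earlier step).
candidates = [
    ("Thomas Alva Edison", "inventor"),
    ("Joseph Wilson Swan", "physicist"),
    ("light bulb", "light bulb"),
]

person_answers = filter_candidates(candidates, "person")
print(person_answers)
```

The same upward walk implements the hyponymy test from the earlier slide: "that is a poodle" implies "that is a dog" because dog is reachable from poodle, while the reverse does not hold.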