Argumentation

Henry Prakken

SIKS Basic Course

Learning and Reasoning

May 26th, 2009

Why do agents need argumentation?

For their internal reasoning

Reasoning about beliefs, goals, intentions, etc. is often defeasible

For their interaction with other agents

Information exchange, negotiation, collaboration, …

Overview

Inference (logic)

Abstract argumentation

Rule-based argumentation

Dialogue

Part 1:

Inference

[Figure: an argument graph, built up over successive slides. Its statements, grouped by argument:]

We should lower taxes

Lower taxes increase productivity

Increased productivity is good

Prof. P says that …

We should not lower taxes

Lower taxes increase inequality

Increased inequality is bad

Lower taxes do not increase productivity

USA lowered taxes but productivity decreased

Prof. P is not objective

Prof. P has political ambitions

People with political ambitions are not objective

Increased inequality is good

Increased inequality stimulates competition

Competition is good

Sources of conflict

Default generalisations

Conflicting information sources

Alternative explanations

Conflicting goals, interests

Conflicting normative, moral opinions

…

Application areas

Medical diagnosis and treatment

Legal reasoning

Interpretation

Evidence / crime investigation

Intelligence

Decision making

Policy design

…

[Figure: the same argument graph, with its arguments labelled A, B, C, D and E]

Status of arguments: abstract semantics (Dung 1995)

INPUT: a pair ⟨Args, Defeat⟩

OUTPUT: An assignment of the status ‘in’ or ‘out’ to all members of Args

So: semantics specifies conditions for labeling the ‘argument graph’.

Should capture reinstatement:

[Figure: defeat graph over arguments A, B and C]

Possible labeling conditions

Every argument is either ‘in’ or ‘out’.

1. An argument is ‘in’ if all arguments defeating it are ‘out’.

2. An argument is ‘out’ if it is defeated by an argument that is ‘in’.

Works fine with:

[Figure: defeat graph over arguments A and B]

But not with:

[Figure: defeat graph over arguments A, B and C]

Two solutions

Change conditions so that a unique status assignment always results

[Figure: example defeat graphs over arguments A and B]

Use multiple status assignments:

[Figure: example defeat graphs over arguments A, B and C]

Unique status assignments

Grounded semantics (Dung 1995):

S0: the empty set

Si+1: Si + all arguments defended by Si

...

(S defends A if all defeaters of A are defeated by a member of S)

[Figure: defeat graph over arguments A, B, C, D and E]

Is B, D or E defended by S1? Is B or E defended by S2?
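The iteration S0, S1, ... can be sketched in a few lines of Python. This is only an illustration: the function name `grounded_extension` and the example graph are assumptions (the slide's own graph is not recoverable from the text), and the defeat relation is represented as a set of (defeater, defeated) pairs.

```python
def grounded_extension(args, defeat):
    """Iterate S_{i+1} = S_i + all arguments defended by S_i, starting from the empty set."""
    defeaters = {a: {x for (x, y) in defeat if y == a} for a in args}

    def defends(s, a):
        # S defends a if every defeater of a is defeated by some member of S
        return all(any((c, b) in defeat for c in s) for b in defeaters[a])

    s = set()
    while True:
        nxt = s | {a for a in args if defends(s, a)}
        if nxt == s:          # nothing new is defended: fixed point reached
            return s
        s = nxt

# Hypothetical graph: C defeats B, B defeats A, D and E defeat each other
args = {"A", "B", "C", "D", "E"}
defeat = {("C", "B"), ("B", "A"), ("D", "E"), ("E", "D")}
print(sorted(grounded_extension(args, defeat)))  # ['A', 'C']
```

Here S1 = {C} (C has no defeaters) and S2 = {A, C} (C defends A against B); D and E are never defended, so they stay outside.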

A problem(?) with grounded semantics

We have:

[Figure: labelling of the defeat graph over arguments A, B, C and D]

We want(?):

[Figure: labelling of the defeat graph over arguments A, B, C and D]

A problem(?) with grounded semantics

A = Frederic Michaud is French since he has a French name

B = Frederic Michaud is Dutch since he is a marathon skater

C = F.M. likes the EU since he is European (assuming he is not Dutch or French)

D = F.M. does not like the EU since he looks like a person who does not like the EU

[Figure: defeat graph over arguments A, B, C and D]

A problem(?) with grounded semantics

A = Frederic Michaud is French since Alice says so

B = Frederic Michaud is Dutch since Bob says so

C = F.M. likes the EU since he is European (assuming he is not Dutch or French)

D = F.M. does not like the EU since he looks like a person who does not like the EU

E = Alice and Bob are unreliable since they contradict each other

[Figure: defeat graph over arguments A, B, C, D and E]

Multiple labellings

[Figure: two labellings of the defeat graph over arguments A, B, C and D]

Status assignments (1)

Given ⟨Args, Defeat⟩:

A status assignment is a partition of Args into sets In and Out such that:

1. An argument is in In if all arguments defeating it are in Out.

2. An argument is in Out if it is defeated by an argument that is in In.

[Figure: defeat graph over arguments A, B and C]

Status assignments (2)

Given ⟨Args, Defeat⟩:

A status assignment is a partition of Args into sets In, Out and Undecided such that:

1. An argument is in In if all arguments defeating it are in Out.

2. An argument is in Out if it is defeated by an argument that is in In.

A status assignment is stable if Undecided = ∅.

In is a stable extension

A status assignment is preferred if Undecided is ⊆-minimal.

In is a preferred extension

A status assignment is grounded if Undecided is ⊆-maximal.

In is the grounded extension
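A brute-force sketch of these three-valued status assignments, for small graphs only. Two assumptions are made here and are not from the slides: conditions 1 and 2 are read as 'if and only if' (the usual reading in the labelling literature), and the example graph and all function names are hypothetical.

```python
from itertools import product

def status_assignments(args, defeat):
    """All In/Out/Undecided partitions satisfying conditions 1 and 2 (read as 'iff')."""
    args = sorted(args)
    defeaters = {a: [x for (x, y) in defeat if y == a] for a in args}
    found = []
    for labels in product(("in", "out", "undec"), repeat=len(args)):
        lab = dict(zip(args, labels))
        ok = all(
            # 1. 'in' exactly when all defeaters are 'out'
            (lab[a] == "in") == all(lab[b] == "out" for b in defeaters[a])
            # 2. 'out' exactly when some defeater is 'in'
            and (lab[a] == "out") == any(lab[b] == "in" for b in defeaters[a])
            for a in args
        )
        if ok:
            found.append(lab)
    return found

def undecided(lab):
    return {a for a, l in lab.items() if l == "undec"}

def in_set(lab):
    return {a for a, l in lab.items() if l == "in"}

# Hypothetical graph: A and B defeat each other, B defeats C
args = {"A", "B", "C"}
defeat = {("A", "B"), ("B", "A"), ("B", "C")}
labs = status_assignments(args, defeat)

stable = [in_set(l) for l in labs if not undecided(l)]
preferred = [in_set(l) for l in labs
             if not any(undecided(m) < undecided(l) for m in labs)]
grounded = [in_set(l) for l in labs
            if not any(undecided(m) > undecided(l) for m in labs)]
```

For this graph there are three assignments: In = {A, C}, In = {B}, and everything Undecided. The first two are both stable and preferred; the third gives the (empty) grounded extension.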

Dung’s original definitions

Given ⟨Args, Defeat⟩, S ⊆ Args, A ∈ Args:

S is conflict-free if no member of S defeats a member of S

S defends A if all defeaters of A are defeated by a member of S

S is admissible if it is conflict-free and defends all its members

S is a preferred extension if it is ⊆-maximally admissible

S is a stable extension if it is conflict-free and defeats all arguments outside it

S is the grounded extension if S is the ⊆-smallest set such that A ∈ S iff S defends A.
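The set-based definitions translate almost word for word into code. The sketch below enumerates all subsets, so it is only meant for toy graphs; the function names and the example graph (a mutual defeat plus a separate three-cycle) are assumptions chosen to show that a preferred extension need not be stable.

```python
from itertools import combinations

def conflict_free(s, defeat):
    return not any((a, b) in defeat for a in s for b in s)

def defends(s, a, args, defeat):
    # every defeater b of a is defeated by some member c of s
    return all(any((c, b) in defeat for c in s)
               for b in args if (b, a) in defeat)

def admissible(s, args, defeat):
    return conflict_free(s, defeat) and all(defends(s, a, args, defeat) for a in s)

def subsets(args):
    items = sorted(args)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def preferred_extensions(args, defeat):
    adm = [s for s in subsets(args) if admissible(s, args, defeat)]
    return [s for s in adm if not any(s < t for t in adm)]   # subset-maximal

def stable_extensions(args, defeat):
    return [s for s in subsets(args)
            if conflict_free(s, defeat)
            and all(any((a, b) in defeat for a in s) for b in args - s)]

# Hypothetical graph: A and B defeat each other; C, D, E form a defeat cycle
args = {"A", "B", "C", "D", "E"}
defeat = {("A", "B"), ("B", "A"), ("C", "D"), ("D", "E"), ("E", "C")}
print(preferred_extensions(args, defeat))  # [{'A'}, {'B'}]
print(stable_extensions(args, defeat))     # []
```

No member of the odd cycle can be defended, so {A} and {B} are preferred, but neither defeats C, D and E, so no stable extension exists.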

S defends A if all defeaters of A are defeated by a member of S

S is admissible if it is conflict-free and defends all its members

[Figure: defeat graph over arguments A, B, C, D and E]

Admissible?

S defends A if all defeaters of A are defeated by a member of S

S is admissible if it is conflict-free and defends all its members

S is preferred if it is maximally admissible

[Figure: defeat graph over arguments A, B, C, D and E]

Preferred?

S defends A if all defeaters of A are defeated by a member of S

S is admissible if it is conflict-free and defends all its members

S is grounded if it is the smallest set s.t. A ∈ S iff S defends A

[Figure: defeat graph over arguments A, B, C, D and E]

Grounded?

Properties

The grounded extension is unique

Every stable extension is preferred (but not vice versa)

There exists at least one preferred extension

The grounded extension is a subset of all preferred and stable extensions

…

The ‘ultimate’ status of arguments (and conclusions)

With grounded semantics:

A is justified if A ∈ g.e.

A is overruled if A ∉ g.e. and A is defeated by g.e.

A is defensible otherwise

With preferred semantics:

A is justified if A ∈ p.e. for all p.e.

A is defensible if A ∈ p.e. for some but not all p.e.

A is overruled otherwise (?)

In all semantics:

φ is justified if φ is the conclusion of some justified argument

φ is defensible if φ is not justified and φ is the conclusion of some defensible argument
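As a small illustration, the two classifications can be written as functions that take already computed extensions as input. The function names, the example graph and the listed extensions are assumptions for the sake of the example; the extensions were worked out by hand from the definitions for a hypothetical graph in which C defeats B, B defeats A, and D and E defeat each other.

```python
def status_grounded(a, ge, defeat):
    """Status of argument a given the grounded extension ge."""
    if a in ge:
        return "justified"
    if any((b, a) in defeat for b in ge):   # defeated by a member of g.e.
        return "overruled"
    return "defensible"

def status_preferred(a, pes):
    """Status of argument a given the list of all preferred extensions."""
    n = sum(a in pe for pe in pes)
    if n == len(pes):
        return "justified"
    if n > 0:
        return "defensible"
    return "overruled"

# Hypothetical graph and its hand-computed extensions
defeat = {("C", "B"), ("B", "A"), ("D", "E"), ("E", "D")}
ge = {"A", "C"}
pes = [{"A", "C", "D"}, {"A", "C", "E"}]

for a in "ABCDE":
    print(a, status_grounded(a, ge, defeat), status_preferred(a, pes))
```

In this example both semantics agree: A and C are justified, B is overruled, and D and E are defensible.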

The status of arguments: proof theory

Argument games between proponent and opponent:

Proponent starts with an argument

Then each party replies with a suitable counterargument

Possibly backtracking

A winning criterion

E.g. the other player cannot move

An argument is (dialectically) provable iff proponent has a winning strategy in a game for it.

The G-game for grounded semantics:

A sound and complete game:

Each move replies to previous move

Proponent does not repeat moves

Proponent moves strict defeaters, opponent moves defeaters

A player wins iff the other player cannot move

Result: A is in the grounded extension iff proponent has a winning strategy in a game about A.
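A recursive sketch of the winning-strategy check, under two assumptions that are not stated on the slide: a 'strict defeater' of X is taken to be an argument that defeats X without being defeated by X, and the non-repetition rule is applied per line of the dialogue. The function name and the example graph are hypothetical.

```python
def proponent_wins(a, defeat):
    """True iff proponent has a winning strategy for argument a in the game sketched here."""
    def defeaters(x):
        return [y for (y, z) in defeat if z == x]

    def strict_defeaters(x):
        # y defeats x and x does not defeat y back
        return [y for y in defeaters(x) if (x, y) not in defeat]

    def p_survives(arg, used):
        # Proponent has just moved `arg`; `used` holds proponent's moves in this line.
        # Proponent must have a winning reply to every possible opponent move.
        for o in defeaters(arg):
            if not any(p_survives(p, used | {p})
                       for p in strict_defeaters(o) if p not in used):
                return False
        return True          # opponent cannot move, or every move is answered

    return p_survives(a, {a})

# Hypothetical graph: F and B defeat A, E defeats F and D, C defeats B, D defeats C
defeat = {("F", "A"), ("B", "A"), ("E", "F"), ("C", "B"), ("D", "C"), ("E", "D")}
print(proponent_wins("A", defeat))  # True
print(proponent_wins("D", defeat))  # False
```

The recursion terminates on finite graphs because the set of proponent moves in a line grows with every step.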

A game tree

[Figure: defeat graph over arguments A, B, C, D, E and F, with the game tree built up one move at a time. Moves in the order they are added:]

P: A

O: F

P: E

O: B

P: C

O: D

P: E

The structure of arguments: current accounts

Assumption-based approaches (Dung-Kowalski-Toni, Besnard & Hunter, …)

K = theory

A = assumptions, - is conflict relation on A

R = inference rules (strict)

An argument for p is a set A’ ⊆ A such that A’ ∪ K |-R p

Arguments attack each other on their assumptions

Rule-based approaches (Pollock, Vreeswijk, DeLP, Prakken & Sartor, Defeasible Logic, …)

K = theory

R = inference rules (strict and defeasible)

K yields an argument for p if K |-R p

Arguments attack each other on applications of defeasible inference rules

ASPIC system: overview

Argument structure based on Vreeswijk (1997)

≈ Trees where

Nodes are wff of logical language L closed under negation

Links are applications of inference rules

Strict (φ1, ..., φn → φ); or

Defeasible (φ1, ..., φn ⇒ φ)

Reasoning starts from knowledge base K ⊆ L

Defeat based on Pollock

Argument acceptability based on Dung (1995)

ASPIC system: structure of arguments

An argument A is:

φ if φ ∈ K with

Conc(A) = {φ}

Sub(A) = {φ}

A1, ..., An → ψ if there is a strict inference rule Conc(A1), ..., Conc(An) → ψ

Conc(A) = {ψ}

Sub(A) = Sub(A1) ∪ ... ∪ Sub(An) ∪ {A}

A1, ..., An ⇒ ψ if there is a defeasible inference rule Conc(A1), ..., Conc(An) ⇒ ψ

Conc(A) = {ψ}

Sub(A) = Sub(A1) ∪ ... ∪ Sub(An) ∪ {A}

A is strict if all members of Sub(A) apply strict rules;

else A is defeasible
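One possible way to represent such arguments is as an immutable tree. The sketch below is an assumption-laden illustration, not the ASPIC implementation: the class and field names are hypothetical, conclusions are plain strings, and premises taken from K are treated as applying no rule at all (so they never make an argument defeasible).

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Argument:
    conc: str                          # Conc(A)
    subs: Tuple["Argument", ...] = ()  # direct subarguments A1, ..., An
    rule: str = "premise"              # "premise", "strict" or "defeasible"

    def sub(self):
        """Sub(A): A itself together with Sub(A1), ..., Sub(An)."""
        out = {self}
        for s in self.subs:
            out |= s.sub()
        return out

    def is_strict(self):
        # strict iff no subargument (including A itself) applies a defeasible rule
        return all(s.rule != "defeasible" for s in self.sub())

# Hypothetical example: Penguin is in K, Penguin -> Bird is strict,
# Bird => Flies is defeasible
penguin = Argument("Penguin")
bird = Argument("Bird", (penguin,), "strict")
flies = Argument("Flies", (bird,), "defeasible")

print(sorted(a.conc for a in flies.sub()))   # ['Bird', 'Flies', 'Penguin']
print(bird.is_strict(), flies.is_strict())   # True False
```

Freezing the dataclass makes arguments hashable, which is what allows Sub(A) to be an ordinary Python set.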

[Figure: argument tree with conclusion P, where Q1, R1, R2 ∈ K; P is inferred from Q1 and Q2, and Q2 is inferred from R1 and R2]

Domain-specific vs. general inference rules

R1: Bird ⇒ Flies

R2: Penguin → Bird

Penguin ∈ K

[Figure: argument from Penguin via Bird to Flies]

R1: φ, φ ⇝ ψ ⇒ ψ

Strict rules: all deductively valid inference rules

Bird ⇝ Flies ∈ K

Penguin ⊃ Bird ∈ K

Penguin ∈ K

[Figure: argument from Penguin and Penguin ⊃ Bird to Bird, and from Bird and Bird ⇝ Flies to Flies]

ASPIC system: attack and defeat

≥ is a preference ordering between arguments such that if A is strict and B is defeasible then A > B

A rebuts B if

Conc(A) = ¬Conc(B’) for some B’ ∈ Sub(B); and

B’ applies a defeasible rule; and

not B’ > A

A undercuts B if (naming convention implicit)

Conc(A) = ¬B’ for some B’ ∈ Sub(B); and

B’ applies a defeasible rule

A defeats B if A rebuts or undercuts B

[Figure: argument trees over the formulas P, Q1, Q2, R1, R2, S2, T1, T2, V1, V2 and V3]

Argument acceptability

Dung-style semantics and proof theory directly apply!

Additional properties (cf. Caminada & Amgoud 2007)

Let E be any stable, preferred or grounded extension:

If B ∈ Sub(A) and A ∈ E then B ∈ E

If the strict rules RS are closed under contraposition, then {φ | φ = Conc(A) for some A ∈ E} is

1. closed under RS;

2. consistent if K is consistent

Argument schemes

Many arguments (and attacks) follow patterns.

Much work in argumentation theory (Perelman, Toulmin, Walton, ...)

Argument schemes

Critical questions

Recent applications in AI (& Law)

Argument schemes: general form

Premise 1,

…,

Premise n

Therefore (presumably), conclusion

But also critical questions

Negative answers are counterarguments

Expert testimony

(Walton 1996)

E is expert on D

E says that P

P is within D

Therefore (presumably), P is the case

Critical questions:

Is E biased?

Is P consistent with what other experts say?

Is P consistent with known evidence?

Witness testimony

Witness W says P

Therefore (presumably), P

Critical questions:

Is W sincere? (veracity)

Was P evidenced by W’s senses? (objectivity)

Did P occur? (observational sensitivity)

Perception

P is observed

Therefore (presumably), P

Critical questions:

Are the circumstances such that reliable observation of P is impossible?

…

Memory

P is recalled

Therefore (presumably), P

Critical questions:

Was P originally based on beliefs of which one is false?

…

‘Unpacking’ the witness testimony scheme

Witness W says “I remember I saw P”

Therefore (presumably), W remembers he saw P (witness testimony)

Therefore (presumably), W saw P (memory)

Therefore (presumably), P (perception)

Critical questions:

Is W sincere? (veracity)

Was P evidenced by W’s senses? (objectivity)

Did P occur? (observational sensitivity)

Applying commonsense generalisations

P

If P then usually Q

Therefore (presumably), Q

Flees

If Flees then usually Consc of Guilt

Therefore (presumably), Consc of Guilt

People who flee from a crime scene usually have consciousness of guilt

Critical questions: are there exceptions to the generalisation?

exceptional classes of people may have other reasons to flee

Illegal immigrants

Customers of prostitutes

…

Arguments from consequences

Action A brings about G,

G is good (bad)

Therefore (presumably), A should (not) be done

Critical questions:

Does A also have bad (good) consequences?

Are there other ways to bring about G?

...

Other work on argument-based inference

Reasoning about priorities and defeat

Abstract support relations between arguments

Gradual defeat

Other semantics

Dialectical proof theories

Combining modes of reasoning

...

Part 2:

Dialogue

‘Argument’ is ambiguous

Inferential structure

Single agents

(Nonmonotonic) logic

Fixed information state

Form of dialogue

Multiple agents

Dialogue theory

Changing information state

Example

P: Tell me all you know about recent trading in explosive materials (request)

O: No I won’t (reject)

P: why don’t you want to tell me?

O: since I am not allowed to tell you

P: why aren’t you allowed to tell me?

O: since sharing such information could endanger an investigation

P: You may be right in general (concede) but in this case there is an exception since this is a matter of national importance

O: Why is this a matter of national importance?

P: since we have heard about a possible terrorist attack

O: I concede that there is an exception, so I retract that I am not allowed to tell you. I will tell you on the condition that you don’t exchange the information with other police officers (offer)

P: OK, I agree (offer accepted).

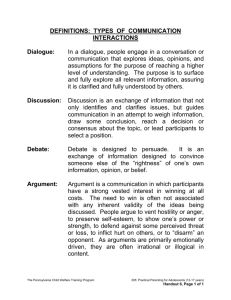

Types of dialogues (Walton & Krabbe)

| Dialogue Type | Dialogue Goal | Initial situation |
|---|---|---|
| Persuasion | resolution of conflict | conflict of opinion |
| Negotiation | making a deal | conflict of interest |
| Deliberation | reaching a decision | need for action |
| Information seeking | exchange of information | personal ignorance |
| Inquiry | growth of knowledge | general ignorance |

Dialogue systems

(according to Carlson 1983)

Dialogue systems define the conditions under which an utterance is appropriate

An utterance is appropriate if it promotes the goal of the dialogue in which it is made

Appropriateness defined not at speech act level but at dialogue level

Dialogue game approach

Protocol should promote the goal of the dialogue

Formal dialogue systems

Topic language

With a logic (possibly nonmonotonic)

Communication language

Locution + content (from topic language)

With a protocol: rules for when utterances may be made

Should promote the goal of the dialogue

Effect rules (e.g. on agent’s commitments)

Termination and outcome rules

Negotiation

Dialogue goal: making a deal

Participants’ goals: maximise individual gain

Typical communication language:

Request p, Offer p, Accept p, Reject p, …

Persuasion

Participants: proponent (P) and opponent (O) of a dialogue topic T

Dialogue goal: resolve the conflict of opinion on T

Participants’ goals:

P wants O to accept T

O wants P to give up T

Typical speech acts:

Claim p, Concede p, Why p, p since S, Retract p, Deny p …

Goal of argument games:

Verify logical status of argument or proposition relative to given theory

Standards for dialogue systems

Argument games: soundness and completeness wrt some logical semantics

Dialogue systems:

Effectiveness wrt dialogue goal

Efficiency, relevance, termination, ...

Fairness wrt participants’ goals

Can everything relevant be said?, ...

Some standards for persuasion systems

Correspondence

With participants’ beliefs

If union of beliefs implies p, can/will agreement on p result?

If parties agree that p, does the union of their beliefs imply p?

...

With ‘dialogue theory’

If union of commitments implies p, can/will agreement on p result?

...

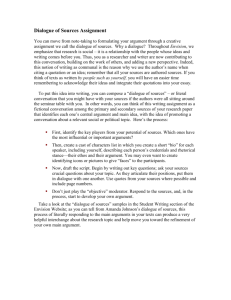

A communication language (Dijkstra et al. 2007)

| Speech act | Attack | Surrender |
|---|---|---|
| request(φ) | offer(φ’), reject(φ) | - |
| offer(φ) | offer(φ’) (φ ≠ φ’), reject(φ) | accept(φ) |
| reject(φ) | offer(φ’) (φ ≠ φ’), why-reject(φ) | - |
| accept(φ) | - | - |
| why-reject(φ) | claim(φ’) | - |
| claim(φ) | why(φ) | concede(φ) |
| why(φ) | φ since S (an argument) | retract(φ) |
| φ since S | why(φ’) (φ’ ∈ S), φ’ since S’ (a defeater) | concede(φ), concede φ’ (φ’ ∈ S) |
| concede(φ) | - | - |
| retract(φ) | - | - |
| deny(φ) | - | - |

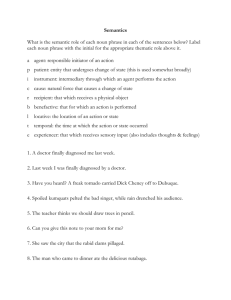

A protocol

(Dijkstra et al. 2007)

Start with a request

Reply to a previous move of the other agent

Pick your replies from the table

Finish persuasion before resuming negotiation

Turntaking:

In nego: after each move

In pers: various rules possible

Termination:

In nego: if offer is accepted or someone withdraws

In pers: if main claim is retracted or conceded
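The core of such a protocol (start with a request, reply to an earlier move of the other agent, pick the reply from the table) can be sketched as a lookup table plus a legality check. Everything below is a simplified illustration: the table entries follow one reading of the communication-language table, move contents are ignored, and turntaking, termination and the rule about finishing persuasion first are not modelled. The names `REPLIES`, `is_legal` and `replay` are hypothetical.

```python
# speech act -> replies that attack it / surrender to it (content is ignored here)
REPLIES = {
    "request":    {"attack": {"offer", "reject"},     "surrender": set()},
    "offer":      {"attack": {"offer", "reject"},     "surrender": {"accept"}},
    "reject":     {"attack": {"offer", "why-reject"}, "surrender": set()},
    "accept":     {"attack": set(),                   "surrender": set()},
    "why-reject": {"attack": {"claim"},               "surrender": set()},
    "claim":      {"attack": {"why"},                 "surrender": {"concede"}},
    "why":        {"attack": {"since"},               "surrender": {"retract"}},
    "since":      {"attack": {"why", "since"},        "surrender": {"concede"}},
    "concede":    {"attack": set(),                   "surrender": set()},
    "retract":    {"attack": set(),                   "surrender": set()},
    "deny":       {"attack": set(),                   "surrender": set()},
}

def is_legal(moves, speaker, act, target=None):
    """moves: list of (speaker, act, target_index); target is the move replied to."""
    if not moves:
        return act == "request" and target is None      # start with a request
    if target is None or not 0 <= target < len(moves):
        return False
    prev_speaker, prev_act, _ = moves[target]
    allowed = REPLIES[prev_act]["attack"] | REPLIES[prev_act]["surrender"]
    return prev_speaker != speaker and act in allowed   # reply to the other agent

def replay(sequence):
    """Check a whole dialogue, move by move."""
    moves = []
    for speaker, act, target in sequence:
        if not is_legal(moves, speaker, act, target):
            return False
        moves.append((speaker, act, target))
    return True

# A dialogue shaped like the police example; targets are indices of earlier moves
dialogue = [
    ("P", "request", None), ("O", "reject", 0), ("P", "why-reject", 1),
    ("O", "claim", 2), ("P", "why", 3), ("O", "since", 4),
    ("P", "since", 5), ("O", "why", 6), ("P", "since", 7),
    ("O", "concede", 6), ("O", "retract", 4),
    ("O", "offer", 0), ("P", "accept", 11),
]
print(replay(dialogue))  # True
```

Keeping the table as data rather than code makes it easy to swap in a different communication language without touching the legality check.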

Example dialogue formalised

P: Request to tell

O: Reject to tell

P: Why reject to tell?

Embedded persuasion

...

O: Offer to tell if no further exchange

P: Accept after tell no further exchange

Persuasion part formalised

O: Claim Not allowed to tell

P: Why not allowed to tell?

O: Not allowed to tell since telling endangers investigation & What endangers an investigation is not allowed

P: Concede What endangers an investigation is not allowed

P: Exception to R1 since National importance & National importance ⇒ Exception to R1

O: Why National importance?

P: National importance since Terrorist threat & Terrorist threat ⇒ National importance

O: Concede Exception to R1

O: Retract Not allowed to tell

Theory building in dialogue

In my 2005 approach to (persuasion) dialogue:

Agents build a joint theory during the dialogue

A dialectical graph

Moves are operations on the joint theory

[Figure: the dialectical graph, built up one move at a time. Moves in the order they are added:]

claim: Not allowed to tell

why: Not allowed to tell

since: Telling endangers investigation; R1: What endangers an investigation is not allowed

concede: R1: What endangers an investigation is not allowed

since: National importance; R2: national importance ⇒ Not R1 (Exception to R1)

why: National importance

since: Terrorist threat; Terrorist threat ⇒ national importance

concede: Exception to R1

retract: Not allowed to tell

Research issues

Investigation of protocol properties

Combinations of dialogue types

Mathematical proof or experimentation

Deliberation!

Multi-party dialogues

Dialogical agent behaviour (strategies)

...

Further information

http://people.cs.uu.nl/henry/siks/siks09.html