Australian Numerals - Supplementary Materials


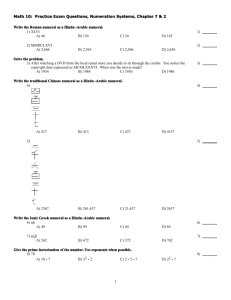

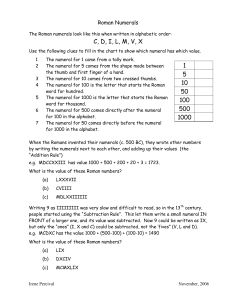

Supplementary Materials

Quantifying Uncertainty in the Phylogenetics of Australian Numeral Systems

Zhou and Bowern

Further Information on Data

Data on numeral systems

Data for this paper come from the same sources as those for Bowern and Zentz [1], and the reader is referred to that paper for details of the primary sources for numeral data. Each language was coded for the extent of its numeral system (that is, the highest attested numeral) and for the etymological structure of the numerals 3 and 4. Bowern and Zentz included data from non-Pama-Nyungan languages; we excluded these languages from our sample because phylogenetic relationships beyond the level of Proto-Pama-Nyungan are not resolved. We have numeral extent data for 127 Pama-Nyungan languages and etymological structure data for 110 languages. Figure S1 summarizes the data.

Each language was assigned a number representing its numeral extent; for example, languages coded ‘3’ have a numeral extent of three. Languages with extents of eight or greater (including extents of 10, 19, 20, and 100) were all assigned state ‘9’; for clarity, we refer to this state as ‘8+’. Numerals in this survey are all sequential: for example, no language has a term for 4 without also having terms for 3, 2, and 1.

Data coding for opacity (that is, the etymological structure of numerals) also comes from Bowern and Zentz [1], supplemented by Bowern's coding of additional languages to ensure adequate coverage of all Pama-Nyungan subgroups. A numeral was coded as compositional if it was composed of other numerals in the synchronic system. This accounted for all but a few forms. Most of the exceptions were cases where one of the numerals in the compositional form was truncated (e.g. the word for ‘2’ is kutyarra, but the compositional term for ‘3’ or ‘4’ contains kutya rather than kutyarra).
These were also treated as compositional, as were the few forms where only one component of the numeral could be identified. Note that none of the languages in our sample have subtractive bases (e.g. where 7 is etymologically 10-3); all compositional forms for ‘3’ and ‘4’ are additive.

[Figure S1: histograms of Frequency; panels: Numeral System Extent (states 2, 3, 4, 5, 6, 7, 9) and Opacity of 3 and 4 (states 0,-; 0,0; 0,1; 1,-; 1,0; 1,1)]

Figure S1. Frequency of the various states of numeral extent and opacity data, for a total of 127 languages. Extent data are coded with states from 2 numerals to 8+ numerals (the state equals the number of numerals, e.g. presence of two numerals = state 2, presence of 3 numerals = state 3; presence of 8 or more numerals is coded as ‘9’). For the opacity data, 0,0 = compositional 3/compositional 4; 0,1 = compositional 3/opaque 4; 1,0 = opaque 3/compositional 4; 1,1 = opaque 3/opaque 4. Languages whose maximum numeral was ‘3’ were coded as having missing data for 4 (states 5 and 6 in the opacity analyses).

The languages in the sample, along with their trait values, are given in the trees in Figures S2 and S3 below.

Phylogenetic Tree

The phylogenetic consensus tree on which results are presented is based on that published in Bowern and Atkinson [2]. Some additional languages were coded for basic vocabulary cognacy using the same judgment criteria as in Bowern and Atkinson. These data were combined with those from the 2012 paper, and a tree was inferred using the same model and parameters, namely those that gave the highest posterior likelihood scores (the Stochastic Dollo model). To quantify the uncertainty inherent in Bayesian phylogenetics, we ran our analyses on a sample of 700 trees, subsampled from the log file used to generate the consensus tree presented in Figure 1 of the main text. It is important to note that the trees were generated from basic vocabulary data, which are unrelated to the numeral extent data. This avoids biasing the reconstructions.
To match the data we had for each of our analyses, the trees were subsequently pruned to the 127 languages represented, using R (ape package) [3]. Internal subgroup nodes are named according to the literature [4,5] on previously identified low-level clades in Pama-Nyungan. Most of these nodes were stable throughout the tree sample, as indicated by very high posterior probabilities on those nodes. Some of the higher-level nodes were less stable: Central Pama-Nyungan, for example, had a posterior probability of .54, compared to .87 for Western and 1 for the ancestor of Lower Murray and Kulin (termed Macro-Victorian in Bowern and Atkinson). See Bowern and Atkinson [2] for further discussion.

[Figure S2: phylogenetic tree of the Pama-Nyungan languages in the sample, tips labeled with language names and numeral extent values (2 to 7, and 9 for 8+)]

Figure S2. Trait data showing extent of numeral systems for the Pama-Nyungan languages under study.

[Figure S3: phylogenetic tree, tips labeled with language names and paired opacity values (0,0; 0,1; 0,-; 1,0; 1,1; 1,-)]

Figure S3. Opacity trait data; languages with numeral extent below 3 are omitted.
Data refer to the opacity of 3 and 4. A hyphen (-) indicates that 4 is not present in the language (that is, the language has numeral extent 3); 1 indicates that the form is opaque, 0 that it is compositional.

Further Information on Methods

RJMCMC procedure

RJMCMC was run using the BayesTraits (BT; [6,7]) package for analyzing trait evolution. Of BT's modules, the relevant one is Multistate, which can be run with maximum likelihood (ML) or MCMC analysis. Multistate is used for analyzing traits that take multiple discrete states, as is the case for numeral extent data (which take states 2 to 8+).

BT allows a variety of settings to be chosen for the MCMC simulations. For both the numeral extent and opacity analyses, we set the number of MCMC iterations to 101,000,000, discarded the first 1,000,000 iterations as burn-in to allow the Markov chain to move away from a typically non-ideal starting point, and sampled every 1,000th iteration to reduce autocorrelation, yielding 100,000 samples. By default, BT auto-tunes the MCMC runs to an acceptance rate of around 30%; since 20-40% is a commonly suggested acceptance rate, we left this default unchanged.

Entropy sorting and weighting make it easier to visualize the full breadth of the reconstructions given by RJMCMC at once. To produce the entropy plots, we sorted the 100,000 reconstructions by their entropies and constructed an entropy histogram. The entropy density was then estimated by spline interpolation of the histogram. In principle we could have used kernel density estimation to smooth the histogram, but we found spline interpolation sufficient for our largely unimodal densities. From the sorted list, each block of 100 adjacent reconstructions was averaged (for a total of 1,000 displayed reconstructions) to give the plots a smoother appearance and to mitigate display artifacts resulting from limited screen resolution. The reconstructions were then weighted by their entropy density values.
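The entropy sorting, block averaging and density weighting described above can be sketched in Python with NumPy. This is a minimal illustration under our own assumptions, not the code used to produce the figures: `probs` is assumed to be a (samples × states) array of posterior state probabilities at one node, entropy uses the natural logarithm, and linear interpolation of the histogram (`np.interp`) stands in for the spline interpolation described in the text. The function names are hypothetical.

```python
import numpy as np


def shannon_entropy(probs):
    """Row-wise Shannon entropy (natural log); 0 * log(0) is treated as 0."""
    p = np.asarray(probs, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = np.where(p > 0, p * np.log(p), 0.0)
    return -terms.sum(axis=1)


def entropy_sorted_blocks(probs, block_size=100, n_bins=50):
    """Sort reconstructions by entropy, average adjacent blocks, and weight
    each block by the estimated entropy density at its mean entropy.

    Assumption: linear interpolation of the histogram replaces the spline
    interpolation described in the text.
    """
    probs = np.asarray(probs, dtype=float)
    h = shannon_entropy(probs)
    order = np.argsort(h, kind="stable")
    probs_sorted, h_sorted = probs[order], h[order]

    # Histogram of entropies, normalized to a density.
    density, edges = np.histogram(h_sorted, bins=n_bins, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])

    # Average each run of `block_size` adjacent reconstructions
    # (an incomplete final block, if any, is dropped).
    n_blocks = len(h_sorted) // block_size
    usable = n_blocks * block_size
    block_probs = probs_sorted[:usable].reshape(n_blocks, block_size, -1).mean(axis=1)
    block_h = h_sorted[:usable].reshape(n_blocks, block_size).mean(axis=1)

    # Weight each averaged reconstruction by the interpolated density.
    weights = np.interp(block_h, centers, density)
    return block_probs, block_h, weights


# Example: 1,000 simulated reconstructions over 7 extent states.
rng = np.random.default_rng(0)
samples = rng.dirichlet(np.ones(7), size=1_000)
block_probs, block_h, weights = entropy_sorted_blocks(samples, block_size=100)
print(block_probs.shape)  # (10, 7)
```

With the run settings above, (101,000,000 - 1,000,000) / 1,000 = 100,000 retained samples, and blocks of 100 give the 1,000 displayed reconstructions.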
An alternative approach would be to plot the standard deviation of the multinomial distribution for each rate across the chain. We use the Shannon-Wiener entropy instead because the multinomial approach yields several numbers characterizing each reconstruction, making it unclear how the reconstructions should be sorted. Entropy gives a single number and is an explicit measure of uncertainty.

BT allows priors on trait frequencies. We ran models with estimated frequencies; using empirically determined frequencies might have skewed the results for early tree nodes if contemporary languages show biased trait frequencies.

Numeral extent

A model that describes the evolution of numeral extent has at most 42 parameters, one for each possible forward or reverse transition between its seven states (7 × 6 = 42). For the rate priors, we used a uniform distribution between 0 and a hyperparameter, which was itself allowed to vary between 0 and a manually set ceiling; we tried several ceiling values. We found that the smaller the ceiling, the lower the entropy of the reconstructions. Moreover, while the degree of certainty varied, the state with the highest probability remained the same regardless of the ceiling (provided the ceiling was not too high). Figure 1 in the main paper reports results for the lowest ceiling; Figures S4 to S6 show higher ceilings (2x, 4x and 15x the original ceiling, respectively). Note that as the rate ceiling increases, there is more ambiguity in general, particularly with respect to whether the root state was ‘3’ or ‘4’. At the most relaxed rate ceiling, most reconstructions were uninformative, being nearly uniform. However, certain nodes are still reconstructed with relative confidence, particularly Mayi, Arandic, and Yolngu. These different rate ceilings on our prior distributions represent different hypotheses about the rate of evolution of these traits: the faster the rate of evolution, the more obscured the original states become.

Figure S4. Extent data for selected internal tree nodes, limit 4.

Figure S5. Extent data for selected internal tree nodes, limit 8.

Figure S6. Extent data for selected internal tree nodes, limit 30.

Because every reconstruction from the Markov chain is displayed, the relative area of each color represents the mean degree of support. This is valid because we have modulated the entropy-sorted reconstructions by the entropy density, which ensures that the least frequent reconstructions are not exaggerated.

Investigating rate differences

To investigate the properties of the different transition rates, we want to discover which rates are particularly fast or slow, both compared to other rates in the dataset and, specifically, compared to the converse rate (that is, qxy compared to qyx). Rates can be compared in several ways. We opted for three measures: 1) the proportion of runs in which the rate is zero; 2) the proportion of runs in which a given rate was fastest; and 3) the mean rank of each rate (that is, which rate was on average fastest across all runs). We also examined the standard deviation of each rate across runs. The fastest six rates were the most stable across rank measures, and also had the lowest standard deviations. Rates involving state 7 were the most frequently deleted (perhaps due at least in part to the overall very low incidence of languages with extent 7 in our dataset). Other rates varied more, and also shifted rank (sometimes substantially) depending on the measure used. We therefore conclude that the relative speed of the first six rates is likely to be significant, but any conclusions based on other rates may be artifacts of how rates were measured. Figure 2 of the main text gives measures 1 and 2; Figure S7 below gives the mean rank and Figure S8 the standard deviation of each rate.
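The three measures above (proportion of runs in which a rate is zero, proportion in which it is fastest, and mean rank) can be computed from a matrix of sampled rates. The sketch below is a hypothetical NumPy implementation, assuming `rates` is a (samples × rates) array in which deleted rates appear as exact zeros. Crediting every tied rate as fastest and averaging tied ranks are our choices, not something stated in the text.

```python
import numpy as np


def rate_summaries(rates):
    """Return (proportion zero, proportion fastest, mean rank) per rate.

    Rank 1 is the fastest rate in a sample; tied rates share the average
    of the ranks they span.
    """
    rates = np.asarray(rates, dtype=float)

    # 1) proportion of samples in which each rate was deleted (set to zero)
    prop_zero = (rates == 0).mean(axis=0)

    # 2) proportion of samples in which each rate equals the sample maximum
    is_max = rates == rates.max(axis=1, keepdims=True)
    prop_fastest = is_max.mean(axis=0)

    # 3) mean rank: 1 + (number of strictly faster rates) + half the ties
    others = rates[:, None, :]  # axis 2 runs over the comparison rates
    this = rates[:, :, None]    # axis 1 runs over the rate being ranked
    faster = (others > this).sum(axis=2)
    ties = (others == this).sum(axis=2) - 1  # exclude the rate itself
    ranks = 1 + faster + 0.5 * ties
    return prop_zero, prop_fastest, ranks.mean(axis=0)


# Toy example: three samples of three rates.
samples = np.array([
    [0.0, 2.0, 1.0],
    [0.0, 3.0, 3.0],
    [1.0, 0.5, 0.0],
])
prop_zero, prop_fastest, mean_rank = rate_summaries(samples)
print(prop_zero, prop_fastest, mean_rank)
```

The pairwise comparison builds a (samples × rates × rates) array, so for a 100,000-sample chain of 42 rates it would be sensible to process the samples in chunks.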
Figure S7. Rank comparisons for transition rates, extent. The top panel shows the proportion of runs in which each rate was the fastest, while the bottom panel gives the mean rank across runs.

Figure S8. Standard deviations of transition rates.

To investigate the statistical robustness of breaks in the data, we clustered the rates and used the R package pvclust [8] to compute multiscale bootstrap values (10,000 bootstrap runs). However, this procedure was unhelpful in determining statistically significant rate classes, as almost every cluster was returned as significant at the 0.05 level. We therefore adopt the approach described here, concentrating on the fastest and slowest rates, which are both ranked consistently and have the lowest variance across the MCMC chain. Doing so allows us to focus on the main trends in the data.

Numeral opacity

For the opacity of ‘3’ and ‘4’, we are interested in whether their evolution is correlated or dependent; that is, whether the opacity status of one numeral influenced the status of the other. Applying RJMCMC to the numeral extent data is natural and straightforward, given that there are potentially on the order of 10^38 models to explore. Our analysis of correlated evolution of numeral opacity, however, requires more elaboration. To test for correlated evolution, we examine two classes of models, one describing independent evolution and one describing dependent evolution, which we can then compare via the Bayes factor (BF). With two binary traits, there are four possible paired states a language may assume; hence, the general coevolution model involves eight possible transitions, where simultaneous transitions in both traits are assumed to be negligibly slow (Figure 3 of the main text). For independent evolution, there are four transitions. Fitting the data to both models and comparing their posterior likelihoods via the BF would tell us whether coevolution is favored.
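The transition counts stated above follow from allowing only one trait to change at a time. The sketch below (function name hypothetical) enumerates the paired states and the single-trait transitions between them. Applied to the six-state coding used in this study, in which the opacity of ‘4’ can also be ‘-’ (not attested), the same rule gives 18 transitions, matching the 18 rates plotted in Figure S13.

```python
from itertools import product


def single_trait_transitions(values_3, values_4):
    """List directed transitions between paired (3, 4) opacity states in
    which exactly one trait changes; simultaneous changes are excluded."""
    states = list(product(values_3, values_4))
    return [
        (src, dst)
        for src in states
        for dst in states
        if sum(a != b for a, b in zip(src, dst)) == 1
    ]


# Binary coding: 0 = compositional, 1 = opaque.
binary = single_trait_transitions((0, 1), (0, 1))
# Extended coding: '4' may also be unattested ("-").
extended = single_trait_transitions((0, 1), (0, 1, "-"))

# Independent binary model: each trait has its own forward and reverse rate.
n_independent = sum(k * (k - 1) for k in (2, 2))

print(len(binary), n_independent, len(extended))  # 8 4 18
```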
RJMCMC can be incorporated to strengthen this analysis by allowing the Markov chain to search the universe of dependent and independent models [6]; we could then either use the BF to compare all dependent models against all independent models, or compare the ratio of visits to dependent versus independent models against that expected by chance. This approach is preferable because the full eight-parameter dependent model may fail to explain the data even when some other dependent model does. Here, we adopt the RJMCMC approach as detailed by Pagel and Meade, but we modify the coevolution model of the opacity of ‘3’ and ‘4’ by introducing two new paired states. These are required because the opacity of ‘4’ is ternary: opaque, compositional, or not attested. We kept the opacity of ‘3’ binary because no language has a term for ‘4’ but not for ‘3’, and languages with a numeral extent of only ‘2’ are not of interest in this analysis. Note that while ‘2’ was a possible limit for reconstruction in the extent model, it was never returned with more than minimal frequency. The underlying method for reconstruction is the same as for the extent data, except that the number of possible models is significantly larger. We are thus interested in whether any of the three states influences one or both of the other states.

Log likelihood averages across three runs are given in Table S1 below. The BF between runs ranges from 7.8 to 10.4, indicating strong support for the dependent model over the independent one.

Table S1. Log likelihood averages for three runs of each model.

| Run | Dependent model | Independent model |
|-----|-----------------|-------------------|
| A   | -172.683        | -177.571          |
| B   | -173.336        | -177.259          |
| C   | -173.154        | -177.905          |

Figures S9 to S12 below are entropy plots of the opacity data, showing two rate limits: 8 (Figures S9 and S10) and 30 (Figures S11 and S12).

Figure S9. Entropy plots for root and selected internal nodes for evolution of opacity in numeral systems, upper rate limit of 8, Dependent Model.
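Assuming the BF here is the conventional statistic of twice the difference in log likelihoods, 2 × (lnL_dependent - lnL_independent), the range quoted above can be reproduced from Table S1 by comparing every dependent run with every independent run. A minimal check (variable and function names are ours):

```python
from itertools import product

# Log likelihood averages from Table S1, keyed by run.
dependent = {"A": -172.683, "B": -173.336, "C": -173.154}
independent = {"A": -177.571, "B": -177.259, "C": -177.905}


def log_bf(lnl_dependent, lnl_independent):
    """Twice the log-likelihood difference (assumed BF definition)."""
    return 2.0 * (lnl_dependent - lnl_independent)


# Compare every dependent run with every independent run (9 pairs).
bfs = [log_bf(d, i) for d, i in product(dependent.values(), independent.values())]
print(f"BF range: {min(bfs):.1f} to {max(bfs):.1f}")  # BF range: 7.8 to 10.4
```

Every pair favors the dependent model; the smallest value comes from dependent run B against independent run B, the largest from dependent run A against independent run C.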
Figure S10. Entropy plots for root and selected internal nodes for evolution of opacity in numeral systems, upper rate limit of 8, Independent Model (that is, uncorrelated evolution between the opacity or compositionality of 3 and 4).

Figure S11. Entropy plots for root and selected internal nodes for evolution of opacity in numeral systems, upper rate limit of 30, Dependent Model.

Figure S12. Entropy plots for root and selected internal nodes for evolution of opacity in numeral systems, upper rate limit of 30, Independent Model (that is, uncorrelated evolution between the opacity or compositionality of 3 and 4).

To infer the mechanism of correlated evolution, we look at the relative sizes of the transition rates. We cannot look at the list of models most visited by RJMCMC, as was done by Pagel and Meade [6] and in other work in this line such as Dunn et al. [9], for several reasons. By increasing the number of opacity states, we substantially increased the number of models (from 21,146 to 5,832,742,205,056), so very large RJMCMC samples would be needed to obtain sufficient resolution. Furthermore, there may be a handful of good models that differ from each other by only one or two transition rates and would thus be expected to give similar overall results. While such models may collectively hold a plurality, individually they do not appear very often, so even the ‘best’ models are visited only a few times. We therefore look instead at the model-averaged transition rates, which give more meaningful information because common evolutionary models collectively influence the transition rate results the most. Just as for the numeral extent investigation, we rank transition rates by speed (Figure S13) and present the mean rank across all runs (Figure S14). Figure 3 of the main text presents the fastest rates from Figure S13 in an alternative format.
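One way of counting RJMCMC models that reproduces both figures quoted above is to treat a model as any partition of the n rate parameters into classes of equal rates, with one optional class fixed at zero, excluding the model in which every rate is zero. That count is B(n + 1) - 1, where B is the Bell number. This counting rule is our reconstruction from the quoted numbers, not something stated in the text; the sketch below (helper names hypothetical) computes it for the 8-rate binary model, the 18-rate model with ternary ‘4’, and the 42-rate extent model (7 states, 7 × 6 directed transitions).

```python
def bell_numbers(n_max):
    """Return [B(0), ..., B(n_max)] using the Bell triangle."""
    bells = [1]
    row = [1]
    for _ in range(n_max):
        # Each new row starts with the last entry of the previous row;
        # each further entry adds the entry above-left.
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[0])
    return bells


def n_rjmcmc_models(n_rates):
    """Partitions of n_rates rates plus a 'zero' marker, minus the all-zero model."""
    return bell_numbers(n_rates + 1)[-1] - 1


def n_directed_rates(n_states):
    """Number of directed transition rates in a fully connected model."""
    return n_states * (n_states - 1)


print(n_rjmcmc_models(8))   # 21146
print(n_rjmcmc_models(18))  # 5832742205056
print(f"{n_rjmcmc_models(n_directed_rates(7)):.1e}")  # extent model: on the order of 10^38
```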
[Figure S13: panel Opacity; y-axis Proportion (0.0 to 0.8); legend Deleted, Maximum; x-axis Rates: q21, q51, q43, q25, q34, q36, q63, q24, q46, q52, q15, q56, q42, q64, q13, q12, q31, q65]

Figure S13. Rank comparisons for transition rates for the dependent model of the opacity of numerals 3 and 4. The red line gives the proportion of runs in which that rate was the fastest; the blue line gives the proportion of runs in which the rate was deleted.

Figure S14. Rank comparisons for transition rates for the dependent model of the opacity of numerals 3 and 4. The top panel shows the proportion of runs in which each rate was the fastest, while the bottom panel gives the mean rank across runs.

References

1. Bowern, C. & Zentz, J. 2012 Numeral Systems in Australian languages. Anthropological Linguistics.
2. Bowern, C. & Atkinson, Q. 2012 Computational phylogenetics and the internal structure of Pama-Nyungan. Language 88, 817–845.
3. R Core Team 2014 R: A language and environment for statistical computing.
4. Dixon, R. M. W. 1980 The languages of Australia. Cambridge: Cambridge University Press.
5. Bowern, C. & Koch, H. 2004 Australian languages: Classification and the comparative method. Amsterdam/Philadelphia: John Benjamins.
6. Pagel, M. & Meade, A. 2004 A phylogenetic mixture model for detecting pattern-heterogeneity in gene sequence or character-state data. Systematic Biology 53, 571–581.
7. Pagel, M., Meade, A. & Barker, D. 2004 Bayesian estimation of ancestral character states on phylogenies. Systematic Biology 53, 673–684.
8. Suzuki, R. & Shimodaira, H. 2006 Pvclust: an R package for assessing the uncertainty in hierarchical clustering. Bioinformatics 22, 1540–1542. (doi:10.1093/bioinformatics/btl117)
9. Dunn, M., Greenhill, S. J., Levinson, S. C. & Gray, R. D. 2011 Evolved structure of language shows lineage-specific trends in word-order universals. Nature 473, 79–82. (doi:10.1038/nature09923)