Measuring the Ephemeral: Evaluation of Informal STEM Learning


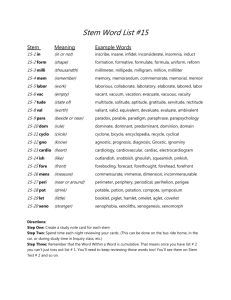

Measuring the Ephemeral: Evaluation of Informal STEM Learning Experiences
Broader Impacts Infrastructure Summit, Arlington, VA, April 16th-18th, 2014

Images courtesy of ISE PI Meeting 2012 attendees. From left to right: Geoffrey Haines-Stiles; Mohini Patel Glanz, NWABR; Scot Osterweil; April Luehmann.

• Jamie Bell, Trevor Nesbit, Kalie Sacco, Grace Troxel (Association of Science-Technology Centers)
• John Falk (Oregon State University, Free-Choice Learning Program)
• Kirsten Ellenbogen (Great Lakes Science Center)
• Kevin Crowley (University of Pittsburgh Center for Learning in Out-of-School Environments)
• Sue Ellen McCann (KQED Public Media)

NSF AISL Program:
• Museums & science centers
• Zoos, botanical gardens & aquaria
• Media (TV, radio, film)
• Festivals, cafes, events
• Cyber & gaming
• Youth & community programs
• Citizen science

CAISE: Convene, Connect, Characterize, Communicate

CAISE major initiatives for 2012-2015:
• Broader Impacts/ISE
• InformalScience.org
• Practice and Research
• Evaluation Capacity Building

• Framework for Evaluating Impacts of Informal Science Projects (2008)
• Learning Science in Informal Environments: People, Places, and Pursuits (National Academies Press, 2009)
• Surrounded by Science: Learning Science in Informal Environments (National Academies Press, 2010)

Learning Science in Informal Environments (2009), six learning strands:
1. Developing interest
2. Understanding scientific knowledge
3. Engaging in scientific reasoning
4. Reflecting on science
5. Engaging in scientific practices
6. Identifying with the scientific enterprise

Taking Science to School: Learning and Teaching Science in Grades K-8 (2007), K-8 school science learning strands:
1. (no corresponding strand)
2. Understanding scientific explanations
3. Generating scientific evidence, explanations, and arguments
4. Reflecting on how science knowledge is produced and used in society
5. Participating in the practices of science: specialized talk, disciplinary tool use, representations

Informal learning environments are complex.
Challenges:
• A single experience cannot be isolated
• Experimental designs are impractical
Opportunities:
• Allow a wide range of outcomes
• Naturally learner-driven
• Inspire new methods and approaches

Many different types of learning experiences and interactions can take place within a single exhibition.
Image attribution: How People Make Things Summative Evaluation, Camellia Sanford, University of Pittsburgh, 2009. Accessed March 20th, 2014: http://informalscience.org/evaluation/ic-000-000-003205/

Informal learning is collaborative and social.
Challenges:
• Individual assessment can be difficult
Opportunities:
• Helps us better understand how people learn

High school students collaborate with an ecologist to study aquatic ecosystems.
Image attribution: Making Natural Connections: An Authentic Field Research Collaboration. Accessed March 20th, 2014: http://informalscience.org/projects/ic-000-000-001-050

LIFTOFF Evaluation Framework
• Data collection: number of programs offering STEM and the intensity of those experiences
• Youth impacts: attitudes, motivation, identity
• Professional impacts: attitudes, demand, confidence
• Program quality impacts: frequency and intensity

12 Dimensions of Success (www.PEARweb.org)
• Features of the Learning Environment: Organization, Materials, Space Utilization
• Activity Engagement: Participation, Purposeful Activities, Engagement with STEM
• STEM Knowledge & Practices: STEM Content Learning, Inquiry, Reflection
• Youth Development in STEM: Relationships, Relevance, Youth Voice

Resources for Evaluation:
• The PI’s Guide to Managing Evaluation: InformalScience.org/evaluation/evaluation-resources/pi-guide
• Evaluations on InformalScience.org: InformalScience.org/evaluation

Building Informal Science Education (BISE) Project: Characterizing evaluations on InformalScience.org
[Figure: bar chart, "Frequency of data collection methods used in the BISE synthesis reports (n=427)"; method labels not recoverable]

Informal STEM Education Assessment Projects
• Advancing Technology Fluency (PI: Brigid Barron, Stanford University)
• Developing, Validating, and Implementing Situated Evaluation Instruments (DEVISE) (PI: Rick Bonney, Cornell University)
• Science Learning Activation Lab (PI: Rena Dorph, University of California, Berkeley)
• Common Instrument (PI: Gil Noam, Harvard University)
• SYNERGIES (PI: John Falk, Oregon State University)
• Framework for Observing and Categorizing Instructional Strategies (FOCIS) (PI: Robert Tai, University of Virginia)
Learn more at: http://informalscience.org/perspectives/blog/updates-from-the-fieldmeeting-on-assessment-in-informal-science-education

What Are We Measuring?
This Wordle represents constructs measured by six informal STEM education assessment projects.

Learn more:
Jamie Bell, Project Director & PI of CAISE
jbell@astc.org
InformalScience.org
facebook.com/informalscience
@informalscience
InformalScience.org/about/informal-science-education/for-scientists