Jones H. Cruzado

Parallel Programming in

Artificial Neural Networks

By

Harold Cruzado J.

Contents

Overview of Artificial Neural Networks (ANN)

Why parallelize?

Parallelizing with MPI in C/C++: a perceptron, back propagation, some useful functions, the training phases, C structure to MPI structure

Conclusions

Artificial Neural Network (ANN)

An ANN (or net) is used to solve many problems, including some that have no direct solution.

An ANN is composed of perceptrons (also called neurons). Each perceptron has inputs, an output, and a constant called the bias.

Why parallelize?

Training (also called learning) an ANN is very time-consuming. It is carried out by algorithms such as back propagation, and these algorithms involve matrix operations.

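A Perceptron and its training

[Figure: a perceptron. The inputs x1, ..., xn are weighted by w1, ..., wn and summed (Σ) together with the bias Θ; the function f produces the output y. Arrows mark the forward and backward directions.]

$y = f(w_1 x_1 + \dots + w_n x_n) = f(WX)$

Training the perceptron: let t be the target, then

do {
    1: y = f(XW)
    2: update W and Θ
} while (|t - y| > e)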

- Forward phase (1): Calculate the actual outputs

- Backward phase (2): Adjust the weights to reduce error

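A net of three layers

[Figure: a three-layer network (Layer 1, Layer 2, Layer 3) with inputs x1, ..., xn and an output y from each perceptron. Arrows mark the forward and backward directions across the layers.]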

Back Propagation Algorithm

1. Define Net

2. Initialize Net

3. Forward: Propagate net

4. Backward: Adjust weights on net

5. Send (from the root process) the new Net to all processes

6. If SSE > e, repeat steps 3 to 6

Parallelizing using MPI

[Figure: the forward phase followed by the backward phase, repeated if SSE > e.]

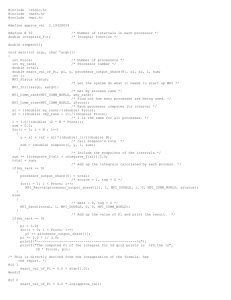

Function main

#include <mpi.h>

int main(int argc, char *argv[])
{
    /* C type declarations ... */
    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);   /* id of this process */
    MPI_Comm_size(MPI_COMM_WORLD, &size);   /* number of processes */
    /* C type to MPI type ... */
    /* Initializing the Net ... */
    /* Forward phase ... */
    /* ... */
    /* Backward phase ... */
    MPI_Finalize();
    return 0;
}

Partitioning data

The following C function computes, for each process, its part of an interval (for example, the row indices of a matrix).

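void Partition(int n, int rank, int d, int I[2])
{
    int r = n % d;      /* rows left over after an even split */
    int q = n / d;      /* rows every process gets */
    int i = rank + 1;   /* 1-based index of this process */

    I[0] = q * (i - 1);
    I[1] = q * i;
    if (i <= r) {       /* the first r processes take one extra row each */
        I[0] += i - 1;
        I[1] += i;
    }
    if (i > r) {        /* the others are shifted past the r extra rows */
        I[0] += r;
        I[1] += r;
    }
}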

Product matrix-vector

void Ax(int A[f][c], int x[c], int I[2], int rank, int size, int y[f])
{
    /* Each process multiplies only its own rows, I[0] <= i < I[1]. */
    for (int i = I[0]; i < I[1]; i++) {
        y[i - I[0]] = 0;
        for (int j = 0; j < c; j++) {
            y[i - I[0]] += A[i][j] * x[j];
        }
    }
}

C type structure to MPI type structure

typedef struct {
    double Y[N+1];
    double Delta[N];
    double Weights[N][N+1];
    int    bias;
} LAYER;

typedef struct {
    LAYER  Layer[L];
    double Target[N][N];
    double Eta;
    double SSE;
    double x[DataRows][DataCols];
} NET;

C structure to MPI structure

int blocklengths[2];
MPI_Aint displacements[2], lb, double_extent, layer_extent;
MPI_Datatype dtypes[2] = {MPI_DOUBLE, MPI_INT}, mpi_layer;
MPI_Status status;

/* MPI_Type_extent and MPI_Type_struct were removed in MPI-3.0;
   MPI_Type_get_extent and MPI_Type_create_struct replace them. */
MPI_Type_get_extent(MPI_DOUBLE, &lb, &double_extent);
displacements[0] = 0;
displacements[1] = (processors*(2*N+1) + N*(N+1)) * double_extent;
blocklengths[0] = processors*(2*N+1) + N*(N+1);   /* doubles in a layer */
blocklengths[1] = 1;                              /* the int bias */
MPI_Type_create_struct(2, blocklengths, displacements, dtypes, &mpi_layer);
MPI_Type_commit(&mpi_layer);

C structure to MPI structure

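MPI_Aint layer_lb;
/* MPI_Type_get_extent and MPI_Type_create_struct replace the
   MPI_Type_extent and MPI_Type_struct calls removed in MPI-3.0. */
MPI_Type_get_extent(mpi_layer, &layer_lb, &layer_extent);
MPI_Datatype dtypes1[2] = {mpi_layer, MPI_DOUBLE}, mpi_net;
displacements[0] = 0;
displacements[1] = L * layer_extent;               /* doubles follow the L layers */
blocklengths[0] = L;
blocklengths[1] = 2 + N*N + DataRows*DataCols + processors;
MPI_Type_create_struct(2, blocklengths, displacements, dtypes1, &mpi_net);
MPI_Type_commit(&mpi_net);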

Initializing the Net

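..
if (rank == 0) {
    /* Only the root process loads the data and sets up the net. */
    LoadData("TrainingData.txt", Rows, Cols, Net.x);
    SetBias(&Net, size);
    GenerateTarget(&Net);
    RandomWeights(&Net);
    Net.Eta = 0.02;
}
/* MPI_Bcast is collective: every process must call it, not only the root. */
MPI_Bcast(&Net, 1, mpi_net, 0, MPI_COMM_WORLD);
..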

Forward phase

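..
Partition(f, rank, size, I);
n_loc = I[1] - I[0];   /* number of rows owned by this process */
for (int l = 0; l < L; l++) {
    Ax(Net.Layer[l].Weights, Net.x, I, rank, size, y_loc);
    /* Collective call: every process sends its block to the root.
       MPI_Gather needs the same n_loc everywhere; uneven blocks need MPI_Gatherv. */
    MPI_Gather(y_loc, n_loc, MPI_INT, Net.Layer[l].Y, n_loc,
               MPI_INT, 0, MPI_COMM_WORLD);
}
/* Collective call: the root sends the updated net to every process. */
MPI_Bcast(&Net, 1, mpi_net, 0, MPI_COMM_WORLD);
EvaluateSSE(&Net, 0, rank, size);
..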

Backward phase

..
DeltaAdjust(&Net, Net.Target[i], rank, size);
WeightsAdjust(&Net, rank, size);
/* Collective call: the root sends the adjusted net to every process. */
MPI_Bcast(&Net, 1, mpi_net, 0, MPI_COMM_WORLD);
..

Conclusions

MPI is a good alternative for implementing the parallel code.

It is possible to parallelize serial code that uses programmer-defined types.

Questions?