SOMIC_Garcia_Information Theoretic Perspectives


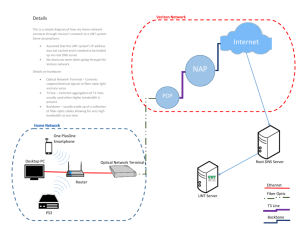

SoMIC, April 3, 2013
Information Theoretic Perspectives to Cloud Communications
Oscar N. Garcia, Professor, College of Engineering, UNT

Conventional wisdom seems to agree on the following issues:
• 1) No system can be guaranteed to be impenetrable to malware.
• 2) The Cloud will compete for, and win, sales of anything that does not require direct personal services.
• 3) One of the obstacles to better security is the lack of a theory of encryption and secure software.
• 4) Decryption in the Cloud presents dangers even when using an honest provider.

Kinds of darknet sensing servers
Depending on the level of response to the attack:
1) No response to an attack ("Black Hole sensor")
2) Low-level or minimal behavior as having been attacked (SYN-ACK)
3) Full behavior as victim of the attack (interactive honeypot)

All examples below provide dynamic (black-box) analysis:
• Norman Corporation Sandbox (Norway): a Windows clone run without an Internet connection through which the malware could propagate
• CWSandbox, U. of Mannheim (Germany): Windows XP on a virtual machine, but allows Internet connectivity
• Anubis, U. of Vienna: uses a PC emulator and allows Internet connectivity

NICT's approach via darknets
www.youtube.com/watch?v=07DOnKDjkfg
nicter makes a real-time estimate of the types of malware present in the Internet, using sensors on "darknet" IPs, by:
• Multipoint passive monitoring, since most if not all of what reaches the sensor servers is malware, because the IPs are not in use
• Analyzing the collected traffic information for commonality (macro)
• Analyzing each specific attack for malware feature detection and for how it causes damage (micro)
• Using specimens from "honeypots" or darknets, analyzed either dynamically (black-box sacrifices) or statically (manual white-box analysis) by disassembly
• Correlating the features (data mining, as in KDD 99)
We are now forming clusters via classification techniques, to eventually find common countermeasures for classes of attacks rather than considering each kind individually. KDD 99 had a database with 41 features and thousands of incidents characterized by those features.

Wrappers and their generation
• My definition of a wrapper (a loaded word): "wrapping" a method means delegating a task to it without doing much computation before the delegation; often the wrapper just passes complex data types.
• Wrappers have been generated automatically (e.g., by SWIG, the Simplified Wrapper and Interface Generator, an open-source tool) to connect the wrapped program to another language, often a scripting language.
• Wrappers can be used as security mediators (e.g., the SAW project at Stanford), particularly now that they can be generated automatically (Fujitsu).

A First Order Model of an Attack
PROBING & SCANNING. Objective? 1. Targeted or 2. Opportunistic.
IDENTIFICATION OR LOCATION OF VALUABLE OR UNPROTECTED ACCOUNTS OR DBs
POTENTIAL FOR DENIAL OF SERVICE
HIDING AND PLANNING FUTURE OR SLOW RE-ENTRY
DISCOVERING PASSWORDS & BRUTE FORCE DECRYPTION
MALWARE ACTIONS AND TIMING: INFO GATHERING OR DESTRUCTION

CLASSIFICATION OF ATTACKS
• Purpose
• Origin
• Target
• Evaluation of threat
• etc.
The original KDD 99 listed 41 features; these were reduced to 22 in "Cyber Attack Classification Based on Parallel Support Vector Machine", Mital Patel (MTech Scholar, SIRT, Bhopal) and Yogdhar Pandey (Assistant Professor, CSE Dept., SIRT, Bhopal).

One more item of consensus
Theoretical contributors in the field lament the lack of an integrative theory of how to achieve security by encryption, or for that matter by any other approach. There are theoretical approaches for individual encryption methods, but not one that encompasses them all.
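The wrapper idea above (a thin layer that delegates a method call with little computation of its own, and that can act as a security mediator) can be sketched in Python. This is a minimal, hypothetical illustration: `MediatingWrapper`, `FileStore` and the `policy` callable are invented names. It is not SWIG output and not the design of the SAW project or of Fujitsu's generator.

```python
from typing import Any, Callable


class MediatingWrapper:
    """Delegates attribute access to a wrapped object, checking a policy first.

    Hypothetical illustration: `policy` is any callable that decides whether
    a given method name may be used.
    """

    def __init__(self, wrapped: Any, policy: Callable[[str], bool]) -> None:
        self._wrapped = wrapped
        self._policy = policy
        self.denied: list[str] = []  # audit log of refused accesses

    def __getattr__(self, name: str) -> Any:
        # Called only for names not found on the wrapper itself, so every
        # access to the wrapped object's methods passes through here.
        if not self._policy(name):
            self.denied.append(name)
            raise PermissionError(f"call to {name!r} blocked by policy")
        # Delegate with no further computation, as a thin wrapper should.
        return getattr(self._wrapped, name)


class FileStore:
    """Toy resource standing in for the wrapped program (hypothetical)."""

    def __init__(self) -> None:
        self.data = {"report.txt": "quarterly numbers"}

    def read(self, key: str) -> str:
        return self.data[key]

    def delete(self, key: str) -> None:
        del self.data[key]


store = MediatingWrapper(FileStore(), policy=lambda name: name != "delete")
print(store.read("report.txt"))  # quarterly numbers
try:
    store.delete("report.txt")
except PermissionError as err:
    print(err)  # call to 'delete' blocked by policy
```

Because `__getattr__` is consulted only for names the wrapper itself lacks, every method of the wrapped object passes through the policy check, while the wrapper adds almost no computation of its own.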
We have been considering an information theory approach, with some basic views about the compression, encryption and error-correction sequence, and have oriented a course to the common aspects of these topics.

Two different approaches to information: information theoretic and algorithmic
How does one measure information in each case: in non-redundant bits or in complexity? (Claude E. Shannon; Andrey N. Kolmogorov)
Why is measuring information an important endeavor?
A "Gedanken" tool to measure information in the information-theoretic approaches: the Infometer.
ogarcia@unt.edu

The information model is the same in both approaches
NOISY OR NOISELESS SOURCE OF INFORMATION → CHANNEL OF INFORMATION (with or without memory) → RECEIVER OR SINK OF INFORMATION
• KOLMOGOROV USED THE MINIMUM SIZE OF THE SOURCE AS THE MEASURE OF INFORMATION.
• SHANNON USED THE PROBABILITY OF THE SYMBOLS TRANSMITTED THROUGH THE CHANNEL TO DEFINE THE MEASURE OF INFORMATION.

Kolmogorov Relative Complexity Leads to a Measure of Information
Given a binary string s and a computational machine M, the complexity of s relative to M is the smallest number of bits needed to write a program p that exactly reproduces s on M. Let L(p) be the length of the binary sequence expressing a program p that, run on M, outputs s. The smallest such L(p) over all programs is the Kolmogorov measure of information in s relative to M; passing to a universal machine U changes it by at most a constant:
$K_M(s) = \min_{p\,:\,M(p)=s} L(p), \qquad K_U(s) \le K_M(s) + C_M$
where $C_M$ is the number of bits it takes to describe M, a quantity that is independent of s.

Shannon's Entropic Definition
Shannon's view of the degree of randomness, which corresponds to the amount of information emitted by a source whose outputs x are more or less equiprobable, is measured in bits and called the entropy H(X), taken over the set X of all possible messages that could be emitted.
It is given by the negative of the sum, over each message x ∈ X, of the probability of x times the logarithm of that probability:
$H(X) = -\sum_{x \in X} p(x)\,\log_2 p(x)$   (1)

THE CLOUD SYSTEM (Cryptocoding)

Unicity distance (Shannon)
If the plaintext is not randomized, Eve, with a supercomputer, could determine the key given a sufficiently long ciphertext of n characters (ciphertext-only attack). How large must n be? That number is called the unicity distance:
$n_u = \dfrac{H(K)}{D}, \qquad D = \log_2|A| - H_L = \rho\,\log_2|A|$
where H(K) is the entropy of the key, |A| is the size of the plaintext alphabet, $H_L$ is the actual entropy of the plaintext in bits/character, and ρ is the fractional (percentage) redundancy of the plaintext. The denominator D is the redundancy of the plaintext in bits/character: the difference between the maximum possible information per character and the actual information per character of the transmitted message.
Another way to look at $n_u$: it is the minimum amount of ciphertext that could yield a unique key in a brute-force attack by an adversary with unlimited computational resources.
Then: should we change the key before we get past $n_u$ characters? Better to figure out how much brute-force cryptanalysis the cryptosystem can tolerate.

Example
Using the 26 letters and the space, the maximum entropy, if they were all equally likely, would be $\log_2 27 \approx 4.7549$ bits/character, while the actual average Shannon entropy per character of a sufficiently long English plaintext is about 1.5 bits/character. The redundancy of the plaintext P is then (maximum possible - actual) ≈ 4.7 - 1.5 = 3.2 bits/character. Assuming length(K) = 128 bits, H(K) = 128 bits for equiprobable keys (rather than keys made of standard language words), and the unicity distance is $n_u$ = 128 bits / 3.2 bits per character = 40 characters. That is a scary number if an attacker had the computational capacity to try all $2^{128}$ possible keys (a brute-force attack) on those 40 characters of ciphertext, extract some meaningful message, and thereby figure out the key. Eve has a bid out for a teraflop computer.
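The entropy definition and the unicity-distance example can be checked numerically. A minimal Python sketch, assuming single-character frequencies as the probability model. This is a simplification: the ~1.5 bits/character figure for English also reflects dependencies between letters, which per-character counts do not capture. The helper names are illustrative.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Empirical H(X) = -sum p(x) log2 p(x), with p(x) = symbol frequency in `text`."""
    counts = Counter(text)
    n = len(text)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def unicity_distance(key_bits: float, alphabet_size: int, h_actual: float) -> float:
    """n_u = H(K) / D, with redundancy D = log2(alphabet size) - actual bits/char."""
    redundancy = math.log2(alphabet_size) - h_actual
    return key_bits / redundancy


h_max = math.log2(27)  # 26 letters + space, all equally likely
print(round(h_max, 4))  # 4.7549

# Slide figures: ~1.5 bits/char for long English text, 128-bit random key.
print(round(unicity_distance(128, 27, 1.5), 1))  # 39.3

# Single-letter frequencies of a short sample overestimate the entropy rate
# of English, because they ignore the context between letters.
sample = "the quick brown fox jumps over the lazy dog"
print(round(shannon_entropy(sample), 2))
```

With the unrounded redundancy (4.7549 - 1.5 ≈ 3.25 bits/character) the unicity distance comes out near 39 characters; the slide's 40 comes from rounding the redundancy to 3.2.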
Classification of Compression Methods
Most compression methods fall into one of four categories:
i. Run-length encoding (RLE)
ii. Statistical methods, mostly entropy based (Huffman, arithmetic codes, etc.)
iii. Dictionary-based or LZ (Lempel-Ziv) methods
iv. Transform methods (Fourier, wavelets, DCT, etc.)
Removing redundancy leaves a message that carries more information per bit, while the total information (entropy) of the complete message stays the same.

LOSSLESS COMPRESSION PROGRAMS and their use
• Lempel-Ziv, based on dynamic tables:
  - LZ + Welch = LZW, used in GIF
  - LZ + Renau = LZR, used in Zip
  - DEFLATE, used in PKZIP, gzip, PNG
  - The tables can be encoded using Huffman encoding (SHRI, LZX); Microsoft uses LZX in its CAB format
• Coding based on probabilities (statistics, Rissanen's idea): Huffman encoding; arithmetic codes for compression (a generalization of Huffman); methods that use partial matches
• Methods that use grammars: SEQUITUR, RE-PAIR
• Run-length encoding (lossless): used in simple colored icons but not on continuous-tone images, although JPEG uses it. Common formats for run-length data include Truevision TGA, PackBits, PCX, ILBM and fax.

Encryption
The ideal approach to encryption is to immerse the data in a randomized, noise-like stream so that the data is difficult to identify. A totally random sequence has the highest possible entropy but, of course, cannot be compressed (although a couple of patents claiming otherwise have been issued) or meaningfully decoded. Recurrences in the encrypted stream give clues for potential decryption. DES, the former US encryption standard, became vulnerable because of its short 56-bit key and was replaced by the Advanced Encryption Standard (AES), which uses keys of 128, 192 or 256 bits for increased security.

The general idea of an encryption cipher:
Plaintext + secret key(s) → encrypting algorithm → ciphertext to be stored or transmitted (not processed until decrypted!)
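Two of the points above can be illustrated briefly: run-length encoding (category i), and the contrast between a redundant sequence, which compresses well, and a random one, which cannot be compressed. A minimal Python sketch using the standard-library `zlib` module (an implementation of DEFLATE); the test strings and helper names are arbitrary choices for illustration. Tying back to Kolmogorov's measure: a compressed length, plus the fixed size of the decompressor, is an upper bound on a string's Kolmogorov complexity, which itself is not computable.

```python
import os
import zlib
from itertools import groupby


def rle_encode(data: str) -> list[tuple[str, int]]:
    """Run-length encoding: collapse each run of a symbol into (symbol, count)."""
    return [(symbol, len(list(run))) for symbol, run in groupby(data)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (symbol, count) pairs back into the original string."""
    return "".join(symbol * count for symbol, count in pairs)


# A scan line of a black-and-white image: long runs make RLE effective.
row = "W" * 12 + "B" + "W" * 12 + "B" * 3 + "W" * 24 + "B" + "W" * 14
pairs = rle_encode(row)
assert rle_decode(pairs) == row  # lossless round trip
print(pairs[:3])  # [('W', 12), ('B', 1), ('W', 12)]

# DEFLATE shrinks redundant data dramatically but cannot shrink random bytes.
redundant = b"abc" * 1000
random_bytes = os.urandom(3000)
print(len(zlib.compress(redundant, 9)), len(zlib.compress(random_bytes, 9)))
```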
Golomb's postulates for randomness are the following (for a binary sequence of period n):
(G1): The numbers of 0s and 1s in the sequence are as near as possible to n/2 (that is, exactly n/2 if n is even, and (n±1)/2 if n is odd).
(G2): The number of runs of a given length should halve when the length is increased by one (as long as possible), and where possible equally many runs of a given length should consist of 0s as of 1s.
(G3): The out-of-phase autocorrelation (for binary sequences, agreements minus disagreements between the sequence and each of its cyclic shifts) should be constant, independent of the shift.

Tests for Randomness
It makes sense that if we can test the randomness of a string (or of its generator), and understand what we are testing for, we should be able to improve it. Terry Ritter, a prolific author (whose web page was last maintained in 2005, http://www.ciphersbyritter.com/AUTHOR.HTM#Addr), wrote a nice survey (http://www.ciphersbyritter.com/RES/RANDTEST.HTM) of the literature on testing for randomness from 1963 to 1995. Since then, Marsaglia and Tsang wrote: George Marsaglia, Wai Wan Tsang, "Some Difficult-to-pass Tests of Randomness", Journal of Statistical Software, Volume 7, Issue 3, 2002. But the most comprehensive suite of tests that I found is R. G. Brown's Dieharder: http://www.phy.duke.edu/~rgb/General/dieharder.php

[Figure: total message information (bits), total number of bits in the message (green) and information per bit through lossless or lossy compression, encryption and error-control (EC) encoding, including the information lost in lossy compression; the blue line is the starting baseline. The example is not to scale, but it could be made precise in a specific case.]

Classification and examples of crypto systems
Encryption/Decryption Systems
• Symmetric: the encryption and decryption keys are shared by Alice and Bob, or the former is shared and the latter calculated. Ex.: DES, AES.
• Public Key (Asymmetric, 1970s): nothing is shared but the common public knowledge of a public key infrastructure. Alice knows her private key but not Bob's, and vice versa.
Public key systems allow the secret transmission of a common key and therefore enable a symmetric system. Ex.: RSA, ElGamal, NTRU, McEliece

A High Level View of the Advanced Encryption Standard
Encryption key + 16-byte block of plaintext + initialization vector → AES software "blender" → ciphertext
For an animated description see: http://www.cs.bc.edu/~straubin/cs381-05/blockciphers/rijndael_ingles2004.swf

"ALL YOU WANTED TO KNOW ABOUT AES BUT …"
A Stick Figure Guide to the Advanced Encryption Standard
www.slideshare.net/.../a-stick-figure-guide-to-theadvanced-encrypti...

SUMMARY
• Symmetric (common private key), block and streaming cryptosystems: DES, AES and similar (IDEA); PGP uses them in tandem with public-key encryption
• Public-key encryption (asymmetric, with a trapdoor): Diffie-Hellman (principle and implementation), RSA (find proper large primes), ElGamal (prime exponentiation), NTRU (pair of polynomials), McEliece (ECC: Goppa code generator matrix G, distance 101), elliptic curves
• One-way functions => hash algorithms: SHA-1, 2, …, 3; MD5; RIPEMD; used for authentication and integrity (not privacy)
• Electronic signature: DSA (NIST…)

Some background for elliptic curves
(0): A singular elliptic curve is not an elliptic curve according to this definition! The details are for a later lesson, but imagine the point at infinity as the additive identity element of the group of points on the curve. Also notice that the field is not specified: it could be the complex or real field, the field of rationals, Galois fields (the ground field modulo p, or finite extension fields), or any algebraic structure that fulfills the requirements for a field. Once you choose the field, you use its elements for the coefficients, the indeterminates (variables) and the solutions: they all must be elements of the field. However, the representation of those elements weighs heavily on the computational complexity of the solutions.

NSA
In choosing an elliptic curve as the foundation of a public key system, there are a variety of choices.
The National Institute of Standards and Technology (NIST) has standardized a list of 15 elliptic curves of varying sizes. Ten of these curves are over what are known as binary fields (section 16.4 in the text) and 5 are over prime fields. The listed curves provide security equivalent to symmetric encryption algorithms (e.g., AES, DES or SKIPJACK) with keys of length 80, 112, 128, 192 and 256 bits and beyond. For protecting both classified and unclassified National Security information, the National Security Agency has decided to move to elliptic-curve-based public key cryptography. Where appropriate, NSA plans to use the elliptic curves over finite fields with large prime moduli (256, 384 and 521 bits) published by NIST.

ERROR CONTROL
Error control in digital transmission and storage involves the detection and possible correction of errors due to noise. When designing error control systems, it is important to know the type of errors that noise is most likely to cause. We classify those errors as random errors, burst errors and erasure errors, among others. When we code for error detection, we may require a retransmission if we cannot tolerate discarding the message. With error correction capabilities, we can repair up to a certain number of errors and recover the original message. In either case, encoding for error control decreases the amount of information per bit, but the total amount of information transmitted stays the same, just more redundant.

HOMOMORPHIC ENCODING
The last intruder or careless manager that one would suspect of disseminating or damaging valuable user information is the Cloud provider. To insulate the user from that potential damage, it would be ideal not to have to decrypt the information sent to the provider while processing the user's request to the utility. You wish the provider would never see your data. This is the quest, not yet practical, of Homomorphic Encryption.
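Golomb's postulates (G1)-(G3) can be checked mechanically. The sketch below is a hypothetical illustration in Python. It generates one period of a maximal-length linear feedback shift register (LFSR) sequence from the primitive polynomial x^4 + x + 1 (period 15) and tests the three postulates. Such m-sequences satisfy all three, yet they are not cryptographically secure: the Berlekamp-Massey algorithm recovers an LFSR of length k from 2k consecutive output bits. Passing Golomb's postulates is therefore necessary but not sufficient.

```python
from fractions import Fraction
from itertools import groupby


def lfsr_sequence(seed: list[int], taps: tuple[int, ...], length: int) -> list[int]:
    """Fibonacci LFSR: s[n+k] = XOR of s[n+t] for t in taps, where k = len(seed)."""
    s = list(seed)
    k = len(seed)
    while len(s) < length:
        n = len(s) - k
        bit = 0
        for t in taps:
            bit ^= s[n + t]
        s.append(bit)
    return s[:length]


def cyclic_runs(bits: list[int]) -> list[tuple[int, int]]:
    """(symbol, run length) pairs, treating `bits` as one period of a cycle."""
    # Rotate to start at a run boundary; assumes both 0s and 1s occur.
    start = next(i for i in range(len(bits)) if bits[i] != bits[i - 1])
    rotated = bits[start:] + bits[:start]
    return [(b, len(list(g))) for b, g in groupby(rotated)]


def autocorrelation(bits: list[int], shift: int) -> Fraction:
    """(agreements - disagreements) / n between the sequence and a cyclic shift."""
    n = len(bits)
    agree = sum(bits[i] == bits[(i + shift) % n] for i in range(n))
    return Fraction(agree - (n - agree), n)


# s[n+4] = s[n+1] XOR s[n], from the primitive polynomial x^4 + x + 1.
seq = lfsr_sequence([1, 0, 0, 0], taps=(0, 1), length=15)
print("".join(map(str, seq)))                             # 100010011010111
print(seq.count(1), seq.count(0))                         # G1: 8 7
print(sorted(length for _, length in cyclic_runs(seq)))   # G2: [1, 1, 1, 1, 2, 2, 3, 4]
print({autocorrelation(seq, t) for t in range(1, 15)})    # G3: {Fraction(-1, 15)}
```

The runs come out as four of length 1 (two of 0s, two of 1s), two of length 2, one of length 3 and one of length 4, and every out-of-phase autocorrelation equals -1/15.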
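The elliptic-curve background (a non-singular curve, with the point at infinity as the identity element of the group of points) can be made concrete over a tiny Galois field. A minimal Python sketch, assuming the textbook-sized curve y^2 = x^3 + 2x + 2 over GF(17) with base point (5, 1). These parameters are chosen only for illustration and are far too small for cryptographic use, unlike the NIST curves. Python 3.9+ is assumed (for `pow(x, -1, p)` modular inverses and built-in generic type hints).

```python
from typing import Optional

Point = Optional[tuple[int, int]]  # None stands for the point at infinity O

P_MOD, A, B = 17, 2, 2  # toy curve y^2 = x^3 + 2x + 2 over GF(17)
assert (4 * A ** 3 + 27 * B ** 2) % P_MOD != 0  # non-singular, so a true elliptic curve


def on_curve(pt: Point) -> bool:
    if pt is None:
        return True
    x, y = pt
    return (y * y - (x ** 3 + A * x + B)) % P_MOD == 0


def add(p: Point, q: Point) -> Point:
    """Chord-and-tangent addition; O (None) is the identity element."""
    if p is None:
        return q
    if q is None:
        return p
    (x1, y1), (x2, y2) = p, q
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None  # P + (-P) = O
    if p == q:
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, P_MOD)  # tangent slope
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P_MOD)  # chord slope
    lam %= P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    y3 = (lam * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def scalar_mul(k: int, p: Point) -> Point:
    """Double-and-add computation of k*P."""
    result: Point = None
    while k:
        if k & 1:
            result = add(result, p)
        p = add(p, p)
        k >>= 1
    return result


G = (5, 1)
assert on_curve(G)
print(add(G, G))          # (6, 3)
print(scalar_mul(18, G))  # (5, 16), i.e. -G
print(scalar_mul(19, G))  # None: this curve's group has 19 points
```

On this curve the group of points has 19 elements (18 affine points plus the point at infinity), so every point other than O generates the whole group. Scalar multiplication is the easy direction; public key schemes rely on its inverse (the discrete logarithm) being hard on large curves.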
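The trade-off described under ERROR CONTROL (same information, more bits, lower information per bit) shows up in the smallest classic example, the Hamming(7,4) code, which corrects any single-bit random error. A minimal Python sketch; the bit layout [p1, p2, d1, p3, d2, d3, d4] is the conventional one but is an assumption here, not something taken from the slides.

```python
def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4] (positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, error position or 0)."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s3  # the syndrome is the 1-based error position
    if pos:
        c[pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], pos


data = [1, 0, 1, 1]
sent = hamming74_encode(data)  # 7 channel bits carry the same 4 bits of information
received = list(sent)
received[4] ^= 1               # noise flips position 5
decoded, pos = hamming74_decode(received)
print(decoded, pos)            # [1, 0, 1, 1] 5
```

Four bits of information travel in seven channel bits, so the information per transmitted bit drops to 4/7 while the message content is unchanged.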
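Unpadded RSA, one of the partially homomorphic cryptosystems, gives a small taste of the homomorphic-encryption quest: multiplying two ciphertexts yields an encryption of the product of the plaintexts, so a provider could compute that product without seeing the data. A minimal Python sketch with textbook-sized toy parameters (p = 61, q = 53, e = 17), which are assumptions for illustration only. Unpadded RSA is deterministic and insecure in practice, and it supports only multiplication, not the arbitrary computation that fully homomorphic schemes target.

```python
# Toy textbook RSA parameters: far too small for real use, chosen only to
# make the multiplicative homomorphism visible.
p, q, e = 61, 53, 17
n = p * q
d = pow(e, -1, (p - 1) * (q - 1))  # private exponent (Python 3.8+)


def enc(m: int) -> int:
    return pow(m, e, n)


def dec(c: int) -> int:
    return pow(c, d, n)


m1, m2 = 7, 11
c1, c2 = enc(m1), enc(m2)
# The "cloud" multiplies ciphertexts without ever seeing m1 or m2 ...
c_product = (c1 * c2) % n
# ... and the user decrypts the result of the operation on the plaintexts.
print(dec(c_product))  # 77 == m1 * m2 (exact while m1 * m2 < n)
```

Because E(m1)·E(m2) = m1^e·m2^e = (m1·m2)^e mod n, encryption maps multiplication of plaintexts to multiplication of ciphertexts. The result is correct modulo n, so the product must stay below n to be recovered exactly.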
What is a homomorphism?
For groups G and H, a homomorphism is a map ƒ: G → H such that, for any elements g1, g2 ∈ G,
$f(g_1 * g_2) = f(g_1) *' f(g_2)$
where * denotes the group operation in G and *' denotes the operation in H. If there is more than one operation, the homomorphism must hold for all of them.
[Diagram: elements r, s (encrypted) and operators in the user range; elements r, s (encrypted) and "proper" operators in the Cloud domain.]
Mapping between universal algebras: ƒ: A → B is a homomorphism if
$f(\mu_A(a_1, \ldots, a_n)) = \mu_B(f(a_1), \ldots, f(a_n))$
for each n-ary operation μ (interpreted as $\mu_A$ in A and $\mu_B$ in B) and for all elements $a_1, \ldots, a_n \in A$. (Re: Wikipedia)

Homomorphic Encryption: PHE and FHE (Partial and Full)
Plaintext x → ciphertext E(x). If encryption does not use randomness, it is called deterministic.
Partially homomorphic cryptosystems: Unpadded RSA, ElGamal, Goldwasser-Micali, Benaloh, Paillier, Okamoto–Uchiyama, Naccache–Stern, Damgård–Jurik, Boneh–Goh–Nissim
Fully homomorphic cryptosystems: Craig Gentry; Marten van Dijk, Craig Gentry, Shai Halevi and Vinod Vaikuntanathan; Nigel P. Smart and Frederik Vercauteren; Riggio and Sicari; Coron, Naccache and Tibouchi
Credit to Wikipedia

Elements of the Cloud Architecture
The user may have any or all of: desktop PCs, laptops, servers, mobile devices, sensors, etc.
[Diagram legend: S = Server; I = Internet access and protocols; U = User; NAS = (large) Network Attached Storage. Components shown: PROVIDER, HOME OFFICE, PRIVATE CLOUD, ENTERPRISE, direct connection, and a CLOUD, which must have one or more of SaaS, PaaS, IaaS.]
Full-service cloud = a services utility; specialized service clouds exist.

ONE CLOUD with service(s) as needed from SaaS, PaaS, IaaS
[Diagram: a CLOUD operating system (stack) and some applications serving users (U) through Internet access (I), with NAS, a home office and a private cloud. Annotations: "Not a cloud, as there are no services from a utility"; "Same user; different locations"; "Multiple related users serviced by one cloud".]

THE REAL CLOUD ENVIRONMENT
[Diagram: several providers' CLOUDs (each must have one or more of SaaS, PaaS, IaaS) serving enterprises and users. A PRIVATE CLOUD that is connected to a public cloud becomes a hybrid cloud.]

Research areas in the Cloud: networking, operating systems, databases, storage, virtual machines, distributed systems, data mining, web search, network measurements, and multimedia.
A metric for Clouds: the storage-to-computation ratio, e.g., 500 TB/1000 cores at the Illinois CCT (Cloud Computing Testbed).
System stack: CentOS (the underlying OS beneath many popular parallel programming frameworks such as Hadoop and Pig Latin).
The Open Cloud Consortium (OCC) is a not-for-profit organization that manages and operates cloud computing infrastructure to support scientific, environmental, medical and health care research.

Virtualization is a win-win solution
From the client/user point of view:
• Practically on-demand access to unlimited (illusory) autonomous resources and memory, at a very competitive cost, lower than that of using individual real servers.
From the server bank owner/service provider point of view:
• More efficient utilization of physical facilities, because peak loads can be handled by replicating VMs rather than by adding individual servers.
• Faster disaster recovery and maintenance, due to the ease of VM migration.
• More competitive pricing, processing offerings and variety of services, due to economies of scale and to physical plant expansion costs that increase at a much smaller rate.
• Easier adaptation to, and satisfaction of, special customer requests by specializing and dedicating software, not hardware.

WHITE PAPERS

CONCLUSION: Information is power but …
Quote from Herbert Simon (June 15, 1916 – February 9, 2001): "In an information-rich world, the wealth of information means a dearth of something else: a scarcity of whatever it is that information consumes…the attention of its recipients."

IMPLICATIONS FOR THE FUTURE
As I see it:
1.- Smaller or disappearing private IT installations
2.- If security does not improve, many private Clouds with interfaces to public or specialized ones in a hybrid arrangement
3.- A significant new struggle for malls and store commerce to compete with electronic commerce
4.- Centralization of jobs in larger computing entities
6.- Significant changes in high-quality distance education
7.- Data loss to natural disasters less common
8.- Data loss due to malware and cyberattacks more common
9.- Significant increase in loss of privacy
In conclusion, if we thought that we were in a Brave New World, GET READY FOR A BRAVER NEW ONE