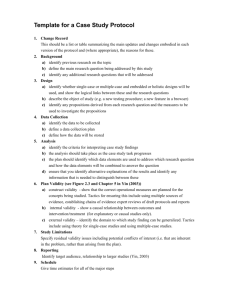

External Validity

Timothy M. Ottusch
H615
October 11, 2013

What is external validity?

• “Concerns inferences about the extent to which a causal relationship holds over variations in persons, settings, treatments, and outcomes.” (p. 83)
• It questions whether a causal relationship holds “over variations in persons, settings, treatments, and outcomes” that were and were not in the experiment.

External Validity

• People may want to generalize in several ways:
  • Narrow to broad
  • Broad to narrow
  • At a similar level
  • To a similar or different kind
  • Random sample to population members

External Validity

• Cronbach states that most questions regarding external validity are about things not studied in the experiment (persons, settings, treatments, and outcomes).
• Others argue that researchers should not be held accountable for questions they did not ask.
  • Thoughts?
• The authors argued “it is also wrong to limit external validity to questions about as-yet-unstudied instances.” (p. 84)
• Campbell and Stanley said external validity was enhanced in single studies when a wide variety of conditions was used and when the experiment was made similar “to the conditions of application.” (p. 85)
  • What are some issues, though, when people try to do this?

Threats to External Validity

• Questions pertaining to external validity are similar to thinking about interactions.
  • For example, is the treatment effect similar across men and women? In Portland and Corvallis?
• This is, however, not just about statistical interactions.
• It is also important not to get too tied up in the statistical significance of interactions.
  • Can you think of any examples of when that might be?

Threats to External Validity

• Interaction of the Causal Relationship with Units
  • Questions sometimes arise about the units being sampled and how representative they are of the population of interest.
  • Are you sampling the full range of the population (for example, various ethnic groups)?
  • Are you sampling the full range of types of units (e.g., are you only sampling people who like to participate in research, or the populations most willing to participate)?
  • If you are interested in a certain issue, how would you go about initially targeting a sample?
  • Even when you get the correct type of unit, how do you make sure you are getting the right subunits (e.g., students or teachers within sampled schools)?

Threats to External Validity

• Interaction of Causal Relationship Over Treatment Variations
  • Length of experiment
  • Strength of dose
  • Size of experiment
  • Treatments given alongside other treatments
• What do you do if the interaction is small and limited resources are at hand?
• Other external factors (infrastructure, for example)

Threats to External Validity

• Interaction of Causal Relationships with Outcomes
• Examples from the book:
  • Does the job program have effects beyond making sure the person gets a job (e.g., being able to follow orders)?
• Sometimes an experiment will have positive, negative, or no effects on various outcomes.
  • For example, what would be the likely effects on graduation rates, tuition, full-time faculty, part-time faculty, and plans for the design of a new building if a university decided to change its student-to-faculty ratio from 40 to 1 to 20 to 1?
• How does your research have possible positive and negative effects on various outcomes?
• Discussions with stakeholders before the experiment are suggested.

Threats to External Validity

• Interaction of Causal Relationship with Settings
  • Works best if you can look at causal relationships across many settings
  • But this also comes at a cost…
• The book used a university as an example of a way to look at multiple settings (sub-settings).
  • What happens, though, when the treatment works well in some settings and not in others, but you need to implement a university-wide policy?
• How does including various settings hurt and help other aspects of validity?
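
The interaction framing used in the threats above (is the treatment effect similar in Portland and in Corvallis?) can be made concrete with a small sketch. The scores below are invented purely for illustration, and the function names are hypothetical. The sketch only computes descriptive contrasts and deliberately does not test statistical significance.

```python
from statistics import mean

# Hypothetical outcome scores, invented purely for illustration.
# Keys are (site, condition); values are one score per participant.
scores = {
    ("Portland", "treatment"): [14, 16, 15, 17],
    ("Portland", "control"): [10, 11, 12, 11],
    ("Corvallis", "treatment"): [12, 13, 12, 13],
    ("Corvallis", "control"): [11, 12, 11, 12],
}


def treatment_effect(site):
    """Mean difference (treatment minus control) within one site."""
    return mean(scores[(site, "treatment")]) - mean(scores[(site, "control")])


def interaction_contrast(site_a, site_b):
    """Difference between the two site-level treatment effects."""
    return treatment_effect(site_a) - treatment_effect(site_b)


# Treatment effect estimated separately within each setting.
effects = {site: treatment_effect(site) for site in ("Portland", "Corvallis")}

# A nonzero contrast means the effect varies across settings.
contrast = interaction_contrast("Portland", "Corvallis")

# Does the effect at least point in the same direction in every setting?
same_direction = all(effect > 0 for effect in effects.values())

print(effects)
print(contrast)
print(same_direction)
```

In this made-up data the effect is positive at both sites but differs in size, which is the kind of pattern to examine descriptively rather than dismiss because an interaction term fails to reach significance.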
Threats to External Validity

• Context-Dependent Mediation
• “The idea is that studies of causal mediation identify the essential processes that must occur in order to transfer an effect.” (p. 90)
• A mediator, however, may work in one context but not in another.
• For example, a change in the number of students in a classroom may have different mediators for different types of students.
  • It may be that smaller classes allow professors more time to assign in-depth, time-consuming projects, which get students more invested and allow them to learn more.
  • It may also be that smaller classes allow students to flourish through more personal attention from professors, which struggling students may need.
• Think about your own research: can you think of ways this might affect your work?

Constancy of Effect Size Versus Causal Direction

• There are many issues surrounding how large an effect needs to be to have relevance.
  • This may differ depending on sample size and on the relevance of the effect size to the outcome being examined.
• The authors believe the direction of the causal relationship is more important, though.
  • Meta-analysis may show consistent directions but different effect sizes.
  • Policy makers are often looking for broad policies that may have different effects in pockets of the population.
  • Theories are built around dependable relationships.
  • The exact effect size is, in some ways, theoretically irrelevant.
• What do you think? When might effect size be important?

Sampling and External Validity

• Random Sampling
  • Allows for greater confidence in generalizability across units, treatments, settings, and outcomes.
  • Is strongly recommended when possible
  • For example, a random sample from the U.S. population or a random sample of Head Start programs throughout the United States.
• Purposive Sampling
  • Is more common than random sampling in experiments.
  • Even when the sample is too small to test interactions, a main effect might still say something about the relationship.
  • This is possible not just for persons but also for settings and outcomes, though it is less common for treatments.

Tying External to Others

• How does external validity relate to other types of validity?
  • You are designing a study of the effect of a new parenting education class on a population of young parents, compared with a randomized group receiving the old parenting class…
  • You are designing a new program for high school students to take college classes online in their senior year, comparing graduation rates and college continuation between enrollees and nonenrollees…
• How does construct validity relate?
• How does statistical conclusion validity relate?
• How does internal validity relate?

Relating the validities

• Construct and External Validities
  • Similarities
    • Both are generalizations
    • Valid knowledge of the constructs can shed light on external validity questions (chemotherapy example, p. 93)
  • Differences
    • Differ in the inferences made
    • External validity cannot be divorced from the causal relationship under study, but construct validity can (p. 94)
    • We may be wrong about one and right about the other
    • Differ in how to improve them

Relating the validities

• No single experiment can cover all the risks to validity
• “Threats to validity are heuristic devices that are intended to raise consciousness about priorities and tradeoffs, not to be a source of skepticism or despair” (p. 96)
• A program of research, not a single study, is expected to tackle most of these threats.
• Limited resources mean tradeoffs are going to happen
  • It is important to note your priorities at the beginning regarding which aspects of validity are the most important to tackle
  • Unnecessary tradeoffs should be avoided.
• How do you go about figuring out your priorities?
Relating the validities

• Internal validity as the “Sine Qua Non”
• Internal validity is not the sine qua non of all research. It does have a special (but not inviolate) place in cause-probing research, and especially in experimental research, by encouraging critical thinking about descriptive causal claims. (p. 98)
  • What do you think about this?
  • When is construct validity just as important? Others as well?
• Think about your own research: how does internal validity rest on the quality of the other types?

Nonexperimental Methods

• People may use nonexperimental methods for various reasons
  • May not be easy to manipulate the phenomena
  • Ethical reasons
  • Fear of changing phenomena in undesirable ways
  • Cause may not be clear exactly
  • Topic may be underdeveloped and in need of pilot work.
• “Premature experimental work is a common research sin?” (p. 99)
  • When is it ready for prime time?
  • When do you know?
• Nonexperimental methods also bring a drop in internal validity but a possible increase in construct and external validity.

Internal Validity as Sine Qua Non

1. Doing an experiment means you are interested in a descriptive causal question
2. Internal validity can be given priority by researchers over other types (although this is hard to really measure).
  • People can use resource allocation as a marker
  • Maybe you want to use funds to beef up another validity type.
    • Perhaps not randomly sampling but using more people? Having several mini studies instead of just one? Increasing the measurement quality of the instruments?
    • All would drop internal validity
• Think about your own research (or your bubbling ideas!): how would your ideas help and hurt different validities?

Basic v. Applied Research

• Basic research tends to be more concerned with construct validity
• Applied research tends to be more focused on external validity
• Although this does vary
• Construct validity of settings is less of a concern for basic researchers and construct validity of effects is more of a concern for applied researchers.
• I’m genuinely curious: I always think of basic research happening first and applied research following. Does this help create a pipeline for dealing with the different types of validity?