CSCE 431: Testing

Some material from Bruegge, Dutoit, Meyer et al

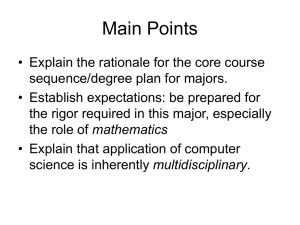

Outline

• Introduction
• How to deal with faults, erroneous states, and failures
• Different kinds of testing
• Testing strategies
• Test automation
• Unit testing
• Mocks etc.
• Estimating quality: Coverage
• Using dataflow in testing
• Mutation testing
• Test management
• Collaborative construction
• Effectiveness of different quality assurance techniques
• References

CSCE 431 Testing

Testing Truism

• Untested systems will not work

• Why?

• Requirements not correct

• Misunderstood requirements

• Coding errors

• Miscommunication

Edsger W. Dijkstra, in 1970

Program testing can be used to show the presence of bugs, but never to show their absence!

• It is impractical or impossible to exhaustively test all possible executions of a program
• It is important to choose tests wisely

Increasing System Reliability

• Fault avoidance
  • Detect faults statically, without relying on executing any system models
  • Includes development methodologies, configuration management, verification
• Fault detection
  • Debugging, testing
  • Controlled (and uncontrolled) experiments during the development process to identify erroneous states and their underlying faults before system release
• Fault tolerance
  • Assume that the system can be released with faults and that failures can be dealt with
  • E.g., redundant subsystems, majority wins
  • For a somewhat extreme approach, see Martin Rinard: Acceptability-Oriented Computing, Failure-Oblivious Computing
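The "redundant subsystems, majority wins" idea can be sketched as a simple voter. This is only an illustration under assumptions: the name `majority_vote` and the three lambda "replicas" are hypothetical and do not come from the slides.

```python
from collections import Counter

def majority_vote(outputs):
    """Return the value reported by a strict majority of replicas."""
    if not outputs:
        raise ValueError("no replica outputs to vote on")
    value, count = Counter(outputs).most_common(1)[0]
    if 2 * count <= len(outputs):
        raise RuntimeError("no strict majority among replica outputs")
    return value

# Three hypothetical replicas of the same computation; the third is faulty.
replicas = [lambda x: x * x, lambda x: x * x, lambda x: x * x + 1]
result = majority_vote([replica(4) for replica in replicas])
print(result)  # the faulty replica is outvoted
```

The voter masks a single faulty replica but deliberately raises when no strict majority exists, since guessing in that case would hide a failure.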

Fault Avoidance and Detection

• Static Analysis
  • Hand execution: Reading the source code
  • Walk-Through (informal presentation to others)
  • Code Inspection (formal presentation to others)
  • Automated Tools checking for
    • Syntactic and semantic errors
    • Departure from coding standards
• Dynamic Analysis
  • Black-box testing (Test the input/output behavior)
  • White-box testing (Test the internal logic of the subsystem or class)
  • Data-structure based testing (Data types determine test cases)

Terminology

• test component: part of the system isolated for testing
• test case: a set of inputs and expected results that exercises a test component (with the purpose of causing failures or detecting faults)
• test stub: a partial implementation of a component on which a test component depends
• test driver: a partial implementation of a component that depends on a test component
• fault: design or coding mistake that may cause abnormal behavior
• erroneous state: manifestation of a fault during execution. Caused by one or more faults and can lead to a failure
• failure: deviation between the observed and specified behavior
• When the exact meaning is not important, faults, failures, and erroneous states are commonly called errors, defects, or bugs

What is This?

• A failure?

• An error?

• A fault?

• We need to describe specified behavior first!
• Specification: “A track shall support a moving train”

Erroneous State (“Error”)

Fault

• Possible algorithmic fault: Compass shows wrong reading
• Or: Wrong usage of compass
• Or: Communication problems between teams

Mechanical Fault

Modular Redundancy

Declaring the Bug as a Feature

Patching

Testing

Typical Test Categorization

• Unit testing

• Integration testing

• System testing

• Reliability testing

• Stress testing

Unit Testing

• Test each module individually
• Choose data based on knowing the source code
  • “White-box” testing
  • Desirable to try to cover all branches of a program
  • Heuristics: choose input data
    1. Well within acceptable input range
    2. Well outside acceptable input range
    3. At or near the boundary
• Usually performed by the programmer implementing the module
• Purchased components should be unit tested too
• Goal: component or subsystem correctly implemented, and carries out the intended functionality

Integration Testing

• Testing collections of subsystems together

• Eventually testing the entire system

• Usually carried out by developers

• Goal: test interfaces between subsystems

• Integration testing can start early
  • Stubs for modules that have not yet been implemented
  • Agile development ethos

System Testing

• The entire system is tested

• Software and hardware together

• Black box methodology

• Robust testing
  • Science of selecting test cases to maximize coverage
• Carried out by developers, but likely a separate testing group
• Goal: determine if the system meets its requirements (functional and nonfunctional)

Reliability Testing

• Run with same data repeatedly

• Finding timing problems

• Finding undesired consequences of changes

• Regression testing

• Fully automated test suites to run regression tests repeatedly

Stress Testing

• How the system performs under stress
  • More than maximum anticipated loads
  • No load at all
  • Load fluctuating from very high to very low
• How the system performs under exceptional situations
  • Longer than anticipated run times
  • Loss of a device, such as a disk, sensor
  • Exceeding (physical) resource limits (memory, files)
  • Backup/Restore

Acceptance Testing

• Evaluates the system delivered by developers

• Carried out by/with the client

• May involve executing typical transactions on site on a trial basis
• Goal: Enable the customer to decide whether to accept a product

Verification vs. Validation

• Validation: “Are you building the right thing?”

• Verification: “Are you building it right?”

• Acceptance testing is about validation; the other kinds of testing are about verification

Another Test Categorization – By Intent

• Regression testing
  • Retest previously tested element after changes
  • Goal is to assess whether changes have (re)introduced faults
• Mutation testing
  • Introduce faults to assess test quality

Categorization by Process Phase

V-Model: each kind of testing paired with its process phase

• Unit testing: Implementation
• Integration testing: Subsystem integration
• System testing: System integration
• Acceptance testing: Deployment
• Regression testing: Maintenance

Goal – Partition Testing

• Cannot test for all possible input data
• Idea: For each test, partition input data into equivalence classes, such that:
  • The test fails for all elements in the equivalence class; or
  • The test succeeds for all elements in the equivalence class.
• If this succeeds:
  • One input from each equivalence class suffices
  • No way to know if partition is correct (likely not)
• Heuristics - could partition data like this:
  • Clearly good values
  • Clearly bad values
  • Values just inside the boundary
  • Values just outside the boundary
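The four heuristic classes can be sketched as a small table of representatives. The function `is_valid_age` and its accepted range of 0 to 120 are assumptions made up for this illustration; they are not part of the lecture.

```python
def is_valid_age(age):
    """Hypothetical function under test: accepts integer ages in [0, 120]."""
    return 0 <= age <= 120

# One representative per heuristic class, paired with the expected result.
partition_cases = {
    "clearly good": (35, True),
    "clearly bad": (-500, False),
    "just inside lower boundary": (0, True),
    "just inside upper boundary": (120, True),
    "just outside lower boundary": (-1, False),
    "just outside upper boundary": (121, False),
}

failures = [name for name, (value, expected) in partition_cases.items()
            if is_valid_age(value) != expected]
print(failures)
```

If the partition is right, one value per class is enough; the caveat above still applies, because nothing in the code proves that the chosen classes match the real behavior.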

Choosing Values From Equivalence Classes

• Each Choice (EC):
  • For every equivalence class c, at least one test case must use a value from c
• All Combinations (AC):
  • For every combination ec of equivalence classes, at least one test case must use a set of values from ec
  • Obviously more extensive, but may be unrealistic
  • Think, e.g., testing a compiler (all combinations of all features)
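A rough sketch of the difference in test-suite size between the two strategies follows. The parameter names and class labels are hypothetical, and `each_choice` is just one simple way to satisfy the EC criterion, not the only one.

```python
from itertools import product

def each_choice(classes):
    """Build cases so that every class of every parameter appears at least once."""
    names = list(classes)
    rounds = max(len(values) for values in classes.values())
    return [{name: classes[name][i % len(classes[name])] for name in names}
            for i in range(rounds)]

def all_combinations(classes):
    """Build one case per combination of classes across all parameters."""
    names = list(classes)
    return [dict(zip(names, combo))
            for combo in product(*(classes[name] for name in names))]

# Hypothetical equivalence classes for two input parameters.
classes = {
    "list_size": ["empty", "one element", "many elements"],
    "ordering": ["sorted", "unsorted"],
}
ec_cases = each_choice(classes)
ac_cases = all_combinations(classes)
print(len(ec_cases), len(ac_cases))
```

EC grows with the largest number of classes for any one parameter, while AC grows with the product of the class counts, which is why AC quickly becomes unrealistic.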

Example Partitioning

• Date-related program
  • Month: 28, 29, 30, 31 days
  • Year:
    • Leap
    • Standard non-leap
    • Special non-leap (x100)
    • Special leap (x400)
  • Month-to-month transition
  • Year-to-year transition
  • Time zone/date line locations
• All combinations: some do not make sense
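As an illustration of the year classes, the sketch below picks one representative year per class and checks a hypothetical `days_in_february` function against the standard library's `calendar.monthrange`. The function and the chosen years are assumptions for the example.

```python
import calendar

def days_in_february(year):
    """Hypothetical function under test (Gregorian calendar rules)."""
    is_leap = year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
    return 29 if is_leap else 28

# One representative year for each equivalence class listed above.
year_classes = {
    "leap": 2024,
    "standard non-leap": 2023,
    "special non-leap (x100)": 1900,
    "special leap (x400)": 2000,
}

# The standard library acts as the oracle for the expected month length.
results = {
    label: days_in_february(year) == calendar.monthrange(year, 2)[1]
    for label, year in year_classes.items()
}
print(results)
```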

About Partition Testing

• Applicable to all levels of testing
  • unit, class, integration, system
• Black box
  • Based only on input space, not the implementation
• A natural and attractive idea, applied by many (most) testers
• No rigorous basis for assessing effectiveness, as there is generally no way of being certain that partition corresponds to reality

Test Automation

• Testing is time consuming
• Should be automated as much as possible
• At a minimum, regression tests should be run repeatedly and automatically
• Many tools exist to help
  • E.g., automating test execution with “xUnit” tools
  • http://en.wikipedia.org/wiki/XUnit
• It is possible to automate more than just test execution

Test Automation

• Generation
  • Test inputs
  • Selection of test data
  • Test driver code
• Execution
  • Running the test code
  • Recovering from failures
• Evaluation
  • Oracle: classify pass/no pass
  • Other info about results
• Test quality estimation
  • Coverage measures
  • Other test quality measures
  • Feedback to test data generator
• Management
  • Save tests for regression testing

Automated Widely

(The slide repeats the Test Automation breakdown of generation, execution, evaluation, test quality estimation, and management, with the widely automated activities highlighted; the highlighting is not reproduced here.)

Difficult to Automate

(The slide repeats the Test Automation breakdown of generation, execution, evaluation, test quality estimation, and management, with the activities that are difficult to automate highlighted; the highlighting is not reproduced here.)

What to Unit Test?

• Mission critical: test or die

• Complex: test or suffer

• Everything non-trivial: test or waste time

• Everything trivial: test == waste of time

Code Coverage Metrics

• Take a critical view
  • E.g.: Java getters and setters are usually trivial
  • Not testing them results in a low code coverage metric (< 50%)
• But they can indicate poorly covered parts of code
  • Example: error handling

xUnit

• cppunit — C++

• JUnit — Java

• NUnit — .NET

• SUnit — Smalltalk
  • This was the first unit testing library
• pyUnit — Python
• vbUnit — Visual Basic
• ...
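For a feel of what an xUnit-style test looks like, here is a minimal sketch using Python's standard `unittest` module (the library that PyUnit became). The function `clamp` and the test names are hypothetical and chosen only for this example.

```python
import unittest

def clamp(value, low, high):
    """Hypothetical function under test: restrict value to [low, high]."""
    return max(low, min(value, high))

class ClampTest(unittest.TestCase):
    def test_inside_range(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_below_range(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_above_range(self):
        self.assertEqual(clamp(42, 0, 10), 10)

# Load and run the test case programmatically instead of via the command line.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.testsRun, result.wasSuccessful())
```

The other xUnit libraries follow the same pattern: a test class, one method per test case, and assertion helpers that report failures to a runner.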

Mock Objects

• Often (practically) impossible to include real objects, those used in the full application, in test cases
• To test code that depends on such objects, one often uses mock objects instead
• A mock object simulates some part of the behavior of another object, or objects
• Useful in situations where
  • Real objects could provide non-deterministic data
  • States of real objects are hard to reproduce (e.g., they result from interactive use of the software, or from erroneous cases)
  • Functionality of real objects has not yet been implemented
  • Real objects are slow to produce results
  • Set-up/tear-down requires lots of work and/or is time consuming
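The non-deterministic case can be sketched with Python's `unittest.mock`. The `greeting` function and the clock object with an `hour()` method are hypothetical; only `Mock`, `return_value`, and `assert_called_once_with` are real library features.

```python
from unittest.mock import Mock

def greeting(clock):
    """Code under test: depends on a clock object with a hypothetical hour() method."""
    return "Good morning" if clock.hour() < 12 else "Good afternoon"

# A real clock would give different answers at different times of day.
# The mocks pin the answer so that each branch can be tested deterministically.
morning_clock = Mock()
morning_clock.hour.return_value = 9

afternoon_clock = Mock()
afternoon_clock.hour.return_value = 15

morning_result = greeting(morning_clock)
afternoon_result = greeting(afternoon_clock)

# The mock also records how it was used.
morning_clock.hour.assert_called_once_with()
print(morning_result, "/", afternoon_result)
```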

Knowing What is Being Tested

• Assume a failed test involves two classes/data types
• Who to blame?
• One class’ defect can cause the other class to fail
• Essentially, this is not unit testing, but rather integration testing
• “Mocking” one class makes it clear which class to blame for failures

Mocks and Assigning Blame in Integration Testing

• Direct integration testing: blame assignment unclear
  • Code + database
  • My code + your code
• Integration testing with mock objects: blame assignment clear
  • Code + mock database
  • My code + mock of your code
  • Mock of my code + your code
• Mocks help to make it clear what the system under test (SUT) is
• As with scientific experiments, only change the variable being measured, control others

Terminology

• Dummy object: Passed around, never used. Used for filling parameter lists, etc.
• Fake object: A working implementation, but somehow simplified, e.g., uses an in-memory database instead of a real one
• Stub: Provides canned answers to calls made during a test, but cannot respond to anything outside what it is programmed for
• Mock object: Mimics some of the behavior of the real object, for example, dealing with sequences of calls
• The definitions are a bit overlapping and ambiguous, but the terms are being used, and it is good to know their meaning, even if imprecise
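The four terms can be put side by side in one small test. Everything here (`register`, `FakeUserStore`, `StubValidator`, the e-mail address) is a hypothetical illustration; given how much the definitions overlap, other authors might label the same objects differently.

```python
from unittest.mock import Mock

def register(store, validator, mailer, audit_log, name, email):
    """Hypothetical code under test."""
    if not validator.is_valid(email):
        audit_log.record("rejected", name)
        return False
    store.save(name, email)
    mailer.send(email, "Welcome")
    return True

class FakeUserStore:
    """Fake: working but simplified; a dict stands in for a real database."""
    def __init__(self):
        self.users = {}

    def save(self, name, email):
        self.users[name] = email

class StubValidator:
    """Stub: returns a canned answer regardless of input."""
    def is_valid(self, email):
        return True

dummy_audit_log = object()  # Dummy: fills the parameter list, never used on this path
mailer = Mock()             # Mock: records calls so the interaction can be verified
store = FakeUserStore()

ok = register(store, StubValidator(), mailer, dummy_audit_log, "ada", "ada@example.org")
mailer.send.assert_called_once_with("ada@example.org", "Welcome")
print(ok, store.users)
```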

White Box Testing

• White box – you know what is inside, i.e. the code
• Idea
  • To assess the effectiveness of a test suite, measure how much of the program it exercises
• Concretely
  • Choose a kind of program element, e.g., instructions (instruction coverage) or paths (path coverage)
  • Count how many are executed at least once
  • Report as percentage
• A test suite that achieves 100% coverage achieves the chosen criterion. Example:
  • “This test suite achieves instruction coverage for routine r”
  • Means that for every instruction i in r, at least one test executes i

Coverage Criteria

• Instruction (or statement) coverage
  • Measure instructions executed
  • Disadvantage: insensitive to some control structures
• Branch coverage
  • Measure conditionals whose paths are both/all executed
• Condition coverage
  • How many atomic Boolean expressions evaluate to both true and false
• Path coverage
  • How many of the possible paths are taken
  • path == sequence of branches from routine entry to exit

Using Coverage Measures to Improve Test Suite

• Coverage-guided test suite improvement
  1. Perform coverage analysis for a given criterion
  2. If coverage < 100%, find unexercised code sections
  3. Create additional test cases to cover them
• Process can be aided by a coverage analysis tool
  1. Instrument source code by inserting trace instructions
  2. Run instrumented code, yielding a trace file
  3. From the trace file, analyzer produces coverage report
• Many tools available for many languages
  • E.g. PureCoverage, SoftwareVerify, BullseyeCoverage, xCover, gcov, cppunit
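The instrument / run / report cycle can be imitated in a few lines with Python's `sys.settrace` hook, which stands in for inserted trace instructions. This is a toy sketch, not how the tools listed above work internally; `sign`, `trace_lines`, `coverage_report`, and the hand-written `EXECUTABLE` set are assumptions for the example.

```python
import sys

def sign(n):
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"

# Line offsets (relative to the `def` line) of the executable statements in sign().
EXECUTABLE = {1, 2, 3, 4, 5}

def trace_lines(func, *args):
    """Run func(*args) and return the executable line offsets it reached."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event == "line":
            hit.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return hit & EXECUTABLE

def coverage_report(func, test_inputs):
    """Return (percentage of executable lines covered, sorted uncovered offsets)."""
    covered = set()
    for value in test_inputs:
        covered |= trace_lines(func, value)
    return 100 * len(covered) / len(EXECUTABLE), sorted(EXECUTABLE - covered)

print(coverage_report(sign, [-1]))
print(coverage_report(sign, [-1, 0, 7]))
```

The uncovered offsets in the report play the role of step 2 in the improvement loop: they point at the code for which additional test cases are needed.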

Example: Source Code

    class ACCOUNT feature
        balance: INTEGER

        withdraw (sum: INTEGER)
            do
                if balance >= sum then
                    balance := balance - sum
                    if balance = 0 then
                        io.put_string ("Account empty%N")
                    end
                else
                    io.put_string ("Less than ")
                    io.put_integer (sum)
                    io.put_string (" $ in account%N")
                end
            end
    end

Instruction Coverage

    class ACCOUNT feature
        balance: INTEGER

        withdraw (sum: INTEGER)
            do
                if balance >= sum then
                    balance := balance - sum
                    if balance = 0 then
                        io.put_string ("Account empty%N")
                    end
                else
                    io.put_string ("Less than ")
                    io.put_integer (sum)
                    io.put_string (" $ in account%N")
                end
            end
    end

    -- TC1:
    create a
    a.set_balance (100)
    a.withdraw (1000)

    -- TC2:
    create a
    a.set_balance (100)
    a.withdraw (100)

Condition and Path Coverage

    class ACCOUNT feature
        balance: INTEGER

        withdraw (sum: INTEGER)
            do
                if balance >= sum then
                    balance := balance - sum
                    if balance = 0 then
                        io.put_string ("Account empty%N")
                    end
                else
                    io.put_string ("Less than ")
                    io.put_integer (sum)
                    io.put_string (" $ in account%N")
                end
            end
    end

    -- TC1:
    create a
    a.set_balance (100)
    a.withdraw (1000)

    -- TC2:
    create a
    a.set_balance (100)
    a.withdraw (100)

    -- TC3:
    create a
    a.set_balance (100)
    a.withdraw (99)
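To make the three test cases executable, here is a hedged Python port of the ACCOUNT example. The class `Account`, the helper `run_case`, and the use of a constructor argument in place of `set_balance` are assumptions of this sketch; the branch structure follows the Eiffel routine.

```python
import io
from contextlib import redirect_stdout

class Account:
    def __init__(self, balance=0):
        self.balance = balance

    def withdraw(self, amount):
        if self.balance >= amount:
            self.balance -= amount
            if self.balance == 0:
                print("Account empty")
        else:
            print(f"Less than {amount} $ in account")

def run_case(balance, amount):
    """Run one withdrawal and return (final balance, captured output)."""
    account = Account(balance)
    out = io.StringIO()
    with redirect_stdout(out):
        account.withdraw(amount)
    return account.balance, out.getvalue()

tc1 = run_case(100, 1000)  # outer condition false: else branch
tc2 = run_case(100, 100)   # outer condition true, inner condition true
tc3 = run_case(100, 99)    # outer condition true, inner condition false
print(tc1, tc2, tc3)
```

TC1 and TC2 together execute every instruction, but only TC3 takes the path where the withdrawal succeeds and the inner condition is false, which is why it is needed for condition and path coverage.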

Dataflow Oriented Testing

• Focuses on how variables are defined, modified, and accessed throughout the run of the program
• Goal
  • Execute certain paths between a definition of a variable in the code and certain uses of that variable

Motivation

• Instruction coverage: all nodes in the control flow graph
• Branch coverage: all edges in the control flow graph
• Neither tests interactions
• Could use path coverage, but it often leads to an impractical number of test cases
  • Only a few paths uncover additional faults
  • Need to distinguish “important” paths
• Intuition: statements interact through dataflow
  • Value computed in one statement, used in another
  • Faulty value in a variable revealed only when it is used, other paths can be ignored

Access Related Defect Candidates

• Dataflow information also helps to find suspicious access related issues
  • Variable defined but never used/referenced
  • Variable used but never defined/initialized
  • Variable defined twice before use
• Many of these are detected by static analysis tools
  • E.g. PCLint, Coverity, Klocwork, CodeSonar, Cppcheck

Kinds of Accesses to a Variable

• Definition (def) - changing the value of a variable
  • Initialization, assignment
• Use - reading the value of a variable (without changing)
  • Computational use (c-use)
    • Use variable for computation
  • Predicative use (p-use):
    • Use variable in a predicate
• Kill - any operation that causes the variable to be deallocated, undefined, no longer usable
• Examples
  • a = b * c: c-use of b; c-use of c; def of a
  • if (x > 0)...: p-use of x

Characterizing Paths in a Dataflow Graph

• For a path or sub-path p and a variable v:
  • def-clear for v
    • No definition of v occurs in p
  • DU-path for v
    • p starts with a definition of v
    • Except for this first node, p is def-clear for v
    • v encounters either a c-use in the last node or a p-use along the last edge of p
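The two definitions can be checked mechanically on a small annotated graph. The sketch below follows the wording of the slide literally; the five-node graph, the variable `x`, and the function names are made up for illustration and are not the `withdraw` graph from the lecture.

```python
def is_def_clear(path, defs, var):
    """True if no node on the path defines var."""
    return all(var not in defs.get(node, set()) for node in path)

def is_du_path(path, defs, c_uses, p_uses, var):
    """Check the three DU-path conditions stated above for var."""
    if len(path) < 2 or var not in defs.get(path[0], set()):
        return False  # must start with a definition of var
    if not is_def_clear(path[1:], defs, var):
        return False  # var redefined after the first node
    last_node, last_edge = path[-1], (path[-2], path[-1])
    return var in c_uses.get(last_node, set()) or var in p_uses.get(last_edge, set())

# Hypothetical annotated control flow graph:
#   0: x = read()      1: if x > 0      2: y = x * 2
#   3: x = 0           4: print(x)
# Edges: 0->1, 1->2, 1->3, 2->4, 3->4
defs = {0: {"x"}, 2: {"y"}, 3: {"x"}}
c_uses = {2: {"x"}, 4: {"x"}}
p_uses = {(1, 2): {"x"}, (1, 3): {"x"}}

for path in [(0, 1, 2), (0, 1, 2, 4), (0, 1, 3, 4), (3, 4), (1, 2)]:
    print(path, is_du_path(path, defs, c_uses, p_uses, "x"))
```

The path (0, 1, 3, 4) is rejected because node 3 redefines `x`, so the value printed at node 4 no longer comes from the definition at node 0.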

Example: Control Flow Graph for withdraw

    class ACCOUNT feature
        balance: INTEGER

        withdraw (sum: INTEGER)
            do
                if balance >= sum then
                    balance := balance - sum
                    if balance = 0 then
                        io.put_string ("Account empty%N")
                    end
                else
                    io.put_string ("Less than ")
                    io.put_integer (sum)
                    io.put_string (" $ in account%N")
                end
            end
    end

Data Flow Graph for sum in withdraw

Data Flow Graph for balance in withdraw

Some Coverage Criteria

• all-defs: execute at least one def-clear sub-path between every definition of every variable and at least one reachable use of that variable
• all-p-uses: execute at least one def-clear sub-path from every definition of every variable to every reachable p-use of that variable
• all-c-uses: execute at least one def-clear sub-path from every definition of every variable to every reachable c-use of the respective variable

Some Coverage Criteria

• all-c-uses/some-p-uses: apply all-c-uses; then, if any definition of a variable is not covered, use p-use
• all-p-uses/some-c-uses: symmetrical to all-c-uses/some-p-uses
• all-uses: execute at least one def-clear sub-path from every definition of every variable to every reachable use of that variable

Dataflow Coverage Criteria for sum

• all-defs - execute at least one def-clear sub-path between every definition of every variable and at least one reachable use of that variable
  • (0,1)
• all-p-uses - execute at least one def-clear sub-path from every definition of every variable to every reachable p-use of that variable
  • (0,1)
• all-c-uses - execute at least one def-clear sub-path from every definition of every variable to every reachable c-use of the respective variable
  • (0,1,2); (0,1,2,3,4); (0,1,5)

Dataflow Coverage Criteria for sum

• all-c-uses/some-p-uses - apply all-c-uses; then, if any definition of a variable is not covered, use p-use
  • (0,1,2); (0,1,2,3,4); (0,1,5)
• all-p-uses/some-c-uses - symmetrical to all-c-uses/some-p-uses
  • (0,1)
• all-uses - execute at least one def-clear sub-path from every definition of every variable to every reachable use of that variable
  • (0,1); (0,1,2); (0,1,2,3,4); (0,1,5)

Subsumption of Dataflow Coverage Criteria

Who Tests the Tester?

• All tests pass. Is the software really that good?
  • How does one know?
  • Is the software perfect or is the coverage too limited?
• Test the tests
  • Intentionally introduce defects
  • If tests find the defects, test suite good
  • If no bugs are found, test suite insufficient
• Have to plan defect types and locations
  • Random?
  • Weight based on code criticality?
  • Amount of coverage?

Fault Injection Terminology

• Faulty versions of the program = mutants
  • Only versions not equivalent to the original program are considered to be mutants (even though the original program was faulty)
• A mutant is
  • Killed if at least one test case detects the injected fault
    • What if injected fault is missed, but causes test to detect previously undetected fault in original code?
  • Alive otherwise
• A mutation score (MS) is associated to the test set to measure its effectiveness

Mutation Operators

• Mutation operator
  • A rule that specifies a syntactic variation of the program text so that the modified program still compiles
  • Maybe also require that it pass static analysis
• A mutant is the result of an application of a mutation operator
• The quality of the mutation operators determines the quality of the mutation testing process

Mutant Examples

• Original program

    if (a < b)
        b = b - a;
    else
        b = 0;

• Mutants (alternatives for each line of the original)

    if (a < b)    →   if (a <= b)   |   if (a > b)   |   if (c < b)
    b = b - a;    →   b = b + a;    |   b = x - a;
    b = 0;        →   b = 1;        |   a = 0;


Example Mutant Operators

• Replace arithmetic operator by another
• Replace relational operator by another
• Replace logical operator by another
• Replace a variable by another
• Replace a variable (in use position) by a constant
• Replace number by absolute value
• Replace a constant by another
• Replace while(...){...} by do {...} while(...)
• Replace condition of test by the test’s negation
• Replace call to a routine by a call to another routine
• Language-specific operators: OO, AOP

Test Quality Measures

• $S$ - system composed of $n$ components denoted $C_i$
• $d_i$ - number of killed mutants after applying $C_i$’s unit test sequence $T_i$ to $C_i$
• $m_i$ - total number of mutants of component $C_i$
• Mutation score MS for $C_i$ and its unit test sequence $T_i$

$$MS(C_i, T_i) = \frac{d_i}{m_i}$$

• Test quality TQ is defined as MS
• System Test Quality

$$STQ(S) = \frac{\sum_{i=1}^{n} d_i}{\sum_{i=1}^{n} m_i}$$

• STQ is a measure of the quality of the entire test suite for the entire system
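A small worked example may make the scores concrete. The sketch ports the earlier `if (a < b)` program to Python and hand-writes three of its mutants; the function names, the two test suites, and the `(d_i, m_i)` pairs passed to `system_test_quality` are assumptions for illustration. The `a <= b` mutant is left out because it behaves identically to the original (when a equals b, b - a is 0) and so would not count as a mutant.

```python
def original(a, b):
    """Python port of: if (a < b) b = b - a; else b = 0; returning b."""
    return b - a if a < b else 0

# Hand-written, non-equivalent mutants of the original.
mutants = {
    "a > b": lambda a, b: b - a if a > b else 0,
    "b + a": lambda a, b: b + a if a < b else 0,
    "else 1": lambda a, b: b - a if a < b else 1,
}

def is_killed(mutant, tests):
    """A mutant is killed if at least one test case observes a wrong result."""
    return any(mutant(*args) != expected for args, expected in tests)

def mutation_score(mutants, tests):
    """MS = d / m: killed mutants over total mutants."""
    killed = sum(is_killed(m, tests) for m in mutants.values())
    return killed / len(mutants)

def system_test_quality(components):
    """STQ = sum of d_i over sum of m_i, given (d_i, m_i) pairs."""
    return sum(d for d, _ in components) / sum(m for _, m in components)

weak_suite = [((1, 5), 4)]                  # exercises only the a < b branch
strong_suite = [((1, 5), 4), ((5, 1), 0)]   # also exercises the else branch

print(mutation_score(mutants, weak_suite))
print(mutation_score(mutants, strong_suite))
print(system_test_quality([(2, 3), (3, 3)]))
```

The weak suite leaves the "else 1" mutant alive, which points directly at the missing test for the else branch.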

Testing Strategy

• Defining the process
  • Test plan
  • Input and output documents
• Who is testing?
  • Developers / special testing teams / customer
• What test levels are needed?
  • Unit, integration, system, acceptance, regression
• Order of tests?
  • Top-down, bottom-up, combination (pertains to integration testing)
• Running the tests
  • Manually
  • Use of tools
  • Automatically

Who Tests?

• Any significant project should have a separate QA team
  • Avoids self-delusion
  • QA requires a skill-set of its own
• Unit tests
  • Developers
• Integration tests
  • Developers or QA team
• System test
  • QA team
• Acceptance test
  • Customer + QA team

Testing Personnel

• Developing tests requires
  • Detailed understanding of the system
  • Application and solution domain knowledge
  • Knowledge of the testing techniques
  • Skill to apply these techniques
• Testing is done best by independent testers
  • We often develop a certain mental attitude that the program should behave in a certain way when in fact it does not
  • Developers often stick to the data set with which the program works
  • Often the case that every new user uncovers a new class of defects

Test Report Classification, Severity

• Defined in advance
• Applied to every reported failure
• Analyzes each failure to determine whether it reflects a fault, and if so, how damaging
• Example classification:
  • not a fault
  • cosmetic
  • minor
  • serious
  • blocking

Test Report Classification, Status

• As a defect report makes its way through the bug database, its status changes to reflect the state of the defect and its repairs
• For example:
  • registered
  • open
  • re-opened
  • corrected
  • integrated
  • delivered
  • closed
  • irreproducible
  • canceled

Example Responsibility Definitions

• Who runs each kind of test?
• Who is responsible for assigning severity and status?
• What is the procedure for disputing such an assignment?
• What are the consequences on the project of a defect at each severity level?
  • E.g. “the product shall be accepted when two successive rounds of testing, at least one week apart, have evidenced fewer than m serious faults and no blocking faults”

IEEE is Your Friend

• IEEE 829-2008

• Standard for Software and System Test

Documentation

• http://en.wikipedia.org/wiki/IEEE_829

Many Issue Tracking Systems

• Pick one and use it

• Example: Traq

• See Kode Vicious column comments on the shortcomings of bug tracking systems

Traq System (1)

Traq System (2)

When to Stop Testing?

• Complete testing is infeasible
  • One needs a correctness proof (usually not feasible)
• Nevertheless, at some point, software testing has to be stopped and the product shipped
• The stopping time can be decided by the trade-off between time and budget
• Or testing can be stopped if an agreed-upon reliability estimate meets a set requirement
  • Set based on experience from earlier projects/releases
  • Requires accurate record keeping of defects

Full-Employment Theorem for Software Testing Researchers

• The Pesticide Paradox:
  • “Every method you use to prevent or find bugs leaves a residue of subtler bugs against which those methods are ineffectual.”
• Unlike real bugs, software bugs immune to current testing methodologies do not evolve and multiply themselves
• Instead, “killing” the “easy bugs” allows the software to grow with more features and more complexity, creating new possibilities for these subtle defects to manifest themselves as failures
• Therefore, the need for new software testing technologies is guaranteed ad infinitum :)

Boris Beizer, Software Testing Techniques, 2nd edition, 1990

Defects Can Be Found By Inspecting Code

• Formal inspections
• Walkthroughs
• Code reading
• Pair programming
• All of these techniques have a collaborative dimension
  • More than one pair of eyes staring at the code

Collaborative Construction

• Working on code development in close cooperation with others
• Motivation
  • Developers do not notice their own errors very easily
  • Others will not have the same blind spots
  • Thus, errors caught more easily by other people
• Takes place during construction process
  • E.g. pair programming
  • http://en.wikipedia.org/wiki/Pair_programming

Benefits

• Can be more effective at finding errors than testing alone
  • 35% errors found through low-volume beta testing
  • 55-60% errors found by design/code inspection
• Finds errors earlier in process
  • Reduces time and cost of fixing them
• Provides mentoring opportunity
  • Junior programmers learn from more senior programmers
• Creates collaborative ownership
  • No single “owner” of code
  • People can leave team more easily, since others have seen code
  • Wider pool of people to draw from when fixing later errors in code

Code Reviews

• Method shown to be effective in finding errors

• Ratio of time spent in review to time saved in later testing and error correction

• Ranges from 1:20 to 1:100

• Reduced defect correction from 40% of budget to 20%

• Maintenance cost of inspected code is 10% of that of non-inspected code

• Changes done with review: 95% correct vs. 20% without review

• Reviews cut errors by 20% to 80%

• Several others (examples from Code Complete)

Reviews vs. Testing

• Finds different types of problems than testing

• Unclear error messages

• Bad commenting

• Hard-coded variable names

• Repeated code patterns

• Only high-volume beta testing (and prototyping) find more errors than formal inspections

• Inspections typically take 10-15% of budget, but usually reduce overall project cost

• Reviews can provide input to test plan

Example Method: Formal Inspection, Some Rules

• Focus on detection, not correction

• Reviewers prepare ahead of time and arrive with a list of what they have discovered

• The review meeting is not for sitting and reading code

• No meeting unless everyone is prepared

• Distinct roles assigned to participants

• No deviation from roles during review

• Data is collected and fed into future reviews

• Checklists focus reviewers’ attention on common past problems

Roles

• Moderator

• Keep review moving, handle details.

• Technically competent

• Author

• Minor role, design/code should speak for itself

• Reviewer(s)

• Focus on code, not author

• Find errors before and during the meeting

• Scribe

• Record keeper, not moderator, not author

• Management

• Not involved

• 3 people min

• ~6 people max

Stages

• Overview

• Authors might give overview to reviewers to familiarize them

• Separate from review meeting

• Preparation

• Reviewers work alone to scrutinize code for errors

• Review rate of 100–500 lines per hour for typical code

• Reviewers can be assigned perspectives

• API client view, user’s view, etc.

• Inspection meeting

• Reviewer paraphrases code

• Explains design and logic

• Moderator keeps focus

• Scribe records defects when found

• No discussion on solutions

• No more than 1 meeting per day, max 2 hours

• Possibly “Third hour meeting”

• Outline solutions
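The preparation rate quoted above (100 to 500 lines per hour) can be turned into a rough planning estimate. The following is only a sketch: the function name and the 1,000-line module are hypothetical, and the rates are just the bounds given on the slide.

```python
def preparation_hours(lines_of_code, slow_rate=100, fast_rate=500):
    """Return (min_hours, max_hours) one reviewer needs to prepare.

    slow_rate and fast_rate are the lines-per-hour bounds quoted for
    typical code; they are defaults, not fixed constants.
    """
    if lines_of_code < 0:
        raise ValueError("lines_of_code must be non-negative")
    # Fastest reading gives the lower bound, slowest the upper bound.
    return lines_of_code / fast_rate, lines_of_code / slow_rate


# Hypothetical example: a 1,000-line module under inspection.
low, high = preparation_hours(1000)
print(f"Preparation: {low:.1f} to {high:.1f} hours per reviewer")
```

An estimate like this mainly helps decide how much code to schedule for a single inspection, given that preparation happens before the meeting and the meeting itself is capped at 2 hours.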

Different Methods Catch Different Defects

Observations

• No single technique detects more than 75% of defects

• Average detection rate is about 40%

• Human inspection seems more effective

• Code reading detected 80% more faults per (human) hour than testing

• However, testing catches different kinds of bugs, so still worthwhile

• V. Basili, R. Selby, “Comparing the Effectiveness of Software Testing Strategies”, IEEE Trans. SE, vol. SE-13, no. 12, Dec. 1987, pp. 1278-1296
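One way to see why combining techniques pays off is a back-of-the-envelope calculation. The sketch below assumes that each technique catches defects independently of the others, which the slides do not claim and which real projects only approximate. The function name is hypothetical; the 35% and 55% inputs are the figures quoted earlier for low-volume beta testing and design/code inspection.

```python
from math import prod


def combined_detection_rate(rates):
    """Fraction of defects caught by at least one technique.

    Assumes the techniques detect defects independently, so a defect
    escapes only if every technique misses it.
    """
    for r in rates:
        if not 0.0 <= r <= 1.0:
            raise ValueError("each rate must be between 0 and 1")
    return 1.0 - prod(1.0 - r for r in rates)


# Beta testing (35%) combined with inspection (55%), under independence.
rate = combined_detection_rate([0.35, 0.55])
print(f"Combined detection rate: {rate:.1%}")
```

Because different methods tend to catch different kinds of defects, the independence assumption is only a rough stand-in, so the result should be read as an illustration rather than a prediction.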

How Many Defects Are There?

• Industry average: 1-25 errors per 1 kLOC for delivered software

• Microsoft’s application division: ~10-20 defects / 1 kLOC during in-house testing, 0.5 / 1 kLOC in a released product (Moore 1992)

• Code inspections, testing, collaborative development

• Space shuttle software

• 0 defects / 500 kLOC

• Formal systems, peer reviews, statistical testing

• Humphrey’s Team Software Process

• 0.06 defects / 1 kLOC (Weber 2003)

• Process focuses on not creating bugs to begin with

• http://en.wikipedia.org/wiki/Team_software_process
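To make the densities above concrete, they can be scaled to a project size. This is a minimal sketch: the 50 kLOC project is a hypothetical example, the names are illustrative, and the densities are simply the per-kLOC figures quoted on the slide.

```python
# Defect densities (defects per kLOC) as quoted on the slide.
DENSITIES = {
    "industry average (low)": 1.0,
    "industry average (high)": 25.0,
    "Microsoft, released product": 0.5,
    "Team Software Process": 0.06,
}


def expected_defects(kloc, density_per_kloc):
    """Scale a per-kLOC defect density to a code base of `kloc` kLOC."""
    if kloc < 0 or density_per_kloc < 0:
        raise ValueError("inputs must be non-negative")
    return kloc * density_per_kloc


PROJECT_KLOC = 50  # hypothetical project size, not from the slides

for label, density in DENSITIES.items():
    defects = expected_defects(PROJECT_KLOC, density)
    print(f"{label}: about {defects:g} defects in {PROJECT_KLOC} kLOC")
```

The spread between the rows is the point of the comparison: the same amount of code can plausibly ship with a handful of defects or with over a thousand, depending on the process used.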

References

• Paul Ammann and Jeff Offutt, Introduction to Software Testing, Cambridge University Press, 2008

• Boris Beizer, Software Testing Techniques, 2nd edition, Van Nostrand Reinhold, 1990

• Steve McConnell, Code Complete, 2nd edition, Microsoft Press, 2004, Chapters 20-23
