Chapter 13: Statistical Methods for Continuous Measures


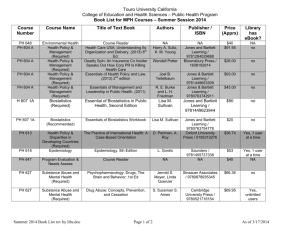

Handbook for Health Care Research, Second Edition, Chapter 13
© 2010 Jones and Bartlett Publishers, LLC

Testing for Normality

A key assumption of many tests in this chapter, such as the t test, is that the sample data come from a population that is normally distributed.
• Kolmogorov-Smirnov Test - tests whether the distribution of a continuous variable is the same for two groups. To check normality, it compares the distribution of the sample data with a normal distribution.
- Example: You want to test the effect of prone positioning on the PaO2 of patients in the ICU. Data are collected before and after positioning the patient, and you intend to use a paired t test, so you first check the data for normality.
- Null hypothesis: The two distributions are the same.
• See Figure 13.1 in the book.

Testing for Equal Variances

A key assumption of tests that compare two or more samples, such as the unpaired t test and ANOVA, is that the data in the samples have equal variances.
• F Ratio Test - calculated as the ratio of two sample variances; it shows whether the variance of one group is smaller than, larger than, or equal to the variance of the other group.
- Example: One of the pulmonary physicians in your hospital has questioned the accuracy of your lab's pulmonary function test results. Specifically, she questions whether the new lab technician you have hired (Tech A) can produce results as consistent as those of the other technician, who has years of experience (Tech B). You have each technician make a set of FEV1 measurements on the same patient. Because you are using only one patient, most of the variance in the measurements will be due to the two technicians.
- Null hypothesis: The variances of the two groups of data are the same.
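The sketch below illustrates the two assumption checks above. It uses Python's standard library only. First, it computes a one-sample Kolmogorov-Smirnov statistic D against a normal distribution. Second, it computes an F ratio with a two-sided p value from summary statistics. The function names (`ks_statistic`, `betainc_reg`, `f_ratio_test`) are hypothetical. The p value comes from a hand-written regularized incomplete beta function, not from any particular statistics program. The example inputs are the summary statistics from the FEV1 example: n = 30 per technician, with variances of 0.143 for Tech A and 0.033 for Tech B.

```python
import math
from statistics import NormalDist


def ks_statistic(sample, dist):
    """One-sample Kolmogorov-Smirnov D: the largest gap between the sample's
    empirical CDF and the CDF of the reference distribution `dist`."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = dist.cdf(x)
        # The empirical CDF jumps from (i - 1)/n to i/n at x; check both sides.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc_reg(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use I_x(a, b) = 1 - I_{1-x}(b, a) on the side where the fraction converges fast.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_ratio_test(var1, n1, var2, n2):
    """Two-sided F test for equal variances from summary statistics.
    Returns (F, df1, df2, p) with F = var1 / var2."""
    f = var1 / var2
    df1, df2 = n1 - 1, n2 - 1
    # Upper tail of the F distribution: P(F > f) = I_{df2/(df2 + df1*f)}(df2/2, df1/2).
    upper = betainc_reg(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f))
    p = min(1.0, 2.0 * min(upper, 1.0 - upper))
    return f, df1, df2, p


# FEV1 example: Tech A vs Tech B, n = 30 measurements each.
f, df1, df2, p = f_ratio_test(0.143, 30, 0.033, 30)
print(f"F = {f:.2f}, df = ({df1}, {df2}), p = {p:.2g}")
```

Here F = 0.143 / 0.033 ≈ 4.33 with 29 and 29 degrees of freedom. The p value falls well below 0.05, which agrees with the statistics program's report. For the normality check, you can pass hypothetical before/after differences as `ks_statistic(diffs, NormalDist(mean, sd))`. When the mean and SD are estimated from the same sample, the standard KS critical values are too conservative (the Lilliefors variant corrects for this), so treat D here as descriptive.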
F Ratio Test

Results of F Ratio Test

| | Tech A | Tech B |
|---|---|---|
| Sample size (n) | 30 | 30 |
| Mean | 1.206 | 1.173 |
| Variance | 0.143 | 0.033 |
| Standard deviation | 0.378 | 0.182 |
| Standard error of the mean | 0.069 | 0.033 |

Report from the Statistics Program
The p value is less than 0.05, so we reject the null hypothesis and conclude that the variances are not equal. The variance of measurements made by Tech A (0.143) is much greater than the variance of measurements made by Tech B (0.033).

Correlation and Regression

A basic assumption of this section is that the association between the two variables is linear.
• Pearson Product-Moment Correlation Coefficient - a correlation coefficient used with continuous variables measured on an interval level. The Pearson r statistic ranges from -1.0 (perfect negative correlation) through 0 (no correlation) to +1.0 (perfect positive correlation).
- Example: You decide to evaluate a device called the EzPAP for lung expansion therapy. It generates a continuous airway pressure proportional to the flow introduced at its inlet port. However, the user's manual does not say how the set flow is related to the resulting airway pressure, and we generally use the device without a pressure gauge attached. You connect a flowmeter and a pressure gauge to the device and record the pressures as you adjust the gas flow over a wide range.
- Null hypothesis: The two variables have no significant linear association.

Pearson Product-Moment Correlation Coefficient

Data Entry for Calculating the Correlation Coefficient

| Flow | Pressure (cm H2O) |
|---|---|
| 3 | 5 |
| 4 | 5 |
| 5 | 6 |
| 6 | 7 |
| etc. | etc. |

Report from Statistics Program
Correlation coefficient: 0.942. The p value is less than 0.001.
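The Pearson r described above comes from the sums of squared deviations. The minimal Python sketch below computes it and converts it to the t statistic (n - 2 degrees of freedom) used to test the null hypothesis of no linear association. The function names are hypothetical. The input is only the first four EzPAP pairs shown in the data-entry table, because the full data set is elided ("etc."). The result therefore approximates the reported 0.942 rather than reproducing it.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation coefficient for paired (x, y) data."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need equal-length x and y lists with at least three pairs")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for H0: no linear association."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Only the first four EzPAP pairs from the data-entry table.
flow = [3, 4, 5, 6]
pressure = [5, 5, 6, 7]
r = pearson_r(flow, pressure)
print(round(r, 3))  # 0.944
```

The p value for r is the two-tailed probability of the t distribution with n - 2 degrees of freedom. A statistics package or t table provides it.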
• Simple Linear Regression - A simple regression uses the values of one independent variable (x) to predict the value of a dependent variable (y). Regression analysis fits a straight line to a plot of the data.
- Example: Using the data table from the EzPAP, we perform a simple linear regression. The purpose of the experiment is to let us predict the amount of flow required for a desired level of pressure. Therefore, we designate pressure as the (known) independent variable and flow as the dependent variable.

Simple Linear Regression

[Figure: Results of linear regression analysis; x-axis: Pressure (cm H2O)]

Report from Statistics Program
Normality test: Passed (p = 0.511)
Constant variance test: Passed (p = 0.214)

| | Coefficient | p |
|---|---|---|
| Y-intercept | 1.045 | 0.021 |
| Pressure | 0.653 | 0.001 |

R²: 0.89
Standard error of estimate: 1.482

• Multiple Linear Regression - simple linear regression can be extended to cases where more than one independent (predictor) variable is present.
- Multiple linear regression assumes an association between one dependent variable and an arbitrary number (symbolized by k) of independent variables. The general equation is:

$$Y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_3 + \cdots + b_k x_k$$

• Logistic Regression - designed for predicting a qualitative dependent variable from observations of one or more independent variables.
- The qualitative dependent variable must be nominal and dichotomous, taking only two possible values (such as lived or died, or presence or absence), represented by values of 0 and 1.
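The simple linear regression above fits a least-squares line with pressure as x and flow as y. The Python sketch below shows that fit and the R² it reports. It then uses the coefficients from the statistics program's report (intercept 1.045, slope 0.653) to predict the flow for a desired pressure. The function names and the 10 cm H2O target are hypothetical, chosen for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


def r_squared(xs, ys, intercept, slope):
    """Fraction of the variance in y explained by the fitted line."""
    my = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Coefficients from the statistics program's report for the EzPAP data,
# with pressure (cm H2O) as the independent variable and flow as the dependent variable.
REPORTED_INTERCEPT = 1.045
REPORTED_SLOPE = 0.653


def predicted_flow(pressure_cm_h2o):
    """Flow that the reported regression line predicts for a desired pressure."""
    return REPORTED_INTERCEPT + REPORTED_SLOPE * pressure_cm_h2o


print(round(predicted_flow(10), 3))  # 7.575
```

For a simple regression, R² equals the square of Pearson's r (0.942² ≈ 0.89, matching the report). Predictions are only trustworthy within the range of pressures actually measured.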
- The general logistic regression equation is:

$$P = \frac{e^{Y}}{1 + e^{Y}}$$

where P is the probability that the dependent variable equals 1 and $Y = b_0 + b_1 x_1 + b_2 x_2 + \cdots + b_k x_k$.

Comparing One Sample to a Known Value

• One-Sample t Test - compares a sample mean to a hypothesized population mean and determines the probability that the observed difference between the sample mean and the hypothesized mean occurred by chance.
• The probability of chance occurrence is the p value:
- A p value close to 1.0 implies that the difference between the sample and hypothesized means could easily have occurred by chance.
- A small p value (less than 0.05) suggests that such a difference would be unlikely (less than 1 chance in 20) to occur by chance if the sample came from a population with the hypothesized mean.

Comparing Two Samples, Unmatched Data

• Unpaired t Test - compares the means of two groups and determines the probability that the observed difference occurred by chance.
• The probability of chance occurrence is the p value:
- A p value close to 1.0 implies that the difference between the two sample means could easily have occurred by chance.
- A small p value (less than 0.05) suggests that such a difference would be unlikely (less than 1 chance in 20) to occur by chance if the two samples came from populations with the same mean.

Comparing Two Samples, Matched Data

• Paired t Test - used for comparing two measurements from the same individual or experimental unit.
- The two measurements can be made at different times or under different conditions.
• The paired t test is used to evaluate the hypothesis that the mean of the differences between the paired measurements is equal to some hypothesized value, usually zero.
• The paired t test compares the two samples and determines the probability of the observed difference occurring by chance. The chance is reported as the p value.
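The three t tests above differ mainly in how they estimate the standard error of the difference being tested. The minimal Python sketch below computes each t statistic and its degrees of freedom. The function names are hypothetical. The unpaired version assumes equal variances and uses a pooled variance; it is not the Welch variant. The paired version is simply a one-sample t test on the within-pair differences. Converting t to a p value requires the t distribution (a statistics package or table), which this sketch does not compute.

```python
import math
from statistics import mean, stdev, variance


def one_sample_t(sample, mu0):
    """One-sample t statistic: t = (mean - mu0) / (s / sqrt(n)), df = n - 1."""
    n = len(sample)
    t = (mean(sample) - mu0) / (stdev(sample) / math.sqrt(n))
    return t, n - 1


def unpaired_t(a, b):
    """Unpaired (Student's) t statistic with a pooled variance; assumes equal variances."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


def paired_t(before, after, mu0=0.0):
    """Paired t statistic: a one-sample t test on the within-pair differences."""
    diffs = [y - x for x, y in zip(before, after)]
    return one_sample_t(diffs, mu0)
```

In the prone-positioning example, `paired_t(pao2_before, pao2_after)` would test whether the mean change is zero. Here `pao2_before` and `pao2_after` are hypothetical lists of measurements in the same patient order.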
- A small p value (less than 0.05) suggests that such a difference would be unlikely (less than 1 chance in 20) to occur by chance if the true mean difference equaled the hypothesized value.

Comparing Three or More Samples, Unmatched Data

When several independent comparisons are made, the probability of getting at least one falsely significant comparison when there are actually no significant differences increases with the number of comparisons. With k comparisons each tested at the 0.05 level, it is $1 - (1 - 0.05)^k$, or about 0.14 for three comparisons. The proper way to compare more than two mean values is to use analysis of variance (ANOVA).
• One-Way ANOVA - assesses values collected at one point in time for more than two different groups of subjects. It is used when you want to determine whether the means of two or more different groups are affected by a single experimental factor.
- ANOVA tests the null hypothesis that the mean values of all the groups are the same versus the alternative hypothesis that at least one of the mean values is different.
• Two-Way ANOVA - compares values within groups as well as between groups.
- Appropriate for looking at comparisons of groups at different times as well as the differences within each group over the course of the study.
• In a two-factor ANOVA, there are two experimental factors, which are varied for each experimental group.
• A two-factor ANOVA tests three hypotheses:
- There is no difference among the levels of the first factor.
- There is no difference among the levels of the second factor.
- There is no interaction between factors. That is, if there is any difference among levels of one factor, the differences are the same regardless of the second factor level.
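The one-way ANOVA above compares the variation between group means with the variation within groups. The minimal Python sketch below computes that F ratio and its degrees of freedom for independent groups. The function name is hypothetical. The p value is the upper tail of the F distribution with (df_between, df_within) degrees of freedom, from a statistics package or table. The sketch does not cover the two-way design.

```python
def one_way_anova(*groups):
    """One-way ANOVA for independent groups.

    Returns (F, df_between, df_within).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean (between groups).
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of each observation around its own group mean (within groups).
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical data for three independent groups.
print(one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

A large F means the group means differ by more than the within-group scatter would explain. A significant F only says that at least one mean differs; identifying which ones requires a follow-up multiple-comparison procedure.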
Comparing Three or More Samples, Matched Data

• One-Way Repeated Measures ANOVA - examines values collected at more than one point in time for a single group of subjects.
- It is used when you want to determine whether a single group of individuals was affected by a series of experimental treatments or conditions.
• ANOVA tests the null hypothesis that the mean values under all the treatments or conditions are the same versus the alternative hypothesis that at least one of the mean values is different.
• Two-Way Repeated Measures ANOVA - compares values within groups as well as between groups.
- This analysis would be appropriate for looking at comparisons of groups at different times as well as the differences within each group over the course of the study.
• The test is for differences between the different levels of each factor and for interactions between the factors.
• A two-factor ANOVA tests three hypotheses:
- There is no difference among the levels of the first factor.
- There is no difference among the levels of the second factor.
- There is no interaction between factors.
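In a one-way repeated measures ANOVA, each subject serves as its own control, so the variation between subjects is removed from the error term. The minimal Python sketch below shows that partition. It assumes a complete table `data[i][j]` (subject i, treatment j) with no missing values. The function name is hypothetical. The sketch covers only the one-way case, not the two-way repeated measures design, and applies no sphericity correction such as Greenhouse-Geisser.

```python
def repeated_measures_anova(data):
    """One-way repeated measures ANOVA.

    data[i][j] is the value for subject i under treatment j; every subject
    must be measured under every treatment. Returns (F, df_treatment, df_error).
    """
    n = len(data)      # number of subjects
    k = len(data[0])   # number of treatments or conditions
    grand = sum(sum(row) for row in data) / (n * k)
    treat_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_treat = n * sum((m - grand) ** 2 for m in treat_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual variation after removing treatment and subject effects.
    ss_error = ss_total - ss_treat - ss_subj
    df_treat, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_treat / df_treat) / (ss_error / df_error)
    return f, df_treat, df_error


# Hypothetical data: three subjects, each measured under three conditions.
print(repeated_measures_anova([[1, 2, 4], [2, 3, 4], [3, 5, 6]]))
```

Removing the subject sum of squares is what makes the matched design more sensitive than a one-way ANOVA on the same numbers. Consistent differences between individuals no longer inflate the error term.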