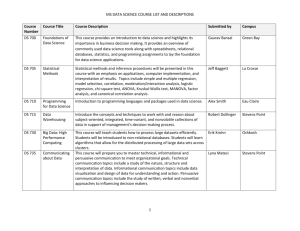

Intro to Text Mining and Analytics


CSC 594 Topics in AI – Text Mining and Analytics, Fall 2015/16

1. Introduction

Unstructured Data
• “80 % of business-relevant information originates in unstructured form, primarily text.” (a quote from 2008)
• “Based on the industry’s current estimations, unstructured data will occupy 90% of the data by volume in the entire digital space over the next decade.” (a quote from 2010)
• “The possibilities for data mining from large text collections are virtually untapped. Text expresses a vast, rich range of information, but encodes this information in a form that is difficult to decipher automatically. For example, it is much more difficult to graphically display textual content than quantitative data.” (Marti Hearst, UC Berkeley, 2007)

IBM Watson Content Analytics: Discover Hidden Value in Your Unstructured Data

Text Mining and Analytics
• In this course, the terms text analytics, text data mining, and text mining are used almost synonymously.
• Text analytics uses algorithms to turn free-form text (unstructured data) into data that can be analyzed (structured data) by applying statistical and machine learning methods, as well as Natural Language Processing (NLP) techniques.
• Once structured data is obtained, the same mining and analytic techniques used for any other structured data can be applied.
• So the most significant part of text mining/analytics is converting texts into structured data.

Converting Text into Structured Data
• A large amount of preprocessing is required to convert text:
  – Cleaning up ‘dirty’ texts
    • Removing mark-up tags from web documents, non-text symbols such as emoticons/emoji, and extraneous strings such as “AHHHHHHHHHHHHHHHHHHHHH”
    • Correcting misspelled words
  – Tokenization
    • Removing punctuation, normalizing upper/lower case, etc.
  – Sentence splitting
  – Identifying multi-word expressions (e.g. “as well as”, “radio wave”) and Named Entities (e.g. “Allied Waste”, “Super Mario Bros.”)
• Adding other linguistic information
  – Parts-of-speech (e.g.
    noun, verb, adjective, adverb, preposition)
• Filtering non-significant/irrelevant words (to reduce dimensions)
  – Filtering non-content words using a stop-list (e.g. “the”, “a”, “an”, “and”)
  – Combining tokens by stemming/lemmatizing or by using synonyms
• Other NLP features/techniques, e.g. n-grams, syntax trees

Text Mining Process Pipeline
• The process is essentially a linear pipeline.
• Feedback from the results of text mining might affect earlier preprocessing steps (back to parsing, or even to data collection).

Text Mining Paradigm

Data Mining – Two Broad Areas
– Pattern Discovery/Exploratory Analysis (Unsupervised Learning)
  • There is no target variable, and some form of analysis is performed to do the following:
    – identify or define homogeneous groups, clusters, or segments
    – find links or associations between entities, as in market basket analysis
– Prediction (Supervised Learning)
  • A target variable is used, and some form of predictive or classification model is developed.
  • Input variables are associated with values of a target variable, and the model produces a predicted target value for a given set of inputs.

Text Mining Applications – Unsupervised
– Information retrieval (IR)
  • finding documents with relevant content of interest
  • used for researching medical, scientific, legal, and news documents such as books and journal articles
– Document categorization for organizing
  • clustering documents into naturally occurring groups
  • extracting themes or concepts
– Anomaly detection
  • identifying unusual documents that might be associated with cases requiring special handling, such as unhappy customers, fraud activity, and so on

Text Mining Applications – Unsupervised
• Text clustering
• Trend analysis

Trend for the term “text mining” from Google Trends

| Cluster No. | Comments | Key Words |
|---|---|---|
| 1 | 1, 3, 4 | doctor, staff, friendly, helpful |
| 2 | 5, 6, 8 | treatment, results, time, schedule |
| 3 | 2, 7 | service, clinic, fast |

Text Mining Applications – Supervised
– Many typical predictive modeling or classification applications can be enhanced by incorporating textual data in addition to traditional input variables, for example:
  • churn propensity models that include customer center notes, website forms, emails, and Twitter messages
  • hospital admission prediction models that incorporate medical records notes as a new source of information
  • insurance fraud modeling using adjuster notes
  • sentiment categorization (see Sentiment Analysis below)
  • stylometry or forensic applications that identify the author of a particular writing sample

Sentiment Analysis
• The field of sentiment analysis deals with the categorization (or classification) of opinions expressed in textual documents.

In the figure, green represents positive tone and red represents negative tone; product features and model names are highlighted in blue and brown, respectively.

Structured + Text Data in Predictive Models
• Both types of data can be used together to build predictive models.

ROC Chart of Models With and Without Textual Comments

Discussion
• What sort of pattern discovery or predictive modeling application do you have in mind that can incorporate text data?

Typical Text Pre-processing Steps
• Given a raw text (in a corpus), we typically pre-process the text by applying the following tasks in order:
  1. Part-Of-Speech (POS) tagging – assign a POS to every word in each sentence of the text
  2. Named Entity Recognition (NER) – identify named entities (proper nouns and some common nouns that are relevant in the domain of the text)
  3. Shallow Parsing – identify the phrases (mostly verb phrases) that involve named entities
  4. Information Extraction (IE) – identify relations between phrases, and extract the relevant/significant “information” described in the text
Source: Andrew McCallum, UMass Amherst

1. Part-Of-Speech (POS) Tagging
• POS tagging is the process of assigning a POS or lexical class marker to each word in a sentence (and to all sentences in a corpus).
  Input:  the lead paint is unsafe
  Output: the/Det lead/N paint/N is/V unsafe/Adj

2. Named Entity Recognition (NER)
• NER processes a text and identifies the named entities in each sentence
  – e.g. “U.N. official Ekeus heads for Baghdad.”

3. Shallow Parsing
• Shallow (or partial) parsing identifies the (base) syntactic phrases in a sentence.
  [NP He] [V saw] [NP the big dog]
• After NEs are identified, dependency parsing is often applied to extract the syntactic/dependency relations between the NEs.
  [PER Bill Gates] founded [ORG Microsoft].
  Dependency relations: nsubj(founded, Bill Gates), dobj(founded, Microsoft)

4. Information Extraction (IE)
• Identify specific pieces of information (data) in an unstructured or semi-structured text
• Transform unstructured information in a corpus of texts or web pages into a structured database (or templates)
• Applied to various types of text, e.g.
  – Newspaper articles
  – Scientific articles
  – Web pages
  – etc.
Source: J. Choi, CSE842, MSU

Bridgestone Sports Co. said Friday it had set up a joint venture in Taiwan with a local concern and a Japanese trading house to produce golf clubs to be supplied to Japan. The joint venture, Bridgestone Sports Taiwan Co., capitalized at 20 million new Taiwan dollars, will start production in January 1990 with production of 20,000 iron and “metal wood” clubs a month.

template filling

TIE-UP-1
  Relationship: TIE-UP
  Entities: “Bridgestone Sports Co.”, “a local concern”, “a Japanese trading house”
  Joint Venture Company: “Bridgestone Sports Taiwan Co.”
  Activity: ACTIVITY-1
  Amount: NT$20000000

ACTIVITY-1
  Activity: PRODUCTION
  Company: “Bridgestone Sports Taiwan Co.”
  Product: “iron and ‘metal wood’ clubs”
  Start Date: DURING: January 1990
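The "Converting Text into Structured Data" slide lists preprocessing steps that turn raw text into a structured table. The sketch below covers a subset of them:
• cleaning (mark-up removal, dropping strings like "AHHHH")
• tokenization with case normalization
• stop-list filtering
• stemming
• n-grams

It ends by building a document-term matrix, one row per document and one column per term. It is a minimal illustration under stated assumptions. The stop-list is a hypothetical tiny one. `crude_stem` is a toy suffix stripper standing in for a real stemmer or lemmatizer. Spelling correction, sentence splitting and multi-word expressions are not handled.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-list (real stop-lists have hundreds of words).
STOP_WORDS = {"the", "a", "an", "and", "is", "was", "to", "of"}

def clean(text: str) -> str:
    """Strip mark-up tags and drop 'words' containing a letter repeated 4+ times in a row."""
    text = re.sub(r"<[^>]+>", " ", text)                      # e.g. "<p>" -> " "
    text = re.sub(r"\b\w*([A-Za-z])\1{3,}\w*\b", " ", text)  # e.g. "AHHHHHH" -> " "
    return text

def tokenize(text: str) -> list[str]:
    """Lower-case the text and keep alphabetic tokens only (drops punctuation and digits)."""
    return re.findall(r"[a-z]+", text.lower())

def crude_stem(token: str) -> str:
    """Toy suffix stripper (hypothetical) standing in for a real stemmer or lemmatizer."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) > len(suffix) + 2:
            return token[: -len(suffix)]
    return token

def preprocess(text: str) -> list[str]:
    """Clean -> tokenize -> filter stop words -> stem."""
    return [crude_stem(t) for t in tokenize(clean(text)) if t not in STOP_WORDS]

def ngrams(tokens: list[str], n: int) -> list[str]:
    """Contiguous n-token sequences, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def document_term_matrix(docs: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return (sorted vocabulary, one row of term counts per document)."""
    counts = [Counter(preprocess(doc)) for doc in docs]
    vocab = sorted(set().union(*counts))
    return vocab, [[c[term] for term in vocab] for c in counts]

vocab, rows = document_term_matrix([
    "<p>The staff was friendly and helpful!</p>",
    "Fast service, AHHHHHHH, friendly staff.",
])
print(vocab)  # ['fast', 'friendly', 'helpful', 'service', 'staff']
print(rows)   # [[0, 1, 1, 0, 1], [1, 1, 0, 1, 1]]
```

Once texts are in this row/column form, the same clustering or predictive techniques used for other structured data can be applied. In practice the raw counts are often re-weighted (for example with TF-IDF) before modeling.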
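Sentiment categorization, listed among the supervised applications and described on the "Sentiment Analysis" slide, assigns each document an opinion label. The sketch below is not the method behind the slide's figure. It is a simple lexicon-based classifier, swapped in only to show the idea of mapping text to a positive/negative/neutral label. The word lists are hypothetical, and the negation rule (flip the polarity of a sentiment word right after "not"/"never"/"no") is an assumption for illustration.

```python
import re

# Hypothetical mini-lexicons; real sentiment lexicons are far larger and often weighted.
POSITIVE = {"friendly", "helpful", "fast", "good", "great"}
NEGATIVE = {"slow", "rude", "unsafe", "bad", "expensive"}
NEGATORS = {"not", "never", "no"}

def sentiment(text: str) -> str:
    """Label text positive/negative/neutral from the sum of word polarities.
    A negator directly before a sentiment word flips that word's polarity."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = int(token in POSITIVE) - int(token in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("The staff was friendly and helpful."))  # positive
print(sentiment("Service was slow and not friendly."))   # negative
print(sentiment("The clinic opens at nine."))            # neutral
```

A supervised model trained on labeled opinions would usually replace the hand-made lexicon. The resulting label can then serve as one more input variable alongside structured data.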
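To make the POS tagging step concrete, here is a most-frequent-tag baseline. It learns, from a tagged corpus, the tag each word carries most often, then applies that tag everywhere. The four training sentences and the default tag `N` for unknown words are hypothetical assumptions chosen so that the slide's example comes out as shown. Because the baseline ignores context, it also tags "lead" as a noun in "they lead". Real taggers (for example HMM, CRF or neural models) use surrounding words to avoid this.

```python
from collections import Counter, defaultdict

# Hypothetical, hand-made training sentences in word/TAG format.
TRAINING = [
    "the/Det paint/N is/V red/Adj",
    "a/Det lead/N pipe/N is/V unsafe/Adj",
    "the/Det lead/N paint/N peeled/V",
    "they/Pro lead/V the/Det team/N",
]

def train(sentences):
    """Count word/tag co-occurrences and keep each word's most frequent tag."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        for pair in sentence.split():
            word, tag = pair.rsplit("/", 1)
            counts[word][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}

def tag(sentence, lexicon, default="N"):
    """Tag every token with its most frequent training tag; unknown words get `default`."""
    return " ".join(f"{w}/{lexicon.get(w, default)}" for w in sentence.split())

lexicon = train(TRAINING)
print(tag("the lead paint is unsafe", lexicon))
# the/Det lead/N paint/N is/V unsafe/Adj
```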
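For the NER step, the simplest possible approach is a gazetteer: a dictionary of known names. The sketch below finds entities in the slide's example sentences by greedy longest-match lookup of token sequences. The gazetteer entries and the ORG/PER/LOC labels are hypothetical assumptions, and the tokenizer regex is a rough one that keeps abbreviations like "U.N." intact. Practical NER systems are usually trained on annotated text rather than relying on lookup alone.

```python
import re

# Hypothetical gazetteer mapping token sequences to entity types.
GAZETTEER = {
    ("U.N.",): "ORG",
    ("Ekeus",): "PER",
    ("Baghdad",): "LOC",
    ("Bill", "Gates"): "PER",
    ("Microsoft",): "ORG",
}
MAX_LEN = max(len(key) for key in GAZETTEER)

def tokenize(text):
    """Abbreviations such as 'U.N.' first, then words, then single punctuation marks."""
    return re.findall(r"(?:[A-Z]\.)+|\w+|[^\w\s]", text)

def find_entities(text):
    """Greedy longest-match lookup of token sequences in the gazetteer."""
    tokens = tokenize(text)
    entities, i = [], 0
    while i < len(tokens):
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            label = GAZETTEER.get(tuple(tokens[i:i + n]))
            if label:
                entities.append((" ".join(tokens[i:i + n]), label))
                i += n
                break
        else:
            i += 1  # no entity starts at this token
    return entities

print(find_entities("U.N. official Ekeus heads for Baghdad."))
# [('U.N.', 'ORG'), ('Ekeus', 'PER'), ('Baghdad', 'LOC')]
print(find_entities("Bill Gates founded Microsoft."))
# [('Bill Gates', 'PER'), ('Microsoft', 'ORG')]
```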
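The Bridgestone template-filling example can be approximated with hand-written extraction patterns. The sketch below fills the TIE-UP-1 and ACTIVITY-1 slots from the example text. The regular expressions are hypothetical and tailored to this one passage, so they will not generalize. The input uses straight quotes instead of the typographic ones. The "Amount" slot converts "20 million" into NT$20000000, matching the capitalization stated in the text.

```python
import re

# The slide's example passage (straight quotes used for simplicity).
TEXT = (
    "Bridgestone Sports Co. said Friday it had set up a joint venture in Taiwan "
    "with a local concern and a Japanese trading house to produce golf clubs to be "
    "supplied to Japan. The joint venture, Bridgestone Sports Taiwan Co., capitalized "
    "at 20 million new Taiwan dollars, will start production in January 1990 with "
    'production of 20,000 iron and "metal wood" clubs a month.'
)

def first(pattern, text):
    """Return capture group 1 of the first match, or None if there is no match."""
    match = re.search(pattern, text)
    return match.group(1) if match else None

def fill_templates(text):
    """Fill TIE-UP and ACTIVITY templates using hypothetical, passage-specific patterns."""
    initiator = first(r"^(.+? Co\.) said", text)
    partners = first(r"joint venture in \w+ with (.+?) to produce", text)
    company = first(r"The joint venture, (.+? Co\.),", text)
    millions = first(r"capitalized at (\d+) million new Taiwan dollars", text)
    activity = {
        "Activity": "PRODUCTION",
        "Company": company,
        "Product": first(r"production of [\d,]+ (.+?) a month", text),
        "Start Date": "DURING: " + first(r"start production in ([A-Z][a-z]+ \d{4})", text),
    }
    tie_up = {
        "Relationship": "TIE-UP",
        "Entities": [initiator] + partners.split(" and "),
        "Joint Venture Company": company,
        "Activity": "ACTIVITY-1",
        "Amount": f"NT${int(millions) * 1_000_000}",
    }
    return tie_up, activity

tie_up, activity = fill_templates(TEXT)
print(tie_up["Amount"])       # NT$20000000
print(activity["Start Date"])  # DURING: January 1990
```

Real IE systems typically derive such slots from the outputs of the earlier steps (NER, shallow or dependency parsing) rather than from surface patterns on a single sentence.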