Gellerstedt_et_al_revisedBP

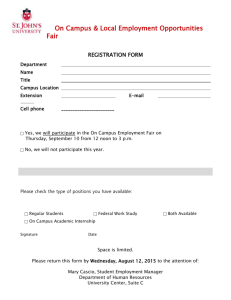

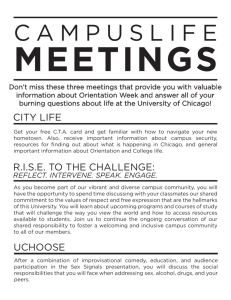
