Analysis of Variance (ANOVA)

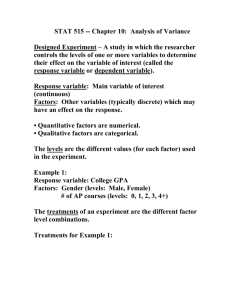


W&W, Chapter 10

Introduction

Last time we learned about the chi-square test for independence, which is useful for data measured at the nominal or ordinal level. If we have data measured at the interval level, we can compare two or more population groups in terms of their population means using a technique called analysis of variance, or ANOVA.

Completely randomized design

| | Population 1 | Population 2 | … | Population k |
|---|---|---|---|---|
| Mean | $\mu_1$ | $\mu_2$ | … | $\mu_k$ |
| Variance | $\sigma_1^2$ | $\sigma_2^2$ | … | $\sigma_k^2$ |

We want to know how the populations compare. Do they have the same mean? We can collect a random sample from each population, which gives us the following data:

| | Sample 1 | Sample 2 | … | Sample k |
|---|---|---|---|---|
| Mean | $M_1$ | $M_2$ | … | $M_k$ |
| Variance | $s_1^2$ | $s_2^2$ | … | $s_k^2$ |
| Cases | $n_1$ | $n_2$ | … | $n_k$ |

Suppose we want to compare three majors in a business school by the average annual income graduates earn two years after graduation. We collect the following data (in thousands of dollars) from random surveys:

| Accounting | Marketing | Finance |
|---|---|---|
| 27 | 23 | 48 |
| 22 | 36 | 35 |
| 33 | 27 | 46 |
| 25 | 44 | 36 |
| 38 | 39 | 28 |
| 29 | 32 | 29 |

Can the dean conclude that incomes differ among the majors?

$H_0: \mu_1 = \mu_2 = \mu_3$

$H_A$: not all of $\mu_1, \mu_2, \mu_3$ are equal

In this problem we must take into account:

1) The variation between samples, i.e. the differences in income by major. This is measured by the sum of squares for treatment (SST).
2) The variation within samples, i.e. the spread of incomes within a single major. This is measured by the sum of squares for error (SSE).

Recall that whenever we sample, there is always a chance of getting results that differ from the population. We account for this through item 2, the SSE.

F-Statistic

For this test, we will calculate an F statistic, which is used to compare variances.
$$F = \frac{SST/(k-1)}{SSE/(n-k)}$$

where SST = sum of squares for treatment, SSE = sum of squares for error, k = the number of populations, and n = the total sample size.

Intuitively, the F statistic is

$$F = \frac{\text{explained variance}}{\text{unexplained variance}}$$

Explained variance is the difference between majors. Unexplained variance is the variation within each group that arises from random sampling (see Figure 10-1, page 327).

Calculating SST

$$SST = \sum_{i=1}^{k} n_i (M_i - \bar{\bar{X}})^2$$

where $\bar{\bar{X}}$ is the grand mean, i.e. the sum of all values for all groups divided by the total sample size (when all samples have the same size, this equals $\sum M_i / k$), $M_i$ is the mean of sample $i$, $n_i$ is its size, and $k$ is the number of populations.

By major:

| Major | $M_i$ | $n_i$ |
|---|---|---|
| Accounting | 29 | 6 |
| Marketing | 33.5 | 6 |
| Finance | 37 | 6 |

$$\bar{\bar{X}} = \frac{29 + 33.5 + 37}{3} = 33.17$$

$$SST = 6(29 - 33.17)^2 + 6(33.5 - 33.17)^2 + 6(37 - 33.17)^2 = 193$$

Note that when $M_1 = M_2 = M_3$, SST = 0, which would support the null hypothesis. In this example the samples are of equal size, but the analysis can also be run with samples of different sizes.

Calculating SSE

$$SSE = \sum_{i=1}^{k} \sum_{t=1}^{n_i} (X_{it} - M_i)^2$$

In other words, for each sample we add up the squared deviations of its observations from the sample mean, then add these totals across samples (equivalently, $SSE = \sum_i (n_i - 1)s_i^2$).

$$SSE = \sum_t (X_{1t} - M_1)^2 + \sum_t (X_{2t} - M_2)^2 + \sum_t (X_{3t} - M_3)^2$$

$$SSE = [(27-29)^2 + (22-29)^2 + \dots + (29-29)^2] + [(23-33.5)^2 + (36-33.5)^2 + \dots + (32-33.5)^2] + [(48-37)^2 + (35-37)^2 + \dots + (29-37)^2] = 819.5$$

Statistical Output

When you estimate this in a computer program, the results are typically presented in a table as follows:

| Source of variation | df | Sum of squares | Mean squares | F-ratio |
|---|---|---|---|---|
| Treatment | $k-1$ | SST | $MST = SST/(k-1)$ | $F = MST/MSE$ |
| Error | $n-k$ | SSE | $MSE = SSE/(n-k)$ | |
| Total | $n-1$ | $SS = SST + SSE$ | | |

Calculating F for our example

$$F = \frac{193/2}{819.5/15} = 1.77$$

Our calculated F is compared to the critical value $F_{\alpha;\,k-1,\,n-k}$ from the F distribution, with $k-1$ numerator degrees of freedom and $n-k$ denominator degrees of freedom (here 2 and 15).

The Results

For 95% confidence ($\alpha = .05$), our critical F is 3.68 (averaging the table values at 14 and 16 denominator df). Since 1.77 < 3.68, we cannot reject the null hypothesis.
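The SST, SSE and F calculations above can be checked with a short script. This is a minimal sketch using only the Python standard library; the names `incomes`, `one_way_anova` and `F_CRIT` are hypothetical, chosen for illustration. The critical value 3.68 is copied from the slides rather than computed, since the standard library has no F distribution.

```python
from statistics import mean

# Annual income two years after graduation, in $1000s (data from the slides)
incomes = {
    "Accounting": [27, 22, 33, 25, 38, 29],
    "Marketing": [23, 36, 27, 44, 39, 32],
    "Finance": [48, 35, 46, 36, 28, 29],
}

def one_way_anova(groups):
    """Return (SST, SSE, F) for a completely randomized design (hypothetical helper)."""
    samples = list(groups.values())
    k = len(samples)                       # number of populations
    n = sum(len(s) for s in samples)       # total sample size
    # Grand mean of all pooled observations (also correct for unequal group sizes)
    grand = sum(sum(s) for s in samples) / n
    # SST: between-group variation, each group weighted by its size n_i
    sst = sum(len(s) * (mean(s) - grand) ** 2 for s in samples)
    # SSE: squared deviations of every observation from its own group mean
    sse = sum((x - mean(s)) ** 2 for s in samples for x in s)
    mst = sst / (k - 1)                    # mean square for treatment
    mse = sse / (n - k)                    # mean square for error
    return sst, sse, mst / mse

sst, sse, f_stat = one_way_anova(incomes)
print(f"SST = {sst:.1f}, SSE = {sse:.1f}, F = {f_stat:.2f}")

# Critical value for alpha = .05 with (2, 15) df, taken from the slides (assumption: not computed here)
F_CRIT = 3.68
print("reject H0" if f_stat > F_CRIT else "fail to reject H0")
```

If SciPy is available, `scipy.stats.f_oneway(*incomes.values())` should report the same F statistic together with a p-value, and `scipy.stats.f.ppf(0.95, 2, 15)` gives the critical value directly; the sketch avoids them to stay dependency-free.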
The dean is puzzled by these results because, just by eyeballing the data, it looks like finance majors make more money. Many other factors may determine salary level, such as GPA. The dean decides to collect new data, selecting one student at random from each major at each of the following average grade levels.

New data

| Average grade | Accounting | Marketing | Finance | Block mean |
|---|---|---|---|---|
| A+ | 41 | 45 | 51 | $M(b)_1 = 45.67$ |
| A | 36 | 38 | 45 | $M(b)_2 = 39.67$ |
| B+ | 27 | 33 | 31 | $M(b)_3 = 30.33$ |
| B | 32 | 29 | 35 | $M(b)_4 = 32$ |
| C+ | 26 | 31 | 32 | $M(b)_5 = 29.67$ |
| C | 23 | 25 | 27 | $M(b)_6 = 25$ |
| Treatment mean | $M(t)_1 = 30.83$ | $M(t)_2 = 33.5$ | $M(t)_3 = 36.83$ | $\bar{\bar{X}} = 33.72$ |

Randomized Block Design

Now the data in the three samples are not independent; they are matched by GPA level. Just as before, matched samples are superior to unmatched samples because they provide more information. Here we have added a factor (grade level) that may account for some of the SSE.

Two-way ANOVA

Now

$$SS(\text{total}) = SST + SSB + SSE$$

where SSB is the variability among blocks, and a block is a matched group of observations, one from each of the populations. We can calculate a two-way ANOVA to test our null hypothesis. We will talk about this next week.
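The decomposition SS(total) = SST + SSB + SSE for the matched-by-grade data can be sketched as follows. This is an illustration only, assuming one observation per major in each grade block and assuming the columns are ordered Accounting, Marketing, Finance as in the slide's table; `blocks` and `block_decomposition` are hypothetical names. SSE is obtained as the remainder after removing the treatment and block sums of squares.

```python
# Income (in $1000s) for one randomly selected student per major at each
# grade level; each row is a block matched on average grade (data from the slides).
# Assumed column order: [Accounting, Marketing, Finance]
blocks = {
    "A+": [41, 45, 51],
    "A":  [36, 38, 45],
    "B+": [27, 33, 31],
    "B":  [32, 29, 35],
    "C+": [26, 31, 32],
    "C":  [23, 25, 27],
}

def block_decomposition(rows):
    """Split SS(total) into SST + SSB + SSE for a randomized block design (hypothetical helper)."""
    data = list(rows.values())
    b = len(data)        # number of blocks (grade levels)
    k = len(data[0])     # number of treatments (majors)
    grand = sum(sum(r) for r in data) / (b * k)
    treatment_means = [sum(r[i] for r in data) / b for i in range(k)]
    block_means = [sum(r) / k for r in data]
    ss_total = sum((x - grand) ** 2 for r in data for x in r)
    # Each treatment mean is based on b observations, each block mean on k
    sst = b * sum((m - grand) ** 2 for m in treatment_means)
    ssb = k * sum((m - grand) ** 2 for m in block_means)
    sse = ss_total - sst - ssb   # variation left after treatments and blocks
    return ss_total, sst, ssb, sse

ss_total, sst, ssb, sse = block_decomposition(blocks)
print(f"SS(total) = {ss_total:.2f}")
print(f"SST = {sst:.2f}, SSB = {ssb:.2f}, SSE = {sse:.2f}")
```

On the slide data this gives SS(total) ≈ 1015.61, SST ≈ 108.44, SSB ≈ 854.94 and SSE ≈ 52.22, so the grade blocks absorb most of the variation that a one-way analysis (SSE = SS(total) − SST ≈ 907.17) would have counted as error.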