Recovery-Oriented Computing

Scalar Processor Design

• Phenomenal advances in its brief lifetime of 30+ years: roughly doubling every 18 months (×2/18 months) over 30 years, leading to multi-GFLOPS processors, inspiring and facilitating major innovations in:

– Embedded microcontrollers (300 million sold in 2000)

– Personal computers (150 million sold in 2000)

– Advanced workstations

– Handheld and mobile devices

– Application and file servers (4 million sold in 2000)

– Web servers for the Internet

– Low-cost supercomputers

– Large-scale computing clusters

– Well over one billion microprocessors shipped per year

• The amazing decades of the evolution of microprocessors:

| | 1970-1980 | 1980-1990 | 1990-2000 | 2000-2010 |
|---|---|---|---|---|
| Transistor count | 2K-100K | 100K-1M | 1M-100M | 100M-2B |
| Clock frequency | 0.1-3 MHz | 3-30 MHz | 30 MHz-1 GHz | 1 GHz-15 GHz |
| Instructions/cycle | 0.1 IPC | 0.1-0.9 IPC | 0.9-1.9 IPC | 1.9-2.9 IPC |

Scalar Processor Design

Past (Milestones):

– First electronic computer, ENIAC, in 1946: 18,000 vacuum tubes, 3,000 cubic feet, twenty 2-foot 10-digit registers, 5 KIPS (thousand additions per second);

– First microprocessor (a CPU on a single IC chip), the Intel 4004, in 1971: 2,300 transistors, 60 KIPS, $200;

– Virtual elimination of assembly language programming reduced the need for object-code compatibility;

– The creation of standardized, vendor-independent operating systems, such as UNIX and its clone, Linux, lowered the cost and risk of bringing out a new architecture;

– RISC instruction set architecture paved the way for drastic design innovations that focused on two critical performance techniques: instruction-level parallelism and the use of caches.

Slide 2

Scalar Processor Design

Present (State of the art):

– Microprocessors approaching/surpassing 10 GFLOPS;

– A high-end microprocessor (<$10K) today is easily more powerful than a supercomputer (>$10 million) of ten years ago;

– While technology advancement contributes a sustained annual growth of 35%, innovative computer design accounts for another 25% annual growth, yielding a factor of 15 in performance gains (Fig. 1.1);

– Three different computing markets (Fig. 1.3):

» Desktop Computing – driven by price-performance (from a few hundred dollars to over $10K);

» Servers – availability driven (distinguished from reliability), providing sustained high performance

» Embedded Computers – fastest growing portion of the computer market, real-time performance driven, and need to minimize memory and power, as well as ASIC

Slide 3

Scalar Processor Design

Present (State of the art):

– The Task of the Computer Designer:

» Instruction Set Architecture (Traditional view of what Computer Architecture is), the boundary between software and hardware;

» Organization, the high-level aspects of a computer’s design, such as the memory system, the bus structure, and the internal design of the CPU, based on a given instruction set architecture;

» Hardware, the specifics of a machine, including the detailed logic design and the packaging technology of the machine.

Future (Technology Trends):

– A truly successful instruction set architecture (ISA) should last for decades. However, it takes a computer architect’s acute observation and knowledge of rapidly changing technology for the ISA to survive and cope with such changes:

Slide 4

Scalar Processor Design

» IC logic technology: transistor count on a chip grows at a 55% annual rate (35% density growth rate + 10-20% die size growth) while device speed scales more slowly;

» Semiconductor DRAM: density grows at 60% annually while cycle time improves very slowly (decreasing by one-third in ten years). Bandwidth per chip increases twice as fast as latency decreases;

» Magnetic disk technology: density has increased at a 100% annual rate since 1990 while access time improves by about one-third every ten years; and

» Network technology: both latency and bandwidth have been improving, with more focus on bandwidth of late; the increasing importance of networking has led to faster improvement in performance than before—Internet bandwidth doubles every year in the U.S.

» Scaling of transistor performance: while transistor density increases quadratically with a linear decrease in feature size, transistor performance increases only roughly linearly with the decrease in feature size: a challenge and an opportunity for the computer designer!

» Wires and power in IC: propagation delay and power needs?
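The annual growth rates quoted above are easier to compare as doubling times. The following is a minimal sketch, assuming simple compound growth at a constant rate; the function names and the dictionary of rates are illustrative, and only the percentages come from the slide.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years needed to double at a compound annual growth rate (0.55 means 55%)."""
    return math.log(2) / math.log(1 + annual_growth)

def growth_factor(annual_growth: float, years: float) -> float:
    """Total multiplication after `years` of compound growth."""
    return (1 + annual_growth) ** years

# Rates taken from the technology trends listed on the slide.
rates = {
    "IC transistor count": 0.55,
    "DRAM density": 0.60,
    "Magnetic disk density": 1.00,
}

for name, rate in rates.items():
    print(f"{name}: doubles every {doubling_time_years(rate):.2f} years, "
          f"x{growth_factor(rate, 10):.0f} over 10 years")
```

Under this assumption, a 100% annual rate doubles every year, while a 55% rate doubles in a little over a year and a half.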

Slide 5

Instruction Set Architecture

• ISA should reflect application characteristics:

– Desktop computing is compute-intensive, thus focusing on features favoring integer and FP ops;

– Server computing is data-intensive, focusing on integers and character strings (yet FP ops are still standard in servers);

– Embedded computing is time-sensitive and memory- and power-conscious, thus focusing on code density, real-time behavior, and media data streams.

• ISA has been defined as a contract between the software and hardware, or between the program and the machine, thus facilitating independent development of programs and machines.

Slide 6

Instruction Set Architecture

• Taxonomy of ISA:

– Stack: both operands are implicit on the top of the stack, a data structure in which items are accessed in a last-in, first-out fashion.

– Accumulator: one operand is implicit in the accumulator, a special-purpose register.

– General Purpose Register: all operands are explicit in specified registers or memory locations. Depending on where operands are specified and stored, there are three different ISA groups:

» Register-Memory: one operand in a register and one in memory. Examples: IBM 360/370, Intel 80x86 family, Motorola 68000;

» Memory-Memory: both operands are in memory. Example: VAX.

» Register-Register (load & store): all operands, except for those in load and store instructions, are in registers. Examples: SPARC (Sun Microsystems), MIPS, Precision Architecture (HP), PowerPC (IBM), Alpha (DEC).

Slide 7

Instruction Set Architecture

Taxonomy of ISA: Examples

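Code sequences for C = A + B:

| (a) Stack | (b) Accumulator | (c) Register-Memory | (d) Reg-Reg/Load-Store | (e) Memory-Memory |
|---|---|---|---|---|
| Push A | Load A | Load R1,A | Load R1,A | Add C,A,B |
| Push B | Add B | Add R3,R1,B | Load R2,B | |
| Add | Store C | Store R3,C | Add R3,R1,R2 | |
| Pop C | | | Store R3,C | |

[Figure: block diagrams of the five organizations, each showing the ALU and memory, plus the stack with its top of stack (TOS), the accumulator, or the register set where present.]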
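To make the difference between implicit and explicit operands concrete, the stack and load-store sequences for C = A + B can be traced with a toy interpreter. This is a minimal sketch: the tuple encoding of instructions and the function names `run_stack` and `run_load_store` are assumptions made for illustration, not part of any real ISA.

```python
def run_stack(memory: dict) -> dict:
    """Stack ISA: Add takes both operands implicitly from the top of the stack."""
    program = [("push", "A"), ("push", "B"), ("add",), ("pop", "C")]
    stack = []
    for instr in program:
        op = instr[0]
        if op == "push":                      # memory -> top of stack
            stack.append(memory[instr[1]])
        elif op == "add":                     # operands are implicit
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right)
        elif op == "pop":                     # top of stack -> memory
            memory[instr[1]] = stack.pop()
    return memory

def run_load_store(memory: dict) -> dict:
    """Load-store ISA: only Load and Store touch memory; Add names registers."""
    program = [
        ("load", "R1", "A"),
        ("load", "R2", "B"),
        ("add", "R3", "R1", "R2"),
        ("store", "R3", "C"),
    ]
    regs = {}
    for instr in program:
        op = instr[0]
        if op == "load":                      # memory -> register
            regs[instr[1]] = memory[instr[2]]
        elif op == "add":                     # all operands are explicit registers
            regs[instr[1]] = regs[instr[2]] + regs[instr[3]]
        elif op == "store":                   # register -> memory
            memory[instr[2]] = regs[instr[1]]
    return memory

print(run_stack({"A": 2, "B": 3})["C"])
print(run_load_store({"A": 2, "B": 3})["C"])
```

Both runs store the same sum in C; what differs is the instruction count and where each instruction finds its operands.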

Slide 8

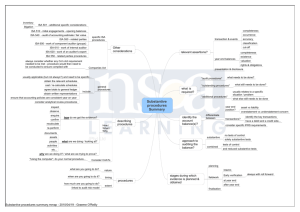

Dynamic and Static Interface

• Inherent in each ISA’s definition is an associated definition of an interface, called the dynamic and static interface (DSI), that separates what is done statically at compile time from what is done dynamically at run time.

– A key issue in the design of an ISA is the placement of the DSI:

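[Figure: the DSI sits at the architecture level, between the program (software) above and the machine (hardware) below. Above the DSI: compiler complexity, exposed to software, “static”. Below the DSI: hardware complexity, hidden in hardware, “dynamic”. Possible placements between the HLL program and the hardware are marked DSI-1, DSI-2, and DSI-3, with DEL, ~CISC, ~VLIW, and ~RISC as example ISA styles.]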

Slide 9

Processor Performance

• Performance Equation:

– 1/Performance = Time/Program

= (Instructions/Program) × (Cycles/Instruction) × (Time/Cycle)

= IC × CPI × CCT

where IC = instruction count, CPI = cycles per instruction, and CCT = clock cycle time.

• Performance Optimization: reducing one or more of the three factors (IC, CPI, CCT)

– IC: ISA dependent (CISC vs RISC); compiler dependent; dynamic elimination of redundant computation (computation reuse);

– CPI: ISA and instruction complexity dependent; microarchitecture dependent (pipelining and speculative execution of instructions);

– CCT: microarchitecture dependent (pipelining, pipeline depth, and clock frequency); changes that shorten CCT can also affect CPI.
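The trade-off between the three factors can be checked numerically. The following is a minimal sketch of the performance equation; the instruction count, CPI, and cycle-time values are hypothetical and chosen only to show a deeper pipeline that shortens the cycle while raising CPI.

```python
def execution_time(ic: int, cpi: float, cct_ns: float) -> float:
    """Time/Program in seconds = IC * CPI * CCT (CCT given in nanoseconds)."""
    return ic * cpi * cct_ns * 1e-9

# Hypothetical baseline design: 1 million instructions, CPI 2.0, 1.0 ns cycle.
baseline = execution_time(ic=1_000_000, cpi=2.0, cct_ns=1.0)

# Hypothetical deeper pipeline: shorter cycle (0.7 ns) but more stalls (CPI 2.4).
deeper = execution_time(ic=1_000_000, cpi=2.4, cct_ns=0.7)

speedup = baseline / deeper
print(f"baseline: {baseline * 1e3:.3f} ms")
print(f"deeper pipeline: {deeper * 1e3:.3f} ms")
print(f"speedup: {speedup:.2f}x")
```

With these assumed numbers the shorter cycle outweighs the higher CPI, but a larger CPI penalty would reverse the result, which is why the three factors cannot be optimized in isolation.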

Slide 10

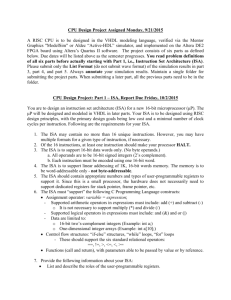

Processor Performance

• Performance Evaluation: functional (ISA) and performance (CPI)

– Trace-driven simulation

[Figure: trace generation by physical execution with software instrumentation, physical execution with hardware instrumentation, or a functional simulator; the trace goes to trace storage and then drives a cycle-based performance simulator (trace-driven timing simulation).]

– Execution-driven simulation

[Figure: a functional simulator (instruction interpretation) performs functional simulation and passes the execution trace to a cycle-based performance simulator, which returns checkpoint and control information (execution-driven timing simulation).]
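The trace-driven flow (trace generation, trace storage, timing simulation) can be sketched in a few lines. This is a toy illustration only: the per-class cycle costs in `CYCLES` are hypothetical, and `functional_simulator` merely stands in for a real instruction interpreter by emitting one opcode-class record per executed instruction.

```python
from collections import Counter

# Hypothetical cycle cost per instruction class; a real timing model is far richer.
CYCLES = {"alu": 1, "load": 2, "store": 1, "branch": 3}

def functional_simulator(program):
    """Trace generation: emit one record per executed instruction."""
    for opcode_class in program:
        yield opcode_class

def timing_simulator(trace):
    """Trace-driven timing simulation: charge each trace record its cycle cost."""
    counts = Counter(trace)
    instructions = sum(counts.values())
    cycles = sum(CYCLES[op] * n for op, n in counts.items())
    return instructions, cycles, cycles / instructions

# A small loop body executed four times.
program = ["load", "load", "alu", "store", "branch"] * 4

trace = list(functional_simulator(program))      # trace storage
instructions, cycles, cpi = timing_simulator(trace)
print(f"IC = {instructions}, cycles = {cycles}, CPI = {cpi:.2f}")
```

Because the trace is stored before timing begins, the timing model cannot influence which instructions execute; execution-driven simulation couples the two simulators to remove that limitation.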

Slide 11

Processor Performance

• Performance Evaluation: Amdahl’s Law

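– Amdahl’s Law, where f is the fraction of execution that benefits from the enhancement:

$$\text{Speedup} = \frac{1}{(1-f) + \dfrac{f}{\text{Speedup}_{\text{enhance}}}}$$

– Idealized pipeline execution profile

[Figure: execution profile with N pipeline stages busy for a fraction g of the time and 1 stage busy for the remaining fraction 1-g.]

$$\text{Speedup} = \frac{1}{(1-g) + \dfrac{g}{N}}$$

– Realistic pipeline execution profile

[Figure: execution profile between 1 and N busy stages, with dips marked as pipeline stalls.]

– Refined speedup equation, where g_i is the fraction of time that i stages are busy:

$$\text{Speedup} = \frac{1}{\dfrac{g_1}{1} + \dfrac{g_2}{2} + \cdots + \dfrac{g_N}{N}}$$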
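The speedup formulas on this slide are Amdahl's Law, 1/((1-f) + f/Speedup_enhance), its pipeline form 1/((1-g) + g/N), and the refined form 1/(g_1/1 + g_2/2 + ... + g_N/N). The following is a minimal sketch that evaluates them side by side; the function names and sample fractions are illustrative assumptions.

```python
def amdahl_speedup(f: float, speedup_enhanced: float) -> float:
    """Overall speedup when a fraction f of execution is sped up by speedup_enhanced."""
    return 1.0 / ((1.0 - f) + f / speedup_enhanced)

def pipeline_speedup(g: float, n: int) -> float:
    """Idealized profile: N stages busy for fraction g, one stage busy otherwise."""
    return 1.0 / ((1.0 - g) + g / n)

def refined_speedup(fractions: list) -> float:
    """Refined profile: fractions[i-1] is g_i, the fraction of time i stages are busy.

    The fractions are expected to sum to 1.
    """
    return 1.0 / sum(g_i / i for i, g_i in enumerate(fractions, start=1))

# Hypothetical 4-stage pipeline that is full 80% of the time.
print(pipeline_speedup(g=0.8, n=4))

# The same profile written in refined form: g_1 = 0.2, g_4 = 0.8.
print(refined_speedup([0.2, 0.0, 0.0, 0.8]))

# A more realistic profile where stalls leave the pipeline partly filled.
print(refined_speedup([0.1, 0.1, 0.2, 0.6]))
```

The idealized form is the special case of the refined form in which only g_1 and g_N are nonzero, so the first two calls agree.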

Slide 12
