ChenLiMediators_1


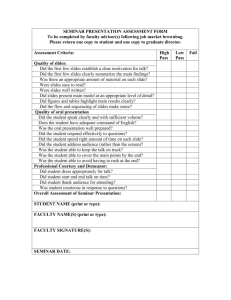

Searching and Integrating Information on the Web
Professor Chen Li
Department of Computer Science
University of California, Irvine

About these seminars
• Speaker: Chen Li (chenli AT ics.uci.edu)
• http://www.ics.uci.edu/~chenli/tsinghua04/
• Topics: recent research on (Web) data management
  – Search
  – Data extraction
  – Integration
  – Information quality
• Format: 4 seminars, 3 hours each
• Questions/comments are always welcome!

Seminar 1

Today’s topics
• Topic 1: Web Search
  – Earlier search engines
  – PageRank in Google
  – HITS algorithm
• Topic 2: Web-data extraction

Topic 1: Web Search
• How did earlier search engines work?
• How does PageRank, used by Google, work?
• HITS algorithm
• Readings:
  – Lawrence and Giles, Searching the World Wide Web, Science, 1998.
  – Brin and Page, The Anatomy of a Large-Scale Hypertextual Web Search Engine, WWW7/Computer Networks 30(1-7): 107-117, 1998.
  – Jon M. Kleinberg, Authoritative Sources in a Hyperlinked Environment, Journal of the ACM 46(5): 604-632, 1999.

Earlier Search Engines
• Hotbot, Yahoo, Alta Vista, Northern Light, Excite, Infoseek, Lycos …
• Main technique: “inverted index”
  – Conceptually: use a matrix to represent how many times a term appears in each page
  – # of columns = # of pages (huge!)
  – # of rows = # of terms (also huge!)

                Page1  Page2  Page3  Page4  …
    ‘car’         1      0      1      0
    ‘toyota’      0      2      0      1
    ‘honda’       2      1      0      0

  For example, page 2 mentions ‘toyota’ twice.

Search by Keywords
• If the query has one keyword, just return all the pages that contain the word
  – E.g., “toyota” → all pages containing “toyota”: page2, page4, …
  – There could be very many pages!
  – Solution: return the pages in which the word occurs most frequently first

Multi-keyword Search
• For each keyword W, find the set of all pages mentioning W
• Intersect all the sets of pages
  – Assuming an “AND” operation on those keywords
• Example:
  – A search for “toyota honda” will return all the pages that mention both “toyota” and “honda”

Observations
• The “matrix” can be huge:
  – The Web now has 4.2 billion pages!
  – There are many “terms” on the Web. Many of them are typos.
  – It’s not easy to do the computation efficiently:
    given a word, find all the pages …
    intersect many sets of pages …
• For these reasons, search engines never store this “matrix” so naively.

Problems
• Spamming:
  – People try to get their pages ranked at the very top of a word search (e.g., “toyota”) by repeating the word many, many times
  – Yet these pages may be unimportant compared to www.toyota.com, even if the latter mentions “toyota” only once (or not at all).
• Search engines can be easily “fooled”

Closer look at the problems
• Lacking the concept of “importance” of each page on each topic
  – E.g.: My homepage is not as “important” as Yahoo’s main page.
• A link from Yahoo is more important than a link from a personal homepage
• But how to capture the importance of a page?
  – A guess: # of hits? → where to get that info?
  – # of inlinks to a page → Google’s main idea.
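
The keyword search described in the preceding slides can be sketched as follows. This is a minimal illustration, not how any real engine is implemented: the page texts are made up to mirror the term/page matrix from the “Earlier Search Engines” slide, and the helper names `build_index`, `search_one`, and `search_all` are hypothetical. Only the non-zero cells of the matrix are stored.

```python
from collections import Counter, defaultdict

# Hypothetical toy collection, chosen to reproduce the matrix on the slide.
PAGES = {
    "page1": "car honda honda",
    "page2": "toyota toyota honda",
    "page3": "car",
    "page4": "toyota",
}

def build_index(pages):
    """Map each term to {page: number of occurrences} (non-zero cells only)."""
    index = defaultdict(dict)
    for page, text in pages.items():
        for term, count in Counter(text.lower().split()).items():
            index[term][page] = count
    return index

def search_one(index, word):
    """Single keyword: pages containing the word, most frequent first."""
    postings = index.get(word, {})
    return sorted(postings, key=lambda p: (-postings[p], p))

def search_all(index, words):
    """Multi-keyword AND query: intersect the page sets of all keywords."""
    page_sets = [set(index.get(w, {})) for w in words]
    return sorted(set.intersection(*page_sets)) if page_sets else []

index = build_index(PAGES)
print(search_one(index, "toyota"))             # ['page2', 'page4']
print(search_all(index, ["toyota", "honda"]))  # ['page2']
```

Ranking by raw frequency is exactly what the “Problems” slide warns about: a page that repeats “toyota” many times would be sorted first by `search_one`, regardless of its importance.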
Google’s History
• Started at the Stanford DB Group as a research project (Brin and Page)
• Used to be at: google.stanford.edu
• Very soon many people started liking it
• Incorporated in 1998: www.google.com
• The “largest” search engine now
• Started other businesses: froogle, gmail, …

PageRank
• Intuition:
  – The importance of each page should be decided by what other pages “say” about this page
  – One naïve implementation: count the # of pages pointing to each page (i.e., # of inlinks)
• Problem:
  – We can easily fool this technique by generating many dummy pages that point to our class page

Details of PageRank
• At the beginning, each page has weight 1
• In each iteration, each page propagates its current weight W to all its N forward neighbors. Each of them gets weight W/N
• Meanwhile, a page accumulates the weights from its backward neighbors
• Iterate until all weights converge. Usually 6-7 iterations are good enough.
• The final weight of each page is its importance.
• NOTICE: Google currently uses many other techniques/heuristics to do search. Here we just cover some of the initial ideas.

Example: MiniWeb
• Our “MiniWeb” has only three web sites: Netscape (Ne), Amazon (Am), and Microsoft (MS).
• Their weights are represented as a vector

[Figure: link graph over Ne, MS, and Am]

$$
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{new}}
=
\begin{bmatrix} 1/2 & 0 & 1/2 \\ 0 & 0 & 1/2 \\ 1/2 & 1 & 0 \end{bmatrix}
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{old}}
$$

For instance, in each iteration, half of the weight of Am goes to Ne, and half goes to MS.
Materials by courtesy of Jeff Ullman

Iterative computation

[Figure: link graph over Ne, MS, and Am]

$$
\begin{array}{c|ccccccc}
n & 1 & 1 & 5/4 & 9/8 & 5/4 & \cdots & 6/5 \\
m & 1 & 1/2 & 3/4 & 1/2 & 11/16 & \cdots & 3/5 \\
a & 1 & 3/2 & 1 & 11/8 & 17/16 & \cdots & 6/5
\end{array}
$$

Final result:
• Netscape and Amazon have the same importance, and twice the importance of Microsoft.
• Does it capture the intuition? Yes.

Observations
• We cannot get absolute weights:
  – We can only know (and we are only interested in) the relative weights of the pages
• The matrix is stochastic (sum of each column is 1).
  So the iterations converge, and they compute the principal eigenvector of the matrix, i.e., a solution of the following equation:

$$
\begin{bmatrix} n \\ m \\ a \end{bmatrix}
=
\begin{bmatrix} 1/2 & 0 & 1/2 \\ 0 & 0 & 1/2 \\ 1/2 & 1 & 0 \end{bmatrix}
\begin{bmatrix} n \\ m \\ a \end{bmatrix}
$$

Problem 1 of algorithm: dead ends!

[Figure: link graph over Ne, MS, and Am]

$$
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{new}}
=
\begin{bmatrix} 1/2 & 0 & 1/2 \\ 0 & 0 & 1/2 \\ 1/2 & 0 & 0 \end{bmatrix}
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{old}}
$$

• MS does not point to anybody
• Result: weights of the Web “leak out”

$$
\begin{array}{c|ccccccc}
n & 1 & 1 & 3/4 & 5/8 & 1/2 & \cdots & 0 \\
m & 1 & 1/2 & 1/4 & 1/4 & 3/16 & \cdots & 0 \\
a & 1 & 1/2 & 1/2 & 3/8 & 5/16 & \cdots & 0
\end{array}
$$

Problem 2 of algorithm: spider traps

[Figure: link graph over Ne, MS, and Am]

$$
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{new}}
=
\begin{bmatrix} 1/2 & 0 & 1/2 \\ 0 & 1 & 1/2 \\ 1/2 & 0 & 0 \end{bmatrix}
\begin{bmatrix} n \\ m \\ a \end{bmatrix}_{\text{old}}
$$

• MS only points to itself
• Result: all weights go to MS!

$$
\begin{array}{c|ccccccc}
n & 1 & 1 & 3/4 & 5/8 & 1/2 & \cdots & 0 \\
m & 1 & 3/2 & 7/4 & 2 & 35/16 & \cdots & 3 \\
a & 1 & 1/2 & 1/2 & 3/8 & 5/16 & \cdots & 0
\end{array}
$$

Google’s solution: “tax each page”
• Like people paying taxes, each page pays some weight into a public pool, which will be distributed to all pages.
• Example: assume a 20% tax rate in the “spider trap” example.

$$
\begin{bmatrix} n \\ m \\ a \end{bmatrix}
=
0.8 \cdot
\begin{bmatrix} 1/2 & 0 & 1/2 \\ 0 & 1 & 1/2 \\ 1/2 & 0 & 0 \end{bmatrix}
\begin{bmatrix} n \\ m \\ a \end{bmatrix}
+
\begin{bmatrix} 0.2 \\ 0.2 \\ 0.2 \end{bmatrix}
\qquad\Longrightarrow\qquad
\begin{bmatrix} n \\ m \\ a \end{bmatrix}
=
\begin{bmatrix} 7/11 \\ 21/11 \\ 5/11 \end{bmatrix}
$$

The War of Search Engines
• More companies are realizing the importance of search engines
• More competitors in the market: Microsoft, Yahoo!, etc.

• Next: the HITS algorithm

Hubs and Authorities
• Motivation: find web pages related to a topic
  – E.g.: “find all web sites about automobiles”
• “Authority”: a page that offers info about a topic
  – E.g.: DBLP is a page about papers
  – E.g.: google.com, aj.com, teoma.com, lycos.com
• “Hub”: a page that doesn’t provide much info, but tells us where to find pages about a topic
  – E.g.: www.searchenginewatch.com is a hub of search engines
  – http://www.ics.uci.edu/~ics214a/ points to many bibliosearch engines

Two values of a page
• Each page has a hub value and an authority value.
  – In PageRank, each page has one value: “weight”
• Two vectors:
  – H: hub values
  – A: authority values

$$
H = \begin{bmatrix} h_1 \\ h_2 \\ \vdots \end{bmatrix},
\qquad
A = \begin{bmatrix} a_1 \\ a_2 \\ \vdots \end{bmatrix}
$$

HITS algorithm: find hubs and authorities
• First step: find pages related to the topic (e.g., “automobile”), and construct the corresponding “focused subgraph”
  – Find the pages S containing the keyword (“automobile”)
  – Find all pages these S pages point to, i.e., their forward neighbors
  – Find all pages that point to S pages, i.e., their backward neighbors
  – Compute the subgraph of these pages

[Figure: root set and focused subgraph]

Step 2: computing H and A
• Initially: set hub and authority values to 1
• In each iteration, the hub value of a page is the total authority value of its forward neighbors (after normalization)
• The authority value of each page is the total hub value of its backward neighbors (after normalization)
• Iterate until convergence

[Figure: hubs and authorities]

Example: MiniWeb

[Figure: link graph over Ne, MS, and Am]

$$
M = \begin{bmatrix} 1 & 1 & 1 \\ 0 & 0 & 1 \\ 1 & 1 & 0 \end{bmatrix},
\qquad
A = \begin{bmatrix} a_n \\ a_m \\ a_a \end{bmatrix},
\qquad
H = \begin{bmatrix} h_n \\ h_m \\ h_a \end{bmatrix}
$$

$$
H_{\text{new}} \propto M \, A_{\text{old}},
\qquad
A_{\text{new}} \propto M^{T} H_{\text{old}}
$$

Normalization! (Each equation holds up to a normalization factor.)

Therefore:

$$
H_{\text{new}} \propto M M^{T} H_{\text{old}},
\qquad
A_{\text{new}} \propto M^{T} M \, A_{\text{old}}
$$

Example: MiniWeb

[Figure: link graph over Ne, MS, and Am]

$$
M = \begin{bmatrix} 1 & 1 & 1 \\ 0 & 0 & 1 \\ 1 & 1 & 0 \end{bmatrix},
\quad
M^{T} = \begin{bmatrix} 1 & 0 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \end{bmatrix},
\quad
M M^{T} = \begin{bmatrix} 3 & 1 & 2 \\ 1 & 1 & 0 \\ 2 & 0 & 2 \end{bmatrix},
\quad
M^{T} M = \begin{bmatrix} 2 & 2 & 1 \\ 2 & 2 & 1 \\ 1 & 1 & 2 \end{bmatrix}
$$

$$
H = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix},\
\begin{bmatrix} 6 \\ 2 \\ 4 \end{bmatrix},\
\begin{bmatrix} 28 \\ 8 \\ 20 \end{bmatrix},\
\begin{bmatrix} 132 \\ 36 \\ 96 \end{bmatrix},\
\cdots,\
\begin{bmatrix} 2+\sqrt{3} \\ 1 \\ 1+\sqrt{3} \end{bmatrix}
$$

$$
A = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix},\
\begin{bmatrix} 5 \\ 5 \\ 4 \end{bmatrix},\
\begin{bmatrix} 24 \\ 24 \\ 18 \end{bmatrix},\
\begin{bmatrix} 114 \\ 114 \\ 84 \end{bmatrix},\
\cdots,\
\begin{bmatrix} 1+\sqrt{3} \\ 1+\sqrt{3} \\ 2 \end{bmatrix}
$$

Topic 2: Web-Data Extraction
• Sergey Brin, Extracting Patterns and Relations from the World Wide Web, WebDB Workshop, 1998.
• Anand Rajaraman and Jeffrey D. Ullman, Querying Websites Using Compact Skeletons, PODS 2001.
Goal: Extract rich data from the Web

Motivation
• Many applications need to get rich data from the Web
  – Data integration
  – Domain-specific applications
• Challenges:
  – Data is embedded in HTML pages
  – It is laid out for display purposes and is not easily extractable

Brin’s approach
• Example:
  – Book(title, author)
  – Find as many book titles and authors as possible

[Figure: cycle of sample data, find patterns, find data]

Details
1. Start with sample tuples, e.g., five book titles and authors.
2. Find where the tuples appear on the Web. Accept a “pattern” if:
   a) it identifies several examples of known tuples, and
   b) it is sufficiently specific that it is unlikely to identify too much.
3. Given a set of accepted patterns, find data that appears in these patterns, and add it to the set of known data.
4. Repeat steps (2) and (3) several times.

Pattern
A pattern consists of five elements:
1. The order, i.e., whether the title appears prior to the author in the text, or vice versa.
   a) In a more general case, where tuples have more than 2 components, the order would be the permutation of components.
2. The URL prefix.
3. The prefix of text, just prior to the first of the title or author.
4. The middle: text appearing between the two data elements.
5. The suffix of text following the second of the two data elements.
Both the prefix and suffix were limited to 10 characters.

<ul>
<li><i>Database Systems: The Complete Book</i>, by Hector Garcia-Molina, Jeffrey Ullman, and Jennifer Widom.<br>
<li><i>Data Mining: Concepts and Techniques</i>, by Jiawei Han and Micheline Kamber.<br>
<li><i>Principles of Data Mining</i>, by David J. Hand, Heikki Mannila, and Padhraic Smyth, Cambridge, MA: MIT Press, 2001.<br>
</ul>

Example
1. Order: title then author.
2. URL prefix: www.ics.uci.edu/~ics215/
3. Prefix, middle, and suffix of the following form: <li><i>title</i> by author<br>
   a) The prefix is “<li><i>”, the middle is “</i> by ” (including the blank after “by”), and the suffix is “<br>”.
   b) The title is whatever appears between the prefix and middle; the author is whatever appears between the middle and suffix.
   c) The intuition behind why this pattern might be good is that there are probably lots of reading lists among the class pages at UCI ICS.

Constraints on patterns
1. Pattern specificity:
   (a) Is the product of the lengths of the prefix, middle, suffix, and URL prefix.
   (b) It indicates how likely we are to find the pattern: the higher the specificity, the fewer occurrences we expect.
2. To make sure a pattern is likely to be accurate, it must meet two conditions:
   (a) There must be >= 2 known data items appearing in it.
   (b) The product of the pattern’s specificity and the number of occurrences of data items in the pattern must exceed a certain threshold T.

Data Occurrences
• A data occurrence in a pattern consists of:
  – The particular title and author.
  – The complete URL, not just the prefix as for a pattern.
  – The order, prefix, middle, and suffix of the pattern in which the title and author occurred.
• The same title and author might appear in several different patterns

Finding Data Occurrences Given Data
• Given known title-author pairs, to find new patterns, search the Web to see where these titles and authors occur.
  – Assume there is a Web index
  – Given a word, we can find (pointers to) all pages containing that word.
• The method used is essentially apriori:
  – Find (pointers to) pages containing any known author. Since author names generally consist of 2 words, use the index for each first name and last name, and check that the occurrences are consecutive in the document.
  – Find (pointers to) pages containing any known title. Start by finding pages with each word of a title, and then check that the words appear in order on the page.
  – Intersect the sets of pages that have an author and a title on them. Only these pages need to be searched to find the patterns in which a known title-author pair is found. For the prefix and suffix, take the 10 surrounding characters, or fewer if there are not as many as 10.

Building Patterns from Data Occurrences
1. Group data occurrences according to their order and middle.
   – E.g., one group in the “group-by” might correspond to the order “title-then-author” and the middle “</I> by”.
2. For each group, find the longest common prefix, suffix, and URL prefix.
3. If the specificity test for the pattern is met, accept it.
4. Otherwise,
   – try to split the group into two by extending the length of the URL prefix by one character, and repeat from step (2).
   – If it is impossible to split the group (because there is only one URL), then we fail to produce a pattern from the group.

Example
• Suppose our group contains three URLs:
  – www.ics.uci.edu/~ics184/
  – www.ics.uci.edu/~ics214/
  – www.ics.uci.edu/~ics215/
• The common prefix is www.ics.uci.edu/~ics
• If we have to split the group, then the next character, “1” versus “2”, breaks the group into two:
  – the data occurrences on the first page (could be many) go into one group,
  – the occurrences on the other two pages go into another.

Finding Occurrences Given Patterns
• Find all URLs that match the URL prefix in at least one pattern.
• For each of those pages, scan the text using a regular expression built from the pattern's prefix, middle, and suffix.
• From each match, extract the title and author, according to the order specified in the pattern.

Results
• 24M pages, 147GB
• Start with 5 (book, author) pairs
• First round: 199 occurrences, 3 patterns, 4047 unique (book, author) pairs
• After four rounds, the set grew to 15,000 tuples.
• About 95% were true title-author pairs. Data quality is good.

RU’s approach (Rajaraman and Ullman)
• Model data values as a graph
• Compute “skeletons” from the graph
• Use skeletons to extract data to populate tables
• Used in Junglee, a legendary database startup

Example
[Figure]

Data graph G
• A DAG
• Each node is an information element:
  – E.g., A(ddress), T(itle), S(alary)
  – Can be extracted using predefined regular expressions
  – Can have a value, or NULL.
• An edge represents a relationship between two elements
  – Could be between pages (web link)
  – Or could be within one page

Relation schema
• The table we want to populate
• Has a given set of attributes X
• Each attribute A has a domain Dom(A)
• Problem formulation:
  – Given a data graph G, and a relation R over attribute set X
  – Use the data graph to populate the table

Skeleton
• A tree
• Each node is an attribute in X (could be NULL)
• Intuition: a pattern/layout of data in the graph
• Overlay of a skeleton on the data graph:
  – A skeleton node matches a graph node, i.e., they have the same attribute
  – May use an overlay to extract tuples
• Perfect skeleton: a skeleton K is perfect for data graph G if for each edge e in G, there is an overlay using K that includes e.

Perfect skeletons
[Figure: a data graph with skeleton K1 (good) and skeleton K2 (bad)]
• K1 tends to give us “right” tuples
• K2 can give us “wrong” tuples
• Intuitively, in K1, information elements are closer

Compact skeletons
[Figure: a data graph with a non-compact skeleton and a compact skeleton]
• K is a compact skeleton for G if:
  – For each node u in G, there is a node v in K, such that for any overlay from K to G in which u participates, u is mapped to v.
• Perfect compact skeleton (PCS): perfect & compact
  – That’s what we want!

Computing PCS’s
• Not every graph has a PCS
• PCS is unique
• An algorithm for computing PCS
• Complexity: O(km|V_G|)
  – k: # of attributes in the relation
  – m: # of the nodes in the largest subgraph that has a PCS

Other results
• Partially PCS (PPCS)
  – Deal with incomplete information
  – An algorithm for computing PPCS
• Use PCS or PPCS to populate the relation, and answer queries
• Deal with noisy data graphs
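
Returning to Brin’s approach from earlier in Topic 2, the step “Finding Occurrences Given Patterns” can be sketched roughly as below. This is only an illustration under stated assumptions, not Brin’s implementation: the `Pattern` class and `extract` helper are hypothetical names, the page is assumed to be already fetched, and the page text and the `books.html` URL are made up so that they fit the example pattern (middle “</i> by ”).

```python
import re
from dataclasses import dataclass

@dataclass
class Pattern:
    """The five pattern elements from the slides (hypothetical container)."""
    title_first: bool  # order: True if the title precedes the author
    url_prefix: str
    prefix: str
    middle: str
    suffix: str

    def regex(self):
        # Fixed strings are escaped; the two lazy groups capture the data elements.
        return re.compile(
            re.escape(self.prefix) + r"(.+?)"
            + re.escape(self.middle) + r"(.+?)"
            + re.escape(self.suffix)
        )

def extract(pattern, url, text):
    """Return (title, author) pairs from one page, or [] if the URL does not match."""
    if not url.startswith(pattern.url_prefix):
        return []
    pairs = []
    for first, second in pattern.regex().findall(text):
        pairs.append((first, second) if pattern.title_first else (second, first))
    return pairs

# Pattern from the Example slide; the page below is invented for illustration.
reading_list = Pattern(True, "www.ics.uci.edu/~ics215/", "<li><i>", "</i> by ", "<br>")
page = (
    "<ul>\n"
    "<li><i>Data Mining: Concepts and Techniques</i> by Jiawei Han and Micheline Kamber.<br>\n"
    "<li><i>Principles of Data Mining</i> by David J. Hand, Heikki Mannila, and Padhraic Smyth.<br>\n"
    "</ul>"
)
for title, author in extract(reading_list, "www.ics.uci.edu/~ics215/books.html", page):
    print(title, "|", author)
```

The sample reading list shown on the slides uses “</i>, by ” between title and author, so a pattern built from those occurrences would carry that middle instead; the extraction step itself would be unchanged.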