Bristol04.10.12

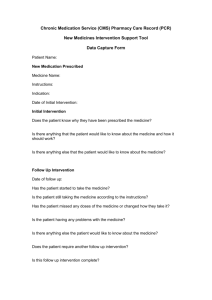


Confidence Intervals, Q-Learning and Dynamic Treatment Regimes
S.A. Murphy
Time for Causality – Bristol, April 2012

Outline
• Dynamic Treatment Regimes
• Example Experimental Designs & Challenges
• Q-Learning & Challenges

Dynamic treatment regimes are individually tailored treatments, with treatment type and dosage changing according to patient outcomes. They operationalize clinical practice.
k stages for one individual:
• $X_j$: observation available at the $j$th stage
• $A_j$: action at the $j$th stage (usually a treatment)

Example of a Dynamic Treatment Regime
• Adaptive Drug Court Program for drug-abusing offenders.
• Goal is to minimize recidivism and drug use.
• Marlowe et al. (2008, 2011)

Adaptive Drug Court Program
[Flow diagram. Low-risk track: as-needed court hearings + standard counseling; as-needed court hearings + ICM. High-risk track: bi-weekly court hearings + standard counseling; bi-weekly court hearings + ICM. Transitions are labeled "non-responsive" and "non-compliant"; the final box is a court-determined disposition.]

k = 2 Stages
Goal: Construct decision rules that input the information available at each stage and output a recommended decision; these decision rules should lead to a maximal mean $Y$, where $Y$ is a function of the observations and actions.
The dynamic treatment regime is a sequence of two decision rules: $d_1(X_1)$, $d_2(X_1, A_1, X_2)$.

Outline
• Dynamic Treatment Regimes
• Example Experimental Designs & Challenges
• Q-Learning & Challenges

Data for Constructing the Dynamic Treatment Regime
Subject data from sequential, multiple assignment, randomized trials. At each stage subjects are randomized among alternative options.
$A_j$ is a randomized action with known randomization probability. Here: binary actions with $P[A_j = 1] = P[A_j = -1] = 0.5$.

Pelham's ADHD Study
[Design diagram.]
Initial random assignment:
• A. Begin low-intensity behavior modification (bemod); assess after 8 weeks: adequate response?
  – Yes: A1. Continue, reassess monthly; randomize if deteriorate.
  – No: random assignment between
    A2. Add medication; bemod remains stable but medication dose may vary.
    A3. Increase intensity of bemod with adaptive modifications based on impairment.
• B. Begin low-dose medication; assess after 8 weeks: adequate response?
  – Yes: B1. Continue, reassess monthly; randomize if deteriorate.
  – No: random assignment between
    B2. Increase dose of medication with monthly changes as needed.
    B3. Add behavioral treatment; medication dose remains stable but intensity of bemod may increase with adaptive modifications based on impairment.

Oslin's ExTENd Study
[Design diagram.]
Initial random assignment: early trigger for nonresponse vs. late trigger for nonresponse. Under each trigger definition (8 weeks):
• Response: random assignment between Naltrexone and TDM + Naltrexone.
• Nonresponse: random assignment between CBI and CBI + Naltrexone.

Kasari Autism Study
[Design diagram.]
Initial random assignment:
• A. JAE + EMT; assess after 12 weeks: adequate response?
  – Yes: JAE + EMT.
  – No: random assignment between JAE + EMT+++ and JAE + AAC.
• B. JAE + AAC; assess after 12 weeks: adequate response?
  – Yes: B1. JAE + AAC.
  – No: B2. JAE + AAC++.

Jones' Study for Drug-Addicted Pregnant Women
[Design diagram. Subjects are first randomized to an initial RBT condition; after 2 weeks, responders and nonresponders in each arm are re-randomized. Treatment options shown: tRBT, rRBT, eRBT, aRBT.]

Challenges
• Goal of trial may differ
  – Experimental designs for settings involving new drugs (cancer, ulcerative colitis)
  – Experimental designs for settings involving already approved drugs/treatments
• Choice of Primary Hypothesis/Analysis & Secondary Hypothesis/Analysis
• Longitudinal/Survival Primary Outcomes
• Sample Size Formulae

Outline
• Dynamic Treatment Regimes
• Example Experimental Designs & Challenges
• Q-Learning & Challenges

Secondary Analysis: Q-Learning
• Q-Learning (Watkins, 1989; Ernst et al., 2005; Murphy, 2005), a popular method from computer science
• Optimal nested structural mean model (Murphy, 2003; Robins, 2004)
• The first method is an inefficient version of the second method when (a) linear models are used, (b) each stage's covariates include the prior stages' covariates and (c) the treatment variables are coded to have conditional mean zero.

k = 2 Stages
Goal: Construct $d_1(X_1)$, $d_2(X_1, A_1, X_2)$ for which $E_{d_1,d_2}[Y]$ is maximal.
$V^{d_1,d_2} = E_{d_1,d_2}[Y]$ is called the value, and the maximal value is denoted by

$$V^{\mathrm{opt}} = \max_{d_1,d_2} E_{d_1,d_2}[Y]$$

Idea behind Q-Learning

$$V^{\mathrm{opt}} = E\Big[\max_{a_1} E\big[\max_{a_2} E[Y \mid X_1, A_1, X_2, A_2 = a_2] \,\big|\, X_1, A_1 = a_1\big]\Big]$$

• Stage 2 Q-function: $Q_2(X_1, A_1, X_2, A_2) = E[Y \mid X_1, A_1, X_2, A_2]$, so that

$$V^{\mathrm{opt}} = E\Big[\max_{a_1} E\big[\max_{a_2} Q_2(X_1, A_1, X_2, a_2) \,\big|\, X_1, A_1 = a_1\big]\Big]$$

• Stage 1 Q-function: $Q_1(X_1, A_1) = E\big[\max_{a_2} Q_2(X_1, A_1, X_2, a_2) \mid X_1, A_1\big]$, so that

$$V^{\mathrm{opt}} = E\Big[\max_{a_1} Q_1(X_1, a_1)\Big]$$

Simple Version of Q-Learning
There is a regression for each stage.
• Stage 2 regression: Regress $Y$ on $(S_2', S_2 A_2)$ to obtain $\hat Q_2 = \hat\alpha_2^T S_2' + \hat\beta_2^T S_2 A_2$
• Stage 1 regression: Regress $\hat Y$ on $(S_1', S_1 A_1)$ to obtain $\hat Q_1 = \hat\alpha_1^T S_1' + \hat\beta_1^T S_1 A_1$

$\hat Y$ for subjects entering stage 2:
• $\hat Y$ is a predictor of $\max_{a_2} Q_2(X_1, A_1, X_2, a_2)$
• $\hat Y$ is the predicted end of stage 2 response when the stage 2 treatment is equal to the "best" treatment.
• $\hat Y$ is the dependent variable in the stage 1 regression for patients moving to stage 2

A Simple Version of Q-Learning
• Stage 2 regression (using $Y$ as dependent variable) yields $\hat\alpha_2^T S_2' + \hat\beta_2^T S_2 A_2$
• Arg-max over $a_2$ yields $\hat d_2 = \mathrm{sign}(\hat\beta_2^T S_2)$

A Simple Version of Q-Learning
• Stage 1 regression (using $\hat Y$ as dependent variable) yields $\hat\alpha_1^T S_1' + \hat\beta_1^T S_1 A_1$
• Arg-max over $a_1$ yields $\hat d_1 = \mathrm{sign}(\hat\beta_1^T S_1)$

Confidence Intervals

$$\hat Y = \hat\alpha_2^T S_2' + \max_{a_2} \hat\beta_2^T S_2 a_2 = \hat\alpha_2^T S_2' + |\hat\beta_2^T S_2|$$

Non-regularity
• $\hat Y = \hat\alpha_2^T S_2' + |\hat\beta_2^T S_2|$
• The limiting distribution of $\sqrt{n}(\hat\beta_1 - \beta_1)$ is non-regular (Robins, 2004)
• The problematic area in parameter space is around $\beta_2$ for which $P[\beta_2^T S_2 \approx 0] > 0$
• Standard asymptotic approaches are invalid without modification (see Shao, 1994; Andrews, 2000).

Non-regularity
• The problematic term in $c^T\sqrt{n}(\hat\beta_1 - \beta_1)$ is

$$c^T \hat\Sigma_1^{-1} P_n\Big[B_1\big(|\sqrt{n}\,\hat\beta_2^T S_2| - |\sqrt{n}\,\beta_2^T S_2|\big)\Big], \quad \text{where } B_1 = \big((S_1')^T, A_1 S_1^T\big)^T$$

• This term is well-behaved if $\beta_2^T S_2$ is bounded away from zero.
• We want to form an adaptive confidence interval.
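The two stage-wise regressions from the "Simple Version of Q-Learning" slides can be sketched in a few lines. This is a minimal illustration, not the analysis code behind the talk: it assumes linear working models, actions coded -1/+1, and that every subject passes through both stages. The names `fit_ols`, `q_learning_two_stage` and `simulate`, and the generative model inside `simulate`, are hypothetical and chosen only to exercise the code.

```python
import numpy as np


def fit_ols(design, y):
    """Return least-squares coefficients for y regressed on design."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def q_learning_two_stage(S1_main, S1_trt, A1, S2_main, S2_trt, A2, Y):
    """Two-stage Q-learning with linear models and actions in {-1, +1}.

    S*_main are main-effect features (S'), S*_trt are the features that
    interact with treatment (S); all are (n, p) arrays.
    """
    # Stage 2: regress Y on (S2', S2 * A2).
    p2 = S2_main.shape[1]
    theta2 = fit_ols(np.hstack([S2_main, S2_trt * A2[:, None]]), Y)
    alpha2, beta2 = theta2[:p2], theta2[p2:]

    # Pseudo-outcome: prediction under the best stage 2 action.
    # For a2 in {-1, +1}, max over a2 of beta2'S2 a2 equals |beta2'S2|.
    Y_hat = S2_main @ alpha2 + np.abs(S2_trt @ beta2)

    # Stage 1: regress Y_hat on (S1', S1 * A1).
    p1 = S1_main.shape[1]
    theta1 = fit_ols(np.hstack([S1_main, S1_trt * A1[:, None]]), Y_hat)
    alpha1, beta1 = theta1[:p1], theta1[p1:]
    return alpha1, beta1, alpha2, beta2


def simulate(n, rng):
    """Hypothetical generative model; true stage 2 beta is (0.5, 1.0)."""
    X1 = rng.normal(size=n)
    A1 = rng.choice([-1.0, 1.0], size=n)   # P[A1 = 1] = 0.5
    X2 = 0.5 * X1 + rng.normal(size=n)
    A2 = rng.choice([-1.0, 1.0], size=n)   # P[A2 = 1] = 0.5
    Y = 1.0 + X1 + X2 + 0.3 * A1 + A2 * (0.5 + 1.0 * X2) + rng.normal(size=n)
    ones = np.ones(n)
    S1 = np.column_stack([ones, X1])
    S2_main = np.column_stack([ones, X1, A1, X2])
    S2_trt = np.column_stack([ones, X2])
    return S1, S1, A1, S2_main, S2_trt, A2, Y


rng = np.random.default_rng(0)
alpha1, beta1, alpha2, beta2 = q_learning_two_stage(*simulate(2000, rng))

# Estimated decision rules: the arg-max over a in {-1, +1} is the sign.
d1_hat = lambda s1: np.sign(s1 @ beta1)
d2_hat = lambda s2: np.sign(s2 @ beta2)
print("beta2_hat:", beta2)
print("d2_hat at S2 = (1, 2):", d2_hat(np.array([1.0, 2.0])))
```

The absolute value in `Y_hat` is the source of the non-regularity discussed in the slides: it is non-differentiable where $\beta_2^T S_2 = 0$, so the stage 1 estimator inherits irregular behavior near that point.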
Idea from Econometrics
• In nonregular settings in econometrics there is a fixed number of easily identified "bad" parameter values at which the estimator behaves nonregularly.
• Use a pretest (e.g. a hypothesis test) to test whether you are near a "bad" parameter value; if the pretest rejects, use standard critical values to form the confidence interval; if the pretest accepts, use the maximal critical value over all possible local alternatives (Andrews & Soares, 2007; Andrews & Guggenberger, 2009).

Our Approach
• Construct smooth upper and lower bounds so that for all $n$

$$L_n \le c^T\sqrt{n}(\hat\beta_1 - \beta_1) \le U_n$$

• The upper/lower bounds use a pretest:
• Embed in the formula for $\sqrt{n}(\hat\beta_1 - \beta_1)$ a pretest of $H_0: s_2^T\beta_2 = 0$ based on

$$T_n(s_2) = \frac{n\,(s_2^T\hat\beta_2)^2}{s_2^T\hat\Sigma_2 s_2}$$

Non-regularity
• The upper bound adds to $c^T\sqrt{n}(\hat\beta_1 - \beta_1)$:

$$-\,c^T\hat\Sigma_1^{-1} P_n\big[B_1 Z_n(b)\,1_{T_n(S_2) \le \lambda_n}\big]\Big|_{b = \sqrt{n}\beta_2} \;+\; \sup_b\, c^T\hat\Sigma_1^{-1} P_n\big[B_1 Z_n(b)\,1_{T_n(S_2) \le \lambda_n}\big]$$

Confidence Interval
• Let $U_n^{(b)}$ and $L_n^{(b)}$ be the bootstrap analogues of $U_n$ and $L_n$.
• $P_M$ is the probability with respect to the bootstrap weights. $\hat l$ is the $\delta/2$ quantile of $L_n^{(b)}$; $\hat u$ is the $1 - \delta/2$ quantile of $U_n^{(b)}$.
Theorem: Assume moment conditions, invertible var-covariance matrices and $\lambda_n = \log\log n$; then

$$P_M\Big(c^T\hat\beta_1 - \hat u/\sqrt{n} \;\le\; c^T\beta_1^* \;\le\; c^T\hat\beta_1 - \hat l/\sqrt{n}\Big) \ge 1 - \delta + o_P(1)$$

Adaptation
Theorem: Assume finite moment conditions, invertible var-covariance matrices, $\lambda_n \to \infty$, $\lambda_n = o(n)$ and $P[S_2^T\beta_2 \ne 0] = 1$. Then $L_n$, $c^T\sqrt{n}(\hat\beta_1 - \beta_1)$, $U_n$, $L_n^{(b)}$ and $U_n^{(b)}$ each converge, in distribution, to the same limiting distribution (the last two in probability).

Empirical Study
• Some Competing Methods
  – Soft-thresholding (ST), Chakraborty et al. (2009)
  – Centered percentile bootstrap (CPB)
  – Plug-in pretesting estimator (PPE)
• Generative Models
  – Nonregular (NR): $P[S_2^T\beta_2 = 0] > 0$
  – Nearly Nonregular (NNR): $P[S_2^T\beta_2 \approx 0] > 0$
  – Regular (R): $P[S_2^T\beta_2 = 0] = 0$
• n = 150, 1000 Monte Carlo reps, 1000 bootstrap samples

Example: Two Stages, two treatments per stage
Entries are size (width).

| Type | CPB | ST | PPE | ACI |
|------|-----|----|-----|-----|
| NR | .93* | .95 (.34) | .93* | .99 (.50) |
| R | .94 (.45) | .92* | .93* | .95 (.48) |
| NR | .93* | .76* | .90* | .96 (.49) |
| NNR | .93* | .76* | .90* | .97 (.49) |

Example: Two Stages, three treatments in stage 2
Entries are size (width).

| Type | CPB | PPE | ACI |
|------|-----|-----|-----|
| NR | .93* | .93* | 1.0 (.72) |
| R | .94 (.56) | .92* | .96 (.63) |
| NR | .89* | .86* | .97 (.67) |
| NNR | .90* | .86* | .97 (.67) |

Pelham's ADHD Study
[Design diagram, repeated from the earlier Pelham's ADHD Study slide: initial randomization between A (begin low-intensity behavior modification) and B (begin low-dose medication); after 8 weeks, responders continue (A1, B1) and non-responders are re-randomized between A2 and A3, or between B2 and B3.]

ADHD Example
• $(X_1, A_1, R_1, X_2, A_2, Y)$
  – $Y$ = end-of-year school performance
  – $R_1 = 1$ if responder; $= 0$ if non-responder
  – $X_2$ includes the month of non-response, $M_2$, and a measure of adherence in stage 1 ($S_2$)
  – $S_2 = 1$ if adherent in stage 1; $= 0$ if non-adherent
  – $X_1$ includes baseline school performance, $Y_0$, whether medicated in prior year ($S_1$), and ODD ($O_1$)
  – $S_1 = 1$ if medicated in prior year; $= 0$ otherwise
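The pretest statistic $T_n(s_2)$ from the "Our Approach" slide, together with the threshold $\lambda_n = \log\log n$ from the confidence interval theorem, can be sketched as follows. This is only an illustration: it assumes `sigma2_hat` estimates the asymptotic covariance of $\sqrt{n}(\hat\beta_2 - \beta_2)$, and the function names and the numbers in the usage lines are hypothetical.

```python
import math


def pretest_statistic(s2, beta2_hat, sigma2_hat, n):
    """T_n(s2) = n * (s2' beta2_hat)^2 / (s2' Sigma2_hat s2)."""
    lin = sum(s * b for s, b in zip(s2, beta2_hat))
    quad = sum(
        s2[i] * sigma2_hat[i][j] * s2[j]
        for i in range(len(s2))
        for j in range(len(s2))
    )
    return n * lin ** 2 / quad


def pretest_accepts(s2, beta2_hat, sigma2_hat, n):
    """True when T_n(s2) <= lambda_n, i.e. H0: s2' beta2 = 0 is not rejected."""
    lambda_n = math.log(math.log(n))
    return pretest_statistic(s2, beta2_hat, sigma2_hat, n) <= lambda_n


identity = [[1.0, 0.0], [0.0, 1.0]]
# Small estimated effect: the subject is treated as near the nonregular point.
print(pretest_accepts((1.0, 1.0), (0.02, 0.01), identity, 150))
# Large estimated effect: the pretest rejects.
print(pretest_accepts((1.0, 1.0), (0.5, 0.25), identity, 150))
```

In the slides the indicator $1_{T_n(S_2) \le \lambda_n}$ plays this role subject by subject: only subjects whose pretest accepts contribute to the extra supremum term in the bounds.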
ADHD Example
• Stage 2 regression for $Y$:

$$(1, Y_0, S_1, O_1, A_1, M_2, S_2)\,\alpha_2 + A_2(\beta_{21} + A_1\beta_{22} + S_2\beta_{23})$$

• Stage 1 outcome: $R_1 Y + (1 - R_1)\hat Y$, where

$$\hat Y = (1, Y_0, S_1, O_1, A_1, M_2, S_2)\,\hat\alpha_2 + |\hat\beta_{21} + A_1\hat\beta_{22} + S_2\hat\beta_{23}|$$

ADHD Example
• Stage 1 regression for $R_1 Y + (1 - R_1)\hat Y$:

$$(1, Y_0, S_1, O_1)\,\alpha_1 + A_1(\beta_{11} + S_1\beta_{12})$$

• Interesting stage 1 contrast: is it important to know whether the child was medicated in the prior year ($S_1 = 1$) to determine the best initial treatment in the sequence?

ADHD Example
• Stage 1 treatment effect when $S_1 = 1$: $\beta_{11} + \beta_{12}$
• Stage 1 treatment effect when $S_1 = 0$: $\beta_{11}$

| Contrast | 90% ACI |
|----------|---------|
| $\beta_{11} + \beta_{12}$ | (-0.48, 0.16) |
| $\beta_{11}$ | (-0.05, 0.39) |

Dynamic Treatment Regime Proposal
IF medication was not used in the prior year THEN begin with BMOD;
ELSE select either BMOD or MED.
IF the child is nonresponsive and was nonadherent THEN augment present treatment;
ELSE IF the child is nonresponsive and was adherent THEN select intensification of current treatment.

Challenges
• There are multiple ways to form $\hat Y$; what are the pros and cons?
• Improve adaptation by a pretest of $H_0: \beta_2 = 0$?
• High-dimensional data; investigators want to collect real-time data
• Feature construction & feature selection
• Many stages or infinite horizon

This seminar can be found at: http://www.stat.lsa.umich.edu/~samurphy/seminars/Bristol04.10.12.ppt
Email Eric Laber or me with questions: laber@stat.ncsu.edu or samurphy@umich.edu
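The IF/THEN rules in the Dynamic Treatment Regime Proposal translate directly into two small decision functions. This is a sketch for illustration only; the function names and return labels are hypothetical, and because the proposal states no rule for children who respond, the stage 2 function returns `None` in that case rather than guessing one.

```python
def stage1_options(medicated_prior_year):
    """Initial treatment options under the proposed regime."""
    if not medicated_prior_year:
        return ["BMOD"]
    # With prior medication, the proposal allows either option.
    return ["BMOD", "MED"]


def stage2_action(responsive, adherent):
    """Second-stage recommendation for a child, given stage 1 history."""
    if responsive:
        return None  # the proposal gives no rule for responders
    if not adherent:
        return "augment present treatment"
    return "intensify current treatment"


print(stage1_options(medicated_prior_year=False))
print(stage2_action(responsive=False, adherent=True))
```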