unit 1 introdution

advertisement

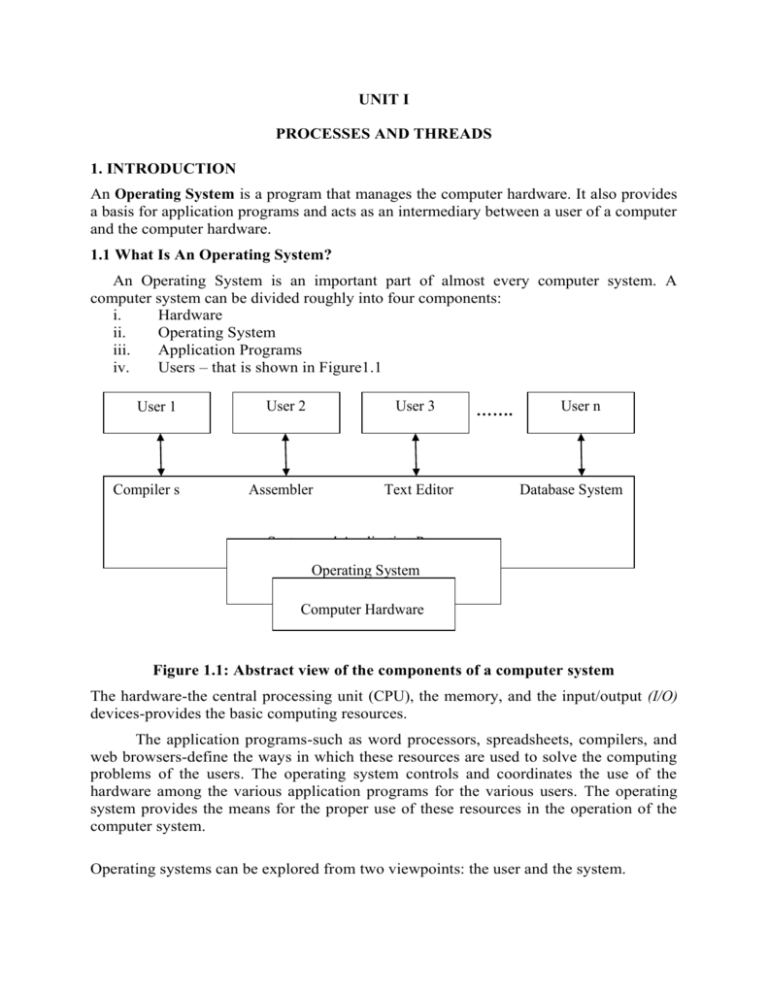

UNIT I PROCESSES AND THREADS 1. INTRODUCTION An Operating System is a program that manages the computer hardware. It also provides a basis for application programs and acts as an intermediary between a user of a computer and the computer hardware. 1.1 What Is An Operating System? An Operating System is an important part of almost every computer system. A computer system can be divided roughly into four components: i. Hardware ii. Operating System iii. Application Programs iv. Users – that is shown in Figure1.1 User 1 Compiler s User 2 User 3 Assembler Text Editor ……. User n Database System System and Application Program Operating System Computer Hardware Figure 1.1: Abstract view of the components of a computer system The hardware-the central processing unit (CPU), the memory, and the input/output (I/O) devices-provides the basic computing resources. The application programs-such as word processors, spreadsheets, compilers, and web browsers-define the ways in which these resources are used to solve the computing problems of the users. The operating system controls and coordinates the use of the hardware among the various application programs for the various users. The operating system provides the means for the proper use of these resources in the operation of the computer system. Operating systems can be explored from two viewpoints: the user and the system. 1.1.1 User View The user view of the computer varies by the interface being used. For PC’s the operating system is designed mostly for ease of use, with some attention paid to performance, and none paid to resource utilization. For mainframe or minicomputer, users will access the same computer through other terminals. Hence the OS is designed to maximize resource utilization to assume that all available CPU time, memory and input/output devices are used efficiently. For workstations connected to networks, the OS is designed to compromise between individual usability and resource utilization. For handheld computers, the OS is designed for individual usability and also performance per amount of battery life. 1.1.2 System View From the computer's point of view, the operating system is the program is most intimate with the hardware. We can view an operating system as a resource allocator. A slightly different view of an operating system is a control program. As a resource allocator, it faces numerous and possibly conflicting requests for resources, the operating system must decide how to allocate them to specific programs and users so that it can operate the computer system efficiently and fairly. A control program manages the execution of user programs to prevent errors and improper use of the computer. 1.2 Mainframe Systems Mainframe computer systems were the first computers used to tackle many commercial and scientific applications. The growth of mainframe systems can be categorized as Batch Systems Multiprogrammed systems Time sharing systems 1.2.1 Batch Systems The major task of operating system in early computers was to transfer control automatically from one job to the next. The operating system was always resident in memory (Figure 1.2). To speed up processing, operators batched together jobs with similar needs and ran them through the computer as a group. Thus, the programmers would leave their programs with the operator. The operator would sort programs into batches with similar requirements and, as the computer became available, would run each batch. The output from each job would be sent back to the appropriate programmer. OS User Program Area Figure 1.2 Memory layout for a simple batch system 1.2.2 Multiprogrammed Systems Multiprogramming increases CPU utilization by organizing jobs so that the CPU always has one to execute. The operating system keeps several jobs in memory simultaneously (Figure 1.3). The operating system picks and begins to execute one of the jobs in the memory. Eventually, the job may have to wait for some task, such as an I/O operation, to complete. At that time the operating system switches to execute another job. When that job needs to wait, the CPU is switched to another job, and so on. Eventually, the first job finishes waiting and gets the CPU back. 0 Operating System Job 1 Job 2 Job 3 Job 4 512k Figure 1.3 Memory layout for a multiprogramming system If several jobs are ready to be brought into memory, and if there is not enough room for all of them, then the system must choose among them. Making this decision is called Job Scheduling. 1.2.3 Time-Sharing Systems Time sharing (or multitasking) is a logical extension of multiprogramming. Where the CPU executes multiple jobs by switching among them, but the switches occur so frequently that the users can interact with each program while it is running. A time-shared operating system allows many users to share the computer simultaneously. A time-shared operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of a time-shared computer. Each user has at least one separate program in memory. A program loaded into memory and executing is commonly referred to as a process. Time-sharing operating systems are even more complex than multiprogrammed operating systems. In both, several jobs must be kept simultaneously in memory, so the system must have memory management and protection To obtain a reasonable response time, jobs may have to be swapped in and out of main memory to the disk that now serves as a backing store for main memory. A common method for achieving this goal is virtual memory, which is a technique that allows the execution of a job that may not be completely in memory. The main advantage of the virtual-memory scheme is that programs can be larger than physical memory. Further, it abstracts main memory into a large, uniform array of storage, separating logical memory as viewed by the user from physical memory. This arrangement frees programmers from concern over memory-storage limitations. Time sharing systems provide a mechanism for concurrent execution, which requires sophisticated CPU-scheduling schemes. To ensure orderly execution, the system must provide mechanisms for job synchronization and communication and it may ensure that jobs do not get stuck in a deadlock, forever waiting for one another. Time sharing systems must also provide a file system. The file system resides on a collection of disks, hence, disk management must be provided. 1.3 Desktop Systems PC operating systems were neither multiuser nor multitasking. The goals of these operating systems have changed with time; instead of maximizing CPU and peripheral utilization, the systems opt for maximizing user convenience and responsiveness. These systems include PCs running Microsoft Windows and the Apple Macintosh. The hardware cost for microcomputers are sufficiently low that individuals have sole use of the computer and the CPU utilization is no longer a prime concern. 1.4 Multiprocessor Systems Multiprocessor systems or parallel systems or tightly coupled systems have more than one processor in close communication, sharing the computer bus, the clock, and sometimes memory and peripheral devices. Multiprocessor systems have three main advantages. Increased throughput: By increasing the number of processors, getting more work done in less time. Economy of scale: by sharing peripherals, mass storage, and power supplies among multiple processors. Increased reliability: by distributing functions among multiple processors where in failure of one processor will not halt the system. The most common multiple-processor systems now use symmetric multiprocessing (SMP), in which each processor runs an identical copy of the operating system, and these copies communicate with one another as needed. Here all processors are pears no master-slave relationship between processors. Some systems use asymmetric multiprocessing, in which each processor is assigned a specific task. A master processor controls the system; the other processors either look to the master for instruction or have predefined tasks. As microprocessors become less expensive and more powerful, additional Operating-system functions are off-loaded to slave processors (or back-ends). CPU CPU ……… CPU Memory Figure 1.4 Symmetric multiprocessing architecture 1.5 Distributed Systems Distributed systems depend on networking for their functionality. By being able to communicate, distributed systems are able to share computational tasks, and provide a rich set of features to users. Networks vary by the protocols used, the distances between nodes, and the transport media to an operating system, a network protocol simply needs an interface device-a network adapter, and software to package data in the communications protocol to send it and unpackaged it to receive it. Networks are type cast based on the distances between their nodes. A Local-Area Network(LAN) exist within a room, a floor or a building. A Wide-Area Network(WAN) usually exits between buildings, cities or countries. A Metropolitan-Area Network(MAN) could link buildings within a city. 1.5.1 Client-Server Systems In client server system, terminals connected to centralized system are being supplanted by PCs. The general structure of a client-server system is depicted in Figure 1.5. Client Client Client …. Client network Figure 1.5 General Structure of a client-server system Server Server systems can be broadly categorized as compute servers and file servers. Compute-server systems provide an interface to which clients can send requests to perform an action, in response to which they execute the action and send back results to the client. File-server systems provide a file-system interface where clients can create, update, read, and delete files. 1.5.2 Peer-to-Peer Systems The system has a collection of processors that do not share memory or a clock. Instead, each processor has its own local memory. The processors communicate with one another through various communication lines, such as high-speed buses or telephone lines. These systems are usually referred to as loosely coupled systems (or distributed systems). A network operating system is an operating system that provides features such as file sharing, across the network and that includes a communication scheme that allows different processors and different computers to exchange messages. 1.6 Clustered Systems Clustered systems gather together multiple CPUs to accomplish computational work. Clustered systems differ from parallel systems, however, in that they are composed of two or more individual systems coupled together. The clustered computers share storage and are closely linked via LAN networking. Clustering is usually performed to provide high availability. A layer of cluster software runs on the cluster nodes. Each node can monitor one or more of the others (over the LAN). If the monitored machine fails, the monitoring machine can take ownership of its storage, and restart the application(s) that were running on the failed machine. In asymmetric clustering, one machine is in hot standby mode while the other is running the applications. The hot standby host (machine) does nothing but monitor the active server. If that server fails, the hot standby host becomes the active server. In symmetric mode, two or more hosts are running applications, and they are monitoring each other. This mode is obviously more efficient, as it uses all of the available hardware. It does require that more than one application be available to run. 1.7 Real-Time Systems A real-time system is used when rigid time requirements have been placed on the operation of a processor or the flow of data; thus, it is often used as a control device in a dedicated application. Sensors bring data to the computer. The computer must analyze the data and possibly adjust controls to modify the sensor inputs. A real-time system has well-defined, fixed time constraints. Processing must be done within the defined constraints, or the system will fail Real-time systems come in two flavors: hard and soft. Hard Real Time System: A hard real-time system guarantees that critical tasks be completed on time. This goal requires that all delays in the system be bounded, from the retrieval of stored data to the time that it takes the operating system to finish any request made of it. Soft Real Time System: A less restrictive type of real-time system is a soft real-time system, where a critical realtime task gets priority over other tasks, and retains that priority until it completes. As in hard real-time systems, the operating-system kernel delays need to be bounded. A real-time task cannot be kept waiting indefinitely for the kernel to run it. Soft real time is an achievable goal that can be mixed with other types of systems. They are useful in several areas, including multimedia, virtual reality, and advanced scientific projects-such as undersea exploration and planetary rovers. 1.8 Handheld Systems Handheld systems include personal digital assistants (PDAs), such as palm pilots or cellular telephones with connectivity to a network such as the Internet. Developers of handheld systems and applications face many challenges, most of which are due to the limited size of such devices. For example, a PDA is typically about 5 inches in height and 3 inches in width, and it weighs less than one-half pound. Due to this limited size, most handheld devices have a small amount of memory, include slow processors, and feature small display screens. A second issue of concern to developers of handheld devices is the speed of the processor used in the device. Processors for most handheld devices often run at a fraction of the speed of a processor in Pc. Faster processors require more power. To include a faster processor in a handheld device would require a larger battery that would have to be replaced (or recharged) more frequently.