exploratory_data_analysis - Donald Bren School of Information

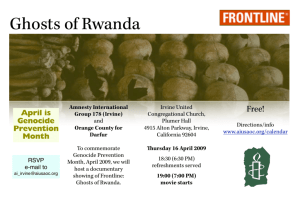


CS 277, Data Mining
Exploratory Data Analysis
Padhraic Smyth
Department of Computer Science
Bren School of Information and Computer Sciences
University of California, Irvine

Outline
Assignment 1: Questions?
Today’s Lecture: Exploratory Data Analysis
– Analyzing single variables
– Analyzing pairs of variables
– Higher-dimensional visualization techniques
Next Lecture: Clustering and Dimension Reduction
– Dimension reduction methods
– Clustering methods

Padhraic Smyth, UC Irvine: CS 277, Winter 2014

What are the main Data Mining Techniques?
• Descriptive Methods
– Exploratory Data Analysis, Visualization
– Dimension reduction (principal components, factor models, topic models)
– Clustering
– Pattern and Anomaly Detection
– …and more
• Predictive Modeling
– Classification
– Ranking
– Regression
– Matrix completion (recommender systems)
– …and more

The Typical Data Mining Process
Problem Definition: Defining the Goal; Understanding the Problem Domain
Data Definition: Defining and Understanding Features; Creating Training and Test Data
Data Exploration: Exploratory Data Analysis
Data Mining: Running Data Mining Algorithms; Evaluating Results/Models
Model Deployment: System Implementation And Testing; Evaluation “in the field”
Model in Operations: Model Monitoring; Model Updating

Exploratory Data Analysis: Single Variables

Summary Statistics
Mean: “center of data”
Mode: location of highest data density
Variance: “spread of data”
Skew: indication of non-symmetry
Range: max - min
Median: 50% of values below, 50% above
Quantiles: e.g., values such that 25%, 50%, 75% are smaller
Note that some of these statistics can be misleading
E.g., mean for data with 2 clusters may be in a region with zero data

Histogram of Unimodal Data
1000 data points simulated from a Normal distribution, mean 10,
variance 1, 30 bins
[Figure: histogram]

Histograms: Unimodal Data
100 data points from a Normal, mean 10, variance 1, with 5, 10, 30 bins
[Figure: three histograms, one per bin count]

Histogram of Multimodal Data
15000 data points simulated from a mixture of 3 Normal distributions, 300 bins
[Figure: histogram]

Histogram of Multimodal Data
15000 data points simulated from a mixture of 3 Normal distributions, 300 bins
[Figure: four histogram panels]

Skewed Data
5000 data points simulated from an exponential distribution, 100 bins
[Figure: histogram]

Another Skewed Data Set
10000 data points simulated from a mixture of 2 exponentials, 100 bins
[Figure: histogram]

Same Skewed Data after taking Logs (base 10)
10000 data points simulated from a mixture of 2 exponentials, 100 bins
[Figure: histogram]

What will the mean or median tell us about this data?
[Figure: histogram]

Issues with Histograms
• For small data sets, histograms can be misleading. Small changes in the data or to the bucket boundaries can result in very different histograms.
• For large data sets, histograms can be quite effective at illustrating general properties of the distribution.
• Can smooth histogram using a variety of techniques
– E.g., kernel density estimation, which avoids bins but requires some notion of “scale”
• Histograms effectively only work with 1 variable at a time
– Difficult to extend to 2 dimensions, not possible for >2
– So histograms tell us nothing about the relationships among variables

US Zipcode Data: Population by Zipcode
[Figure: three histogram panels labeled K = 50, K = 500, and K = 50]

Histogram with Outliers
Pima Indians Diabetes Data, From UC Irvine Machine Learning Repository
[Figure: histogram; x-axis: X values, y-axis: Number of Individuals]

Histogram with Outliers
Pima Indians Diabetes Data, From UC Irvine Machine Learning Repository
[Figure: histogram; y-axis: Number of Individuals]
Annotation on the plot: blood pressure = 0 ?
x-axis: Diastolic Blood Pressure

Box Plots: Pima Indians Diabetes Data
Two side-by-side box-plots of individuals from the Pima Indians Diabetes Data Set
[Figure: box plots of Body Mass Index for Healthy Individuals and Diabetic Individuals]

Box Plots: Pima Indians Diabetes Data
Two side-by-side box-plots of individuals from the Pima Indians Diabetes Data Set
[Figure: the same Body Mass Index box plots (Healthy Individuals, Diabetic Individuals), annotated as follows]
– Plots all data points outside “whiskers”
– Upper Whisker: 1.5 x (Q3 - Q1)
– Q3
– Q2 (median)
– Box = middle 50% of data
– Q1
– Lower Whisker

Box Plots: Pima Indians Diabetes Data
[Figure: healthy vs. diabetic box plots for Diastolic Blood Pressure, 24-hour Serum Insulin, Plasma Glucose Concentration, and Body Mass Index]

Exploratory Data Analysis
Analyzing more than 1 variable at a time…

Relationships between Pairs of Variables
• Say we have a variable Y we want to predict and many variables X that we could use to predict Y
• In exploratory data analysis we may be interested in quickly finding out if a particular X variable is potentially useful at predicting Y
• Options?
– Linear correlation
– Scatter plot: plot Y values versus X values

Linear Dependence between Pairs of Variables
• Covariance and correlation measure linear dependence
• Assume we have two variables or attributes X and Y and n objects taking on values x(1), …, x(n) and y(1), …, y(n). The sample covariance of X and Y is:

$$\mathrm{Cov}(X, Y) = \frac{1}{n} \sum_{i=1}^{n} \big(x(i) - \bar{x}\big)\big(y(i) - \bar{y}\big)$$

• The covariance is a measure of how X and Y vary together.
– it will be large and positive if large values of X are associated with large values of Y, and small values of X with small values of Y
• (Linear) Correlation = scaled covariance, varies between -1 and 1

$$\rho(X, Y) = \frac{\sum_{i=1}^{n} \big(x(i) - \bar{x}\big)\big(y(i) - \bar{y}\big)}{\left( \sum_{i=1}^{n} \big(x(i) - \bar{x}\big)^{2} \, \sum_{i=1}^{n} \big(y(i) - \bar{y}\big)^{2} \right)^{1/2}}$$

Correlation coefficient
• Covariance depends on ranges of X and Y
• Standardize by dividing by the standard deviations of X and Y
• Linear correlation coefficient is defined as:

$$\rho(X, Y) = \frac{\sum_{i=1}^{n} \big(x(i) - \bar{x}\big)\big(y(i) - \bar{y}\big)}{\left( \sum_{i=1}^{n} \big(x(i) - \bar{x}\big)^{2} \, \sum_{i=1}^{n} \big(y(i) - \bar{y}\big)^{2} \right)^{1/2}}$$

Data Set on Housing Prices in Boston
(widely used data set in research on regression models)
 1  CRIM     per capita crime rate by town
 2  ZN       proportion of residential land zoned for lots over 25,000 sq. ft.
 3  INDUS    proportion of non-retail business acres per town
 4  NOX      Nitrogen oxide concentration (parts per 10 million)
 5  RM       average number of rooms per dwelling
 6  AGE      proportion of owner-occupied units built prior to 1940
 7  DIS      weighted distances to five Boston employment centres
 8  RAD      index of accessibility to radial highways
 9  TAX      full-value property-tax rate per $10,000
10  PTRATIO  pupil-teacher ratio by town
11  MEDV     Median value of owner-occupied homes in $1000's

Matrix of Pairwise Linear Correlations
Data on characteristics of Boston housing
[Figure: matrix of pairwise correlations on a scale from -1 through 0 to +1. Variables: Crime Rate, Percentage of large residential lots, Industry, Nitrogen oxide, Average # rooms, Proportion of old houses, Distance to employment centers, Highway accessibility, Property tax rate, Student-teacher ratio, Median house value]

Dangers of searching for correlations in high-dimensional data
Simulated 50 random Gaussian/normal data vectors, each with 100 variables
Results in a 50 x 100 data matrix
Below is a histogram of the correlation coefficients for all (100 choose 2) = 4950 pairs of variables
[Figure: histogram of pairwise correlation coefficients]
Even if data are entirely random (no dependence) there is a very high
probability some variables will appear dependent just by chance.

Examples of X-Y plots and linear correlation values
[Figure: X-Y plots with their linear correlation values, grouped into “Linear Dependence” and “Non-Linear Dependence”]
Lack of linear correlation does not imply lack of dependence

[Figure: four scatter plots, DATA SET 1 to DATA SET 4; x-axis: X values, y-axis: Y values]
Anscombe, Francis (1973), Graphs in Statistical Analysis, The American Statistician, pp. 195-199.

Guess the Linear Correlation Values for each Data Set
[Figure: the same four scatter plots, DATA SET 1 to DATA SET 4]

Actual Correlation Values
DATA SET 1: Correlation = 0.82
DATA SET 2: Correlation = 0.82
DATA SET 3: Correlation = 0.82
DATA SET 4: Correlation = 0.82
[Figure: the same four scatter plots, each labeled with its correlation]
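The correlation formula given earlier can be checked directly on these four data sets. The sketch below is not part of the original slides: the function name `pearson` and the variable names are illustrative, and the data values are typed in from the table published in Anscombe (1973), so they are worth checking against the paper before reuse.

```python
import math


def pearson(xs, ys):
    """Linear correlation: sum of cross-deviations scaled by both spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Anscombe's quartet (values as published in Anscombe, 1973).
X123 = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]   # shared by data sets 1-3
X4 = [8, 8, 8, 8, 8, 8, 8, 19, 8, 8, 8]
Y1 = [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]
Y2 = [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]
Y3 = [7.46, 6.77, 12.74, 7.11, 7.81, 8.84, 6.08, 5.39, 8.15, 6.42, 5.73]
Y4 = [6.58, 5.76, 7.71, 8.84, 8.47, 7.04, 5.25, 12.50, 5.56, 7.91, 6.89]

ANSCOMBE = {1: (X123, Y1), 2: (X123, Y2), 3: (X123, Y3), 4: (X4, Y4)}
CORRELATIONS = {k: pearson(xs, ys) for k, (xs, ys) in ANSCOMBE.items()}

for k, r in CORRELATIONS.items():
    print(f"Data set {k}: correlation = {r:.2f}")
```

All four values agree to two decimals even though the scatter plots look nothing alike, which is the point of the example: the coefficient alone cannot distinguish a noisy line, a curve, or a single influential point.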
Summary Statistics for each Data Set
              N    Mean of X   Mean of Y   Intercept   Slope   Correlation
Data Set 1    11   9.0         7.5         3           0.5     0.82
Data Set 2    11   9.0         7.5         3           0.5     0.82
Data Set 3    11   9.0         7.5         3           0.5     0.82
Data Set 4    11   9.0         7.5         3           0.5     0.82
Anscombe, Francis (1973), Graphs in Statistical Analysis, The American Statistician, pp. 195-199.

Conclusions so far?
• Summary statistics are useful… up to a point
• Linear correlation measures can be misleading
• There really is no substitute for plotting/visualizing the data

Scatter Plots
• Plot the value of one variable against the other
• Simple… but can be very informative, can reveal more than summary statistics
• For example, we can…
– See if variables are dependent on each other (beyond linear dependence)
– Detect if outliers are present
– Can color-code to overlay group information (e.g., color points by class label for classification problems)

[Figure: scatter plot from US Zip code data, each point = 1 Zip code; x-axis: MEDIAN PERCAPITA INCOME, y-axis: MEDIAN HOUSEHOLD INCOME; units = dollars]

Constant Variance versus Changing Variance
[Figure: two scatter plots]
– variation in Y does not depend on X
– variation in Y changes with the value of X, e.g., Y = annual tax paid, X = income

Problems with Scatter Plots of Large Data
96,000 bank loan applicants
[Figure: scatter plot]
– appears: later apps older; reality: downward slope (more apps, more variance)
– scatter plot degrades into black smudge ...
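One common way around the “black smudge” is to plot counts per cell of a grid instead of individual points; the grid can then be drawn as a heat map or contoured. The sketch below is not from the slides: `bin_counts_2d` is a hypothetical helper, and the 96,000 points are synthetic Gaussian data standing in for the bank loan data, which is not available here.

```python
import random
from collections import Counter


def bin_counts_2d(xs, ys, nx, ny):
    """Count points per cell of an nx-by-ny grid spanning the data range."""
    x_lo, x_hi = min(xs), max(xs)
    y_lo, y_hi = min(ys), max(ys)

    def index(value, lo, hi, n_bins):
        if hi == lo:                      # degenerate axis: one bin only
            return 0
        # The maximum value would map to n_bins, so clamp it into the last bin.
        return min(int((value - lo) / (hi - lo) * n_bins), n_bins - 1)

    counts = Counter(
        (index(x, x_lo, x_hi, nx), index(y, y_lo, y_hi, ny))
        for x, y in zip(xs, ys)
    )
    # Rows correspond to y bins, columns to x bins.
    return [[counts.get((i, j), 0) for i in range(nx)] for j in range(ny)]


# Synthetic stand-in data (an assumption, not the bank loan data).
rng = random.Random(0)
N_POINTS = 96_000
xs = [rng.gauss(0.0, 1.0) for _ in range(N_POINTS)]
ys = [0.5 * x + rng.gauss(0.0, 1.0) for x in xs]

GRID = bin_counts_2d(xs, ys, nx=20, ny=20)
print("largest cell count:", max(max(row) for row in GRID))
```

With 400 cells the display cost no longer depends on the number of points, and the choice of grid size plays the same role as the bin count in a 1-dimensional histogram.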

Contour Plots (based on local density) can help
(recall: same 96,000 bank loan apps as before)
[Figure: contour plot; density shapes annotated as unimodal, skewed, skewed]
– shows variance(y) with x is indeed due to horizontal skew in density

Scatter-Plot Matrices
Pima Indians Diabetes data
[Figure: scatter-plot matrix]

Another Scatter-Plot Matrix
[Figure: scatter-plot matrix]
For interactive visualization the concept of “linked plots” is generally useful, i.e., clicking on 1 or more points in 1 window and having these same points highlighted in other windows

Using Color to Show Group Information in Scatter Plots
Iris classification data set, 3 classes
Figure from www.originlab.com

Another Example with Grouping by Color
Figure from hci.stanford.edu

Outlier Detection
• Definition of an outlier?
– No precise definition
– Generally… “A data point that is significantly different to the rest of the data”
– But how do we define “significantly different”? (many answers to this…)
– Typically assumed to mean that the point was measured in error, or is not a true measurement in some sense
[Figure: two panels, “Outliers in 1 dimension” and “Outlier in 2 dimensions”; axes: X VALUES, Y VALUES]

Example 1: The Effect of Outliers on Regression
[Figure: two panels, “Least Squares Fit with the Outlier” and “Least Squares Fit without the Outlier”; x-axis: X values, y-axis: Y values]

Example 2: Least Square Fitting is Sensitive to Outliers
[Figure: least squares fit with one outlying point, and a plot of the squared-error cost]
– 16² cost for this one datum
– Heavy penalty for large errors
Slide courtesy of Alex Ihler

More Robust Cost Functions for Training Regression Models
[Equations not recovered from the slide: the cost labeled (MSE), the cost labeled (MAE), and “Something else entirely, e.g.,” a third cost shown as the blue line]
Slide courtesy of Alex Ihler

L1 is more Robust to Outliers than L2
[Figure: fitted lines labeled “L2, original data”, “L1, original data”, “L2, outlier data”, “L1, outlier data”]
Slide courtesy of Alex Ihler

Detection of Outliers in Multiple Dimensions
[Figure: scatter plot; x-axis: X VALUES, y-axis: Y VALUES]
• Detecting “multi-dimensional outliers” is generally difficult
• In the example above, the blue point will not look like an outlier if we were to plot 1-dimensional histograms of Y or X
– it only stands out in the 2d plot
• Now consider the same situation but in 3 or
more dimensions

Some Advice on Outliers
• Use visualization (e.g., in 1d, 2d) to spot obvious outliers
• Use domain knowledge and known constraints
– E.g., Age in years should be between 0 and 120
• Use the model itself to help detect outliers
– E.g., in regression, data points with errors much larger than the others may be outliers
• Use robust techniques that are not overly sensitive to outliers
– E.g., median is more robust than the mean, L1 is more robust than L2, etc
• Automated outlier detection algorithms? …not always useful
– E.g., fit probability density model to N-1 points and determine how likely the Nth point is
– May not work well in high dimensions and/or if there are multiple outliers
• In general: for large data sets you can probably assume that outliers are present and proceed with caution…

Multivariate Visualization
• Multivariate -> multiple variables
• 2 variables: scatter plots, etc
• 3 variables:
– 3-dimensional plots
– Look impressive, but often not that useful
– Can be cognitively challenging to interpret
– Alternatives: overlay color-coding (e.g., categorical data) on 2d scatter plot
• 4 variables:
– 3d with color or time
– Can be effective in certain situations, but tricky
• Higher dimensions
– Generally difficult
– Scatter plots, icon plots, parallel coordinates: all have weaknesses
– Alternative: “map” data to lower dimensions, e.g., PCA or multidimensional scaling
– Main problem: high-dimensional structure may not be apparent in low-dimensional views

Using Icons to Encode Information, e.g., Star Plots
Each star represents a single observation. Star plots are used to examine the relative values for a single data point.
The star plot consists of a sequence of equi-angular spokes, called radii, with each spoke representing one of the variables.
Useful for small data sets with up to 10 or so variables
[Figure: star plot with spokes numbered as follows]
1 Price
2 Mileage (MPG)
3 1978 Repair Record (1 = Worst, 5 = Best)
4 1977 Repair Record (1 = Worst, 5 = Best)
5 Headroom
6 Rear Seat Room
7 Trunk Space
8 Weight
9 Length
Limitations?
– Small data sets, small dimensions
– Ordering of variables may affect perception

Another Example of Icon Plots
Figure from statsoft.com

Combining Scatter Plots and Icon Plots
Figure from statsoft.com

Chernoff Faces
• Variable values associated with facial characteristic parameters, e.g., head eccentricity, eye eccentricity, pupil size, eyebrow slant, nose size, mouth shape, eye spacing, eye size, mouth length and degree of mouth opening
• Limitations?
– Only up to 10 or so dimensions
– Overemphasizes certain variables because of our perceptual biases

Parallel Coordinates Method
Epileptic Seizure Data
[Figure: parallel coordinates plot; one line is labeled “1 (of n) cases”]
Interactive “brushing” is useful for seeing distinctions (this case is a “brushed” one, with a darker line, to stand out from the n-1 other cases)

More elaborate parallel coordinates example (from E. Wegman, 1999).
12,000 bank customers with 8 variables
Additional “dependent” variable is profit (green for positive, red for negative)

Interactive “Grand Tour” Techniques
• “Grand Tour” idea
– Cycle continuously through multiple projections of the data
– Cycles through all possible projections (depending on time constraints)
– Projections can be 1d, 2d, or 3d typically (often 2d)
– Can link with scatter plot matrices (see following example)
– Asimov (1985)
• Example on following 2 slides
– 7 dimensional physics data, color-coded by group, shown with
(a) Standard scatter matrix
(b) 2 static snapshots of grand tour

Visualization of an email network using 2-dimensional graph drawing or “embedding”
Data from 500 researchers at Hewlett-Packard over approximately 1 year.
Various structural elements of the network are apparent

Exploratory Data Analysis
Visualizing Time-Series Data

Time-Series Data: Example 1
Historical data on millions of miles flown by UK airline passengers
…note a number of different systematic effects
[Figure: time series with the following annotations]
– Summer “double peaks” (favor early or late)
– Summer peaks
– steady growth trend
– New Year bumps

Time-Series Data: Example 2
Data from study on weight measurements over time of children in Scotland
Experimental Study: More milk -> better health?
20,000 children: 5k raw milk, 5k pasteurized milk, 10k control (no supplement)
[Figure: mean weight vs mean age for 10k control group; x-axis: Age, y-axis: Weight]
Would expect smooth weight growth plot.
Plot shows an unexpected pattern (steps), not apparent from raw data table.
Why do the children appear to grow in spurts?

Time-Series Data: Example 3 (Google Trends)
Search Query = whiskey

Time-Series Data: Example 4 (Google Trends)
Search Query = NSA

Spatial Distribution of the Same Data (Google Trends)
Search Query = whiskey

Summary on Exploration/Visualization
• Always useful and worthwhile to visualize data
– human visual system is excellent at pattern recognition
– gives us a general idea of how data is distributed, e.g., extreme skew
– detect “obvious outliers” and errors in the data
– gain a general understanding of low-dimensional properties
• Many different visualization techniques
• Limitations
– generally only useful up to 3 or 4 dimensions
– massive data: only so many pixels on a screen - but subsampling is useful
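As a small illustration of the subsampling remark, the sketch below draws a uniform random subset of points before plotting. It is not from the slides: the helper name `subsample`, the fixed seed, and the synthetic data are assumptions made for the example.

```python
import random


def subsample(points, k, seed=0):
    """Return k points chosen uniformly at random without replacement."""
    if k >= len(points):
        return list(points)               # nothing to thin out
    rng = random.Random(seed)             # fixed seed: repeatable plots
    return rng.sample(points, k)


# Synthetic (x, y) pairs standing in for a massive data set.
data_rng = random.Random(1)
POINTS = [(data_rng.random(), data_rng.random()) for _ in range(100_000)]

SAMPLE = subsample(POINTS, 2_000)
print(len(POINTS), "points reduced to", len(SAMPLE))
```

A uniform subsample keeps the overall shape of the distribution but can drop rare points, so it complements an explicit check for outliers on the full data.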